Organizations deal with data collected from multiple sources, which increases the complexity of managing and processing it. Oracle offers a suite of tools that helps you store and manage the data, and Apache Spark enables you to handle large-scale data processing tasks.

The Oracle Spark connector enables data transfer between Apache Spark and Oracle. This integration allows you to leverage Spark’s data processing capabilities within the Oracle environment to analyze data thoroughly, facilitating informed decision-making.

This article will explore the Oracle Spark connector, its key features, some practical use cases, and more.

Table of Contents

Overview of Oracle Database

Oracle is a relational database management system. It is a popular enterprise application. It is known as Oracle database, OracleDB, and Oracle. It provides a logical and physical structure to your data. Oracle offers recovery in case of failure, portability on all platforms, high security, etc. Four different editions of Oracle are as follows:

- Enterprise Edition: It is the most robust and secure edition. It offers high security, enhanced performance, etc.

- Standard Edition: It provides all the basic functionality to its users.

- Express Edition (XE): It is a lightweight and free edition for Windows and Linux.

- Oracle Lite: It is for mobile devices.

Hevo simplifies Oracle data replication with its no-code platform, enabling real-time data flow to your target destinations. Avoid the complexity of manual replication and benefit from automated pipelines.

- Supports Oracle as a source along with 150+ integrations with seamless replication

- Pre and post-load transformations for clean, ready-to-use data

- Real-time replication to destinations like BigQuery, Redshift, and Snowflake

Rated 4.3 on G2, Hevo offers a simple solution for your data needs. Discover how companies like Postman have benefited from Hevo. For more details, try a personalized demo for free or explore our Documentation.

Get Started with Hevo for FreeUnderstanding Oracle Spark Connector

The Oracle Spark connector or Spark Oracle Connector is also known as the Oracle datasource for Apache Spark. It is an extension of the JDBC datasource provided by Spark. The connector simplifies the connection between Oracle database instances and Apache Spark.

The connector facilitates seamless data interchange between the two platforms and allows you to perform complex data processing on the Oracle data using Spark’s computing framework. It helps you streamline your data workflows, reduce movement overhead costs, and perform data processing tasks by optimizing Apache Spark’s functionalities over Oracle data.

Key Features of Oracle Spark Connector

The Oracle Spark Connector offers several benefits. Here are some of them:

- Scalability: You can distribute the data processing task across Spark nodes to handle extensive data and computational requirements.

- Advanced Querying: Oracle Spark connector allows you to execute complex queries for reading and writing, joining, and aggregating operations on Oracle data in Apache Spark.

- Security: Oracle’s robust security measures, including authentication, encryption, and access controls, secure the data in transit.

How to Deploy Spark on Oracle

Integrating Oracle databases with Apache Spark significantly benefits your organization, facilitating faster data processing. To deploy Spark on Oracle, you need to follow certain steps to set up a connector.

Prerequisites

- You need a Spark 3.1 or higher version to deploy Spark on Oracle.

- Spark Oracle can run on any type of cluster, including embedded, thriftserver, and standalone.

- Install Apache Spark on your system.

- You must have access to the Oracle database. If you don’t, you need to set up the Oracle Free Tier ADW instance.

- You need to build a Spark Oracle package.

- You must have Oracle database user permissions to access plan_table and DBMS_PARALLEL_EXECUTE.

- You need to store the Oracle wallet on each Spark node.

- If you want to use an external table, ensure an OCI credential is set up for the database.

Setting up the Oracle Free Tier ADW Instance

Before setting up an Oracle instance, you need to create an Oracle Free Tier Account and instantiate it. Follow the steps below for the connector setup:

- Download and configure Oracle Instant Tools and Oracle Instant Client. To do this, use sqlplus and imdp executables.

- Download and configure Oracle wallet for your instance:

- Download and unzip the Oracle wallet.

- You need to change sqlnet.ora file to a downloaded folder and try sqlplus.

- Connect the instance using sqlplus instant client and create a user to run the demo.

- Upload the cloud credentials to the instance using dbms_cloud.create_credential procedure.

- Load tpcds data and tables in your instance.

- A dump of tpcds scale 1 is available in the OCI location, where you can copy data to your own bucket.

- Lastly, import data by running the following command:

./impdp admin/<your_passwd>@<your_tns_address> directory=data_pump_dir credential=SPARK_ORA_CRED dumpfile=<your_dump_file_location>Deploy the Spark Oracle

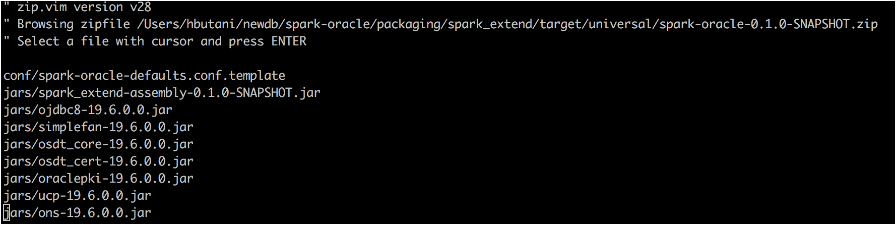

- You need to download the Spark Oracle zip file, which includes spark-oracle and oracle jdbc jar files.

- You can extract the Oracle zip file in the Spark deployment directory.

Configure Spark Oracle

To enable your Spark Oracle integration and pushdown functionality, you need to add the necessary configurations to your spark-defaults.conf file.

To enable your Spark Oracle integration and pushdown functionality, you must add the configurations to your spark-defaults.conf file.

How Can You Use Oracle Spark Connector with Data Flow?

Data Flow is a fully managed service OCI provides that allows you to run Apache Spark applications. It has a user-friendly interface and will enable you to integrate your applications and workflows with ease. There are two ways in which you can use Oracle Spark connector in data flow:

1. Enabling the Oracle Spark connector in the advanced option

When creating, editing, or running the application in the data flow, you can include a specific key to enable Oracle functionality in Spark. This lets you use the data source within your application.

2. Define the Oracle Spark connector format in DataFrame

You can specify how Apache Spark should read, write, and process Oracle data by importing all the necessary libraries and creating a Spark session.

Let’s look at some examples of using Oracle Spark connector with data flow using different languages.

Example 1: Using Java to Load Data from Oracle Autonomous Database at Root Compartment

A root compartment is an organizational unit that provides resource isolation, management, and access control capabilities.

You can use Java to integrate your data from an Oracle autonomous database within a root compartment into a Spark DataFrame for analysis and generating actionable insights.

For instance, you can load your sales data into a Spark Dataframe to calculate total sales for a month or identify top-selling products.

Note: You don’t have to provide a driver class name and JDBC URL.

Dataset<Row> oracleDF = spark.read()

.format("oracle")

.option("adbId","ocid1.autonomousdatabase.<REALM>.[REGION][.FUTURE USE].<UNIQUE ID>")

.option("dbtable", "schema.tablename")

.option("user", "username")

.option("password", "password")

.load();In the above code:

- .format(‘oracle’) is a method that specifies the source data format.

- spark.read() is a method to load data from the Oracle database.

- dbtable is the table from where the data will be loaded.

- schema.tablename specifies the schema of your database table.

Example 2: Using Java to Load Data from an Oracle Database With a Wallet from an Object Storage

Oracle Wallet is a secure container for storing sensitive data such as credentials, keys, and certificates for authentication. You can use Java code to connect Oracle Database with a wallet from object storage and load data to Spark Dataframe.

Once the data is loaded to Spark, you can perform various analysis tasks, such as generating finance or sales reports, calculating employee retention rates, and more.

Dataset<Row> oracleDF2 = spark.read()

.format("oracle")

.option("walletUri","oci://<bucket>@<namespace>/Wallet_DATABASE.zip")

.option("connectionId","database_medium")

.option("dbtable", "schema.tablename")

.option("user", "username")

.option("password", "password")

.load()In the above code:

- <bucket> specifies the name of your bucket where the object is stored.

- Wallet_DATABASE.zip is the name of the wallet file.

- database_medium is a part of the configuration option used to define the type of database connection profile.

Example 3: Using Python to Load Data from an Oracle Autonomous Database and Override the Net Service Name

The Net Service Name in Oracle specifies the network address for a particular database instance. You can use Python to load data in Pyspark DataFrame by overriding the Net Service Name to ensure you have access to the right database.

After integrating the data into Spark, you can analyze your business data to generate data-driven insights and make informed decisions.

Note: Using a map for configuration can be mutable or immutable.

properties = {"adbId":"ocid1.autonomousdatabase.<REALM>.[REGION][.FUTURE USE].<UNIQUE ID>","dbtable":"schema.tablename","connectionId":"database_high","user":"username","password":"password"}

oracle_df1 = spark.read \

.format("oracle") \

.options(**properties) \

.load()In the above code:

- abID is the Oracle database ID identifying the specific instance you want to connect Spark with.

- Oracle df1 is the DataFrame, which contains data loaded from the Oracle database table.

Example 4: Using Scala to Load Data from an Oracle Database With Wallet from OCI Object Storage

Utilizing an Oracle Wallet from OCI object storage ensures a secure connection. You can use Scala to load data to Spark DataFrame and perform data processing tasks such as filtering, calculating, and aggregating Oracle data. For instance, performing analysis on customer data to make segments according to region and age.

Note: You don’t need a username or password with auto-login enabled.

val oracleDF2 = spark.read

.format("oracle")

.option("walletUri","oci://<bucket>@<namespace>/Wallet_DATABASE.zip")

.option("connectionId","database_medium")

.option("dbtable", "schema.tablename")

.load()In the above code:

- walletUri is the location specifying where the Oracle file is stored.

- oracleDF2 is the Spark DataFrame, which loads all data from the Oracle database.

Challenges Associated with Oracle Spark Connector

Managing data integration with Oracle Spark Connector can be difficult sometimes due to the following reasons:

- Complex Configuration: Setting up Oracle Spark Connector can be complex due to different integration structures and technical expertise requirements. You need to understand configurations, drivers, and connection settings to complete the setup.

- Data Transformation Needs: The raw data from Oracle databases must be transformed into a standardized format to align with the target system. This includes tasks such as cleaning, schema mapping, and formatting.

How Hevo Can Streamline Data Integration for Oracle Spark Connector

Hevo is a real-time ELT tool that streamlines data integration for Oracle Spark Connector through its no-code, flexible, and automated data pipeline. This pipeline requires minimal time to set up compared to Oracle Spark Connector, which often has intricate configuration. In addition to rapid configuration, Hevo also provides built-in data transformation features that help you standardize data before it reaches Apache Spark.

Additionally, you can use Hevo’s Pipeline Jobs view to understand the movement of events from data ingestion to loading. This feature helps you monitor and manage the data flow between Oracle and Spark.

You can also read about:

- Set up CDC with Oracle and Debezium

- Oracle Change Tracking: what it is & how to enable

- Oracle data load: tools & best practices

Conclusion

- Oracle Spark Connector is a robust tool that combines Oracle’s data management functionality with Apache Spark’s data processing.

- It facilitates seamless data exchange and simplifies workflows. However, setting up Oracle Spark Connector can be long and tedious because of its complex configuration attributes.

- Want to master Oracle data load? Explore our guide to learn effective techniques for importing data into Oracle databases.

- Hevo addresses these challenges by providing a user-friendly interface with a no-code data pipeline, which is significantly faster and easier to set up.

- Its built-in transformation features help you prepare your data for analysis upon integration, enhancing business operational performance.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Frequently Asked Questions

Q1. How to connect Oracle from Spark?

You can connect Oracle to Spark using the Oracle Spark connector or data integration tools such as Hevo, which is a more efficient way to migrate data between the two platforms.

Q2. Can you use the ‘walletUri’ option for Oracle Autonomous Database in serverless and dedicated infrastructure modes?

Yes, the ‘walletUri’ option is compatible with the Oracle Autonomous database’s serverless and dedicated infrastructure modes.

Q3. What properties are available for the Oracle database besides Spark JDBC datasource?

The following properties are available for the Oracle database besides the Spark JDBC datasource:

– walletUri

– connectionId

– abId