Google has long pioneered large, scalable infrastructure to support its search engine and other products. Its vast network of servers has enabled it to store and manage immense volumes of data. As cloud computing gained popularity, Google expanded its operations and launched Google Cloud Platform (GCP).

Google Cloud Storage (GCS) allows users to store their data in Storage Buckets. These GCP Storage Buckets hold all kinds of data, such as files, documents, images, and videos. Over time, these GCP Storage Buckets have evolved according to business needs. They now serve as a unified option for data storage, backup, content delivery, and application hosting.

In this article, you will learn how to list GCP buckets, grant GCP bucket permissions, and retrieve a list of buckets from a Google project. Let’s first cover the basics of the GCP Storage Buckets list.

Understanding the GCP Storage Bucket List

To understand a bucket list, you must first know about Storage Buckets. Storage Buckets are containers in Google Cloud that hold your data. Each bucket is an independent entity with a flat structure, which means that buckets cannot be nested: you cannot create a bucket inside another bucket.

The GCP bucket list is a directory that helps you manage the Storage Buckets inside your organization’s Google Cloud environment. It provides a list of all existing buckets in a project and allows you to navigate through different Google Projects and centralize storage resources.

Hevo automates the entire data loading process, ensuring a smooth flow from source to destination without manual intervention.

- Seamless Data Ingestion: Automatically extract data from 150+ sources, including databases, cloud storage, and SaaS apps.

- Real-time Sync: Keep your data fresh with continuous loading to destinations like Snowflake, BigQuery, and Redshift.

- No-code Setup: Set up data pipelines easily with Hevo’s intuitive, no-code interface.

- Automatic Schema Mapping: Handles schema changes dynamically without disruptions.

Efficient, automated, and reliable—Hevo simplifies data loading every step of the way.

Get Started with Hevo for Free

How do you List a Bucket in a Google Project?

To list the buckets in a Google Project, you can use the Google Cloud console, the command line, or the REST API. This article walks through the console method first and then shows the equivalent JSON API request. Let’s look at the steps for listing a bucket in a Google Project.

Step 1: Grant Permissions to List a Bucket Using Google Console

Access to Google resources requires GCP Storage Bucket permissions, so ask your administrator for access to the project whose buckets you want to list. Listing buckets requires the storage.buckets.list permission, which is included in roles such as Storage Admin (roles/storage.admin) and the basic Viewer (roles/viewer) role.

Let’s look at the steps to grant one of these roles through IAM:

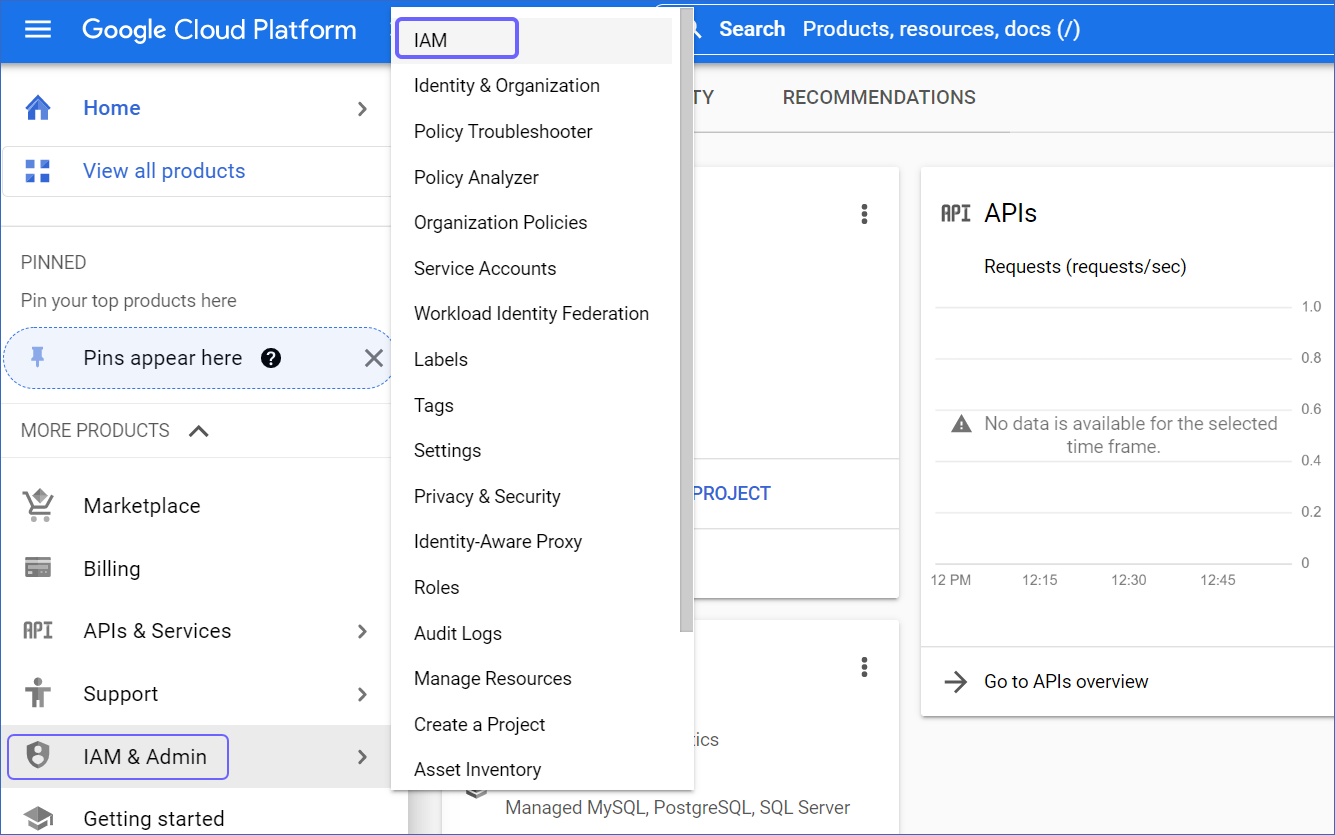

- Open your Google Cloud Console and select the IAM tab on the left.

- You can select a folder, project, or organization from the list or search for a particular folder using the search bar.

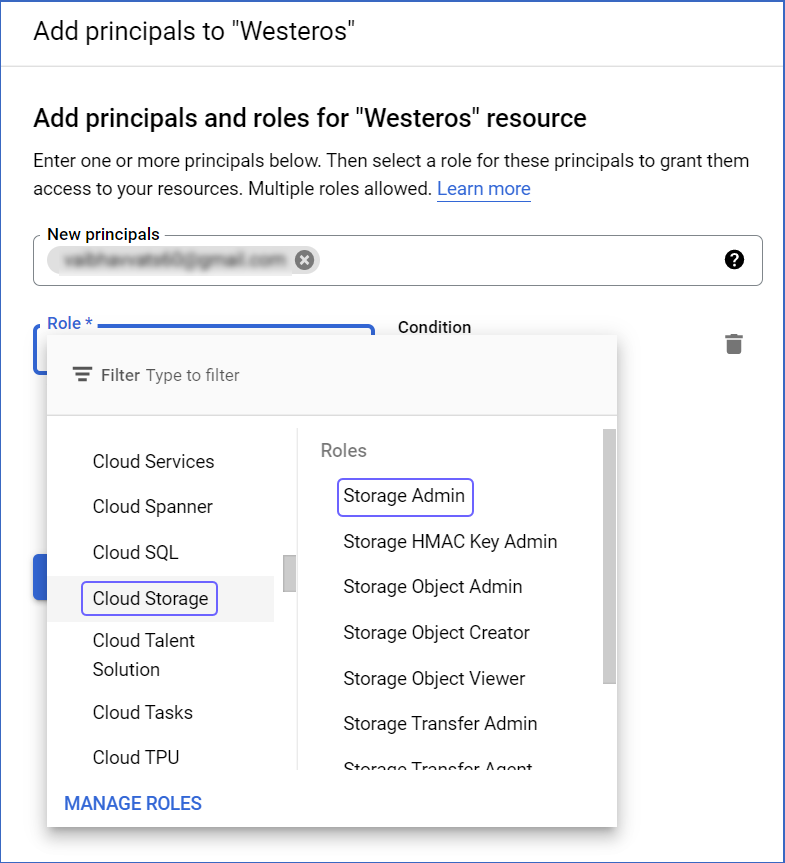

- To grant a user a role, select + Grant Access and enter the user’s email address and name.

- Now, select a role for your user from the drop-down list. In this case, the role will be Storage Admin or Viewer. You can also add a condition to the role.

- Click on the Save Button.
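Behind the console flow, granting access amounts to adding a principal to a role binding in the project's IAM policy. The following is a minimal Python sketch of that idea on a plain dictionary shaped like an IAM policy. The helper name `add_binding`, the example email addresses, and the starting policy are illustrative assumptions, and the sketch does not call any Google API.

```python
import copy


def add_binding(policy: dict, role: str, member: str) -> dict:
    """Return a copy of an IAM-policy-shaped dict with `member` added to `role`."""
    updated = copy.deepcopy(policy)
    bindings = updated.setdefault("bindings", [])
    for binding in bindings:
        if binding.get("role") == role:
            # Role already bound: add the member only if it is not there yet.
            members = binding.setdefault("members", [])
            if member not in members:
                members.append(member)
            return updated
    # No binding for this role yet: create one.
    bindings.append({"role": role, "members": [member]})
    return updated


# Hypothetical starting policy and principal, for illustration only.
policy = {"bindings": [{"role": "roles/owner", "members": ["user:admin@example.com"]}]}
new_policy = add_binding(policy, "roles/viewer", "user:analyst@example.com")
print(new_policy["bindings"])
```

The function returns a new dictionary rather than mutating its input, so the original policy stays available for comparison, and calling it twice with the same arguments does not duplicate the member.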

Step 2: List a Bucket in Google Project

Before you start, you must have permission to list GCP buckets. Let’s look at how you can list the buckets for a particular project.

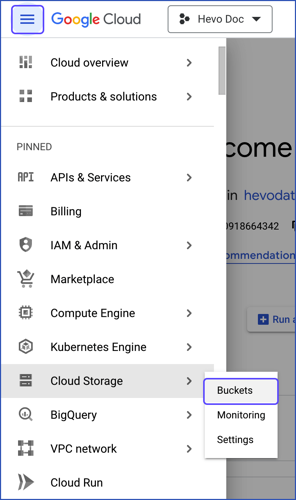

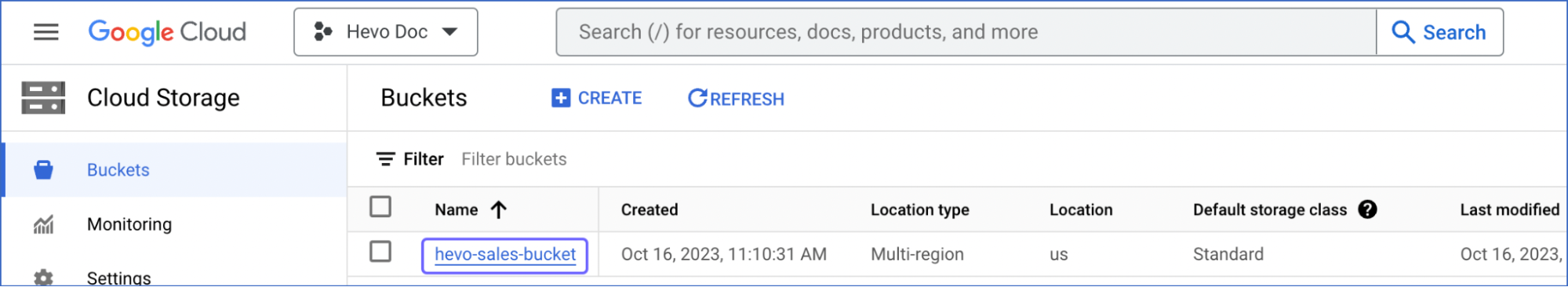

- Go to your Google Cloud Console and open the Cloud Storage Buckets page.

- Click on Go to Buckets, where you can find all the buckets for the project currently selected in your Google Cloud Console.

- Use the filtering option to select the bucket you want to list.

Step 3: Retrieve the List of Buckets

To retrieve the bucket list programmatically, send an authenticated HTTPS request to the JSON API endpoint. In addition to the standard parameters, the request takes its own query parameters, including the required project parameter that identifies the project whose buckets you want to list. For a better understanding, refer to Parameters.

Send the request below to retrieve a list of buckets for a given Google Project.

    GET https://storage.googleapis.com/storage/v1/b

Here’s the result of the above query:

    {
      "kind": "storage#buckets",
      "nextPageToken": string,
      "items": [
        buckets Resource
      ]
    }

In the above result, kind is the type of resource returned (always storage#buckets), nextPageToken is the token to send as pageToken in a follow-up request to get the next page of results, and items is the list of bucket resources.
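The request and paging behavior described above can be sketched in Python using only the standard library. This is a minimal sketch, not an official client: the function names are hypothetical, the `project`, `pageToken`, and `maxResults` query parameters and the `items` and `nextPageToken` response fields come from the JSON API, and the access token is assumed to be a valid OAuth 2.0 token that you obtain separately.

```python
import json
import urllib.request
from typing import Callable, Iterator, Optional
from urllib.parse import urlencode

BASE_URL = "https://storage.googleapis.com/storage/v1/b"


def build_list_url(project: str, page_token: Optional[str] = None,
                   max_results: Optional[int] = None) -> str:
    """Build the bucket-list URL with its query parameters."""
    params = {"project": project}
    if page_token:
        params["pageToken"] = page_token
    if max_results is not None:
        params["maxResults"] = str(max_results)
    return f"{BASE_URL}?{urlencode(params)}"


def fetch_page(url: str, access_token: str) -> dict:
    """Send one authenticated GET request and decode the JSON body."""
    request = urllib.request.Request(
        url, headers={"Authorization": f"Bearer {access_token}"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def iter_bucket_names(project: str,
                      get_page: Callable[[str], dict]) -> Iterator[str]:
    """Yield bucket names, following nextPageToken until it is absent."""
    page_token = None
    while True:
        page = get_page(build_list_url(project, page_token))
        for bucket in page.get("items", []):
            yield bucket["name"]
        page_token = page.get("nextPageToken")
        if not page_token:
            break
```

With a token in hand (for example, one printed by `gcloud auth print-access-token`), a call might look like `list(iter_bucket_names("my-project", lambda url: fetch_page(url, token)))`, where `my-project` and `token` are placeholders. Passing the page fetcher in as a callable keeps the paging loop independent of the network, so it can be exercised with canned responses.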

How to Return Metadata for a Bucket

Metadata can enhance object management and access control in GCP Storage Buckets. Metadata includes the attributes of objects stored inside your buckets, such as name, size, creation time, last modified time, etc. This additional information allows users to manage their data effectively.
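To make this concrete, here is a small Python sketch that summarizes the metadata of a single bucket. The field names (`name`, `location`, `storageClass`, `timeCreated`, `updated`) follow the JSON API bucket resource, but the sample values are made up for illustration and `summarize_bucket` is a hypothetical helper.

```python
from datetime import datetime


def parse_rfc3339(value: str) -> datetime:
    """Parse an RFC 3339 timestamp such as 2024-01-15T10:30:00.000Z."""
    # Older Python versions do not accept a trailing "Z" in fromisoformat.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def summarize_bucket(bucket: dict) -> str:
    """Build a one-line summary from a bucket-resource-shaped dict."""
    created = parse_rfc3339(bucket["timeCreated"])
    updated = parse_rfc3339(bucket["updated"])
    return (
        f"{bucket['name']} ({bucket['location']}, {bucket['storageClass']}): "
        f"created {created:%Y-%m-%d}, metadata last updated {updated:%Y-%m-%d}"
    )


# Made-up sample values; only the field names mirror the bucket resource.
sample_bucket = {
    "kind": "storage#bucket",
    "name": "example-bucket",
    "location": "US",
    "storageClass": "STANDARD",
    "timeCreated": "2024-01-15T10:30:00.000Z",
    "updated": "2024-03-02T08:00:00.000Z",
}
summary = summarize_bucket(sample_bucket)
print(summary)
```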

If you have the storage.buckets.get permission, you can retrieve a bucket’s metadata with a request like the one below, which follows the XML API format. Replace the placeholders with your own values and credentials:

    GET /?METADATA_QUERY_PARAMETER HTTP/1.1
    Host: BUCKET_NAME.storage.googleapis.com
    Date: DATE_AND_TIME_OF_REQUEST
    Content-Length: 0
    Authorization: AUTHENTICATION_STRING

Streamlining Data Processing Workflow Using Cloud-based Integration in GCP Storage Bucket

The GCP Storage Buckets can serve as both source and destination while performing the data integration process. You can either upload data files, stream data into your GCP bucket, or process and migrate the data.

Let’s look at how you can integrate your data inside GCP Storage Buckets in different ways:

Integration Using Google Services

Google Cloud Services:

The GCP Storage Buckets seamlessly integrate with different cloud services such as BigQuery, Dataflow, or AI platforms. This integration allows you to analyze, process, and query the data inside Storage Buckets using robust Google Cloud tools.

Google Cloud Connector:

You can use the Google Cloud Storage connector to connect your Google Storage Buckets to external applications and perform data transformation. The connector is a component of the Google Cloud Console that enables you to integrate several applications within the Google Cloud Platform.

You must know how to set up and configure the connection between applications using Google Cloud Storage Connector. To learn how to connect your applications to GCS buckets using a connector, refer to this Google documentation: Cloud Storage.

Integration Using ETL and ELT Platforms

ETL Platform

In ETL processes, the data is transformed before being loaded into the target destinations. The transformation process includes methods like data cleansing and enrichment. You can access the GCP bucket to retrieve, store, and stage raw data. The staging process ensures only validated data moves through the ETL pipeline to enhance data quality.
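The cleanse-then-load ordering described above can be sketched in a few lines of Python. The record fields (`id`, `email`), the validation rule, and the in-memory list standing in for a staging area in a bucket are all assumptions chosen for illustration, not part of any specific ETL tool.

```python
from typing import Iterable


def cleanse(record: dict) -> dict:
    """Normalize a raw record: trim whitespace and lowercase the email."""
    return {
        "id": record.get("id"),
        "email": str(record.get("email", "")).strip().lower(),
    }


def is_valid(record: dict) -> bool:
    """Accept only records that have an id and a plausible email address."""
    return record["id"] is not None and "@" in record["email"]


def stage(raw_records: Iterable[dict]) -> list:
    """Transform first, then keep only validated records for loading."""
    cleansed = (cleanse(r) for r in raw_records)
    return [r for r in cleansed if is_valid(r)]


# Hypothetical raw input, as it might be read from files in a bucket.
raw = [
    {"id": 1, "email": "  Ana@Example.com "},
    {"id": None, "email": "no-id@example.com"},
    {"id": 3, "email": "not-an-email"},
]
staged = stage(raw)
print(staged)
```

Only the first record survives staging here: the second has no id and the third fails the email check, so neither would be loaded into the target destination.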

ELT Platform

During the ELT process, the data is first loaded into the target system and then transformed. Through automated data pipelines, the ELT platform can directly connect your GCP Storage Bucket to a source or destination. One ELT platform, Hevo, allows you to set up data integration pipelines for your GCS buckets without writing code. Let’s see how Hevo can streamline your data integration process and workflows through its robust built-in features.

Performing Data Integration for GCS Buckets Using Hevo

Hevo is a no-code, real-time ELT platform that offers you flexible and automated data pipelines. With 150+ data sources available, you can easily transfer files into your GCP Storage Bucket.

Here are some benefits of using Hevo:

- Data Transformation: With Hevo’s drag-drop and Python-based data transformation features, your data is readily available for analysis and reporting.

- Automated Schema Mapping: Hevo’s built-in schema functionality automatically reads your source data and replicates its structure to your destination.

- Incremental Data Loading: Hevo lets you load your modified data sets from source to destination in real-time.

You can refer to the Hevo blog to learn how Hevo enables smooth data integration. Here’s an example: Google BigQuery to BigQuery.

Benefits of GCP Storage Buckets List

The GCP Storage Buckets list provides numerous benefits. Let’s look at some of them:

- Centralize Data Storage: The GCP Storage Buckets list allows you to read and manage all the Storage Buckets on your Google Cloud Platform project in a single location. This provides a centralized overview of all the buckets used on your cloud platform.

- Access Control: The GCP Storage Buckets list lets you control each bucket’s access and permissions. Granting limited access to authorized users helps maintain the data’s credibility and integrity.

- Monitoring: The storage list enables you to monitor storage usage, track activities, optimize storage resources, and decide on storage planning. It also helps you maintain storage costs by identifying the buckets that are unused or underutilized.

Key takeaways

Today, every business generates a large amount of data. However, storing and managing all this data without system crashes or overloading is challenging. Here’s where the Google Cloud Storage Bucket comes in. These Storage Buckets can handle petabytes of data without the stress of managing infrastructure.

You can also integrate your data into and out of Google Cloud Storage using a Google connector, APIs, or third-party platforms like Hevo. Hevo’s pre-built connectors and automated data pipelines simplify data integration.

Want to take Hevo for a spin? Try Hevo’s 14-day free trial and experience the feature-rich Hevo suite firsthand.

Share your experience with the GCP Storage Buckets List in the comments section below!

FAQs (Frequently Asked Questions)

1. How do you give read-only storage.buckets.list access to a GCP service account?

To provide read-only access to a GCP service account, you can create a custom role that contains only the permissions you need, such as storage.buckets.list, and grant that role to the service account. To create the custom role, go to the Roles tab, and then assign the role to the service account on the IAM page. You can also use the same Roles tab on your Cloud console to search for the permissions and roles you want to give your users.

2. What are storage buckets in GCP?

Storage buckets in GCP (Google Cloud Platform) are containers for storing data. They are part of Google Cloud Storage and can hold objects (files) like images, videos, and backups. Buckets allow for scalable, secure, and durable storage.

3. Does GCP have S3 buckets?

GCP does not have S3 buckets directly, as S3 is an Amazon Web Services (AWS) product. However, GCP offers a similar service called Google Cloud Storage buckets for object storage, which functions similarly to AWS S3.