Unlock the full potential of your Webhooks data by integrating it seamlessly with Databricks. With Hevo’s automated pipeline, you can get data flowing with minimal effort.

Integrating webhooks with Databricks might sound complex, but it doesn’t have to be. In this guide, you will learn how to connect the two and start unlocking valuable insights effortlessly.

Here’s what you’ll learn from this article:

- What are Webhooks?

- What is Databricks?

- How to Connect Webhooks to Databricks

- What Can You Achieve by Migrating Data from Webhooks to Databricks?


Overview of Webhooks

Webhooks are API-like tools or HTTP callbacks that deliver information across connected applications in response to specific events triggered in an application.

They are extensively utilized in modern application networks for sending or receiving data in repeated patterns or cycles through Webhooks integration.

Overview of Databricks

Databricks is a unified analytics platform that is ideal for big data processing. By loading the data from Webhooks to Databricks, you can unlock the potential for advanced analytics and improved data-driven decision-making.

Method 1: Move Data from Webhooks to Databricks using Custom Scripts

Manually extract the data and load it into Databricks, which requires extensive coding and maintenance. This method offers flexibility but demands significant development resources and ongoing management.

Method 2: Use a No-Code Tool to Connect Webhooks to Databricks

Hevo Data, a no-code ETL tool, facilitates effortless Webhooks to Databricks migration by automating the process. It uses pre-built connectors for extracting data from various sources, transforms it, and loads it into the destination.

Method 1: Move Data from Webhooks to Databricks using Custom Scripts

Webhooks to Databricks migration through custom scripts involves the following steps:

Step 1: Extract Data from your Application Using Webhook

- Create a Webhook for your application and identify the endpoint URL to send data.

- Based on the events, the webhook will transmit data in JSON format.

- Use a Python script to receive the data sent to the webhook endpoint URL and store it on your local system.

Run the python -m pip install Flask command in your terminal to install Flask. Then, create a .py file, such as main.py, and add the following code to the file:

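    from flask import Flask, request, Response
    import json

    app = Flask(__name__)

    # Listen for POST requests sent to the /webhook path
    @app.route('/webhook', methods=['POST'])
    def respond():
        # Parse the JSON body of the incoming request
        data = request.json
        # Write the payload to a local file, overwriting any previous contents
        file = open("filename.json", "w")
        json.dump(data, file)
        file.close()
        return Response(status=200)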

This code imports the Flask class, request, and Response objects. An instance of the Flask application is created and stored in the variable app. Then, the @app.route decorator listens for POST requests made to the /webhook path.

POST requests made to the endpoint URL will trigger the respond function. It extracts the JSON data from the incoming request and stores it in the variable data. Then filename.json is opened in write mode and the contents of data are written to the file. Finally, the file is closed and a Response with a status code of 200 is returned.
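Because the file is opened in write mode, each new request overwrites the previous payload. If every event needs to be kept, one option is to append each payload as a single line of JSON instead. The following is a minimal sketch and not part of the script above; the helper names append_event and read_events and the file name events.jsonl are assumptions chosen for illustration.

```python
import json


def append_event(path, event):
    """Append one webhook payload to `path` as a single line of JSON."""
    # Mode "a" adds to the end of the file instead of overwriting it.
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(event) + "\n")


def read_events(path):
    """Read back every stored payload, one per non-empty line."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Inside respond, a call such as append_event("events.jsonl", request.json) could stand in for the open, dump, and close calls.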

Now, run the following command:

    export FLASK_APP=main.py
    python -m flask run

This runs the application so that it listens for incoming webhook requests.
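To check that the endpoint is listening, a test event can be posted to it from a second terminal. The sketch below uses only the Python standard library and assumes Flask's default development address, http://127.0.0.1:5000. The helper names and any sample payload are hypothetical.

```python
import json
import urllib.request


def build_webhook_request(url, payload):
    """Build a JSON POST request like the one a webhook sender would issue."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_test_event(url, payload):
    """Send the request and return the HTTP status code of the response."""
    with urllib.request.urlopen(build_webhook_request(url, payload)) as resp:
        return resp.status
```

With the Flask application running, send_test_event("http://127.0.0.1:5000/webhook", {"event": "test"}) returns the status code sent back by respond, and the payload should appear in filename.json.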

Step 2: Loading Data into Databricks

Here are the steps for uploading extracted data from Webhooks to Databricks:

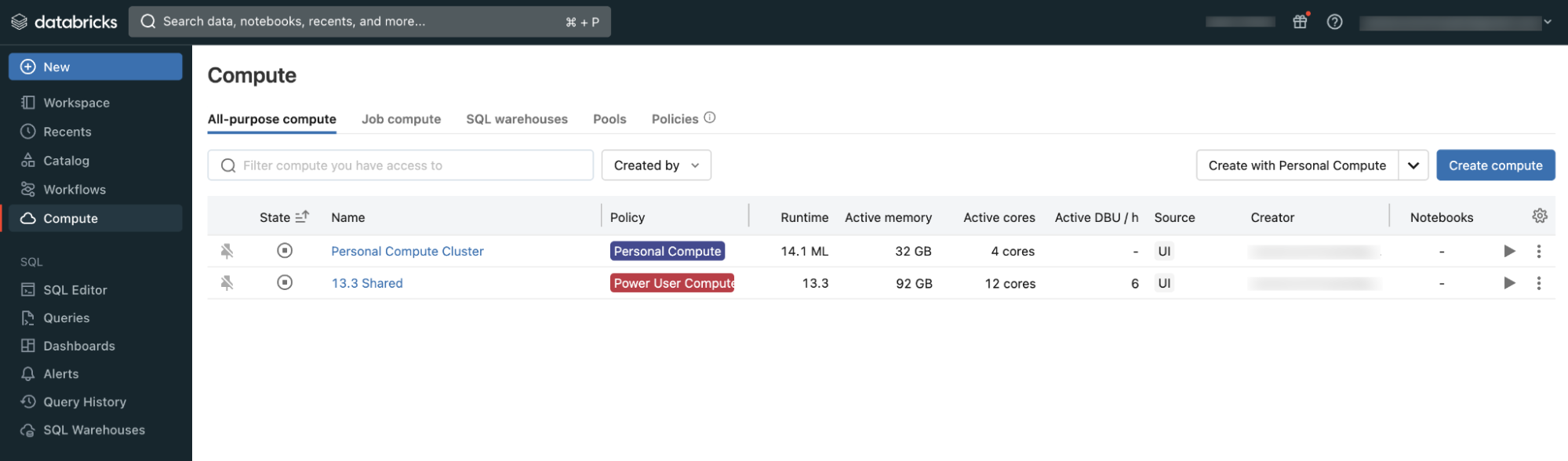

- Log in to your Databricks account and click on Compute on the left side of the Dashboard.

- On the Compute page, click Create Compute.

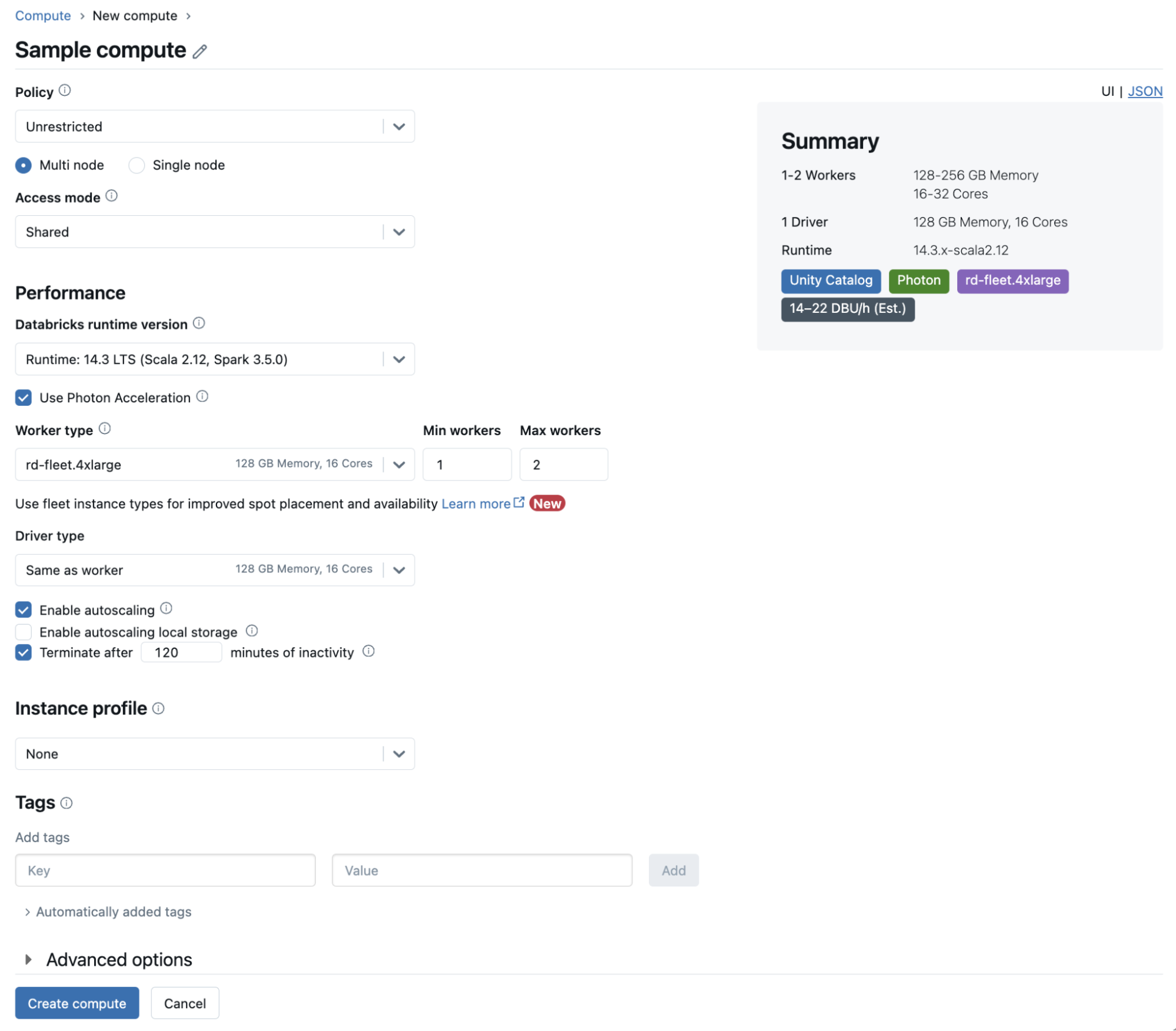

- Provide a name for the Cluster and select the Databricks runtime version according to your needs.

- Now, click on Create Cluster.

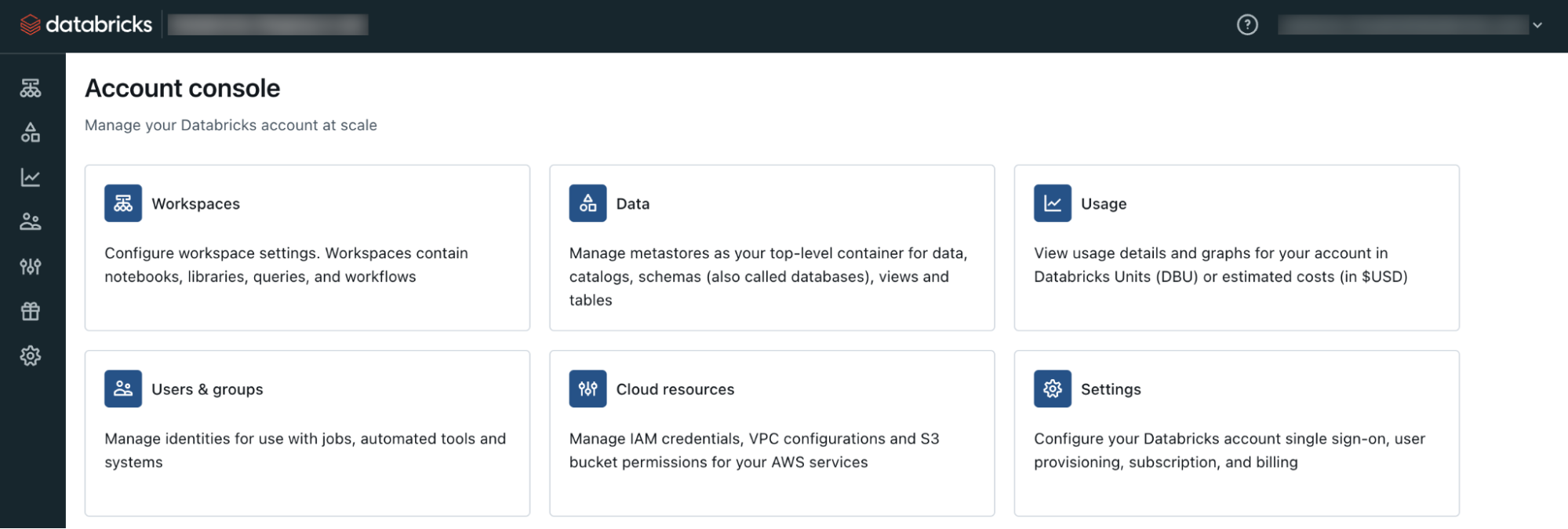

- Next, navigate to Settings, select Admin Console, and go to Workspace Settings.

- Enable the DBFS File browser. You need this for uploading data from your local drive.

- Now, head back to the Databricks dashboard, click Data, and then select the DBFS tab.

- Click Upload.

- Now, select the DBFS Target Directory where you want to upload the data and browse the file from your computer to upload the data.
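The same upload can also be scripted rather than done through the file browser. The sketch below builds a request for the DBFS REST API put endpoint (/api/2.0/dbfs/put), which accepts base64-encoded file contents. The workspace URL, access token, and target path are placeholders, and the helper name is hypothetical, so treat this as an illustration under those assumptions rather than a tested deployment script.

```python
import base64
import json
import urllib.request


def build_dbfs_put_request(workspace_url, token, dbfs_path, local_bytes):
    """Build a request that uploads `local_bytes` to `dbfs_path` via the DBFS API."""
    body = {
        "path": dbfs_path,
        # The API expects the file contents as a base64-encoded string.
        "contents": base64.b64encode(local_bytes).decode("ascii"),
        "overwrite": True,
    }
    return urllib.request.Request(
        workspace_url.rstrip("/") + "/api/2.0/dbfs/put",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # Placeholder: a personal access token for the workspace.
            "Authorization": "Bearer " + token,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned request to urllib.request.urlopen sends the upload. Inline contents are best suited to small files, such as a single webhook payload.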

Scenarios where this Method is Preferred

- One-time migration: This approach is ideal for one-time data migration from Webhooks to Databricks when real-time data synchronization is not required. Its primary advantage lies in its simplicity, as it involves minimal setup and configuration, making the migration quick and straightforward.

- Data security: Businesses often prioritize data security and confidentiality when dealing with sensitive organizational data. With the custom script, you can eliminate the need for moving the data through third-party servers.

- Flexibility: With custom scripts, you can perform complex transformations tailored to your unique requirements before loading into Databricks. You can perform several data validation checks like formatting, de-duplication, and more.
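As an illustration of the validation and de-duplication checks mentioned above, here is a minimal sketch. It assumes each webhook event is a dictionary carrying a unique id field; the function name, field names, and sample events are hypothetical.

```python
def clean_events(events, required_fields, id_field="id"):
    """Drop events that miss required fields or repeat an already-seen id."""
    required = set(required_fields) | {id_field}
    seen_ids = set()
    cleaned = []
    for event in events:
        # Validation: skip payloads that lack any required field.
        if not required.issubset(event):
            continue
        # De-duplication: keep only the first event for each id.
        if event[id_field] in seen_ids:
            continue
        seen_ids.add(event[id_field])
        cleaned.append(event)
    return cleaned


events = [
    {"id": 1, "email": "a@example.com"},
    {"id": 1, "email": "a@example.com"},  # duplicate delivery
    {"id": 2},                            # missing "email"
]
print(clean_events(events, required_fields=["email"]))
# [{'id': 1, 'email': 'a@example.com'}]
```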

Limitations of this Method

- Technical expertise: Building and deploying custom scripts for event-driven workflows requires extensive programming knowledge. This method demands proficiency in handling Webhook data and orchestrating seamless interactions with Databricks.

- Maintenance: Custom scripts demand more ongoing maintenance and support than specialized data integration tools. Unlike no-code ETL tools, which are often maintained by dedicated teams, custom scripts may rely on internal resources, making them more resource-intensive.

- Schema change: Custom scripts are designed to work with a specific schema provided by the webhook source. However, if the source schema is modified, the script may need to be modified to handle the changes correctly.
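One way to notice a schema change early is to compare each incoming payload against the fields the script expects. The sketch below checks top-level keys only; the function name and example fields are assumptions for illustration.

```python
def diff_schema(expected_fields, payload):
    """Return (missing, unexpected) top-level field names for one payload."""
    expected = set(expected_fields)
    actual = set(payload)
    missing = sorted(expected - actual)
    unexpected = sorted(actual - expected)
    return missing, unexpected


# Hypothetical case: the source renamed "email" to "mail".
print(diff_schema(["id", "email"], {"id": 1, "mail": "a@example.com"}))
# (['email'], ['mail'])
```

A script could log or reject payloads whenever either list is non-empty, instead of silently loading incomplete data.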

Method 2: Use a No-Code Tool to Connect Webhooks to Databricks

Hevo Data, a no-code ETL tool, facilitates effortless Webhooks to Databricks migration by automating the process. It uses pre-built connectors for extracting data from various sources, transforms it, and loads it into the destination.

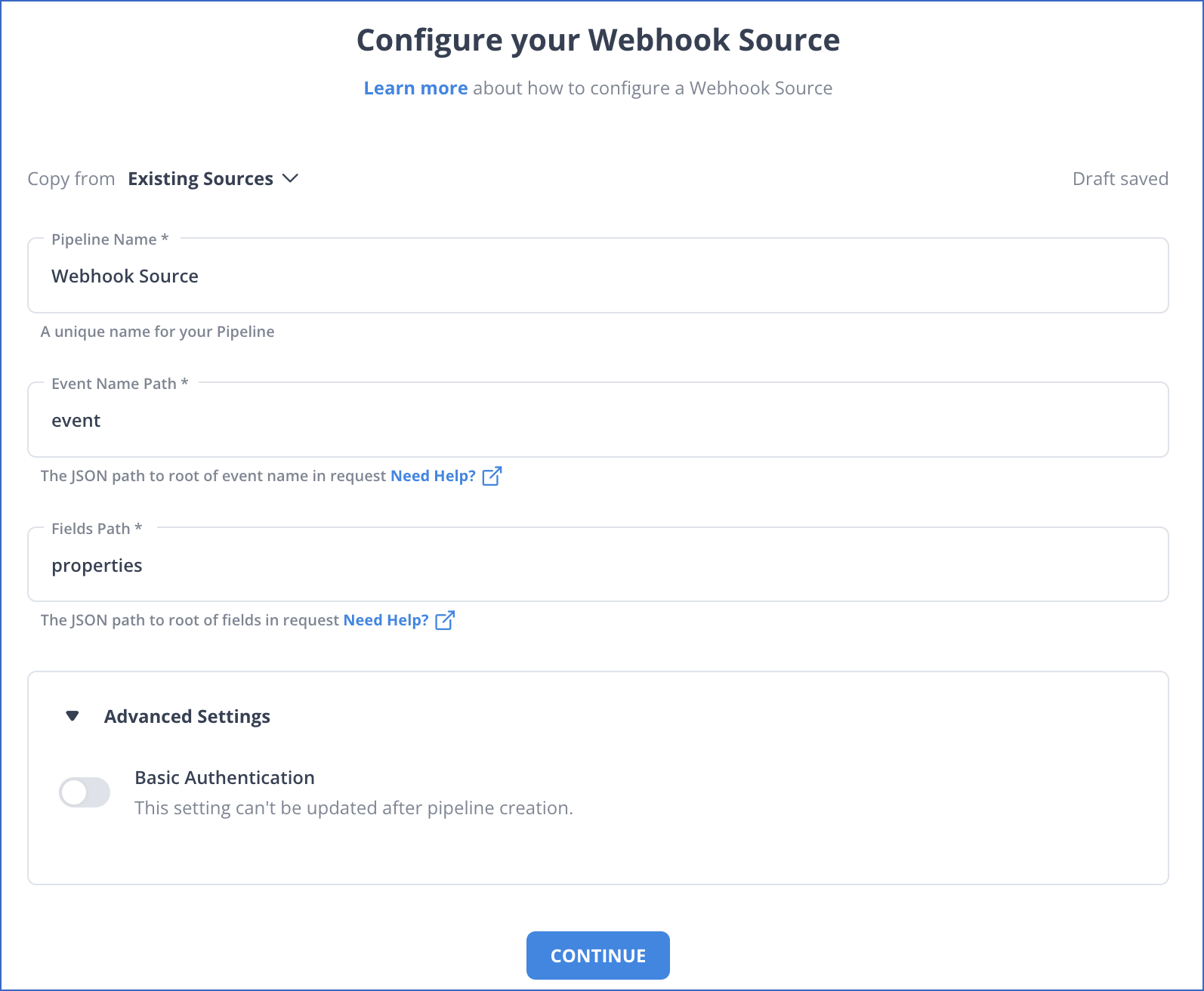

Step 1: Configure Webhooks as the Data Source

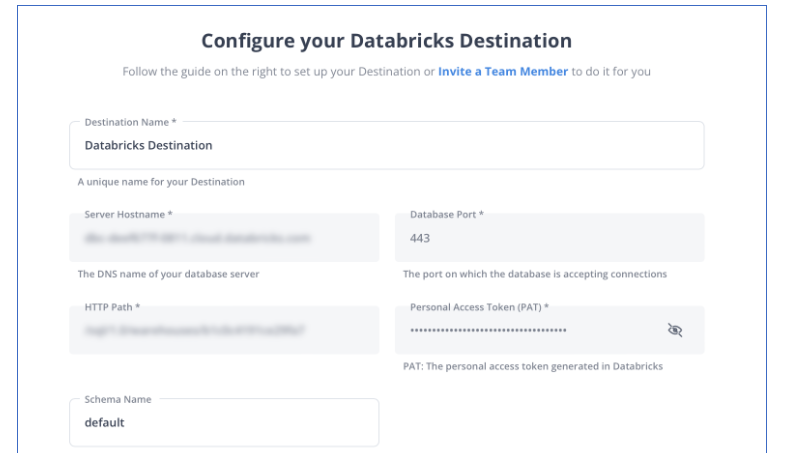

Step 2: Configure Databricks as the Destination

Overcome Limitations and Enjoy Benefits

Utilizing a no-code tool helps overcome the limitations of the first method and offers significant benefits:

- Fully automated data migration: By harnessing the power of no-code tools, you can simplify the data migration workflows. No-code tools typically provide automation capabilities for scheduled and recurring migrations.

- Scalability: No-code tools excel in handling large-scale data migrations as they are designed to accommodate expanding data volumes of organizations automatically. The inherent scalability of these tools empowers organizations to handle data migrations of varying sizes and complexities without compromising on performance.

- Rapid data pipeline deployment: No-code tools allow for quick setup of data integration pipelines and execute the data migration process in a fraction of the time. They offer pre-built connectors that reduce the time and effort required for data migration between supported sources and destinations.

With Hevo, setting up a Webhooks to Databricks ETL pipeline is a straightforward process and takes merely a few minutes. It supports 150+ data connectors to effortlessly extract data from your chosen source and seamlessly load it into the desired destination.

What Can You Achieve by Migrating Data from Webhooks to Databricks?

Upon completing the data migration from Webhooks to Databricks, you can unlock a plethora of benefits:

- Investigate engagements at different sales funnel stages to better understand your customer journey.

- Identify recurring customers to prioritize tailored incentives for strengthening relationships.

- Leverage data from diverse sources like project management and Human Resource platforms to establish key performance indicators for your team’s efficiency.

- Combine transactional data from Sales, Marketing, Product, and HR to address crucial inquiries.

- Analyze best-selling products and understand customer buying behavior.

Key Takeaways

- With Webhooks to Databricks integration, you can ensure that all the data received from the Webhooks source is stored in a centralized location.

- This allows you to perform in-depth analysis of your business operations, such as sales and marketing.

- There are two ways to load data from Webhooks to Databricks: a custom script and a no-code tool approach.

- Custom scripts provide flexibility in data integration, but they may require technical expertise and constant maintenance.

- On the other hand, a no-code tool like Hevo Data automates the migration process, ensuring seamless and scalable data transfers.

Want to take Hevo for a spin? Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Frequently Asked Questions

1. Does Databricks support webhooks?

Yes. Databricks supports webhooks for integration and can be connected using ETL tools like Hevo Data.

2. How to get data from a webhook?

You can get data from a webhook by setting up an endpoint to receive webhook requests and process the incoming data using tools like Hevo Data.

3. Can Databricks connect to API?

Yes. Databricks can connect to APIs using REST or Python libraries.