Easily move your data from YouTube Analytics to BigQuery to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real-time.

Connecting YouTube Analytics to BigQuery offers benefits such as custom reporting, cross-platform insights, and scalable storage. However, setting up the integration can be confusing, whether or not you have coding experience. This guide walks you through two step-by-step methods for moving your data from YouTube Analytics to BigQuery.


Benefits of Migrating Your Data from YouTube Analytics to BigQuery

- Custom Dashboards: You can construct custom dashboards to monitor and visualise the key metrics relevant to your business.

- Advanced Data Analysis: Perform complex query operations and detailed analysis on large datasets.

- Thorough Reporting: Combine YouTube data with other sources to get the fullest picture.

- Improved Decision Making: Drive content and marketing strategies with detailed, in-depth analytics.

- Better Data Integration: Pool the data from YouTube Analytics with other Google tools seamlessly.

Hevo’s no-code platform enables seamless integration of your YouTube Analytics data into BigQuery, empowering your team with real-time insights and streamlined analytics.

- Real-Time Insights: Stream data into BigQuery for up-to-date performance tracking of your YouTube channel.

- No-Code Setup: Simplify data integration without writing a single line of code.

- Centralized Analytics: Combine YouTube data with sales and product data for a 360° view.

- Scalable Architecture: Handle high-volume video data with ease using BigQuery’s serverless infrastructure.

Try Hevo and discover why 2000+ customers have chosen it and how 40+ teams across industry verticals use it to power their analytics stack.

Methods to Connect YouTube Analytics to BigQuery

Method 1: Integrate your Data using Hevo

Method 2: Replicating Data using YouTube Analytics API

Method 1: Replicate Data from YouTube Analytics to BigQuery Using Hevo

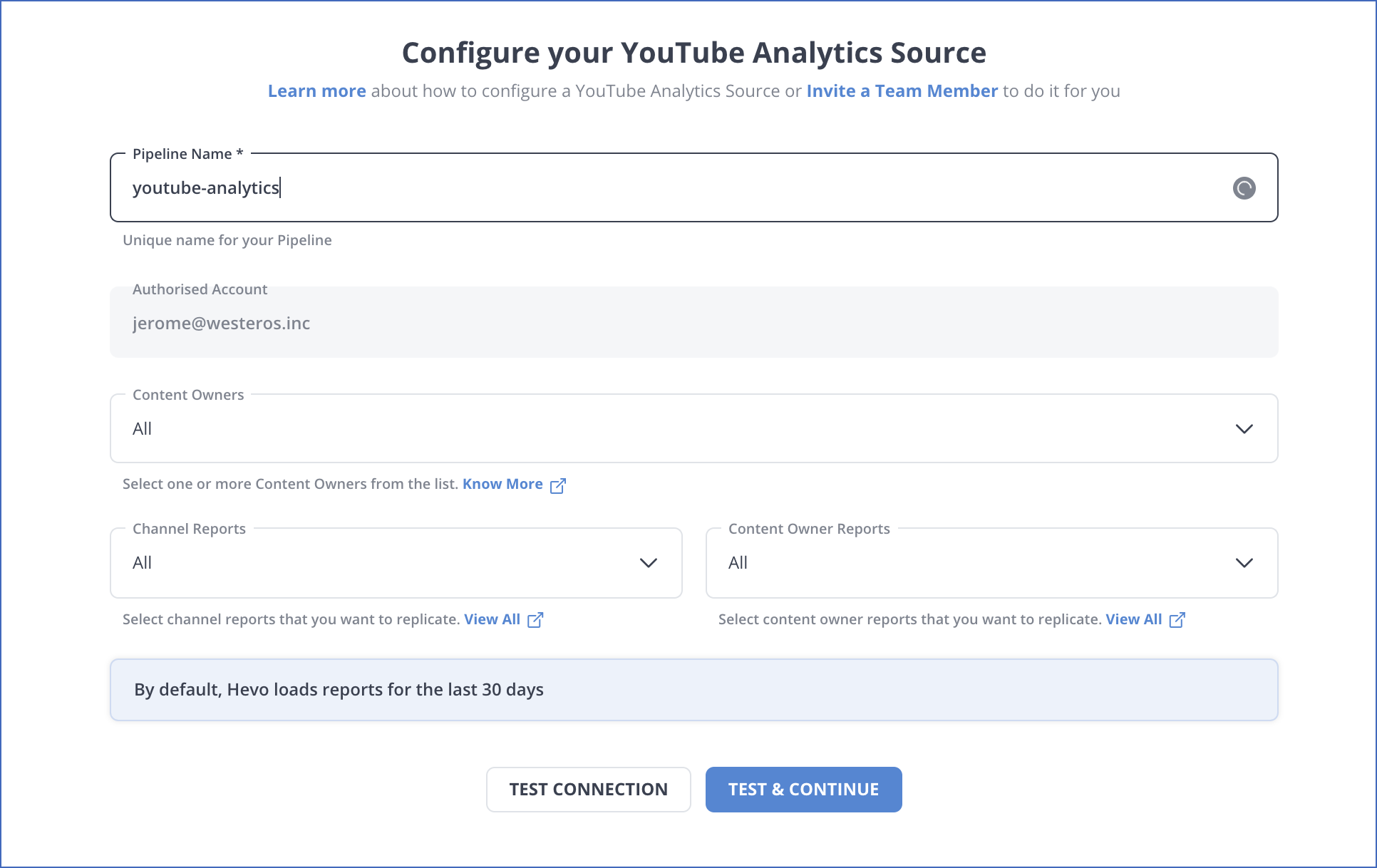

Step 1: Configure YouTube Analytics as a Source

Step 2: Configure BigQuery as a Destination

Your ETL pipeline is set up! Once it is configured, Hevo collects new and updated data from YouTube Analytics every five minutes (the default pipeline frequency) and replicates it into BigQuery.

Method 2: Replicate Data from YouTube Analytics to BigQuery Using YouTube Analytics APIs

The example below uses the Reports Query API (`reports.query`). You query the endpoint by specifying metrics, such as views, together with dimensions that shape the report, such as geography, demographics, or traffic source.

Step 1: Query the YouTube Analytics API

- YouTube provides the YouTube Analytics API for retrieving channel data. Requests must be authorized with OAuth 2.0; once you have an access token, you send a GET request to the reports endpoint:

GET https://youtubeanalytics.googleapis.com/v2/reports
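As a minimal sketch, the request can be built with Python's standard library. It assumes you already hold an OAuth 2.0 access token with the `https://www.googleapis.com/auth/yt-analytics.readonly` scope. The helper names `build_report_request` and `fetch_report` are hypothetical, and the metric and dimension names used below are only examples.

```python
import json
import urllib.parse
import urllib.request

REPORTS_ENDPOINT = "https://youtubeanalytics.googleapis.com/v2/reports"


def build_report_request(access_token, start_date, end_date, metrics, dimensions=None):
    """Build (without sending) an authorized GET request for a channel report."""
    params = {
        "ids": "channel==MINE",        # report on the authorized user's own channel
        "startDate": start_date,       # YYYY-MM-DD
        "endDate": end_date,           # YYYY-MM-DD
        "metrics": ",".join(metrics),  # comma-separated metric names
    }
    if dimensions:
        # Dimensions are optional; they group the metrics (e.g. by day or country).
        params["dimensions"] = ",".join(dimensions)
    url = REPORTS_ENDPOINT + "?" + urllib.parse.urlencode(params)
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {access_token}"}, method="GET"
    )


def fetch_report(request):
    """Send the request and decode the JSON response body into a dict."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

For example, `fetch_report(build_report_request(token, "2024-01-01", "2024-01-31", ["views", "estimatedMinutesWatched"], ["day"]))` would request daily views and watch time for January 2024, where `token` is your access token.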

Below is the structure of the response returned by the endpoint (field values are shown as their data types):

```
{
  "kind": "youtubeAnalytics#resultTable",
  "columnHeaders": [
    {
      "name": string,
      "dataType": string,
      "columnType": string
    },
    ... more headers ...
  ],
  "rows": [
    [
      {value}, {value}, ...
    ]
  ]
}
```

Step 2: Load the JSON Data into BigQuery
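BigQuery loads JSON only in newline-delimited form (one object per line), while the API returns a single `resultTable` object whose `rows` are arrays of positional values, and BigQuery does not allow arrays nested directly inside arrays. A minimal sketch, assuming the response has already been parsed into a Python dict, reshapes each row into an object keyed by the column names from `columnHeaders`. The function name `result_table_to_ndjson` is hypothetical.

```python
import json


def result_table_to_ndjson(report):
    """Flatten a youtubeAnalytics#resultTable dict into newline-delimited JSON text."""
    names = [header["name"] for header in report.get("columnHeaders", [])]
    lines = []
    # "rows" may be absent when the report has no data, so default to an empty list.
    for row in report.get("rows", []):
        # Pair each cell with the column name at the same position.
        lines.append(json.dumps(dict(zip(names, row))))
    return "\n".join(lines) + ("\n" if lines else "")
```

Writing the returned string to a file, for example `report.json`, gives you a file in the format BigQuery expects when you upload it during table creation.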

NOTE: Before uploading the data, open BigQuery in the Google Cloud console and select the project in which you want to create the dataset.

- Create a dataset by providing a dataset ID, a data location, and a default table expiration period.

NOTE: The default table expiration controls how long new tables in the dataset are kept. If you select “Never”, tables are kept until you delete them; otherwise, specify the number of days after which tables, such as temporary ones, should be deleted.

- Now, create a table within the dataset, choose newline-delimited JSON as the file format, and upload the file from your computer, Google Cloud Storage, or Google Drive.
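If you prefer an explicit schema over schema auto-detection when creating the table, one option is to derive it from `columnHeaders`. The sketch below assumes the API's `dataType` values are `STRING`, `INTEGER`, and `FLOAT`, and falls back to `STRING` for anything else; `bigquery_schema` and `schema_json` are hypothetical helpers. The resulting JSON array follows BigQuery's schema format (`name`, `type`, `mode`) and can be pasted into the schema editor's text mode.

```python
import json

# Assumed mapping from YouTube Analytics columnHeaders[].dataType to BigQuery column types.
TYPE_MAP = {"STRING": "STRING", "INTEGER": "INTEGER", "FLOAT": "FLOAT"}


def bigquery_schema(report):
    """Build a BigQuery schema (list of field dicts) from a resultTable response."""
    return [
        {
            "name": header["name"],
            # Unknown or missing types fall back to STRING (an assumption, not an API rule).
            "type": TYPE_MAP.get(header.get("dataType"), "STRING"),
            "mode": "NULLABLE",
        }
        for header in report.get("columnHeaders", [])
    ]


def schema_json(report):
    """Render the schema as indented JSON text."""
    return json.dumps(bigquery_schema(report), indent=2)
```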

Limitations of Using YouTube APIs

- Rate Limits: The use of the API is subject to quotas and rate limits, which could restrict the amount of data you can transfer.

- Complexity: You have to handle API authentication as well as data extraction and transformation yourself.

- Data Latency: Data is refreshed periodically rather than in real time, so recent activity may be delayed before it is available for analysis.

- Limited Data Availability: Some metrics and dimensions shown in YouTube Analytics may not be available through the API.
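Short-term rate limits are commonly handled by retrying with exponential backoff. The sketch below is generic: `RetryableError` and `with_backoff` are hypothetical names, and deciding which API responses count as retryable (for example, HTTP 429 or rate-limit errors) is an assumption left to your client code. Retrying does not help once a quota is exhausted for the day; those requests have to wait until the quota resets.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Hypothetical exception your client raises for rate-limit responses."""


def with_backoff(call: Callable[[], T], max_attempts: int = 5,
                 base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run call(), retrying on RetryableError with exponential backoff plus jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Wait base_delay * 2**attempt seconds plus up to base_delay of random jitter.
            sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
    raise AssertionError("unreachable")  # the loop always returns or raises
```

Passing `sleep` as a parameter keeps the helper easy to test without real waits.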

Conclusion

In this blog, we have walked through two step-by-step methods for integrating your YouTube Analytics data with BigQuery. This integration is valuable for most users because it enables scalable storage, cross-platform insights, and advanced analytics. Following these methods helps you deal with common hurdles such as API quotas, complex data structures, and authentication.

Although both methods are effective, we recommend Hevo’s no-code pipeline tool, which streamlines the entire process, from real-time replication to transformation, without your needing to write a single line of code.

Sign up for a 14-day free trial with Hevo and streamline your data integration. Also, check out Hevo’s pricing page for a better understanding of the plans.

Frequently Asked Questions

1. How do I extract data from YouTube Analytics?

You can use the YouTube Analytics API to extract data from YouTube Analytics.

2. How do I transfer data from Google Analytics to BigQuery?

You can load Google Analytics data into BigQuery using the Google Analytics BigQuery Export feature or an automated platform like Hevo.

3. Can you add YouTube Analytics to Google Analytics?

Direct integration is not available, but you can use the YouTube Analytics API to pull data and combine it manually with Google Analytics data in tools like Google Sheets or BigQuery.

4. Is it possible to track YouTube Analytics?

Yes, you can track YouTube analytics via the YouTube Studio Dashboard.