Unlock the full potential of your Amazon Redshift data by integrating it with Google BigQuery. With Hevo’s automated pipeline, you can get data flowing with minimal effort.

Amazon Redshift and Google BigQuery are two of the most widely used cloud data warehouses. Both are built to scale and to help enterprises analyze large volumes of data.

Choosing the right cloud-based data warehouse is a key decision for any enterprise that needs to support its analysis, reporting, and BI functions.

Both Amazon Redshift and Google BigQuery are used widely across a company’s business functions, from BI to trend forecasting and budgeting.

Both are fully managed, petabyte-scale cloud data warehouses, and each has strengths and weaknesses. The right choice depends on how well each one matches your needs and expectations.

What is Amazon Redshift?

Amazon Redshift is built and designed for today’s data scientists, data analysts, data administrators, and software developers. It gives users the ability to perform operations on billions of rows, making Redshift perfect for analyzing large quantities of data. Its architecture is based on an extensive communication channel between the client application and the data warehouse cluster. To learn more about Amazon Redshift infrastructure, see Redshift documentation.

Important Amazon Redshift Features:

- AWS’s Integrated Analytics Ecosystem: AWS offers a wide range of built-in ecosystem services, making it easier to handle end-to-end analytics workflows without compliance and operational roadblocks. Well-known examples include AWS Lake Formation, AWS Glue, Amazon EMR, AWS DMS, and the AWS Schema Conversion Tool.

- Redshift ML: Redshift ML allows users to create and train Amazon SageMaker models on data from Redshift for predictive analytics.

- Machine Learning For Maximum Performance: Amazon Redshift uses ML internally to deliver high throughput and performance. Its algorithms predict the behavior of incoming queries based on certain factors to help prioritize critical workloads.

What is Google BigQuery?

Google BigQuery is a fully managed, serverless data warehouse. Similar to Amazon Redshift, it allows users to run analysis over petabytes of data in real-time. It’s cost-effective and only requires users to understand and write standard SQL.

Important Google BigQuery Features:

- BigQuery Omni: BigQuery Omni lets users run queries against data stored on other cloud platforms, such as AWS and Microsoft Azure. This provides a consistent data experience and helps break down data silos when analyzing across clouds.

- BigQuery ML: Users can create and run ML models in BigQuery using simple SQL queries. Widely used model types include linear regression, binary and multiclass logistic regression, matrix factorization, time series, and deep neural network models.

- BigQuery Data Transfer Service: It automates data movement into BigQuery, which is useful when multiple data sources, including other data warehouses, are involved.

Why do we need to migrate from Redshift to BigQuery?

Migrating from Amazon Redshift to Google BigQuery can be a good move for the following reasons:

- Performance and Scalability: BigQuery can deliver strong query performance on large datasets and scales automatically, with no clusters to size or manage.

- Separate storage and compute: BigQuery separates its storage and compute resources so that each can scale independently. This allows for optimized performance and cost management.

- Serverless Architecture: BigQuery is serverless, so there is no infrastructure to manage. Data engineers can spend more of their time on data processing and analytics rather than on cluster maintenance.

Method 1: Amazon Redshift to Google BigQuery Migration Using the Console

This method involves migrating data from Redshift to Google BigQuery using the web console and APIs. You will need some technical knowledge of APIs and the BigQuery console to use this method.

Method 2: Using automated platforms like Hevo

This method involves two simple steps that migrate your data from Amazon Redshift to Google BigQuery without tedious manual work.

Amazon Redshift to Google BigQuery Migration

Method 1: Amazon Redshift to Google BigQuery Migration Using the Console

The following image shows how data transfer from Redshift to BigQuery works:

Prerequisites:

- An active Google Cloud account with billing enabled.

- A Google Cloud Project created.

- BigQuery and BigQuery Data Transfer Service APIs enabled.

- Ensure that the principal creating the transfer has the following permissions in the project containing the transfer job:

  - bigquery.transfers.update permission to create the transfer.

  - Both bigquery.datasets.get and bigquery.datasets.update permissions on the target dataset.
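The permission prerequisite above can be checked with a small helper before you open the console. The sketch below is only an illustration: the function name `missing_permissions` is hypothetical, and it assumes you already have the list of permissions granted to the principal from some other source (for example, an IAM policy export).

```python
# Permissions that the prerequisites list names for creating a transfer.
REQUIRED_PERMISSIONS = frozenset({
    "bigquery.transfers.update",
    "bigquery.datasets.get",
    "bigquery.datasets.update",
})


def missing_permissions(granted):
    """Return the required permissions absent from `granted`, sorted."""
    return sorted(REQUIRED_PERMISSIONS - set(granted))


if __name__ == "__main__":
    # Hypothetical principal that lacks update rights on the dataset.
    granted = ["bigquery.transfers.update", "bigquery.datasets.get"]
    print(missing_permissions(granted))
```

An empty result means the principal holds every permission listed above.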

To set up the data transfer, follow these steps:

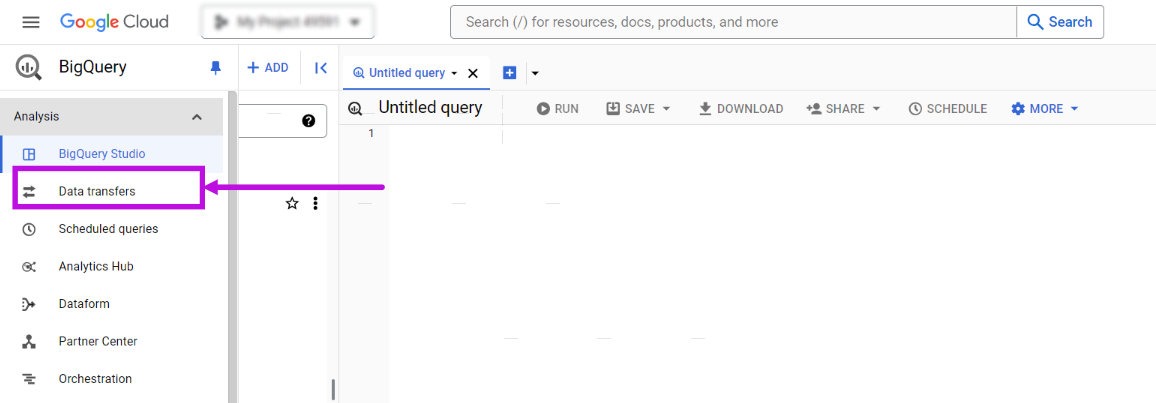

Step 1: In the Google Cloud console, go to the BigQuery page and click Data Transfers.

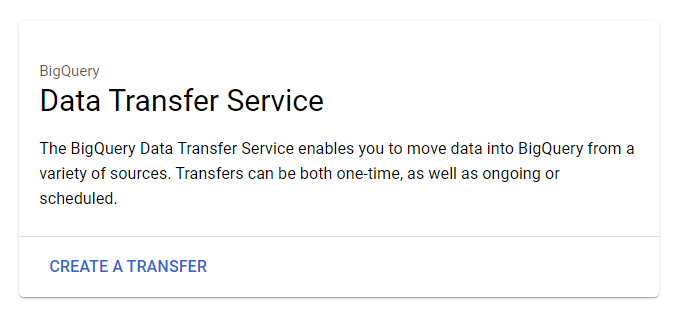

Step 2: Click on ‘CREATE A TRANSFER’.

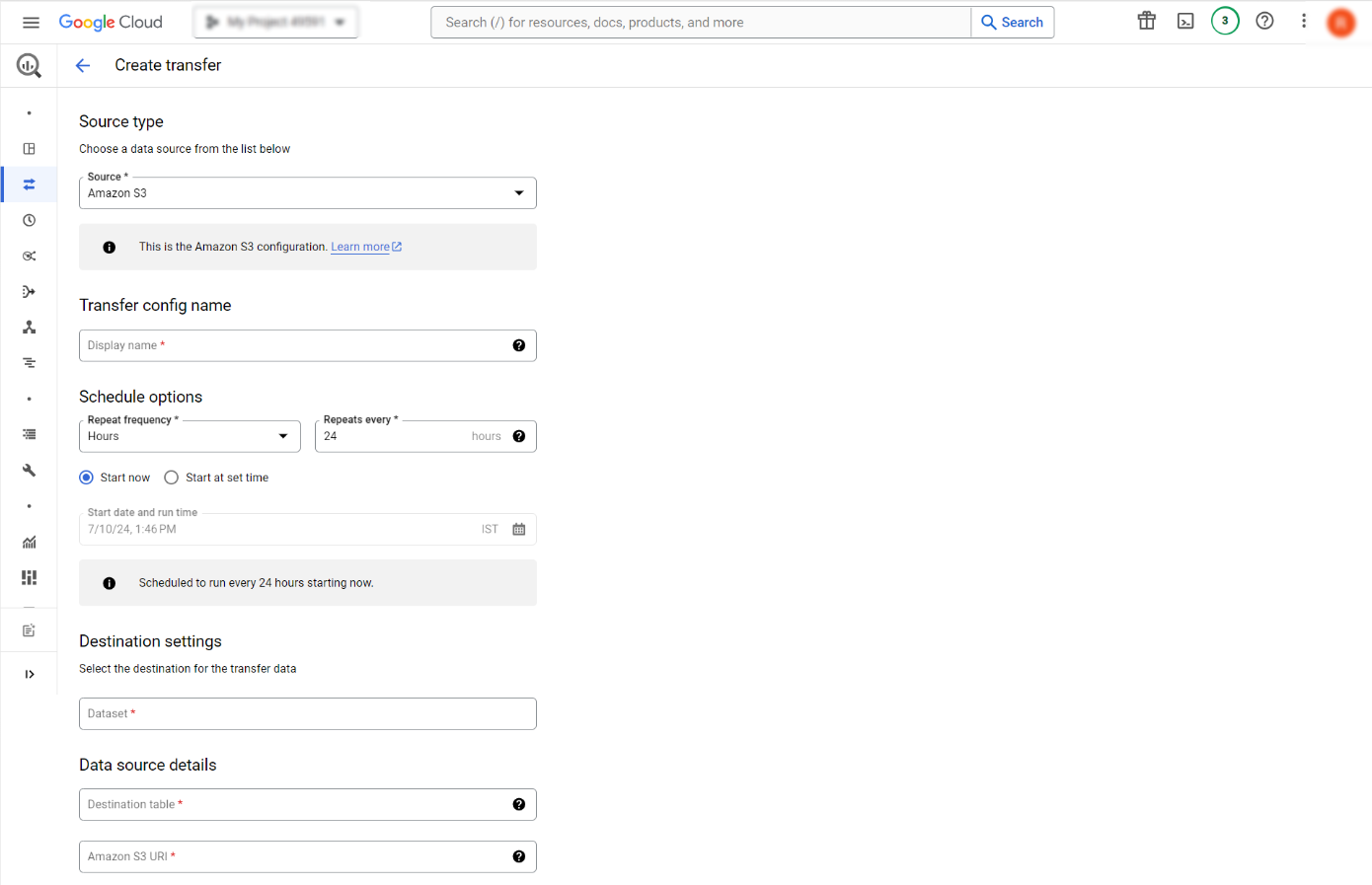

Step 3: In the Source type section, select Migration: Amazon Redshift from the Source list.

Step 4: Select ‘Source’ as ‘Amazon S3,’ and enter the migration name in the ‘Transfer config name’ box.

Step 5: Enter the dataset ID in the ‘Destination settings’ box, and continue by filling in the ‘Data source details.’ It should look like this:

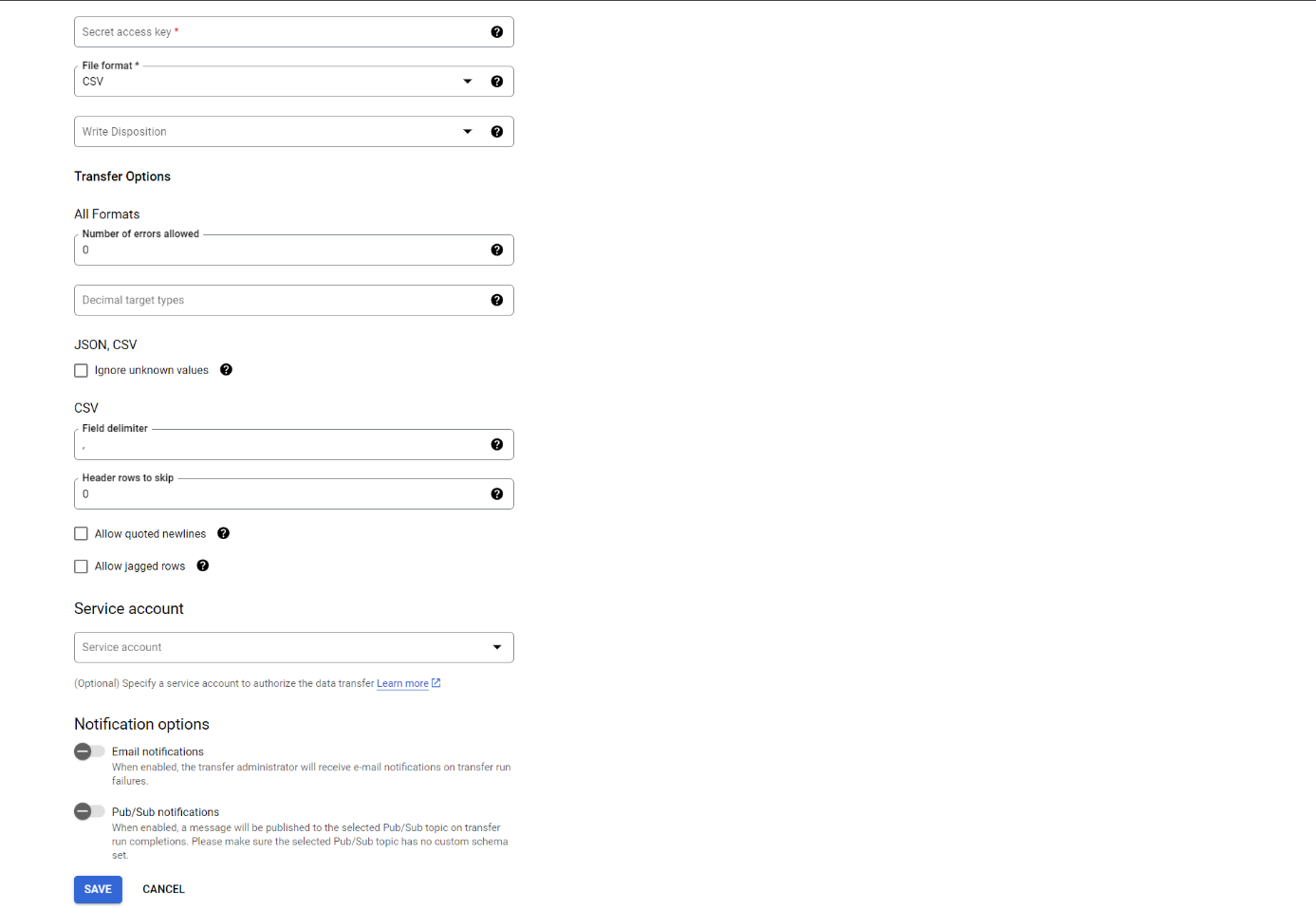

Step 6: Click on ‘Save’ to continue.

Step 7: After successful execution, the Cloud Console will display all the transfer setup details, including the Resource name.
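The same transfer can, in principle, be created programmatically instead of through the console. The sketch below is a hedged illustration, not the documented procedure: it assumes the `google-cloud-bigquery-datatransfer` Python package, a data source ID of `redshift`, and parameter keys such as `jdbc_url` and `s3_bucket` as recalled from Google's documentation. Verify all of these against the current BigQuery Data Transfer Service reference. The helper names are hypothetical.

```python
def build_redshift_transfer_params(jdbc_url, username, password,
                                   access_key_id, secret_access_key,
                                   s3_bucket, schema, table_patterns):
    """Collect the 'Data source details' into one dict.

    The keys are assumptions based on Google's docs; confirm before use.
    """
    return {
        "jdbc_url": jdbc_url,
        "database_username": username,
        "database_password": password,
        "access_key_id": access_key_id,
        "secret_access_key": secret_access_key,
        "s3_bucket": s3_bucket,            # staging area for unloaded data
        "redshift_schema": schema,
        "table_name_patterns": table_patterns,
    }


def create_transfer(project_id, dataset_id, display_name, params):
    """Create the transfer config and return its resource name."""
    # Imported lazily so the rest of the sketch runs without the package.
    from google.cloud import bigquery_datatransfer

    client = bigquery_datatransfer.DataTransferServiceClient()
    config = bigquery_datatransfer.TransferConfig(
        destination_dataset_id=dataset_id,
        display_name=display_name,
        data_source_id="redshift",         # assumed data source ID
        params=params,
    )
    created = client.create_transfer_config(
        parent=client.common_project_path(project_id),
        transfer_config=config,
    )
    return created.name


if __name__ == "__main__":
    # Placeholder values only; nothing is sent to Google Cloud here.
    params = build_redshift_transfer_params(
        "jdbc:redshift://example-host:5439/dev", "user", "secret",
        "AKIA_PLACEHOLDER", "SECRET_PLACEHOLDER",
        "s3://example-bucket/staging", "public", "orders.*",
    )
    print(sorted(params))
```

Calling `create_transfer` requires Google Cloud credentials. The value it returns corresponds to the Resource name that the console shows in Step 7.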

Limitations of the manual method:

- Time-Consuming Process: Extracting large volumes of data from Redshift takes time and resources.

- Data Compatibility Issues: Differences in data types and schema structures between Redshift and BigQuery may require significant adjustments and transformations.

- Downtime and Disruption: Depending on the volume of data to be migrated, manual migration may involve some application downtime or degraded service.

- Lack of Automation: The manual process requires significant human effort, increasing the likelihood of errors and prolonging the migration timeline.
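To make the data compatibility limitation above concrete, the sketch below flags Redshift columns whose types have no entry in a small type mapping. The mapping is partial and illustrative, based on Google's Redshift SQL translation guidance as best recalled, so check it against the current reference before relying on it. The function names and sample columns are hypothetical.

```python
import re

# Partial, illustrative Redshift -> BigQuery type mapping (an assumption;
# verify each entry against Google's translation reference).
REDSHIFT_TO_BIGQUERY = {
    "SMALLINT": "INT64", "INTEGER": "INT64", "BIGINT": "INT64",
    "DECIMAL": "NUMERIC", "NUMERIC": "NUMERIC",
    "REAL": "FLOAT64", "DOUBLE PRECISION": "FLOAT64",
    "BOOLEAN": "BOOL",
    "CHAR": "STRING", "VARCHAR": "STRING",
    "DATE": "DATE",
    "TIMESTAMP": "DATETIME",
    "TIMESTAMPTZ": "TIMESTAMP",
}


def map_type(redshift_type):
    """Map a Redshift type such as 'varchar(256)' to a BigQuery type.

    Returns None when the type is not in the mapping above.
    """
    base = re.sub(r"\(.*\)", "", redshift_type)      # drop length/precision
    base = re.sub(r"\s+", " ", base).strip().upper()
    return REDSHIFT_TO_BIGQUERY.get(base)


def unmapped_columns(columns):
    """Return names of columns whose types need manual attention."""
    return [name for name, rs_type in columns if map_type(rs_type) is None]


if __name__ == "__main__":
    columns = [("id", "bigint"), ("name", "varchar(256)"), ("payload", "super")]
    print(unmapped_columns(columns))
```

Columns reported by `unmapped_columns` are the ones most likely to need a manual transformation before or after the transfer.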

Method 2: Procedure to Enable Amazon Redshift to Google BigQuery Migration Using Hevo

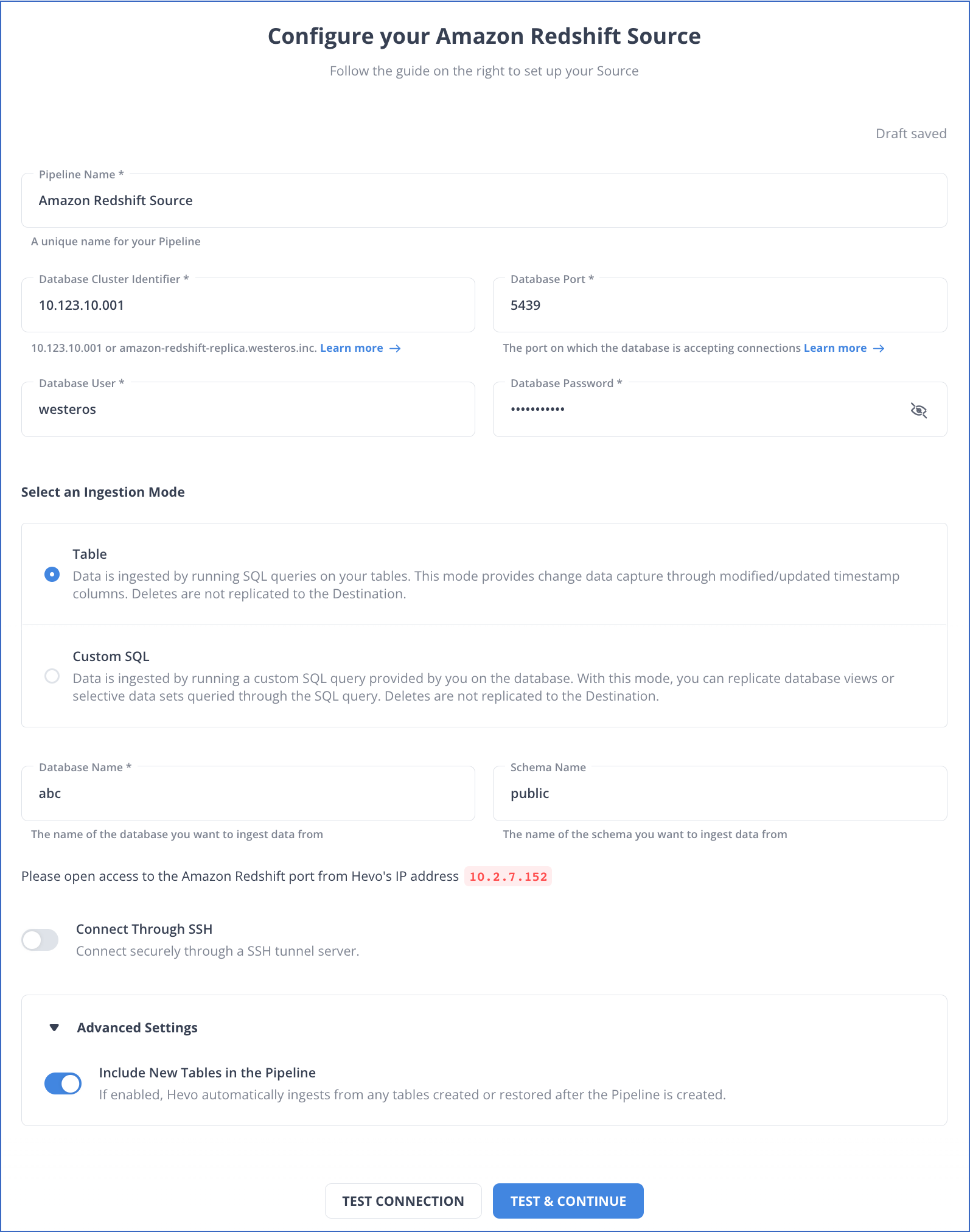

Step 1: Set up Amazon Redshift as a Source.

Step 2: Set up Google BigQuery as a Destination.

Conclusion

In this article, we covered some data warehouse basics and discussed two methods to migrate data from Amazon Redshift to Google BigQuery:

In the first method, we walked through the manual way to migrate data. This approach requires a sound understanding of Redshift, BigQuery, and their migration conventions, which leaves room for new users to make mistakes.

In the second method, we used Hevo Data to achieve our desired results. Through Hevo, the process was much faster, fully automated, and required no code.

Frequently Asked Questions

1. How to connect Redshift with BigQuery?

To connect Redshift with BigQuery, you can opt for any of the following methods:

- Manually using the Google Cloud console.

- Using automated data platforms like Hevo.

2. Is Redshift equivalent to BigQuery?

Both are cloud-based data warehouses, but they differ in architecture and pricing. Redshift is more traditional with cluster-based management, while BigQuery is serverless and scales automatically.

3. How do I transfer data to BigQuery?

You can use the bq command-line tool, Google Cloud Storage (GCS) with gsutil, or data integration tools like Hevo.
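As a rough illustration of the bq route, the sketch below assembles a `bq load` command for a file that is already in Google Cloud Storage. The helper name, table, and bucket are hypothetical. The sketch only builds and prints the command; it does not run it.

```python
import shlex


def build_bq_load_command(table, gcs_uri, source_format="CSV", autodetect=True):
    """Build the argument list for loading a GCS file into a BigQuery table."""
    cmd = ["bq", "load", f"--source_format={source_format}"]
    if autodetect:
        cmd.append("--autodetect")   # let BigQuery infer the schema
    cmd += [table, gcs_uri]
    return cmd


if __name__ == "__main__":
    # Hypothetical dataset.table and bucket path.
    cmd = build_bq_load_command("mydataset.orders", "gs://my-bucket/orders.csv")
    print(shlex.join(cmd))
```

Passing the list to `subprocess.run` would execute it, assuming the Google Cloud SDK is installed and authenticated.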