Key Takeaways

You can move CSV data to BigQuery using any of these four methods:

Method 1: Using Hevo Data (Recommended)

Step 1: Set Google Sheets as your source.

Step 2: Choose BigQuery as your destination.

Method 2: Using Command Line Interface

Step 1: Install and authenticate Google Cloud SDK.

Step 2: Set up your project and dataset using CLI commands.

Step 3: Load the CSV file with a single command.

Step 4: Preview and validate your data in BigQuery.

Method 3: Using BigQuery Web UI

Step 1: Create a new dataset in the BigQuery console.

Step 2: Upload your CSV file and let BigQuery auto-detect schema.

Step 3: Preview, edit schema, and verify the loaded table.

Method 4: Using Web API

Step 1: Enable the BigQuery API in Google Cloud Console.

Step 2: Configure your Python script with project and table details.

Step 3: Run the script to load your CSV data.

Step 4: Preview the imported table in BigQuery.

Migrating data from your CSV files to your BigQuery warehouse is crucial for analytical reporting. Still, the migration process can be daunting for those who lack coding knowledge or have little experience building pipelines. This blog therefore provides an easy step-by-step guide to migrating your data from CSV to BigQuery.

Why move data from CSV to BigQuery?

- Enhances overall efficiency: Uploading CSV files to BigQuery simplifies data management and enhances the efficiency of your analytical workflows, making it easier to handle and analyze large datasets.

- Performance: BigQuery is designed to handle massive volumes of data efficiently, offering quick query execution that reduces the time needed to gain insights from your data.

- Advanced Analytics: BigQuery provides advanced analytics tools, such as ML and spatial data analysis, which deliver deeper insights to inform wise decision-making.

- Cost-Effective: BigQuery’s pay-as-you-go pricing approach ensures you only pay for the storage and queries you use, eliminating the need for expensive hardware or software.

- Scalability: Features like real-time analytics, on-demand storage scaling, BigQuery ML, and optimization tools make it easier to manage and scale your data analysis processes as needed.

Seamlessly migrate your data to BigQuery. Hevo elevates your data migration game with its no-code platform. Ensure seamless data migration using features like:

- Seamless integration with your desired data warehouse, such as BigQuery.

- Transform and map data easily with drag-and-drop features.

- Real-time data migration to leverage AI/ML features of BigQuery.

Join 2000+ users who trust Hevo for seamless data integration, rated 4.7 on Capterra for its ease and performance.

Methods To Load Data From CSV to BigQuery

- Method 1: Using Hevo Data

- Method 2: Using Command Line Interface

- Method 3: Using BigQuery Web UI

- Method 4: Using Web API

Method 1: Using Hevo Data

Step 1: Configure Google Sheets as your Source.

Step 2: Configure BigQuery as your Destination.

After the pipeline has finished ingesting, open BigQuery and preview the loaded table.

Method 2: Using Command Line Interface

Step 1: Google Cloud Setup

First, install the gcloud command line interface. Then authenticate yourself to Google Cloud by running the command below, sign in with your account, and grant the Google Cloud SDK the permissions it requests.

gcloud auth login

After you complete all these steps, this window should appear:

Step 2: Command Prompt Configurations

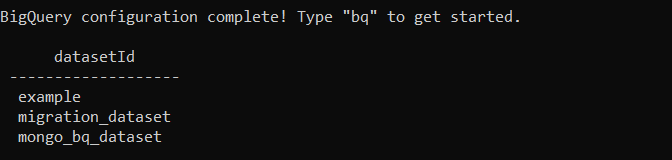

First, run the bq command to set up Google’s BigQuery command-line configuration. Then, to list the datasets in your project, run this command:

bq ls <project_id>:

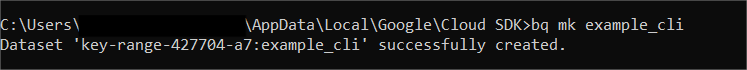

To create a new dataset, run the command:

bq mk <new_dataset_name>

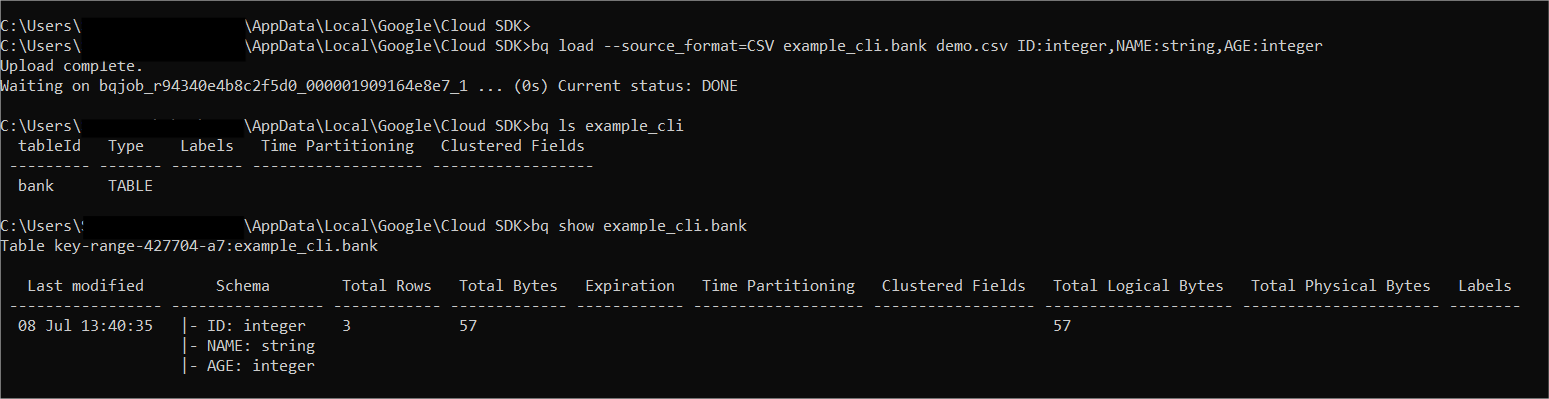

Step 3: Load the data into the dataset

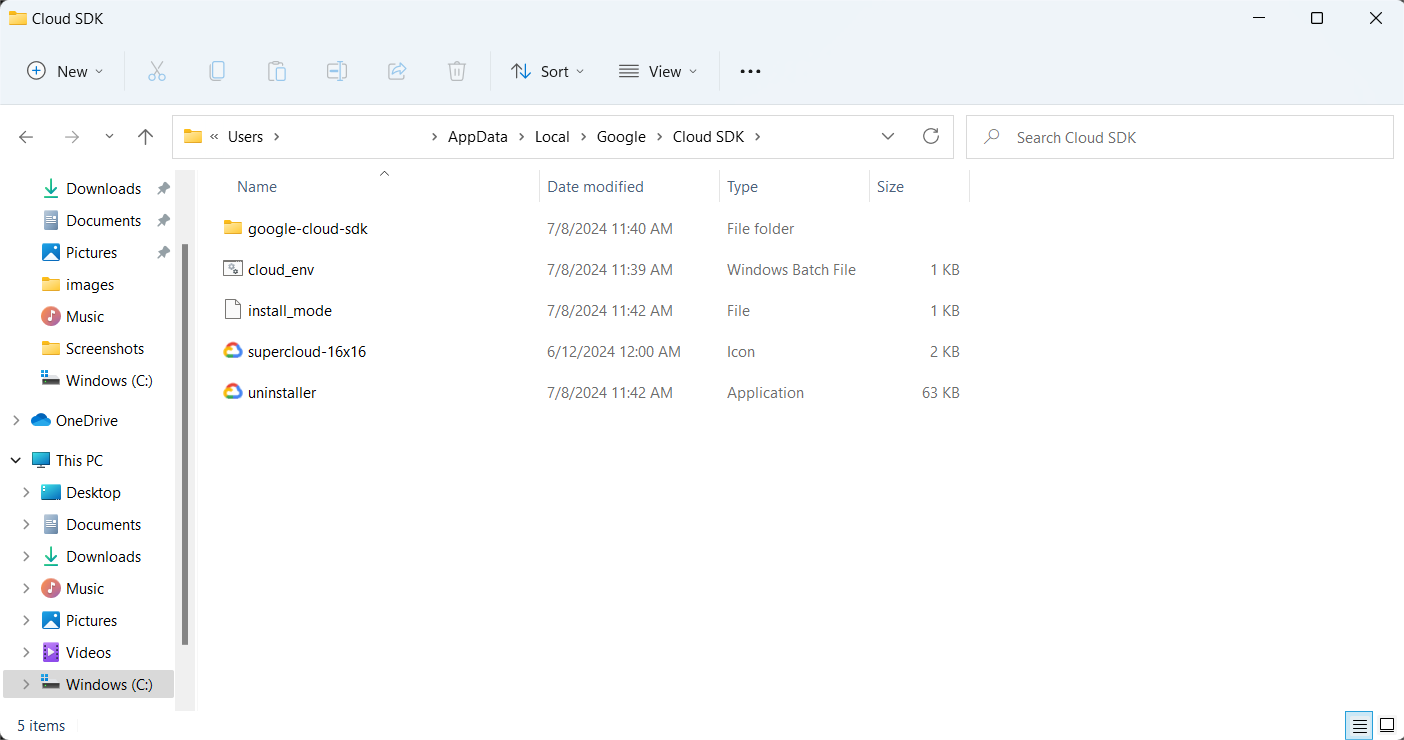

First, go to your cloud SDK directory and add the CSV file that you want to upload. We have uploaded a picture of our directory for reference. Now, to load the file, go back to your command line and run the command we have provided below. The output of this command will be “Upload Complete”.

bq load --source_format=CSV example_cli.bank demo.csv ID:integer,NAME:string,AGE:integer

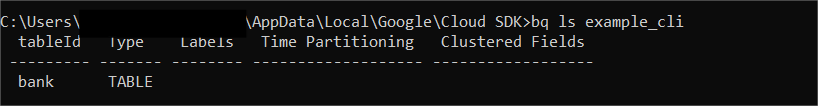
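The inline schema passed to bq load is a comma-separated list of name:type pairs written without spaces, which is easy to mistype by hand. As a minimal sketch, the helpers below assemble the same command from Python. The names build_inline_schema and build_bq_load_args are hypothetical and are not part of the bq tool; the table, file, and column names are the ones used in this step.

```python
from typing import List, Sequence, Tuple


def build_inline_schema(columns: Sequence[Tuple[str, str]]) -> str:
    """Join (name, type) pairs into the name:type,name:type form used by bq load."""
    parts = []
    for name, col_type in columns:
        name, col_type = name.strip(), col_type.strip()
        if not name or not col_type:
            raise ValueError("column name and type must both be non-empty")
        parts.append(f"{name}:{col_type}")
    return ",".join(parts)


def build_bq_load_args(
    table: str, csv_path: str, columns: Sequence[Tuple[str, str]]
) -> List[str]:
    """Return the argument list for the bq load command shown in this step."""
    return [
        "bq", "load", "--source_format=CSV",
        table, csv_path, build_inline_schema(columns),
    ]


# Hypothetical usage with the example dataset, table, and file from this guide.
args = build_bq_load_args(
    "example_cli.bank",
    "demo.csv",
    [("ID", "integer"), ("NAME", "string"), ("AGE", "integer")],
)
print(" ".join(args))
```

If the Cloud SDK is installed and authenticated, the resulting list could be handed to subprocess.run(args, check=True) to run the load from a script.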

Step 4: Preview of the data

First, check whether the table has been created. You can use the following command:

bq ls example_cli

If you want to see the schema of the table, you can do so by using the command below. To preview your table, go back to BigQuery, go to tables, and click on preview.

bq show <dataset_name>.<table_name>

Method 3: Using BigQuery Web UI

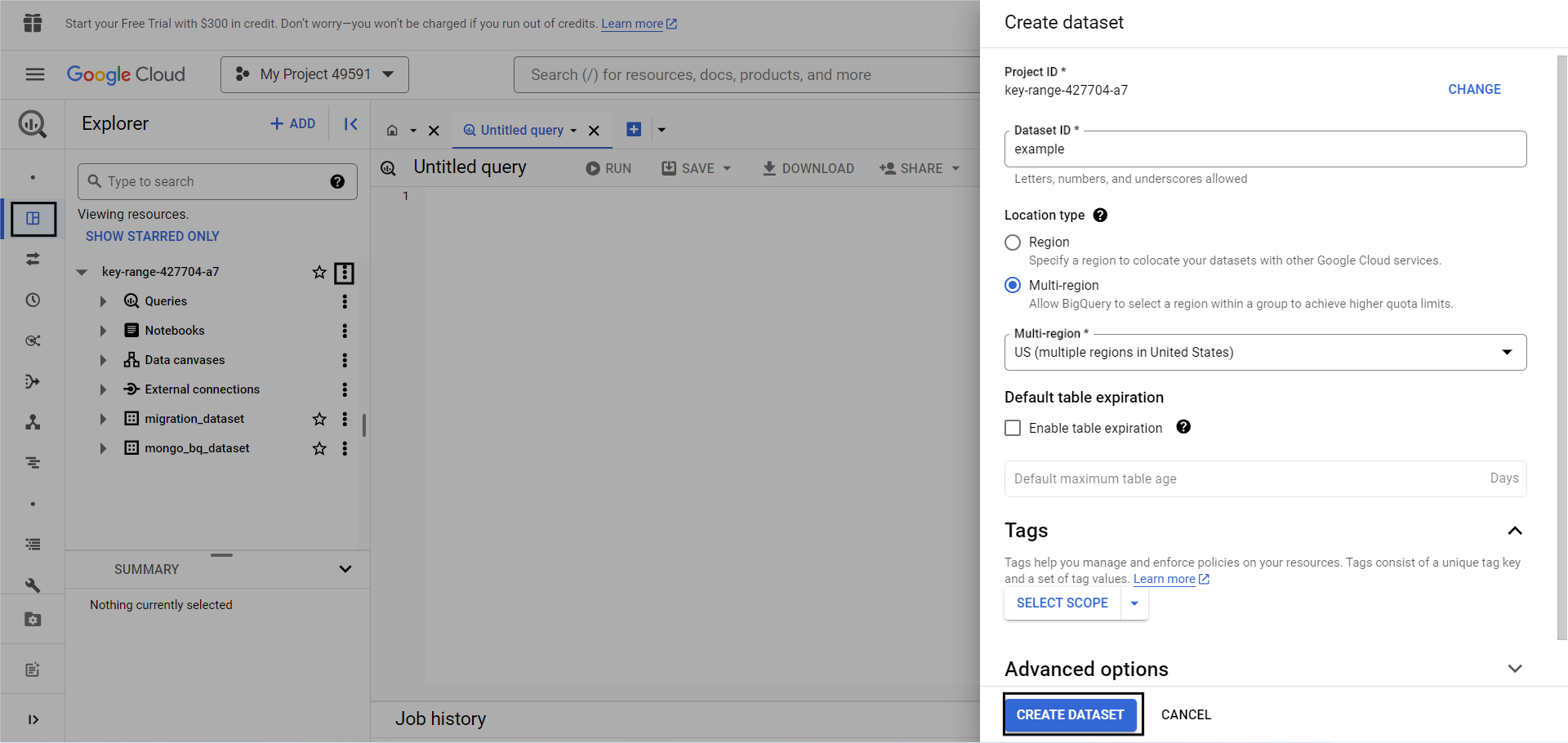

Step 1: Create a new Dataset

To create a new dataset in BigQuery, go to your BigQuery studio, click on the three dots beside your project ID, and click Create Dataset.

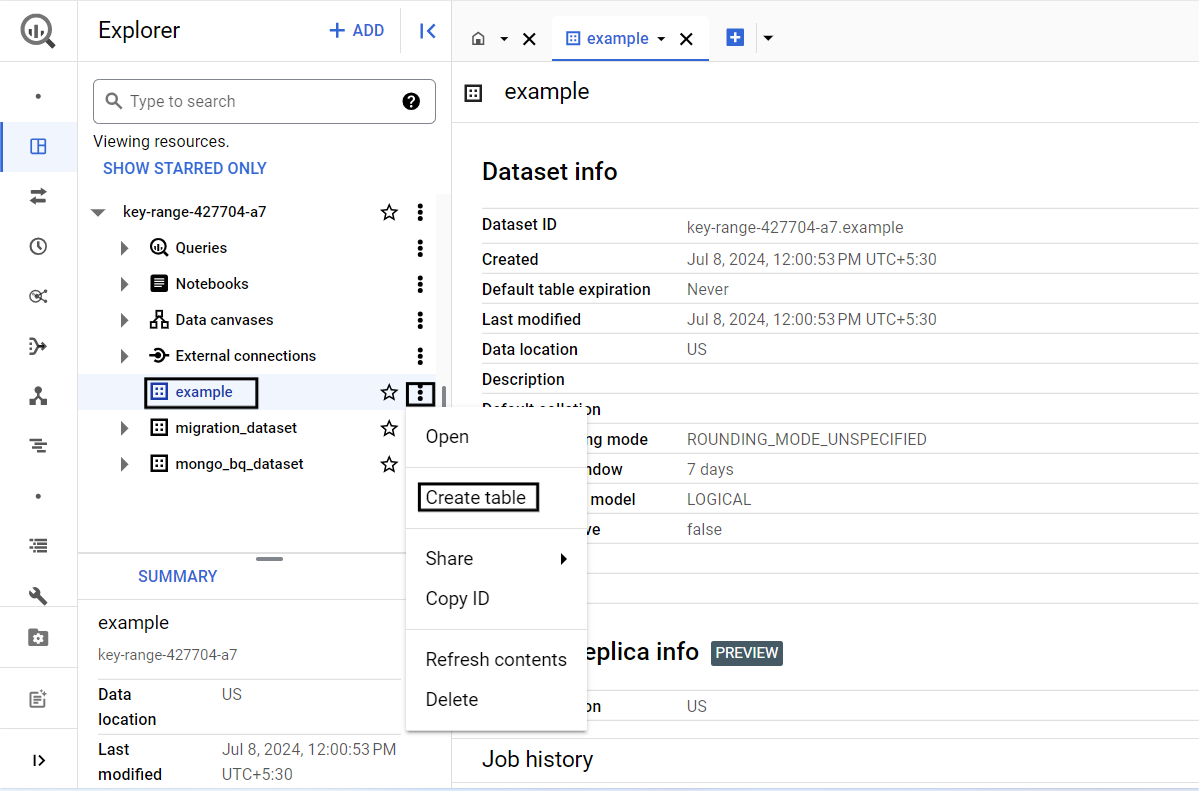

Step 2: Create a Table

To create a new table, click on the three dots next to your dataset name and click on Create Table.

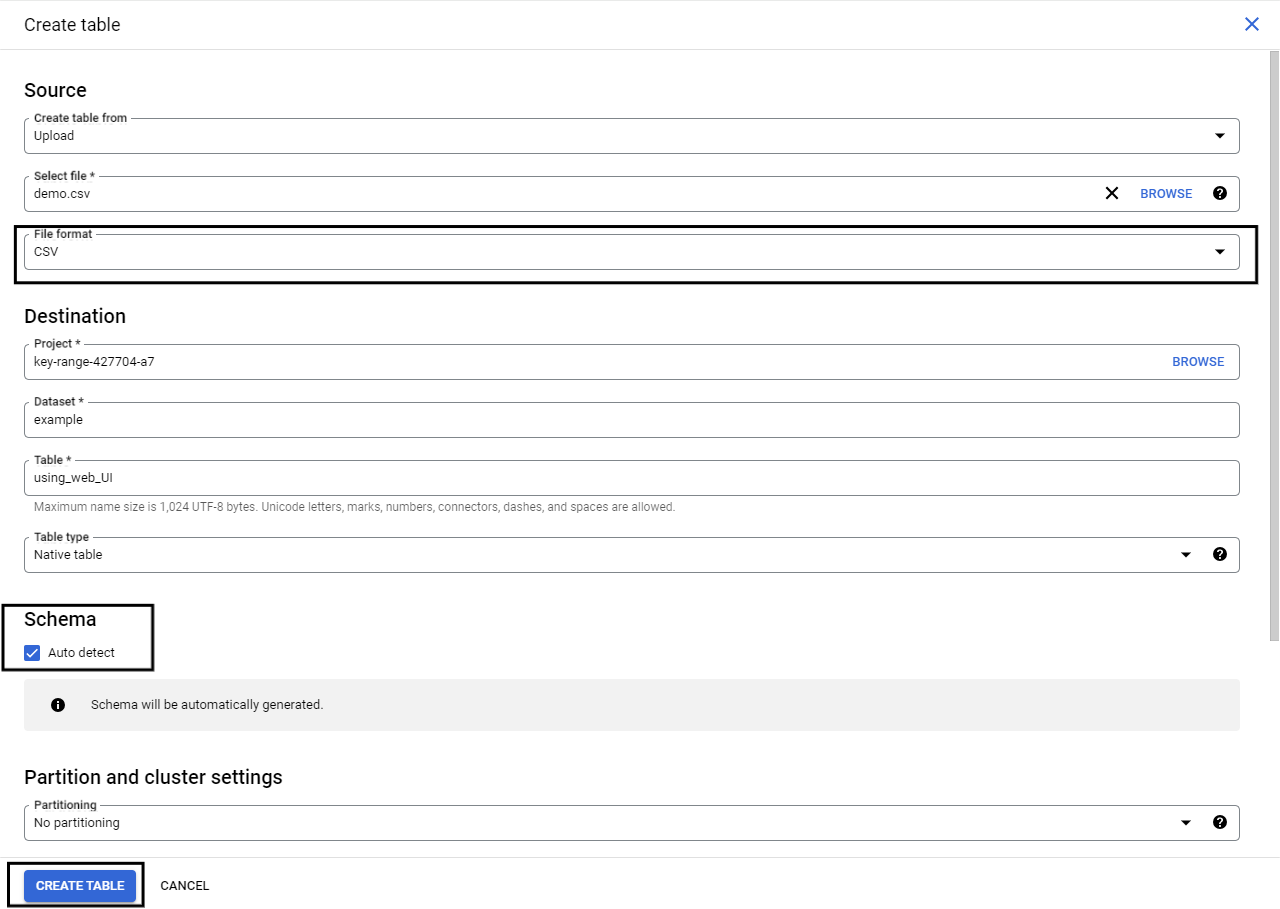

Note:

- Keep the file format as CSV.

- Turn on auto-detect. This will automatically detect the incoming table schema and generate a schema accordingly.

- Change “Create table from” to “Upload”, then upload the file that you want to load into BigQuery.
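To get a feel for what schema auto-detection involves, here is a rough local illustration that guesses column types from a CSV sample. It is only a sketch: BigQuery's own auto-detect runs server-side and recognizes more types (dates, timestamps, booleans) than this. The function names infer_type and infer_schema and the sample data are assumptions made for the example.

```python
import csv
import io


def infer_type(values):
    """Pick the narrowest of INTEGER, FLOAT, STRING that fits every non-empty value."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "STRING"
    for type_name, parser in (("INTEGER", int), ("FLOAT", float)):
        try:
            for v in non_empty:
                parser(v)
            return type_name
        except ValueError:
            continue
    return "STRING"


def infer_schema(csv_text, sample_rows=100):
    """Infer (column, type) pairs from the header row and the first sample_rows rows."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    rows = [row for _, row in zip(range(sample_rows), reader)]
    # Transpose rows into columns; with no data rows every column is empty.
    columns = list(zip(*rows)) if rows else [() for _ in header]
    return [(name, infer_type(col)) for name, col in zip(header, columns)]


# Hypothetical sample data; the empty AGE value is treated as a missing value.
sample = "ID,NAME,AGE\n1,Alice,34\n2,Bob,\n"
print(infer_schema(sample))
```

Running a check like this on a file before uploading can help you anticipate which columns might need a manual schema edit afterwards.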

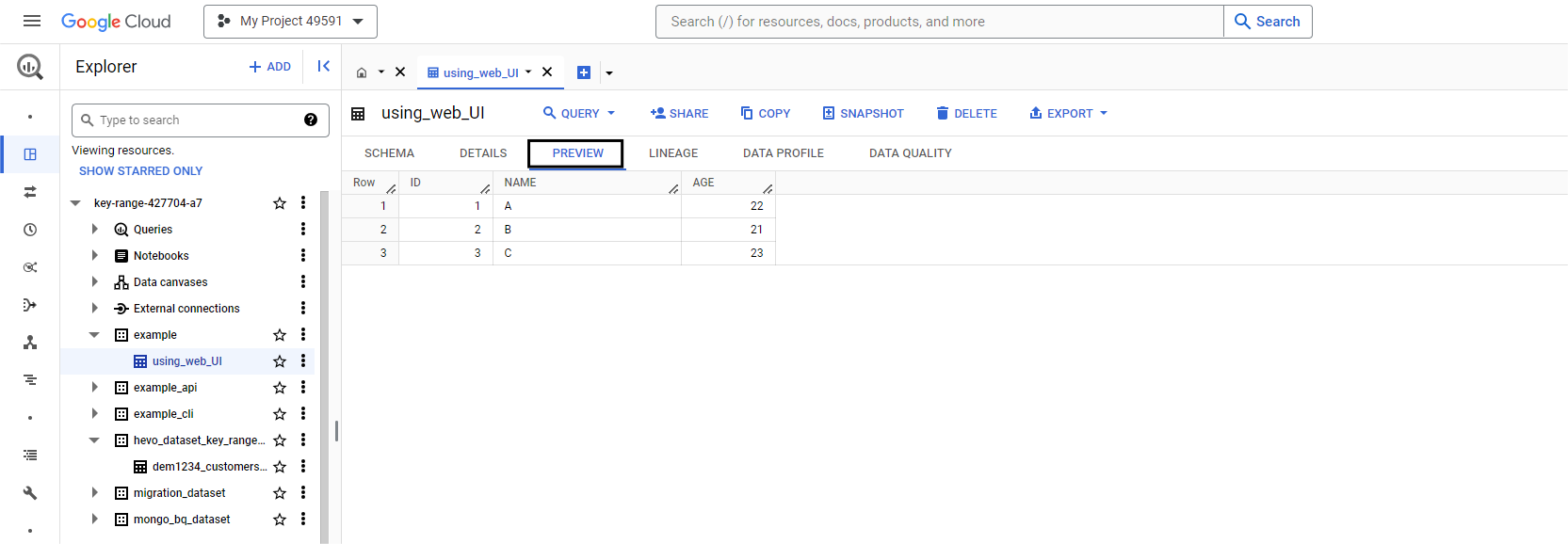

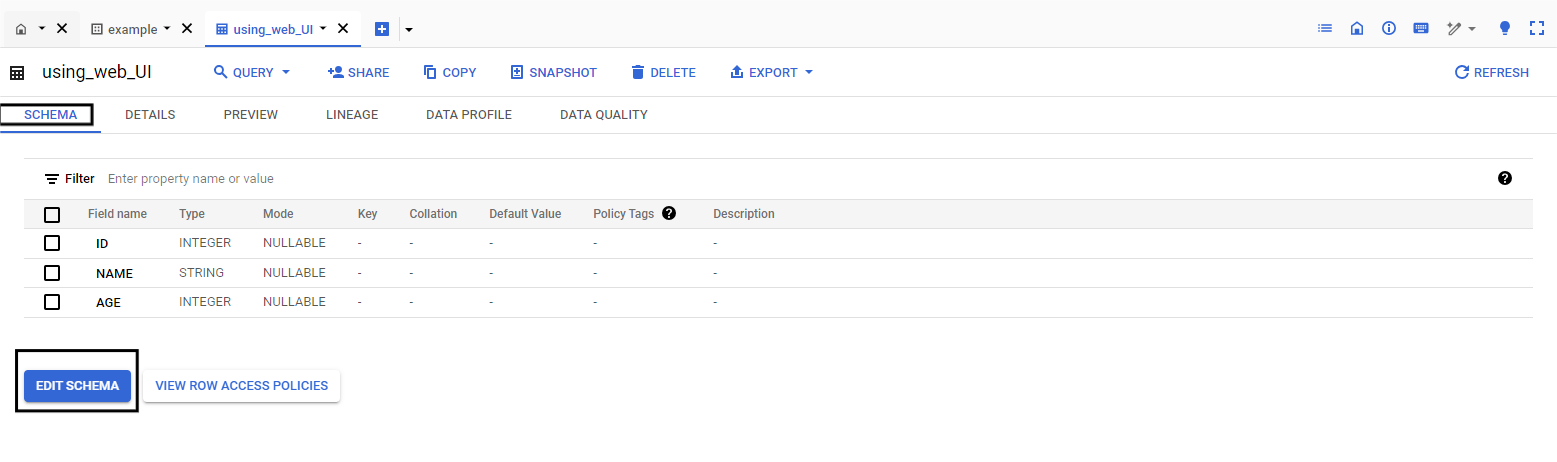

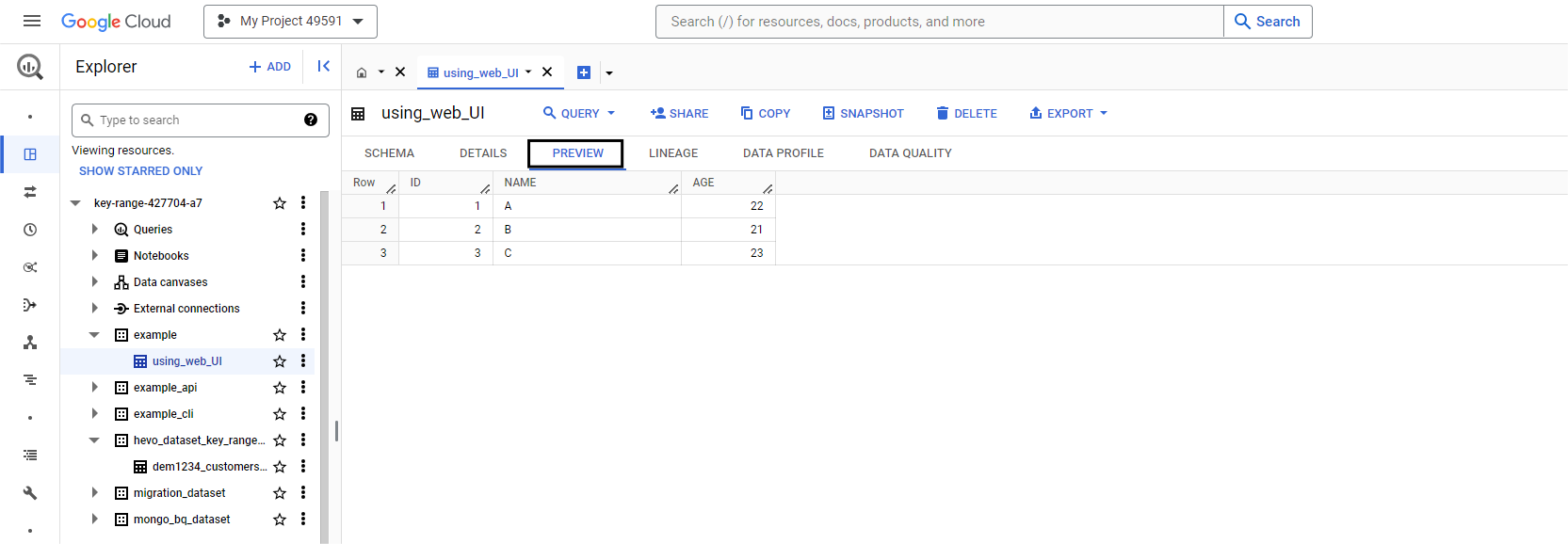

Step 3: Preview Table

- You can edit the schema you just created. To do so, click on the table name, open the Schema tab, and click Edit schema. Make your changes there and save them.

- To preview the table, click on the Preview tab beside the Details tab.

Method 4: Using Web API

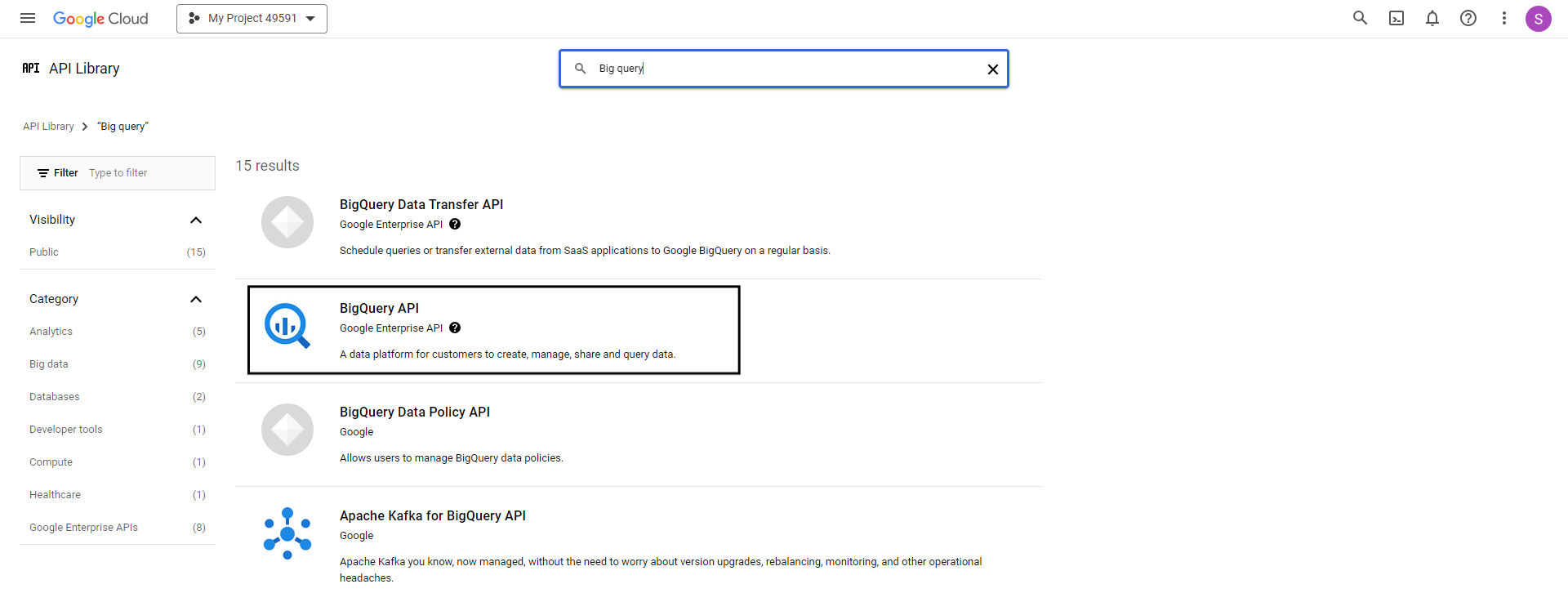

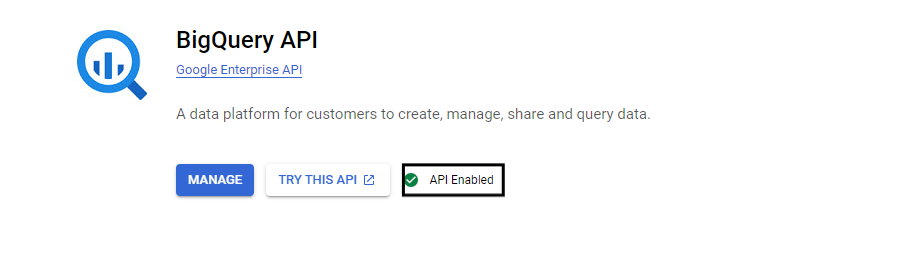

Step 1: Configure BigQuery API

Go to Google Cloud Console and look for APIs and services. Search for BigQuery and click on BigQuery API.

Note: Make sure that the service is enabled.

Step 2: Configuring the Python script

Open your code editor and type the given Python script.

from google.cloud import bigquery
import os

# Point the client library at your service account key file
os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = 'your-service-account.json'

client = bigquery.Client()
table_id = 'your-project.your_dataset.your_table'

job_config = bigquery.LoadJobConfig(
    source_format=bigquery.SourceFormat.CSV,
    skip_leading_rows=1,  # Skip the header row
    autodetect=True,  # Let BigQuery infer the schema
)

with open('demo.csv', 'rb') as source_file:
    job = client.load_table_from_file(source_file, table_id, job_config=job_config)

job.result()  # Waits for the job to complete

table = client.get_table(table_id)
print(f"Loaded {table.num_rows} rows and {len(table.schema)} columns to {table_id}")

Note:

- In GOOGLE_APPLICATION_CREDENTIALS, provide the path to your service account’s JSON key file.

- In table_id, provide the name of the new table that you want to create.
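If you would rather pin the column types than rely on auto-detect, the load configuration can carry an explicit schema. The sketch below assumes the three-column ID, NAME, AGE layout from the command-line example; make_job_config and load_csv are hypothetical helper names, and the google-cloud-bigquery package must be installed for them to run.

```python
# Column layout assumed from the CLI example (ID, NAME, AGE); adjust to your file.
COLUMNS = [("ID", "INTEGER"), ("NAME", "STRING"), ("AGE", "INTEGER")]


def make_job_config(columns=COLUMNS):
    """Build a CSV load config with an explicit schema instead of autodetect."""
    # Imported lazily so this module can be read without the client library.
    from google.cloud import bigquery

    return bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.CSV,
        skip_leading_rows=1,  # skip the header row
        schema=[bigquery.SchemaField(name, col_type) for name, col_type in columns],
    )


def load_csv(csv_path, table_id):
    """Load csv_path into table_id and return the row count of the table."""
    from google.cloud import bigquery

    client = bigquery.Client()
    with open(csv_path, "rb") as source_file:
        job = client.load_table_from_file(
            source_file, table_id, job_config=make_job_config()
        )
    job.result()  # wait for the load job to finish
    return client.get_table(table_id).num_rows
```

A call such as load_csv('demo.csv', 'your-project.your_dataset.your_table') would then behave like the script above, except that a type mismatch in the file surfaces as a load error rather than a silently different schema.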

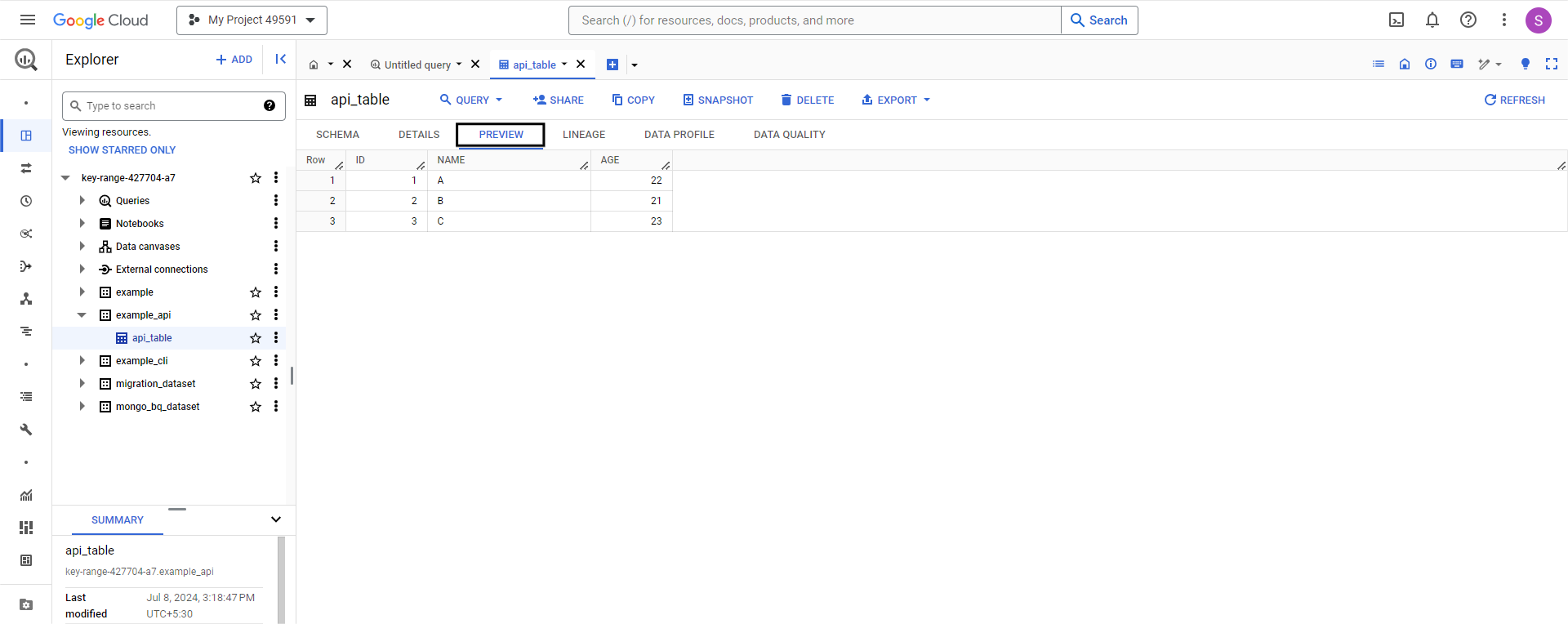

Step 3: Preview the table

You can now preview the table you created by following the steps mentioned in this blog.

Limitations of Moving Data from CSV to BigQuery

- Nested and repeated data are not supported in CSV files.

- BOM (byte order mark) characters should be removed. They can cause unexpected issues.

- BigQuery cannot read the data in parallel if you use gzip compression. Importing compressed CSV data into BigQuery takes longer than loading uncompressed data. See Loading compressed and uncompressed data for further information.

- You can’t use the same load job to load compressed and uncompressed files.

- A gzip file can be up to 4 GB in size.
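Two of these limitations can be checked locally before a load. The following is a minimal sketch, not an official tool: it detects and strips a leading UTF-8 BOM and compares a gzip file's size with the 4 GB limit. The function names are hypothetical, and reading "4 GB" as 4 * 1024**3 bytes is an assumption.

```python
import codecs
import os
import tempfile

# Assumption: the 4 GB gzip limit is interpreted as 4 * 1024**3 bytes.
MAX_GZIP_BYTES = 4 * 1024**3


def has_utf8_bom(path):
    """Return True if the file starts with the UTF-8 byte order mark."""
    with open(path, "rb") as f:
        return f.read(len(codecs.BOM_UTF8)) == codecs.BOM_UTF8


def strip_utf8_bom(src_path, dst_path, chunk_size=1024 * 1024):
    """Copy src_path to dst_path, dropping a leading UTF-8 BOM if present."""
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        head = src.read(len(codecs.BOM_UTF8))
        if head != codecs.BOM_UTF8:
            dst.write(head)  # no BOM: keep the first bytes as they are
        while True:
            chunk = src.read(chunk_size)
            if not chunk:
                break
            dst.write(chunk)


def gzip_within_limit(path):
    """Return True if the file size does not exceed the assumed gzip limit."""
    return os.path.getsize(path) <= MAX_GZIP_BYTES


# Small demonstration on a temporary file that starts with a BOM.
with tempfile.TemporaryDirectory() as tmp:
    raw = os.path.join(tmp, "demo.csv")
    clean = os.path.join(tmp, "demo_clean.csv")
    with open(raw, "wb") as f:
        f.write(codecs.BOM_UTF8 + b"ID,NAME\n1,Alice\n")
    bom_before = has_utf8_bom(raw)
    strip_utf8_bom(raw, clean)
    bom_after = has_utf8_bom(clean)
    small_enough = gzip_within_limit(clean)
    with open(clean, "rb") as f:
        cleaned_bytes = f.read()

print(bom_before, bom_after)
```

The copy is done in chunks so that the same helper works on files too large to hold in memory.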


Conclusion

Loading CSV data into BigQuery is a powerful way to enhance your data management and analytical capabilities. Whether dealing with large datasets, requiring real-time analytics, or leveraging advanced analytics tools like BigQuery ML, importing your CSV data into BigQuery can significantly streamline your workflows. This article provides a step-by-step guide for importing CSV data into BigQuery using four different methods.

The last three methods are manual and can become time-consuming. Also, writing custom scripts requires solid coding knowledge, which not everyone may have. To avoid the manual part and automate the entire process, you can always look up Hevo Data and sign up for a 14-day free trial.

FAQ

How do I append a CSV file to a BigQuery table?

You can append additional data to an existing table by performing a load-append operation.
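With the Python client, one way to express a load-append is to set the write disposition of the load job to WRITE_APPEND. This is a sketch under assumptions: append_config_kwargs and append_csv are hypothetical helper names, the string values mirror the client library's SourceFormat.CSV and WriteDisposition.WRITE_APPEND constants, and the google-cloud-bigquery package must be installed to run the load itself.

```python
def append_config_kwargs(has_header=True):
    """Keyword arguments for a LoadJobConfig that appends CSV rows to a table."""
    return {
        "source_format": "CSV",
        "skip_leading_rows": 1 if has_header else 0,
        # WRITE_APPEND adds rows to the existing table instead of replacing it.
        "write_disposition": "WRITE_APPEND",
    }


def append_csv(csv_path, table_id):
    """Append the rows of csv_path to table_id and return the rows loaded."""
    # Imported lazily so the helper above can be used without the client library.
    from google.cloud import bigquery

    client = bigquery.Client()
    job_config = bigquery.LoadJobConfig(**append_config_kwargs())
    with open(csv_path, "rb") as source_file:
        job = client.load_table_from_file(
            source_file, table_id, job_config=job_config
        )
    job.result()  # wait for the load job to finish
    return job.output_rows


print(append_config_kwargs())
```

The appended file should match the schema of the existing table, since no schema or auto-detect setting is passed here.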

What is the fastest way to load data into BigQuery?

Bulk Insert into BigQuery is the fastest way to load data.

How to connect data to BigQuery?

On your computer, open a spreadsheet in Google Sheets.

In the menu at the top, click Data > Data connectors > Connect to BigQuery.

Choose a project.

Click Connect.

How do I export CSV to storage in BigQuery?

Open the BigQuery page in the Google Cloud console. In the Explorer panel, expand your project and dataset, then select the table. In the details panel, click Export and select Export to Cloud Storage.

Share your thoughts on loading data from CSV to BigQuery in the comments!