Key Takeaways

Data mapping tools streamline transferring data between systems by visually defining and automating how fields in a source dataset correspond to those in a target dataset.

Top 5 Data Mapping Tools for 2026:

- Hevo Data: Best for automated schema mapping with 150+ connectors and a no-code interface.

- Pentaho: Ideal for embedded analytics and ETL with a drag-and-drop GUI.

- CloverDX: Great for developers needing API-driven mapping and hybrid workflows.

- Pimcore: Strong fit for e-commerce use cases with robust PHP-based data management.

- Informatica PowerCenter: Enterprise-grade, scalable solution with deep AWS integration.

How to Choose:

Pick a tool that aligns with your team’s technical expertise, integration needs, data volume, and whether you prefer cloud or on-premise deployment. Prioritize ease of use, automation, and support for your data sources.

Which data mapping tool fits your stack?

This can be tricky if you don’t evaluate the available options.

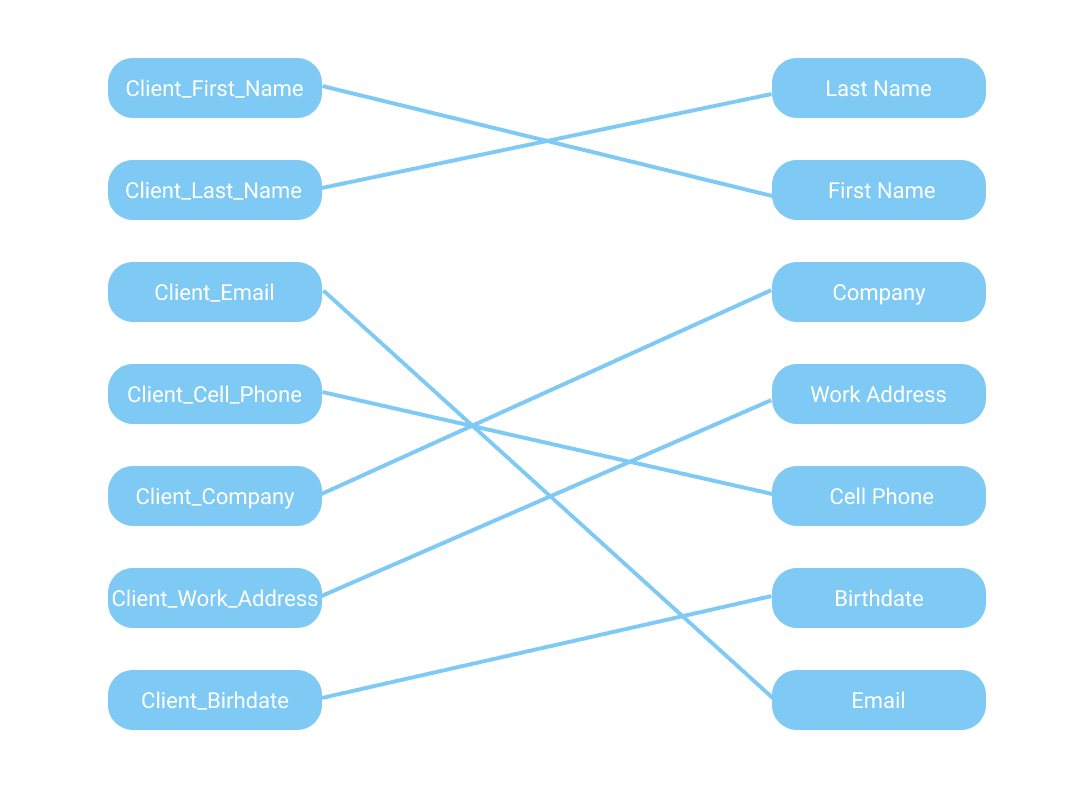

Data teams receive datasets from multiple sources, and each source often stores data points differently from the destination. Data mapping tools bridge this gap by connecting source fields to their standardized counterparts in the destination, so the data can be analyzed effectively.

This blog features a comprehensive list of 14 top data mapping tools to help you evaluate and choose the right fit for your needs.

- #1: Best for automated schema mapping and real-time data replication

- #2: Best for embedded analytics within applications

- #3: Best for extensible e-commerce frameworks

- 25 tools considered

- 18 tools reviewed

- 14 best tools chosen


How I Chose the Best Data Mapping Tools

An ideal data mapping software fulfills data integration, enterprise data transformation, and data warehousing needs. So, before choosing the tool, I clearly defined my expectations and business objectives. This way, I could make an informed decision based on my unique data modelling requirements and essential features.

Here’s what I considered before finalizing the best Data Mapping solution:

- Graphical Drag-and-Drop, Code-Free User Interface: A code-free, intuitive interface with drag-and-drop functionality makes data mapping accessible, especially for non-technical users.

- Integration and Support for Diverse Systems: The tool should seamlessly connect with various data sources, including structured, semi-structured, and unstructured data like databases, REST APIs, XML, JSON, EDI, and more.

- Schema Support: Flexible schema support is vital for efficiently transforming data into usable formats tailored to project needs.

- Data Transformation Capabilities: The tool must handle complex data transformations to meet diverse business requirements.

- Ability to Schedule & Automate Database Mapping Jobs: Automation and scheduling features, such as time-based mapping functions or event-triggered workflows, enhance efficiency.

- Error Handling: Robust error handling capabilities to detect, report, and even correct data mismatches ensure accuracy and reliability in the mapped data.

I made sure every shortlisted tool offered the features above, since they form the foundation of a streamlined, efficient, error-free data mapping process. That left me with the tools that met my technical criteria and aligned with my operational goals.

Comparison of the Data Mapping Tools for 2025

| Criteria | Hevo Data | Pentaho | Informatica | Talend |
| --- | --- | --- | --- | --- |
| Reviews | 4.5 (250+ reviews) | 4.3 (10+ reviews) | 4.4 (80+ reviews) | 4.3 (100+ reviews) |
| Pricing | Usage-based pricing | Open-source + usage-based pricing | Consumption-based pricing | Capacity-based pricing |
| Free Plan | | | | |
| Free Trial | 14-day free trial | | 30-day free trial | |
| UI/UX | No-code, drag-and-drop; great for non-technical users | Visual editor available; setup slightly technical | Powerful but complex UI; suited for advanced users | Intuitive GUI; easy to start, deeper features need learning |
| Automation | Auto schema mapping, real-time sync, error handling | Supports automation; limited in community edition | Full-featured automation with scheduling & error handling | Automation via Spark jobs and built-in orchestration |
| Deployment | Fully cloud-based | Cloud, on-premise, or hybrid | Cloud-first, hybrid supported | Cloud-first; on-prem supported via Remote Engine |
| Integration Support | 150+ connectors across SaaS, DBs, files, APIs | Wide support; more setup needed for some sources | Rich connectors, strong AWS integrations | Broad support, Spark-native for batch processing |
| Best For | Real-time, no-code integration for fast-moving teams | Open-source BI + ETL for analytics-heavy workloads | Enterprise-grade needs, governance, and scale | Large-scale ETL with transformation and profiling |

1. Hevo Data

Rating: 4.3/5 (G2)

Hevo is a real-time, no-code ELT data pipeline platform that automates data pipelines cost-effectively and adapts to your requirements. It integrates with 150+ data sources (40+ of them free) and includes automated schema mapping among its features.

- Automated schema mapper feature: This allows you to automate creating and managing all the mappings, and also highlights any incompatible incoming Events as failed for your review. You can also manually map an incoming event type to a destination table, which can be mapped to a different Destination table later.

- Apart from this, any fields containing sensitive data can be skipped. You can also flatten nested objects into a de-normalized Destination table if you want to improve the query performance and avoid unwanted complexities in the schema.

- Data transformation: You can modify and enrich your in-flight data using drag and drop or the Python console.

- Incremental data load: Only data that has been modified is transferred, in real time, which makes efficient use of bandwidth on both the source and the destination.
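As a rough illustration of what flattening nested objects and skipping sensitive fields involve, here is a minimal Python sketch. It is a generic example and not Hevo's implementation; the `flatten` helper, the `SENSITIVE` set, and the sample event are all hypothetical.

```python
from typing import Any


def flatten(record: dict[str, Any], parent: str = "", sep: str = "_") -> dict[str, Any]:
    """Flatten nested dicts into one level, joining key names with `sep`."""
    flat: dict[str, Any] = {}
    for key, value in record.items():
        name = f"{parent}{sep}{key}" if parent else key
        if isinstance(value, dict):
            # Recurse into nested objects and merge their flattened fields.
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


# Hypothetical incoming event with a nested customer object.
event = {
    "id": 7,
    "customer": {"name": "Ada", "email": "ada@example.com", "address": {"city": "Pune"}},
}

# Hypothetical list of flattened field names that must not reach the destination.
SENSITIVE = {"customer_email"}

row = {k: v for k, v in flatten(event).items() if k not in SENSITIVE}
print(row)  # {'id': 7, 'customer_name': 'Ada', 'customer_address_city': 'Pune'}
```

The flattened column names are built from the path to each value, so two nested objects can share a leaf name without colliding.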


Pros:

- Supports multiple workspace creation with the same domain name.

- Built-in smart assistant to provide complete control and enhanced visibility.

- Offers “within-the-product” customer support in the form of live chat.

Cons:

- Limited customization for users relying on custom script-based data transformations.

- Not ideal for organizations requiring on-premise and hybrid deployments.

Pricing

Hevo has a transparent pricing model tailored to specific business sizes:

- Free: Up to 1 million events.

- Starter: $239/month for up to 5 million events.

- Professional: $679/month for up to 20 million events.

- Business Critical: Custom pricing.

Hevo simplifies data mapping with its no-code platform, offering automated schema mapping and seamless integration with 150+ data sources. Whether it’s real-time transformation or managing complex workflows, Hevo empowers your business with effortless data management for analytics and beyond.

2. Pentaho Data Integration

Rating: 4.3/5 (G2)

Pentaho Data Integration (PDI) is an open-source data integration tool by Hitachi Data Systems. It offers ETL solutions to enterprises that need automatic Data Mapping and loading of data from source to destination.

It provides solutions for data mining, data warehousing, and Data Analysis, along with OLAP services, reporting, and information dashboards. Pentaho Data Integration is codenamed Kettle.

Key Features

- The tool offers a No-code GUI for mapping data from source to destination, which saves time.

- It supports deployment on single-node computers and on a cloud or cluster.

- Efficient transformation engine: It has flexible, high-performance abilities that help to visualize, combine, and connect to data wherever required.

- Metadata injection: This feature allows you to use the same transformation logic in multiple scenarios for better efficiency and consistency of data mapping.

Pros of Pentaho Data Integration

- It offers an interactive, user-friendly, No-code GUI.

- It provides analytics and task results to provide good insight into the business.

Cons of Pentaho Data Integration

- The community edition doesn’t have a scheduler and Job Manager, which makes some tasks manual.

- Documentation for PDI is not very helpful, so the implementation becomes hard.

3. CloverETL (CloverDX)

Rating: 4.3/5 (G2)

CloverETL is an open-source Data Mapping and Data Integration tool that is built in Java. It can be used to transform, map, and manipulate data. It provides flexibility to users to use it as a standalone application, a command-line tool, a server application, or be embedded in other applications.

CloverETL allows companies to efficiently create, test, deploy, and automate the data loading process from source to destination.

Key Features of CloverETL

- It provides visual as well as coding interfaces for developers to map and transform data.

- It provides reusable templates for streamlining complex pipelines.

- The repeatable workflows allow you to save time in building multiple pipelines.

- It has the capability to help you conduct reverse ETL jobs.

Pros of CloverETL

- It offers good speed in data transformation.

- It supports data parallelism, and its data services can be used to create web services.

Cons of CloverETL

- Lack of proper documentation for setup and implementation.

- A smaller number of files and formats are supported.

4. Pimcore

Rating: 4.4/5 (G2)

Pimcore is an open-source Data Management software platform that is entirely developed in PHP. It is an enterprise-level Data Mapping tool for content management, customer management, digital commerce, etc. It ensures the availability of up-to-date data to all the team members of a company.

The Key Features of Pimcore

- It offers easy data import from formats such as CSV, XLSX, JSON, and XML, and lets you map data without writing any code.

- Users can import data at regular intervals. It also integrates with other product-based websites like E-Commerce platforms, Social Media websites, etc.

- It offers a standard API, a full-featured REST Webservice API, and a Data Hub GraphQL API for two-way, real-time connections to other systems.

Pros of Pimcore

- It can easily integrate with other platforms using web services.

- It offers an enterprise-grade solution for free.

Cons of Pimcore

- Not easy to use for non-technical users.

- The asset portal extension of the DAM module is not compatible with mobile devices.

5. Informatica PowerCenter

Rating: 4.4/5 (G2)

Informatica PowerCenter provides a highly scalable Data Integration solution with powerful performance and flexibility. By using its proprietary transformation language, users can build custom transformations.

By using its pre-built data connectors for most AWS offerings, like S3/DynamoDB/Redshift, etc., users can configure a versatile Data Integration solution for AWS.

Informatica PowerCenter adheres to many compliance and security certifications, such as SOC, HIPAA, and Privacy Shield.

Key Features of Informatica PowerCenter

- Data mappings are regularly updated and managed during data integration.

- It has a monitoring console that helps to quickly identify and rectify any problems during data mapping and integration.

- It provides further flexibility by allowing you to write custom code in Java or JavaScript.

Pros of Informatica PowerCenter

- Informatica is a good fit if you have multiple data sources on AWS and handle confidential data. It provides a centralized repository that stores all the data related to sources and targets (for example, databases, flat files, streaming data, and network data).

Cons of Informatica PowerCenter

- Cost of initial licensing and heavy running costs.

- If you wish to use a Cloud Data Warehouse destination, it only supports Amazon Redshift.

- Microsoft Azure SQL Data Lake is the only Data Lake destination it supports.

6. IBM InfoSphere

Rating: 4.1/5 (G2)

IBM InfoSphere is a part of the IBM Information Platforms Solutions suite and a Data Integration platform that helps enterprises monitor, cleanse, and transform data. It is highly scalable and flexible when it comes to handling massive volumes of data in real-time.

Key Features of IBM InfoSphere

- It delivers high performance in Data Mapping and loading using its Massively Parallel Processing (MPP) capabilities.

- It provides authoritative views of information with proof of lineage and quality for better visibility and data governance.

- The in-built connectors are capable of managing all phases of an effective data integration project.

Pros of IBM InfoSphere

- It is a versatile and scalable platform to handle massive volumes of data.

- It can easily integrate with other IBM Data Management solutions and adds more flexibility to the features.

Cons of IBM InfoSphere

- IBM InfoSphere is not easy to use or quick to adopt.

- It is more expensive than many other Data Mapping tools available.

7. Microsoft SQL Server Integration Services

Microsoft SQL Server Integration Services (SSIS) is a component of Microsoft SQL Server and a Data Integration and Data Migration tool. It is used for automating the maintenance of SQL Server databases and updates to multidimensional cube data. Much of the workflow in SSIS involves coding, and packages are designed in a Visual Studio-based workspace.

Key Features of Microsoft SQL Server Integration Services

- Microsoft SQL Server Integration Services can perform complex jobs seamlessly and is empowered with a rich set of built-in tasks and transformation tools for constructing packages.

- It provides graphical tools for building packages.

- The Catalog database helps you store, run, and manage packages.

Pros of Microsoft SQL Server Integration Services

- It comes with excellent support via Microsoft.

- It offers a GUI that helps users easily visualize the data flow.

Cons of Microsoft SQL Server Integration Services

- It requires skilled developers to operate because much of the work relies on coding.

- It is not efficient at handling JSON and offers limited Excel connectivity.

8. WebMethods

Rating: 4.3/5 (G2)

WebMethods Integration Server is a Java-based Integration server for enterprises. It supports many services such as Data Mapping and communication between systems.

WebMethods Integration Server can run Data Mapping tasks in on-premise, hybrid, and cloud deployments. It also supports Java, C, and C++, which gives users more flexibility. It is best suited to Data Mapping for B2B solutions.

Key Features of WebMethods

- It uses a “Lift & shift” cloud adoption strategy that gets your webMethods integrations to the cloud with no extra hardware costs or installations.

- It provides a library of transformation services to bind your data formats together.

Pros of WebMethods

- It supports Document tracking.

- It is easy to use, scalable, and includes most of the enterprise tools (all in one).

Cons of WebMethods

- Expensive for small and mid-sized companies.

- Lack of documentation on legacy systems.

9. Oracle Integration Cloud Service

Rating: 4.1/5 (G2)

Oracle Integration Cloud Service (ICS) is an integration application that can perform Source to Target Mapping between many Cloud-Based applications and data sources.

It can also go beyond that to include some on-premises data. It also provides 50+ native app adapters for integrating on-premises and other application data.

Key Features of Integration Cloud Service

- It provides run-ready templates to unify workflows and permissions across ERP, HCM, and CX applications.

- It uses Oracle GoldenGate for real-time data integration, replication, and stream analytics.

- It has a well-streamlined API with full lifecycle management that allows you to design, create, promote, and secure internal or external APIs.

Pros of Oracle Integration Cloud Service

- Both SaaS Extension and Integration coalesce under one product.

- Seamlessly integrates with other Oracle offerings like Oracle Sales Cloud/API Platform Cloud Service/SPMS, etc.

Cons of Oracle Integration Cloud Service

- It could be overkill for your purpose, as it also includes Process Automation and Visual Application Building capabilities.

- Its costs could be prohibitive as it’s priced according to the many features it provides.

10. Dell Boomi AtomSphere

Rating: 4.4/5 (G2)

Dell Boomi is a Cloud-based Data Integration and Data Mapping tool that was formerly owned by Dell (Boomi has been an independent company since 2021). With the help of its visual designer, users can easily map data between two platforms and integrate them. Dell Boomi AtomSphere is suitable for companies of all sizes.

Key Features of Dell Boomi AtomSphere

- The tool provides a number of pre-built connectors that help you avoid writing code.

- It updates and deploys synchronized changes to your integration processes, which takes away the burden of managing the mapping manually.

- It incorporates crowdsourced contributions from the Boomi support team and user community for error handling within the UI.

Pros of Dell Boomi AtomSphere

- It offers drag-and-drop features, which make the job easier for non-technical users.

Cons of Dell Boomi AtomSphere

- Lack of documentation.

- The point-and-click interface cannot handle complex scenarios.

11. Talend Cloud Integration

Rating: 4.3/5 (G2)

The open-source version of Talend Open Studio was retired on 31st January 2024. Talend Cloud Integration is an ETL solution that comes with a Data Mapping tool.

Talend Data Mapper allows users to define mapping fields and execute the transformation of data between records of two different platforms. Talend Cloud Integration offers a graphical user interface that makes the tool user-friendly and helps save time.

Key Features of Talend Cloud Integration

- Its data profiling feature helps you identify any issues in data quality and makes sure that the data mapping is consistent and accurate.

- It uses Spark batch processing for data replication, which enables efficient and reliable data mapping even for large datasets.

Pros of Talend Cloud Integration

- It offers a drag-and-drop feature in the tool palette, which makes the job easier.

Cons of Talend Cloud Integration

- It has fewer integrations with other modules.

12. Jitterbit

Rating: 4.6/5 (G2)

Jitterbit is a Data Integration and Data Mapping tool that allows enterprises to establish API connections between apps and services. It can automate the Data Mapping process in SaaS applications and on-premise systems. Jitterbit’s Automapper helps you map similar fields and makes the transformation a lot easier.

Features of Jitterbit

- With the help of its AI features, users can control the interface using speech recognition, real-time language translation, and a recommendation system.

- It has a Management Console that provides a centralized view for controlling and monitoring workflow integrations and processes.

Pros of Jitterbit

- Most of the configurations are point and click.

- It comes with an easy-to-use interface with great documentation.

Cons of Jitterbit

- Low-quality logging and debugging.

13. MuleSoft Anypoint Platform

Rating: 4.5/5 (G2)

MuleSoft Anypoint Platform is a unified iPaaS Data Mapping tool that helps enterprises map data from SaaS application sources to a destination. It uses MuleSoft’s own language, DataWeave, to create and execute Data Mapping tasks.

Key Features of MuleSoft Anypoint Platform

- It also supports a mobile version that allows users to manage and monitor the Data Mapping and Data Integration tasks remotely.

- The flexibility allows you to deploy this platform in any architecture or environment.

- It helps you manage, secure, and scale all APIs from a single place.

Pros of MuleSoft Anypoint Platform

- It comes with many exciting connectors that save time writing code for new Data Mapping.

- The tool is an IDE that is easy to navigate and makes development and testing easy.

Cons of MuleSoft Anypoint Platform

- It relies on MuleSoft’s own language, DataWeave, to create solutions, whereas many Data Mapping tools offer drag-and-drop features.

14. SnapLogic

Rating: 4.3/5 (G2)

SnapLogic is a Data Migration and Data Mapping tool that can automate most of the Data Mapping fields using its Workflow Builder and Artificial Intelligence. It auto-maps data between cloud applications and destinations to keep the streaming data in sync.

Users can track all the Data Migration and Data Mapping activities with the help of visualization and reporting tools, making it much easier to create digital reports.

Key Features of SnapLogic

- It provides a Cache Pipeline that helps you to set up archetypal references that can be accessed through expression language lookup.

- The tool provides Enhanced Account Encryption that allows you to store and use your own keys for encrypting account information in a Groundplex.

- By using Email Encryption, the administrators can add keys to encrypt users’ emails.

Pros of SnapLogic

- Data Mapping is easy to implement and provides flexibility to users.

- The interface is user-friendly and doesn’t require any developers.

Cons of SnapLogic

- It is not suitable for complex pipelines and field mappings.

- It is expensive for very large datasets.

Importance of Data Mapping in ETL Process

Having reviewed the top data mapping tools, you’re now better equipped to evaluate which one fits your needs. Since you are seriously considering a tool, it’s worth asking: What are the major use cases encouraging teams to adopt these tools?

Common use cases include:

- Dealing with schema mismatches across systems.

- Managing large-scale transitions and cloud migration.

- Operating in real-time environments.

Understanding what drives data mapping adoption helps you plan the investment strategically and select the right tool. Whether the goal is to break down information silos or enhance dashboards with consolidated KPIs, data mapping plays a central role.

Primarily, data mapping is the building block of a thriving ETL workflow. When data is collected from SaaS applications, databases, and other diverse sources, it is not in an analytics-ready format. Source-to-target mapping is what unifies the collected data and transforms it into a format suitable for operational and analytical processes.

This enables teams to define relationships between the source and target systems, streamlining the transformation logic.

Now, do these align with your existing workflow and organizational goals? Let’s evaluate by looking at the importance of data mapping more closely:

- Automated Data Mapping & Data Integration: To bring all your data together, the data models of the source and the target repository must match. In practice, they rarely do. Data mapping bridges the differences between the schemas of the source and target repositories, which allows you to consolidate vital data from diverse data points without any hassle.

- Data Mapping Assists in Data Migration: Inaccurate data mapping makes Data Migration prone to errors, which results in inconsistent, unreliable, and flawed datasets. By leveraging a code-free mapping solution, you can automate the process of Data Migration to receive reliable data for effective analysis.

- Automated Data Mapping and Transformation: As you know, Data Transformation breaks information silos and delivers actionable insights. These insights are gathered through enterprise information present in distinct locations and formats. To make the most of it, you can automate data mapping to define conversions and apply transformations for accurate data preparation before it is loaded into the database.

Data Mapping Challenges & How a Data Mapping Tool Helps

Despite all the benefits data mapping brings to businesses, it’s not without its own set of challenges.

1. Mapping data fields

Mapping data fields accurately is critical for achieving the intended results from your data migration process. This can be challenging if the source and destination fields have distinct names or formats (for example, text, integers, and dates).

Furthermore, manually mapping hundreds of different data fields might be time-consuming. Employees may become more prone to mistakes over time, resulting in discrepancies and confusion.

Automated data mapping tools address this issue by incorporating automated workflows into the process.
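To make the idea of automated field matching concrete, here is a minimal Python sketch that suggests a target field for each source field by comparing normalized names. It uses the standard-library `difflib.get_close_matches`; the `normalize` and `suggest_mapping` helpers, the 0.8 cutoff, and the field names are assumptions for illustration, and real tools use far richer signals than name similarity.

```python
import difflib
import re
from typing import Optional


def normalize(name: str) -> str:
    """Lowercase a field name and strip everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def suggest_mapping(
    source: list[str], target: list[str], cutoff: float = 0.8
) -> dict[str, Optional[str]]:
    """Suggest a target field per source field, or None when nothing is close."""
    normalized_targets = {normalize(t): t for t in target}
    mapping: dict[str, Optional[str]] = {}
    for field in source:
        match = difflib.get_close_matches(
            normalize(field), list(normalized_targets), n=1, cutoff=cutoff
        )
        mapping[field] = normalized_targets[match[0]] if match else None
    return mapping


suggestions = suggest_mapping(
    ["First Name", "e-mail", "DOB"],
    ["first_name", "email", "date_of_birth"],
)
print(suggestions)
# {'First Name': 'first_name', 'e-mail': 'email', 'DOB': None}
```

Fields that come back as `None` (here `DOB`) are the ones a person still has to map by hand, which is why most tools pair auto-mapping with a review step.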

2. Technical expertise

Another challenge is that data mapping often requires knowledge of SQL, Python, R, or another programming language.

Sales and marketing professionals employ a variety of data sources, which must be mapped to uncover meaningful insights. Unfortunately, only a small percentage of these employees can use programming languages.

In most circumstances, they must involve the technical team in the process. However, the IT staff is busy with other duties and may not be able to react to the request right away. Eventually, a simple link between two data sources may take a long time or perhaps become an endless chain of jobs in the developers’ backlog.

3. Data cleansing and harmonization

Raw data is in no way suitable for data integration. First and foremost, data specialists must clean the basic dataset of duplicates, empty fields, and other useless data.

If done manually, this is a time-consuming and repetitive task. According to a survey reported by Forbes, data scientists devote about 80% of their time to collecting, cleansing, and organizing data.

This is an unavoidable task. Data integration and migration techniques based on unnormalized data will lead you nowhere.
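The cleansing step described above can be sketched in a few lines of Python. This is a minimal example under stated assumptions: rows are plain dictionaries, one field (here a hypothetical `email` key) identifies a record, and the first occurrence of a duplicate wins.

```python
def clean(rows: list[dict], key: str) -> list[dict]:
    """Drop rows whose key field is empty and keep the first row per key value."""
    seen: set[str] = set()
    cleaned: list[dict] = []
    for row in rows:
        value = row.get(key)
        if value is None or str(value).strip() == "":
            continue  # empty key field: the row cannot be matched downstream
        canonical = str(value).strip().lower()
        if canonical in seen:
            continue  # duplicate of a row that was already kept
        seen.add(canonical)
        cleaned.append({**row, key: canonical})
    return cleaned


raw = [
    {"email": "A@x.com", "plan": "free"},
    {"email": "a@x.com ", "plan": "pro"},  # duplicate after normalization
    {"email": "", "plan": "free"},         # empty field
    {"email": "b@x.com", "plan": "pro"},
]
print(clean(raw, "email"))
# [{'email': 'a@x.com', 'plan': 'free'}, {'email': 'b@x.com', 'plan': 'pro'}]
```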

4. Handling errors

Errors during data mapping can have serious consequences for data accuracy and integrity in the long run.

Any data mapping method is subject to error. It could be a typo, a mismatch between data fields, or a change in data format.

To avoid data-related disasters, it is critical to have an error-handling strategy in place.

One solution is to implement an automated quality assurance system that monitors data mapping operations and flags problems as they arise. Even then, analysts may still have to correct some of the flagged errors manually.
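One common error-handling pattern is to set failing rows aside for review instead of aborting the whole run. The Python sketch below shows that pattern under stated assumptions: the `MAPPING` spec, its field names, and the `map_rows` helper are hypothetical, and each target field is produced by a converter that raises on bad input.

```python
from datetime import date
from typing import Any, Callable

# Hypothetical mapping spec: target field -> (source field, converter).
MAPPING: dict[str, tuple[str, Callable[[Any], Any]]] = {
    "customer_id": ("id", int),
    "signup_date": ("signed_up", date.fromisoformat),
}


def map_rows(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Apply MAPPING to every row; return (mapped rows, failure reports)."""
    mapped, failed = [], []
    for index, row in enumerate(rows):
        try:
            mapped.append(
                {tgt: convert(row[src]) for tgt, (src, convert) in MAPPING.items()}
            )
        except (KeyError, ValueError, TypeError) as exc:
            # Record which row failed and why, then keep processing the rest.
            failed.append({"row": index, "error": f"{type(exc).__name__}: {exc}"})
    return mapped, failed


rows = [
    {"id": "1", "signed_up": "2024-01-05"},
    {"id": "x", "signed_up": "2024-01-05"},  # id is not an integer
    {"id": "3"},                             # source field is missing
]
mapped, failed = map_rows(rows)
print(mapped)
print(failed)
```

Keeping the row index and the exception text in each failure report gives analysts enough context to fix the source data or adjust the mapping.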

What is Data Mapping?

Data mapping is the process of defining relationships between data fields in different models or systems. It ensures schema alignment during ETL, data integration, or migration, supporting data consistency and downstream transformations.
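In its simplest form, a mapping is a lookup from source field names to target field names. The following Python sketch illustrates this; the `FIELD_MAP` entries, the `apply_mapping` helper, and the sample record are hypothetical.

```python
# Hypothetical source-to-target field mapping for a CRM export.
FIELD_MAP = {
    "FirstName": "first_name",
    "LastName": "last_name",
    "Email_Address": "email",
}


def apply_mapping(record: dict, field_map: dict[str, str]) -> dict:
    """Rename mapped source fields to their target names; drop unmapped fields."""
    return {
        target: record[source]
        for source, target in field_map.items()
        if source in record
    }


source_record = {
    "FirstName": "Ada",
    "LastName": "Lovelace",
    "Email_Address": "ada@example.com",
    "InternalFlag": True,  # not in FIELD_MAP, so it is not carried over
}
print(apply_mapping(source_record, FIELD_MAP))
# {'first_name': 'Ada', 'last_name': 'Lovelace', 'email': 'ada@example.com'}
```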

Optimize Data With the Right Data Integration Software

This blog gives a fair idea of Data Mapping. It reviewed 14 Data Mapping Tools spanning open-source, cloud-based, and on-premise options, and then covered the importance of Data Mapping in the ETL process, the challenges involved, and the factors to consider before picking a suitable Data Mapping Tool. Leverage ETL data mapping to structure and standardize data, ensuring seamless migration and improved analytics.

Now you can learn more about Schema Mapping, Source to Target Mapping, and also know how Hevo automates the tedious task of manually mapping the source schema with the destination schema. Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand.


FAQ on Data Mapping Tools

1. What is data mapping software?

Data mapping software helps combine data from different sources into a single database, making it easier to organize and analyze.

2. Is SQL a data mapping tool?

No, SQL (Structured Query Language) is not a data mapping tool. Instead, SQL is a programming language for managing and querying relational databases.

3. What are examples of data mapping tools?

Tools used for mapping are IBM InfoSphere DataStage, Talend, Altova MapForce, Dell Boomi, CloverETL (CloverDX), Pentaho, Pimcore, Informatica, and other data integration tools.

4. What are mapping data types?

Mapping data types involves aligning or converting data types between different systems, databases, or applications during data integration or migration processes.
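As a small illustration, the Python sketch below translates column types from a MySQL-style schema to PostgreSQL-style types. The `TYPE_MAP` entries are one plausible set of choices rather than an official conversion table, and the `translate_schema` helper and column names are hypothetical.

```python
# Illustrative MySQL -> PostgreSQL type choices (an assumption, not a standard).
TYPE_MAP = {
    "INT": "INTEGER",
    "TINYINT(1)": "BOOLEAN",
    "DATETIME": "TIMESTAMP",
    "DOUBLE": "DOUBLE PRECISION",
    "LONGTEXT": "TEXT",
}


def translate_schema(columns: dict[str, str]) -> dict[str, str]:
    """Map each column's source type to a target type, failing on unknown types."""
    unknown = sorted({t for t in columns.values() if t.upper() not in TYPE_MAP})
    if unknown:
        raise ValueError(f"no target type defined for: {', '.join(unknown)}")
    return {name: TYPE_MAP[t.upper()] for name, t in columns.items()}


source_schema = {"id": "int", "is_active": "TINYINT(1)", "created_at": "datetime"}
print(translate_schema(source_schema))
# {'id': 'INTEGER', 'is_active': 'BOOLEAN', 'created_at': 'TIMESTAMP'}
```

Raising on an unknown type, rather than guessing, surfaces gaps in the type map before any data is loaded.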

5. What are the factors to consider when choosing data mapping software?

– User Interface: Easy-to-use, drag-and-drop design for non-technical users.

– Integration: Compatibility with various sources like databases and cloud apps.

– Schema Support: Flexible handling of different data formats.

– Data Transformation: Ability to manage complex data changes.

– Error Handling: Detects and resolves mismatched or inaccurate data.

6. Why is data mapping important in ETL and data integration workflows?

Data mapping ensures that data flows correctly between systems by aligning fields, formats, and structures. Without proper mapping, data may be lost, duplicated, or misinterpreted during ETL processes, leading to inaccurate reporting or analytics.

7. How do automated data mapping tools differ from manual mapping?

Automated tools detect and map fields using AI or pre-defined templates, reducing time and errors. Manual mapping requires human input, which offers more control but is slower and more error-prone—especially in large or complex datasets.

8. Can data mapping tools handle schema changes over time?

Many advanced tools support schema evolution, allowing them to adapt to changes in data structure (like added fields or renamed columns). Some also offer alerts or logs when mismatches are detected during sync or transformation.
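A basic form of mismatch detection is to compare the fields a pipeline expects with the fields that actually arrive. The Python sketch below shows this; the `diff_schema` helper and the field names are hypothetical, and it only reports added and missing fields (telling a rename apart from a drop plus an add needs extra logic).

```python
def diff_schema(expected: set[str], incoming: set[str]) -> dict[str, list[str]]:
    """Report fields that appeared in, or disappeared from, the incoming data."""
    return {
        "added": sorted(incoming - expected),
        "missing": sorted(expected - incoming),
    }


expected_fields = {"id", "email", "created_at"}
incoming_record = {"id": 1, "email_address": "ada@example.com", "created_at": "2024-01-05", "plan": "pro"}

drift = diff_schema(expected_fields, set(incoming_record))
print(drift)
# {'added': ['email_address', 'plan'], 'missing': ['email']}
```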