Imagine your organization is moving to a new, improved CRM system. In this scenario, you must make sure that all the important customer information in your current CRM makes the move without a hitch. Data migration is the digital counterpart of moving to a new home. Just as you’d want your belongings to stay safe, secure, and organized during a physical move, you want the same for your data during a migration.

This article explores data migration, why it matters, and the practices that make the process successful.


What is Data Migration?

Data migration is the movement of digital information from one place to another, such as between storage systems, applications, or file formats. Its goal is to transfer data efficiently and on time, with minimal interruption to business processes. Organizations migrate data for various reasons, including:

- Relocating data centers for cost efficiency or strategic purposes.

- Upgrading databases to enhance performance.

- Deploying new applications to meet evolving business needs.

- Transitioning to cloud-based storage for scalability and flexibility.

- Consolidating systems and websites to simplify operations.

- Replacing legacy software with modern solutions.

Data migration can take various forms, including:

- Transitioning data to modern software.

- Moving from one database or warehouse to another.

- Consolidating data from multiple sources to create a unified dataset.

- Upgrading a system while preserving existing data.

Key Objectives

- Compatibility: Aligning the source and target systems to prevent integration issues.

- Data Integrity: Ensuring the accuracy and consistency of data during the transfer.

- Data Accessibility: Guaranteeing that the migrated data is readily available in the target system.

Data Migration Process

- Validation: Checking data integrity and usability during and after the migration to ensure a seamless transition.

- Planning: Careful selection, preparation, and extraction of data to be migrated.

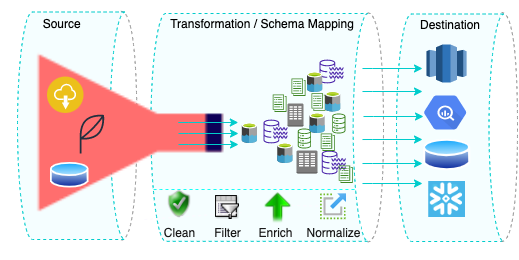

- Transformation: Cleaning and restructuring data to meet the target system’s requirements.
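The planning, transformation, and validation stages described above can be sketched as a tiny Python pipeline. This is a minimal illustration under assumptions, not a production tool: the SOURCE_ROWS records, the list of fields to migrate, and the MM/DD/YYYY source date format are all hypothetical, and a real migration would read from and write to actual systems.

```python
from datetime import datetime

# Hypothetical records, standing in for rows extracted from a legacy CRM.
SOURCE_ROWS = [
    {"id": 1, "name": "  Ada Lovelace ", "email": "ADA@EXAMPLE.COM",
     "signup": "10/12/2021", "legacy_notes": "n/a"},
    {"id": 2, "name": "Alan Turing", "email": "alan@example.com",
     "signup": "03/01/2022", "legacy_notes": ""},
]

# Planning: decide which fields move to the target (legacy_notes is left behind).
FIELDS_TO_MIGRATE = ["id", "name", "email", "signup"]


def extract(rows):
    """Select only the planned fields from each source row."""
    return [{field: row[field] for field in FIELDS_TO_MIGRATE} for row in rows]


def transform(row):
    """Clean and restructure one row for the (assumed) target schema."""
    return {
        "id": row["id"],
        "name": row["name"].strip(),
        "email": row["email"].strip().lower(),
        # Assumes the source stores MM/DD/YYYY dates; the target expects ISO 8601.
        "signup_date": datetime.strptime(row["signup"], "%m/%d/%Y").date().isoformat(),
    }


def validate(source, target):
    """Basic post-migration checks: same row count, same IDs, no empty emails."""
    errors = []
    if len(source) != len(target):
        errors.append(f"row count mismatch: {len(source)} != {len(target)}")
    if {r["id"] for r in source} != {r["id"] for r in target}:
        errors.append("ID sets differ")
    errors += [f"row {r['id']}: empty email" for r in target if not r["email"]]
    return errors


migrated = [transform(row) for row in extract(SOURCE_ROWS)]
print(validate(SOURCE_ROWS, migrated))  # prints []
```

Keeping validation as a separate function means it can run both on a trial subset during the migration and on the full dataset afterwards.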


Types of Data Migration

Data migration projects can be categorized into various types based on the specific requirements. Here are some of the common types of data migration:

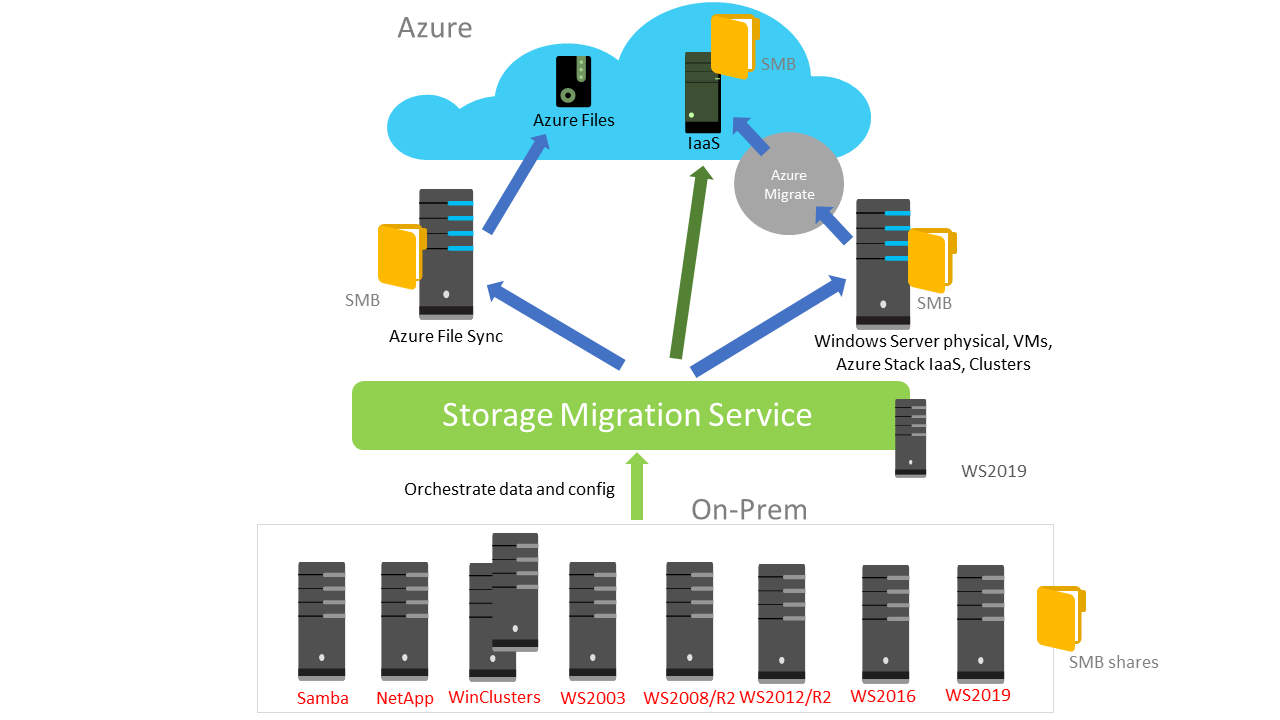

Storage Migration

This is the most basic type of migration: moving data from one storage system to another. It could mean migrating data from on-premises storage to cloud-based storage or upgrading to a more advanced storage solution. It is often done to improve performance, cost-effectiveness, and scalability.

For example, suppose you’ve used local servers and storage devices for years and decide to move your data to a cloud storage service such as Azure Blob Storage to improve security and accessibility and reduce maintenance effort.
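A storage migration should confirm that every file arrived intact, not just that the copy finished. The Python sketch below copies a directory tree and compares SHA-256 checksums of each source file and its copy. It assumes both locations are reachable as local paths (a mounted share or a staging directory, for instance); uploading to Azure Blob Storage itself would go through Azure’s own SDK or tools, which are not shown. The function name migrate_tree and the directory names are hypothetical.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading 1 MiB at a time."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def migrate_tree(source: Path, target: Path) -> list[str]:
    """Copy every file under source into target and verify each copy by checksum.

    Returns the relative paths whose checksums differ (an empty list means success).
    """
    mismatches = []
    for src_file in sorted(source.rglob("*")):
        if not src_file.is_file():
            continue
        rel = src_file.relative_to(source)
        dst_file = target / rel
        dst_file.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src_file, dst_file)  # copy2 also preserves modification times
        if sha256_of(src_file) != sha256_of(dst_file):
            mismatches.append(str(rel))
    return mismatches


# Usage with throwaway directories standing in for the old and new storage.
with tempfile.TemporaryDirectory() as tmp:
    old_storage, new_storage = Path(tmp, "old_storage"), Path(tmp, "new_storage")
    (old_storage / "reports").mkdir(parents=True)
    (old_storage / "reports" / "q1.csv").write_text("id,total\n1,100\n")
    print(migrate_tree(old_storage, new_storage))  # prints []
```

Checksums catch silent corruption or truncated copies that a successful copy command alone would not reveal.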

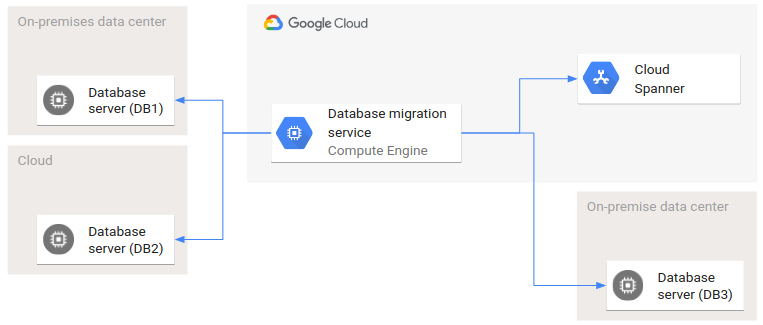

Database Migration

Database migration is the process of moving data from one database to another, for example from MySQL to PostgreSQL, or from an on-premises database to a cloud-based database service. You might opt for a database migration when upgrading your database management system, relocating the database to a cloud environment, or switching to a new database vendor.

Unlike storage migration, which moves data largely as-is, database migration often requires changes to the data format or schema. As a result, you must plan meticulously to ensure that the applications using the database are not affected.
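To make the format change concrete, here is a minimal Python sketch using two in-memory SQLite databases as stand-ins for the real source and target. The schemas are hypothetical: the legacy table stores prices as text such as "$19.99", while the target stores integer cents with NOT NULL constraints. The load runs inside a single transaction so a failure leaves the target unchanged.

```python
import sqlite3
from decimal import Decimal

# In-memory SQLite databases stand in for the real source and target systems.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")

# Hypothetical legacy schema: prices stored as text with a currency symbol.
source.execute("CREATE TABLE products (id INTEGER, name TEXT, price TEXT)")
source.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [(1, "Widget", "$19.99"), (2, "Gadget", "$5.00")],
)

# Hypothetical target schema: prices stored as integer cents, with stricter constraints.
target.execute(
    "CREATE TABLE products ("
    "id INTEGER PRIMARY KEY, name TEXT NOT NULL, price_cents INTEGER NOT NULL)"
)


def to_cents(price_text: str) -> int:
    """Convert a string such as '$19.99' to integer cents (1999) without float rounding."""
    return int(Decimal(price_text.lstrip("$")) * 100)


rows = source.execute("SELECT id, name, price FROM products").fetchall()
with target:  # commits if every insert succeeds, rolls back otherwise
    target.executemany(
        "INSERT INTO products (id, name, price_cents) VALUES (?, ?, ?)",
        [(pid, name, to_cents(price)) for pid, name, price in rows],
    )

print(target.execute("SELECT * FROM products ORDER BY id").fetchall())
# prints [(1, 'Widget', 1999), (2, 'Gadget', 500)]
```

Decimal is used instead of float so that values like 19.99 convert exactly; applications that read the old price column would also need updating, which is the kind of impact the planning step has to catch.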

Cloud Data Migration

Cloud migration is a strategic decision that organizations make to leverage the benefits of cloud computing. It refers to the transfer of data, applications, or workloads to cloud platforms, and can include moving on-premises data to the cloud or transferring data between different cloud services. Once your data is in the cloud, you can take advantage of features such as scalability, flexibility, enhanced security, and disaster recovery.

Application Migration

Application migration involves relocating software applications from one environment or platform to another. It may involve not only moving the application but also changing its underlying technology stack.

In some cases, the application’s databases must be modified or converted to fit the new environment and remain compatible and functional on the target platform. The primary goal of application migration is to ensure that the application continues to function correctly in the new environment while taking advantage of the target system’s capabilities.

These scenarios might occur when you want to migrate data from on-premises to the cloud or upgrade your operating system. They may also happen when you decide to change vendors, relocate data centers, or modernize legacy applications.

Which Type of Software is Suitable for Data Migration?

When it comes to data migration software, you have several options to choose from, including on-premise software, cloud-based tools, or the development of self-scripted migration solutions. Each has its advantages and disadvantages, and the choice depends on your specific needs and resources. Here’s an overview of these software solutions:

On-Premise

On-premise data migration software is installed and operated within an organization’s own physical or virtual environment. It’s used to move data between on-site systems and locations.

Advantages of On-Premise

- You’ll have control over the entire migration process as it takes place in your infrastructure.

- Preferable when the source and destination databases are of the same type.

- Suitable for scenarios where privacy and security are a concern.

Limitations of On-Premise

- If you use a third-party tool, the provider may enforce its own security policies and privacy measures, which can restrict your control over the migration process.

- You are responsible for managing and maintaining the hardware, software, and infrastructure.

- It involves higher upfront costs for hardware.

Examples of On-Premise

- Robocopy, Oracle Data Pump, or Rsync

Self-Scripted

This refers to developing custom scripts to move data between systems. It is preferable when you want maximum control over and customization of the migration process.

Advantages of Self-Scripted

- Ideal for scenarios where a specific source or destination is not supported by other tools.

- When you’re managing sensitive or confidential data during migration, the self-scripted solution can provide you with privacy controls. You can implement data masking techniques, define access controls, or apply encryption methods to meet your privacy requirements.
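As a minimal sketch of the data masking idea, the following Python script masks an email address and replaces a phone number with a keyed hash (HMAC-SHA-256), so the value can still be matched across tables without being readable. The field names, the masking rules, and MASKING_KEY are hypothetical; choose rules that match your own privacy requirements.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice load it from a secrets manager, never hard-code it.
MASKING_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str) -> str:
    """Replace a value with a keyed hash: identical inputs map to identical tokens."""
    return hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_email(email: str) -> str:
    """Keep the first character and the domain, e.g. 'jane@example.com' -> 'j***@example.com'."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def mask_record(record: dict) -> dict:
    """Return a copy of one customer record with its sensitive fields masked."""
    masked = dict(record)
    masked["email"] = mask_email(record["email"])
    masked["phone"] = pseudonymize(record["phone"])
    return masked


customer = {"id": 7, "email": "jane@example.com", "phone": "+1-555-0100"}
print(mask_record(customer)["email"])  # prints j***@example.com
```

A keyed hash is used rather than a plain hash so that someone without the key cannot rebuild the mapping by hashing candidate phone numbers.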

Limitations of Self-Scripted

- Requires programming skills to develop and maintain migration scripts.

- It can be costly and time-consuming to build, test, and maintain custom solutions.

Example of Self-Scripted

- Self-scripted solutions are developed using programming languages such as Python, Ruby, Java, or PowerShell.

Cloud-Based

Cloud-based software is accessed over the internet and used to move data to and from cloud platforms. It is particularly useful when you’re working with dynamic workloads or when your data migration needs may vary over time.

Advantages of Cloud-Based

- Typically simple to use, thanks to a user-friendly interface.

- Often comes with pre-built integrations for popular cloud platforms.

- Reduced hardware and software costs.

- Preferable for scalable and flexible requirements.

- Many cloud-based tools offer a pay-as-you-go pricing model, where you pay only for what you use.

Limitations of Cloud-Based

- Because your data passes through a third party’s infrastructure, privacy and compliance must be addressed thoroughly.

Examples of Cloud-Based

- Some of the cloud-based data migration service providers include Hevo Data, CloudFuze, AWS Database Migration Service, Google Cloud Data Transfer Service, and Azure Migrate.

In many cases, organizations opt for a combination of these options, using cloud-based tools for straightforward migration and custom scripting for complex projects.

Challenges and Risks Associated with Data Migration

Moving to modern data management solutions brings its own risks and challenges. Understanding them is essential for planning a successful migration.

- Incomplete backups or not handling errors correctly in the migration process can lead to data loss.

- Many data migration scenarios require systems to be offline during the process. The resulting downtime can disrupt business operations.

- In database migration, data in the source system might need complex transformation to fit the structure of the target system. You might need to perform data cleaning or mapping to keep the data consistent and accurate in the new environment.

- Migrating data from legacy systems can be particularly time-consuming and challenging due to outdated technology, lack of vendor support, and limited documentation.
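One way to avoid the data loss caused by poor error handling is to migrate in batches, wrap each batch in a transaction, and record any batch that fails instead of skipping it silently. The Python sketch below uses an in-memory SQLite table as a hypothetical target; the table, BATCH_SIZE, and migrate_in_batches are illustrative names. The third row violates the target’s NOT NULL constraint, so its whole batch is rolled back and reported.

```python
import sqlite3

BATCH_SIZE = 2  # deliberately tiny for the example; real batches are usually much larger


def migrate_in_batches(rows, target: sqlite3.Connection):
    """Insert rows batch by batch; a failing batch is rolled back and recorded, not dropped silently."""
    migrated, failed_batches = 0, []
    for start in range(0, len(rows), BATCH_SIZE):
        batch = rows[start:start + BATCH_SIZE]
        try:
            with target:  # one transaction per batch: commit on success, roll back on error
                target.executemany("INSERT INTO customers (id, email) VALUES (?, ?)", batch)
            migrated += len(batch)
        except sqlite3.IntegrityError as exc:
            failed_batches.append((start, str(exc)))
    return migrated, failed_batches


target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
rows = [(1, "a@example.com"), (2, "b@example.com"), (3, None), (4, "d@example.com")]
migrated, failed = migrate_in_batches(rows, target)
print(migrated, [start for start, _ in failed])  # prints 2 [2]
```

The recorded batch offsets let you clean the offending rows and re-run just those batches. Smaller batches isolate problems to fewer rows, at the cost of more transactions.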

Best Practices to Follow for Data Migration

To ensure a successful migration and reduce the risks associated with errors, downtime, and data loss, a complete data migration strategy is necessary. Here are some of the best practices to follow:

- Before starting the migration, know your data: its volume, formats, and quality. This lets you plan the migration more effectively and minimize data loss and disruption.

- Plan the entire migration process thoroughly, considering its scope, objectives, and timeline. Account for factors such as data volume, the formats of the source and target systems, and their potential challenges.

- Always back up your data before starting the migration to mitigate potential data loss.

- Maintain thorough documentation of the migration process, especially when transferring large volumes of data, including configurations, scripts, and any changes made during the migration. This documentation will be a valuable asset when troubleshooting. In addition, create a data mapping document that specifies which data elements in the source system correspond to which elements in the target system.

- Make security and compliance core requirements throughout the migration. Data security practices you can implement include data encryption, access control, compliance checks, audit trails, third-party vetting, and data masking.

- Test and validate the new environment’s functionality and data accuracy. This helps identify and resolve issues before they affect actual operations.

- Keep your team informed. If you decide partway through a project to upgrade the technology stack or back up existing work, tell all your teammates. This proactive communication prevents confusion and ensures that everyone is on the same page.
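Three of these practices (backing up first, following a data mapping document, and validating afterwards) can be combined in a short Python sketch. It uses in-memory SQLite databases and sqlite3’s Connection.backup method; the table, the COLUMN_MAPPING dictionary, and the fingerprint helper are hypothetical. Validation compares row counts and a SHA-256 digest of the ordered rows across the source, the backup, and the target.

```python
import hashlib
import sqlite3

# Hypothetical data mapping document: source column -> target column.
COLUMN_MAPPING = {"cust_id": "id", "cust_email": "email"}


def fingerprint(conn, table, columns):
    """Return (row count, SHA-256 over the rows ordered by the first column)."""
    # Table and column names come from the trusted mapping document, never from user input.
    cols = ", ".join(columns)
    digest, count = hashlib.sha256(), 0
    for row in conn.execute(f"SELECT {cols} FROM {table} ORDER BY {columns[0]}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (cust_id INTEGER, cust_email TEXT)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?)", [(1, "a@example.com"), (2, "b@example.com")]
)
source.commit()

# 1. Back up the source before migrating (in practice, to a file such as "crm_backup.db").
backup = sqlite3.connect(":memory:")
source.backup(backup)

# 2. Migrate, renaming columns according to the mapping document.
src_cols, dst_cols = list(COLUMN_MAPPING), list(COLUMN_MAPPING.values())
target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
rows = source.execute(f"SELECT {', '.join(src_cols)} FROM customers").fetchall()
placeholders = ", ".join("?" * len(dst_cols))
with target:
    target.executemany(
        f"INSERT INTO customers ({', '.join(dst_cols)}) VALUES ({placeholders})", rows
    )

# 3. Validate: row counts and content fingerprints must match everywhere.
expected = fingerprint(source, "customers", src_cols)
if fingerprint(backup, "customers", src_cols) != expected:
    raise RuntimeError("backup is incomplete")
if fingerprint(target, "customers", dst_cols) != expected:
    raise RuntimeError("target data differs from source")
print("validation passed, rows:", expected[0])  # prints validation passed, rows: 2
```

Explicit exceptions are used for the checks instead of assert statements, because Python skips asserts when run with the -O flag.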

Top 3 Data Migration Tools

Here are some popular data migration tools:

1) Hevo Data

Hevo is a real-time ELT no-code Data Pipeline platform that cost-effectively automates data pipelines that are flexible to your needs. It allows integration with 150+ Data Sources (40+ free sources), lets you transform your data, and makes it analysis-ready.

To simplify data migration at scale, it offers a comprehensive set of features such as monitoring, error handling, automation capabilities, and an intuitive interface. Without writing a single line of code, you can move your data from the source to the target system, which makes it useful for both technical and non-technical users.

With Hevo, you can effectively address data migration challenges:

- Hevo offers an intuitive interface that makes data migration accessible to users with varying levels of technical expertise.

- It supports automatic schema mapping. This feature helps align schema changes from the source to the target system.

- You can also set alerts to quickly get notified of any issues that may arise during the migration process. This proactive approach helps identify and address concerns before they impact ongoing operations.

- It places a strong emphasis on security and compliance. Hevo offers security features like data encryption in transit and at rest, access control, and compliance checks.

2) Azure Cosmos DB

Azure Cosmos DB is a globally distributed, multi-model database service provided by Microsoft Azure. With the Azure Cosmos DB migration tool, you can seamlessly migrate high volumes of data to Azure Cosmos DB.

This open-source tool can load data into Cosmos DB from a variety of sources, including Azure Cosmos DB collections, JSON files, SQL Server, and CSV files. Notably, Cosmos DB itself supports multiple data models, including document, key-value, column-family, and graph. This flexibility allows you to select the most suitable data model for your specific application.

3) Robocopy

Robocopy (Robust File Copy) is a command-line utility built into Windows. It is used for various data management tasks, primarily copying and migrating files and directories from one location to another, and it supports both local and network-based migrations.

To use it, open a command prompt, type robocopy, and specify the source and destination paths (plus any options) to start the transfer.
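Robocopy’s general syntax is robocopy <source> <destination> [options]. As a hedged illustration, the Python sketch below builds and runs such a command with a few commonly used options: /E (copy subdirectories, including empty ones), /Z (restartable mode), /R and /W (retry count and wait time in seconds between retries), and /LOG (write output to a log file). The helper function names and the example paths are hypothetical, and actually running the command requires Windows.

```python
import subprocess


def build_robocopy_command(source, destination, retries=3, wait_seconds=5, log_file=None):
    """Build a robocopy argument list for copying a whole directory tree."""
    cmd = ["robocopy", source, destination, "/E", "/Z", f"/R:{retries}", f"/W:{wait_seconds}"]
    if log_file:
        cmd.append(f"/LOG:{log_file}")
    return cmd


def robocopy_succeeded(exit_code: int) -> bool:
    """Robocopy exit codes are bit flags; 8 or higher means at least one copy failed."""
    return exit_code < 8


def run_robocopy(source, destination, **options) -> bool:
    """Run robocopy (Windows only) and interpret its exit code."""
    result = subprocess.run(build_robocopy_command(source, destination, **options))
    return robocopy_succeeded(result.returncode)


cmd = build_robocopy_command(r"D:\Data", r"\\fileserver\share\Data", log_file=r"C:\logs\migration.log")
print(" ".join(cmd))
# prints robocopy D:\Data \\fileserver\share\Data /E /Z /R:3 /W:5 /LOG:C:\logs\migration.log
```

The sketch checks the exit code itself rather than passing check=True to subprocess.run, because robocopy returns non-zero codes such as 1 (files copied successfully) when nothing went wrong. Setting /R and /W explicitly also matters, since robocopy’s defaults retry a failed file a very large number of times.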

Conclusion

- Data migration has become increasingly important due to technological advancements and ever-growing data requirements. Understanding the migration process and its challenges, and following proven best practices, can help you manage modern data effectively.

- By doing so, you can minimize risks, maintain data integrity, and take advantage of updated technologies. A wide range of migration tools is available to relocate your data, depending on your use case.

- The right data migration tool will help you move data from source to destination. However, each tool has its own advantages and limitations.

- Evaluating each tool against your specific use case, whether it’s data recovery, backup, or real-time analysis, will help you make the right decision for your organization.

- Understand the roles and expertise required for a data migration team to handle complex migrations efficiently. Find out more at Building a Data Migration Team.

Take Hevo’s 14-day free trial to experience a better way to manage your data pipelines. You can also check out the unbeatable pricing, which will help you choose the right plan for your business needs.

Frequently Asked Questions

1. How to migrate customer data from WordPress to Shopify?

You can export customer data from WordPress using a plugin or manually via a CSV file. Then, import that data into Shopify through its admin dashboard’s import feature.

2. How to transfer data from Google Workspace?

Use Google Workspace’s data migration tool to move emails, contacts, and calendar data from one account or service to another. It’s available in the admin console.

3. What is an example of data migration strategies and best practices?

An example of data migration is transferring data from one system to another, such as moving customer data from a legacy CRM to a new platform. You can also use tools like Hevo to simplify and automate this process.