Data is a productive asset, but it is also becoming complex. As organizations grow and accumulate vast amounts of data, managing data becomes a challenge. Raw data becomes overwhelming, especially for non-technical users. This problem causes a lot of inconsistencies in data interpretation, which makes it prone to misinformed decisions in the organization.

The dbt semantic layer is a powerful tool to close the gap between users and raw data. A semantic layer is an interface between raw data and the users who need to understand it. It gives a unified data view, simplifying any complexity that can make data overwhelming for non-technical users. This blog will explore how the dbt Semantic Layer addresses these issues and presents a significant solution for organizations to leverage raw data effectively.

Table of Contents

dbt and its Role in Modern Data Workflows

dbt has changed how we approach data transformations in data analytics and engineering. It has empowered every data-centric role; data analysts, analytics engineers, data scientists, and data engineers can write SQL queries that are scalable and manageable. It can track changes to the data transformations, enable testing, and deploy.

The importance of having consistent metrics across the board cannot be overstated. Inconsistency in metrics leads to bad reporting, affecting confidence and trust in the data. Data needs to be reliable and trustworthy to make data-driven decisions. A semantic layer helps to achieve this by ensuring that everyone uses the same definitions and calculations.

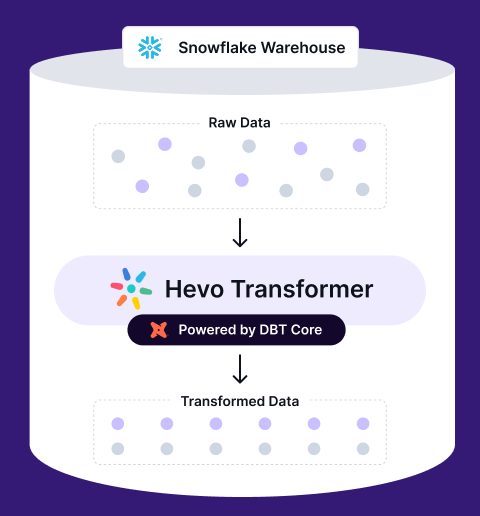

Transform, automate, and optimize your Snowflake data with Hevo Transformer—the ultimate no-code tool for dbt workflows. Experience lightning-fast integrations, real-time previews, and seamless version control with Git. Get results in minutes, not hours.

- Instant Integration – Connect and start transforming in minutes

- Effortless dbt Automation – Build, test, and deploy with a click

- Git Version Control – Collaborate and track changes effortlessly

- Real-Time Data Previews – See and push updates in an instant

How does dbt fit into Building the Semantic Layer?

dbt semantic layer specifically seeks to fix the issue of managing complex data. It plays a pivotal role in enabling data teams to define and manage data transformations in a scalable manner. You can build models that are SQL queries, which are then orchestrated to create a clean and consistent data layer.

Key Features of dbt Semantic Layer

- Modular Design: dbt enables complex data transformations by writing SQL code, which is easy to manage and scale.

- Version Control: dbt enables tracking changes to your data transformations over time, ensuring that changes are reversible.

- Testing Capabilities: dbt provides testing of your transformations, enabling teams to validate data transformations and quality.

- Documentation: dbt generates documentation automatically, which makes it easy to understand the data structure and business logic.

dbt Semantic Layer Architecture

The dbt semantic layer can define metrics and query them using multiple interfaces. It produces the SQL query needed to make the request and handles the laborious work of locating the requested data in your data platform.

- Raw Data: This is the initial point representing unprocessed data within the platform.

- Data Platform: This represents the storage such as a data warehouse or data lake where raw data is stored.

- dbt Cloud:

- Model: dbt models define how raw data should be transformed and structured.

- Test: dbt enables testing of these models.

- Metrics: This is defined within the dbt framework, allowing users to create standardized calculations.

- Semantic Layer Queries: This represents a set of standardized queries against the defined metrics.

- Output:

- The processed data are then sent to various output tools, such as BI Tools for reporting and visualization.

Core Components of the dbt Semantic Layer

The dbt Semantic Layer comprises multiple essential elements that offer a uniform and intuitive user interface for data.

Let us suppose that we have a retail company, “RetailCo” in Wonderland that stores raw data in a data warehouse. Tables include raw_orders, raw_customers and raw_products.

raw_orders

| order_id | customer_id | order_date | order_amount | product_id |

| 1001 | 2001 | 2023-08-01 | 120.5 | 3001 |

| 1002 | 2002 | 2023-08-02 | 51.3 | 3002 |

| 1003 | 2003 | 2023-08-03 | 75.0 | 3003 |

raw_customers

| customer_id | customer_name | signup_date |

| 2001 | John Doe | 2023-07-01 |

| 2002 | Jane Smith | 2023-07-02 |

| 2003 | Jane Doe | 2023-07-03 |

raw_products

| product_id | product_id | product_category | price |

| 1001 | 2001 | Beverage | 140.5 |

| 1002 | 2002 | Detergent | 40.2 |

| 1003 | 2003 | Oil | 100.0 |

dbt Models

Models are the heart of dbt. The dbt Semantic Layer is built on top of dbt Models. A model is simply a SQL file (.sql) specifying your data transformation. These building blocks transform unstructured data into cleaned and ready-for-business data.

Each model represents a step in the transformation process. Models that clean up raw sales data, aggregate it over various periods, and then determine important metrics such as profit YOY percentage change or average order value.

Here is an example of orders which can be transformed from raw tables.

dbt Sources

Dbt sources define the raw data inputs that your transformations rely on. In essence, these sources point to the raw tables or data stored in your data lake or data warehouse. Sources enable you to track the data’s lineage from the raw input to the final processed data.

Sources ensure transparency and trust of data. Understanding and trusting the outputs is easier when you can see exactly where your data originates from and how it is being transformed at each step.

For example, the source for the raw_orders table is defined in a YAML file:

dbt Metrics, now MetricsFlow

dbt Metrics are calculations that represent the key business indicators your organization relies on. Customer Lifetime Value, customer churn rate and sales turnover rate are all examples of metrics predefined by business. Metrics encapsulate the business logic that drives decision-making in an organization.

RetailCo wants to analyze key metrics, such as Total Revenue, Average Order Value (AOV), and Customer Retention Rate. These metrics are defined in dbt Metrics using MetricFlow.

By defining metrics within the dbt Semantic Layer, calculations are consistent across all your reports, dashboards, and analytics. This consistency is important as many teams may calculate the same metrics in different ways, such as net promoter score or customer satisfaction rate.

In dbt, metrics can be version-controlled, tested, and documented just like models. This ensures that they remain accurate and reliable as your data evolves.

dbt Documentation

dbt Documentation is a built-in automatic feature that provides detailed information about each model, source, and metric within a dbt project. It includes descriptions of the transformations, the business logic behind metrics, and the relationships between different models and sources.

dbt Documentation automatically generates detailed descriptions of models, sources, and metrics, enhancing transparency and understanding. It updates as models evolve, helping both technical and non-technical users track data transformations and maintain accurate, up-to-date information, reducing the risk of errors in decision-making.

Metadata API

The Metadata API provides programmatic access to detailed information about your dbt project, such as data lineage, dbt run history, and dbt model testing status. Organizations can track the performance of their data pipelines, create custom monitoring tools, and learn more about the flow and transformation of data.

How do all of these components work together?

- dbt Models and dbt Sources both build the main foundation of the data transformation pipeline, turning unstructured inputs into structured data.

- dbt Metrics and MetricsFlow empower how we query data for consistent key business calculations across the organization.

- dbt Documentation and Metadata API provide deeper insights into how data is manipulated, helping users understand the data.

- dbt Tests sustain the troubleshooting of data issues and ensure data quality and accuracy.

- Proxy Servers are not a separate dbt component, but they are pivotal in optimizing the interaction between users and the debt-transformed data.

Designing and Implementing a dbt Semantic Layer

Let us discuss the steps to design and implement a dbt Semantic Layer in a retail setting where we can empower the way data is managed and utilized.

For RetailCo, tables may include raw_orders, raw_customers and raw_products.

raw_orders

| order_id | customer_id | order_date | order_amount | product_id |

| 1001 | 2001 | 2023-08-01 | 120.5 | 3001 |

| 1002 | 2002 | 2023-08-02 | 51.3 | 3002 |

| 1003 | 2003 | 2023-08-03 | 75.0 | 3003 |

raw_customers

| customer_id | customer_name | customer_type | signup_date |

| 2001 | John Doe | New | 2023-07-01 |

| 2002 | Jane Smith | Recurring | 2023-07-02 |

| 2003 | Jane Doe | New | 2023-07-03 |

raw_products

| product_id | product_id | product_category | price |

| 1001 | 2001 | Beverage | 140.5 |

| 1002 | 2002 | Detergent | 40.2 |

| 1003 | 2003 | Oil | 100.0 |

Step 1: Decide Your Requirements

Start with defining the business logic and KPIs. We can use metrics such as Total Sales Revenue, Sales Per Store, Inventory Turnover, and Customer Lifetime Value (CLV). Expanding on this step, we will define how these metrics will be measured and calculated. These calculations will remain consistent across the organization.

These are some retail data sources that we could include:

- CRM systems

- POS Systems

- Salesforce

- Inventory Management Systems

Step 2: Develop your data model architecture

Continuing with the retail scenario, the next step is to design the data model architecture. We can implement an architecture and start with staging models to transform raw data from POS and inventory systems.

Staging Models: Staging models handle raw data, cleaning and normalizing it to prepare for further processing. For example, you might standardize transaction records from different stores with varying POS systems. You can create staging models in the models/staging directory and might create a stg_orders.sql file that cleanses and standardizes sales data from different stores.

- Intermediate Models: Intermediate models apply business logic to the staged data in the staging models, creating KPIs and metrics like daily total sales revenue per store inventory levels by product category. You can build these models in the models/intermediate directory and create an int_store_sales.SQL model that aggregates daily total sales data by store.

- Reporting Models: These models are the final processed data that aggregate data to create the defined metrics, such as monthly revenue and profit reports, inventory turnover rates, or monthly customer retention rates. You can make such models in the models/marts directory, which generates high-level metrics like monthly sales per region. For example, a mart_sales.sql model might report data monthly to the stakeholders.

- Data Lineage Mapping: dbt automatically tracks dependencies between models. You can map out the data flow from sources through the transformation process to the end outputs. This transparency of the dependencies between sources helps us troubleshoot issues later.

Step 3: Develop your dbt models

Once you define your metrics and business requirements and develop your architecture, initialize your dbt project and develop your models. Connect your dbt cloud with your data sources (it can easily be done if the data source is a Partner Connect). You can:

- Create dbt Models: Start developing models for each transformation step. For example, you might create a model that calculates daily sales for each store and another model that tracks customer churn rate.

- Define dbt Sources: Link sources such as raw sales data from Salesforce or your CRM system or stock levels from the inventory management system. sources.yml file can look like this:

- Prepare Documentation: Document everything about each model, clarifying how data from different stores and inventory systems is combined and transformed.

Step 4: Create and Validate Metrics

You can write queries to create metrics like total sales revenue, inventory turnover, and CLV. For example, total sales revenue could be calculated by summing the total purchases (price multiplied by the quantity sold) made by a customer. You might define Total Sales Revenue in a mart_sales.sql file by aggregating sales across all stores like this:

You can write and run tests to ensure that metrics like sales per store are consistent and accurate across different periods and geographic locations. Define tests in YAML files to check for data quality and consistency like this:

You can run these tests with the dbt build, run and test.

Step 5: Establish Best Practices

As we discussed, the dbt semantic layer is powerful due to many features such as version control, more robust governance, and continuous integration pipeline management. You should leverage these practices and avail yourself of such benefits.

- Version Control: Use a version control system to track and manage changes to dbt models.

- Continuous Integration (CI): Set up a pipeline to automatically test and validate changes to your dbt models. You can run dbt run, dbt test, and dbt docs generated on every pull request.

- Data Governance: Implement strong policies to manage access controls, data quality, and documentation standards.

Benefits of Using dbt for the Semantic Layer

- Cost-Effective Solution: The dbt Semantic Layer reduces time and resources for data preparation by centralizing metric logic and minimizing redundancy, leading to more cost-effective data solutions.

- Flexible Integration for Users: It offers a variety of APIs for seamless integration with reports, dashboards, and queries, allowing users to access consistent metrics without adjusting their data sources.

- Robust Governance: dbt’s governance features ensure data integrity and compliance with organizational standards, allowing users to explore data flexibly while maintaining consistency.

- Code Simplicity: dbt enables defining metrics once and reusing them across various contexts, with MetricFlow managing complex calculations, resulting in cleaner, more maintainable code.

Conclusion

With its ability to close the gap between complex raw data and practical insights, the dbt Semantic Layer substantially improves how organizations handle and interpret data. It guarantees accuracy and consistency by centralizing business logic and analytics, enabling technical and non-technical users to make well-informed decisions. Its modular architecture promotes agility across data teams, especially with version control, testing capabilities, and thorough documentation.

dbt’s Semantic Layer provides an easy, scalable, and flexible solution that smoothly connects with various cloud data warehouses and BI tools in a growing world of data complexity. Indeed, it is a priceless tool for all businesses who wish to be empowered by their data!

FAQ on dbt Semantic Layer

1. How does the dbt Semantic Layer architecture compare with traditional data modeling?

Unlike traditional data modeling, the dbt Semantic Layer prioritizes agility, consistency, and accessibility for non-technical users. It integrates with cloud data warehouses and BI tools, fostering collaboration and adapting to changing business needs more effectively.

2. What is the difference between the logical layer and the semantic layer?

The logical layer organizes data structure and relationships for technical purposes, while the semantic layer translates this into user-friendly terms, ensuring consistent, business-relevant interpretations and access for non-technical users.

3. What is the semantic model in dbt?

The semantic model in dbt standardizes business metrics, ensuring consistent data interpretation across an organization. It centralizes metric definitions, enabling non-technical users to interact with data using familiar terms. Key metadata includes entities (relationships), dimensions (grouping/filtering), and measures (calculations) for consistent reporting and analysis.

4. What’s the difference between dbt Metrics, MetricsFlow, and the semantic layer?

The semantic layer is the structure and framework that protects the consistency and application of metrics and business logic. MetricFlow, part of dbt’s Semantic Layer, replaces dbt Metrics with advanced features like SQL query construction using an internal DAG and sophisticated join handling. It ensures consistency and accuracy in metrics, unifying data interpretation across the organization. Together, they help to unify how data is interpreted.

5. Can dbt’s Semantic Layer be used with multiple data warehouses?

Absolutely! dbt’s Semantic Layer integrates with multiple data warehouses like Snowflake, Google BigQuery, AWS Redshift, and Databricks. It manages and standardizes metrics across these platforms, ensuring consistency and reliability in a multi-cloud environment.