As raw data comes in various forms and sizes, it is essential to have a proper system to handle big data. One of the significant challenges is referencing data points as the complexities increase in data workflows. The situation gets out of control even when there is a need for the slightest change in the source raw data.

You would have to change the code everywhere the table or column has been directly referenced. This is where dbt helps data engineers to create references to tables and columns with dbt source files. However, let’s first understand what is dbt.

Table of Contents

What is dbt?

dbt is a transformation tool used by data-driven organizations to obtain reliable data analytics workflows. Usually, dbt does the T of the ELT (Extract, Load, and Transform) process. What makes dbt popular among data engineers is that it can transform data within a few seconds or minutes instead of hours. With dbt, you can also transform the data within the data warehouse, thereby simplifying the transformation process. dbt allows different kinds of transformation like view, table, incremental, and ephemeral through materialization.

The transformation of data can be processed with a simple SQL SELECT statement. However, you can use Python as your preferred language for transformation tasks. Python in dbt is mainly used to perform complex transformations for data science workflows.

dbt has two offerings—dbt core and dbt cloud—for users to leverage the transformation tool. dbt core is an open-source command line tool that allows you to create dbt projects and enhance your analytics workflows. But, dbt core doesn’t have advanced features like scheduling and UI-based workflow of dbt cloud.

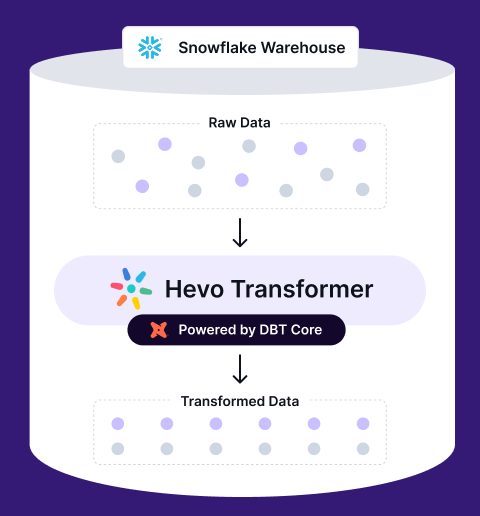

Want to use dbt but don’t want the hassle of manual setup? Hevo Transformer (Powered by dbt Core) simplifies dbt workflows, automates transformations, and streamlines model execution—all in one place.

- One-Click dbt Execution – Run and build models seamlessly.

- Automated dbt Workflows – Eliminate manual effort with smart scheduling.

- Built-in Version Control – Track and collaborate with Git integration.

- Instant Data Previews – Validate transformations before deployment.

How Does dbt Work?

dbt consists of projects that include several files and directories like dbt_project.yml, models, snapshots, and more. The dbt_project.yml is the project configuration file that also defines the directory of the dbt projects. At a minimum, you only need a dbt_project.yml file, but a dbt project can include other resources like seeds, tests, sources, and models.

One of the critical components after dbt_project.yml of dbt projects is models. These are SQL SELECT statements for the transformation of data. Usually, based on the requirements, you can have more than one model for your project, where each model is a single SQL SELECT statement. As a result, dbt models are modularised or reusable code snippets that can be used for data transformation. The models can also be referenced in downstream applications. This makes it easier for analysts so they do not have to start writing code from scratch for every analytics project. As a result, users can expedite the analytics workflows with references to models.

To ensure you have robust models, dbt also supports version control to keep track of changes and debug quickly. You can also write test cases and document the project for ease of reference.

What are dbt Sources?

dbt sources are located in the models/ directory in .yml files under sources: key. dbt sources are a way of declaring tables that come from your data stores for further reference. It is beneficial in cases where you only want a part of the data to transform for other processes. Sources are used while building dbt models. You can select from the source table using {{ source() }} function to help you define the lineage of data. Apart from using sources in models to get data, you can write test cases for maintaining quality and checking for the freshness of data. As a result, dbt sources allow users to focus on building models for transformation rather than focusing on the raw data.

Why Use dbt Sources?

- Consistent Data Definitions: dbt sources help establish standardized definitions for data coming from different sources, ensuring all team members are aligned.

- Data Lineage Tracking: By defining sources, you can easily trace where data originates, enhancing transparency and facilitating debugging.

- Improved Collaboration: Clear source definitions promote collaboration across teams, as everyone works with a shared understanding of data origins.

- Enhanced Data Governance: Using dbt sources supports better data governance by ensuring accurate documentation and compliance with organizational policies.

How do you use a dbt Source?

Declare a Source

With dbt source, you can declare the database and schema for each source. This simplifies the workflows when there are a lot of dependencies in downstream systems.

In the aforementioned code snippet,

- name: it is the name of the source

- schema: by default, it is the same as the name of the source

- tables: includes name and identifier

- name: it is the name of the table

- identifier: it is used while fetching data

Select From a Source

The name of the source is jaffle_shop, which can be further referenced using the following code:

The aforementioned code points to the jaffle_shop source and fetches the data from the orders table.

Documenting and Testing Source

You can check for unique, non-null, and accepted values with built-in data integrity capabilities. However, you are not limited to built-in features as it supports custom techniques for data integrity checks. This ensures you always get quality data while referencing information through dbt sources.

For documentation, you just need to write a description of the source and tables under the description key. dbt will automatically render it during documentation.

Here, the test cases check for not null and unique values in the orders table for the id column.

Test the Freshness of Loaded Data

With a freshness check, you can ensure that you don’t use duplicate data.

Here, the timestamp of the data is referenced to filter data.

Source Configurations

Sources can be configured by the config: block under the sources: key. However, you can also configure it by changing the dbt_project.yml file. dbt only supports enabled configuration for sources. It is used for configuring sources imported from a package. Packages are standalone dbt projects, which are modularized to be used in other projects. Packages are similar to Python libraries used to perform programming tasks without writing the code from scratch. You can enable or disable sources included in a package by providing boolean values like True and False.

In the aforementioned code snippet, the my_source is enabled. You can also enable/disable specific tables in the source files as executed in the above code. Here the table ignore_this_one is disabled.

Conclusion

dbt sources are an effective way to reference raw data during transformation. It especially helps while building dbt model, where developers spend the most time. Therefore, dbt sources act as a foundation for building superior dbt models. Besides, it empowers data engineers to obtain standardized data with different checks for duplicates and quality issues.

Curious about using dbt Python models? Explore our comprehensive guide to discover how integrating Python with dbt can enhance your data workflows and analysis.

Tools like dbt that emerge each day enable the data analytics industry to leverage the power of data. Hevo Data is one such company that provides automated data pipeline solutions for your needs. Sign up for a 14-day free trial and see the magic yourself.