Unlock the full potential of your Heroku for PostgreSQL data by integrating it with BigQuery. With Hevo’s automated pipeline, you can get data flowing with minimal effort.

Heroku is a cloud Platform as a Service (PaaS) that lets developers build, run, and operate applications, with support for programming languages such as Node.js, Scala, Ruby, Python, and Go, among others. Heroku PostgreSQL offers the open-source PostgreSQL database as a secure, trusted, and scalable managed service built for developers. To analyze the data stored in this database at scale, you’ll typically move it into a fully managed data warehouse that lets you extract valuable, actionable insights. This is where Google BigQuery comes in: it is a highly scalable data warehouse with a built-in query engine.

In this article, you’ll go through two methods to seamlessly move data from Heroku for PostgreSQL to BigQuery: using custom code and a no-code Data Pipeline solution, Hevo.

Seamlessly transfer your data from Heroku PostgreSQL to Google BigQuery with Hevo’s no-code, fully managed platform.

- Effortless Migration: Set up data pipelines in minutes with zero coding required.

- Real-Time Data Sync: Ensure your BigQuery warehouse is always updated with live data from Heroku PostgreSQL.

- Auto-Schema Mapping: Hevo automatically maps your Heroku PostgreSQL schema to BigQuery, reducing manual errors and speeding up the process.

Join thousands of users who trust Hevo for seamless data integration, rated 4.7 on Capterra for its ease and performance.


Heroku for PostgreSQL to BigQuery Integration Methods

Here are the two methods you can use to migrate data from Heroku for PostgreSQL to BigQuery:

- Method 1: Using Hevo as a Heroku PostgreSQL to BigQuery Connector

Leverage Hevo’s no-code platform to connect Heroku PostgreSQL to BigQuery. Automate data migration and keep data synced in real time with minimal effort.

- Method 2: Using Custom Code for Heroku PostgreSQL to BigQuery Migration

Alternatively, you can opt for custom coding to transfer data, though this approach can be more complex and time-consuming.

Method 1: Using Hevo as a Heroku for PostgreSQL to BigQuery Connector

Hevo is a no-code Data Pipeline that can also enrich and transform your data into an analysis-ready form without requiring you to write any code. Its fault-tolerant architecture is designed to handle data securely and consistently, with zero data loss.

Using Hevo, Heroku for PostgreSQL to BigQuery Migration can be done in the following 2 steps:

Configure Heroku for PostgreSQL as a Source

- First, you need to log in to your Heroku account.

- Next, you need to choose the app containing the PostgreSQL database and open the databases dashboard.

- You can access the DATA tab and click on the PostgreSQL database you wish to use.

- Next, click on Settings > View Credentials.

- Note the credentials displayed; you’ll use them when setting up your PostgreSQL source in Hevo.

- Next, on the Configure your Heroku PostgreSQL Source page, specify the following:

- Database Host: The Heroku PostgreSQL host’s DNS or IP address.

- Pipeline Name: A unique name for your Pipeline.

- Database User: The read-only user with permission to read tables in your database.

- Database Port: This refers to the port on which your PostgreSQL server listens for connections. The default value is 5432.

- Database Password: This refers to the password for the read-only user.

- Database Name: The database that you want to replicate.

- Select an Ingestion Mode: This refers to the desired mode by which you want to ingest data from the source. The available ingestion modes are Table, Logical Replication, and Custom SQL.

- For Ingestion Mode as Logical Replication, follow the steps in the documentation for your PostgreSQL variant to set up logical replication.

- For Ingestion Mode as Table, refer to the Object and Query Mode Settings section for steps to configure the objects that you want to replicate.

- Connection Settings:

- Use SSL: Enable this option to use an SSL-encrypted connection, for example when connecting to Heroku PostgreSQL databases. If enabled, specify the following:

- Client Certificate: This refers to the client public key certificate file.

- CA File: The file containing the SSL server certificate authority (CA).

- Client Key: The client private key file.

- Connect through SSH: You can enable this option to connect Hevo using an SSH tunnel, as opposed to directly connecting your PostgreSQL database host to Hevo. This lends an additional layer of security to your database by not exposing your PostgreSQL setup to the public. If this option is disabled, you need to whitelist Hevo’s IP address.


- Click “TEST & CONTINUE” to proceed with setting up the Destination.
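Heroku also exposes the same credentials as a single connection URI in the app’s DATABASE_URL config var. The sketch below is a minimal Python helper, assuming a URI of the form postgres://user:password@host:port/dbname, that splits it into the Host, Port, User, Password, and Database Name values the Hevo source form asks for. The function name fields_from_database_url, the PostgresSourceFields class, and the example credentials are hypothetical and not part of Hevo or Heroku.

```python
from dataclasses import dataclass
from urllib.parse import unquote, urlparse


@dataclass
class PostgresSourceFields:
    """Values for the Configure your Heroku PostgreSQL Source page (hypothetical helper)."""
    host: str
    port: int
    user: str
    password: str
    database: str


def fields_from_database_url(url: str) -> PostgresSourceFields:
    """Split a postgres:// connection URI into the individual source fields."""
    parsed = urlparse(url)
    if parsed.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unexpected scheme: {parsed.scheme!r}")
    database = parsed.path.lstrip("/")
    if not (parsed.hostname and parsed.username and database):
        raise ValueError("URI must include a user, a host and a database name")
    return PostgresSourceFields(
        host=parsed.hostname,
        # Fall back to PostgreSQL's default port when the URI omits it.
        port=parsed.port or 5432,
        # urlparse leaves percent-escapes in place, so decode them here.
        user=unquote(parsed.username),
        password=unquote(parsed.password or ""),
        database=database,
    )


if __name__ == "__main__":
    # Hypothetical credentials, for illustration only.
    print(fields_from_database_url(
        "postgres://hevo_reader:s3cr%40t@db.example.com:5432/d1abc2def3"
    ))
```

Keep the real URI out of source control; reading it from the environment at runtime is the safer habit.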

Object and Query Mode Settings

Once you have specified the Source connection settings above, do one of the following:

- For Pipelines configured with the “Table” mode:

- In the “Select Objects” page, select the objects you want to replicate and click “CONTINUE”.

- Note: Each object represents a table in your database.

- In the Configure Objects page, specify the query mode you want to use for each selected object.

Note: In Full Load mode, Hevo attempts to replicate the full table in a single run of the Pipeline, with an ingestion limit of 25 million rows.

- For Pipelines configured with the “XMIN” mode:

- In the Select Objects page, select the objects you want to replicate.

- For the selected objects, only new and updated records are ingested using the XMIN column.

- The Edit Config option is unavailable for the objects selected for XMIN-based ingestion. You cannot change the ingestion mode for these objects post-Pipeline creation.

- Click “CONTINUE”.

Note: Each object represents a table in your database.

- For Pipelines configured with the “Custom SQL” mode:

- In the Provide Query Settings page, enter the custom SQL query to fetch data from the Source.

- In the Query Mode drop-down, select the query mode, and click CONTINUE.

You can check the official documentation for more details.
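To make the XMIN mode above more concrete: PostgreSQL’s hidden xmin column holds the ID of the transaction that wrote each row version, so an incremental reader can ask only for rows whose xmin is higher than the last value it saw. The following is a minimal Python sketch of that general idea, not Hevo’s implementation; the helper names and the psycopg2-style %s placeholder are assumptions. It ignores transaction ID wraparound and transactions that commit after the watermark has moved past their ID, both of which a production pipeline has to handle.

```python
from typing import Iterable, Optional, Tuple


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def xmin_incremental_query(
    schema: str, table: str, last_xmin: Optional[int]
) -> Tuple[str, tuple]:
    """Build a query for rows whose xmin is newer than the saved watermark."""
    # xid has no direct cast to bigint, so the cast goes through text.
    base = (
        "SELECT xmin::text::bigint AS _xmin, * "
        f"FROM {quote_ident(schema)}.{quote_ident(table)}"
    )
    if last_xmin is None:
        # No watermark yet: read the whole table, like a first full load.
        return base + " ORDER BY _xmin", ()
    # %s is a psycopg2-style placeholder for the driver to fill in.
    return base + " WHERE xmin::text::bigint > %s ORDER BY _xmin", (last_xmin,)


def next_watermark(rows: Iterable[dict], last_xmin: Optional[int]) -> Optional[int]:
    """Return the highest _xmin seen so far, to persist for the next run."""
    candidates = [row["_xmin"] for row in rows]
    if last_xmin is not None:
        candidates.append(last_xmin)
    return max(candidates, default=None)
```

Each run executes the query, loads the returned rows, and stores next_watermark(...) for the following run.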

Configure BigQuery as a Destination

To set up Google BigQuery as a destination in Hevo, follow these steps:

- Step 1: In the “Asset Palette”, select “DESTINATIONS”.

- Step 2: In the “Destinations List” View, click “+ CREATE”.

- Step 3: Select Google BigQuery from the “Add Destination” page.

- Step 4: Choose the BigQuery connection authentication method on the Configure your Google BigQuery Account page.

- Step 5: Choose one of these:

- Using a Service Account to connect:

- Attach the Service Account Key file.

- Note that Hevo only accepts key files in JSON format.

- Click CONFIGURE GOOGLE BIGQUERY ACCOUNT.

- Using a user account to connect:

- To add a Google BigQuery account, click +.

- Log in as a user with BigQuery Admin and Storage Admin permissions.

- To grant Hevo access to your data, click Allow.


- Step 6: Set the following parameters on the Configure your Google BigQuery page:

- Destination Name: A unique name for your Destination.

- Project ID: The ID of the BigQuery project for which you granted Hevo permissions in the previous steps.

- Dataset ID: The name of the dataset to which you want to sync your data.

- GCS Bucket: The Google Cloud Storage bucket in which files are staged before they are uploaded to BigQuery.

- Step 7: Click “Test Connection” to test connectivity with the Google BigQuery warehouse.

- Step 8: Once the test is successful, click “SAVE DESTINATION” to complete the Heroku for PostgreSQL to BigQuery integration.
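Most failed connection tests come down to a mistyped ID or the wrong key file. The following is a minimal Python sketch that pre-checks the Destination values before you paste them into Hevo. The naming rules encoded here reflect our reading of Google Cloud’s documented limits (the bucket rule is simplified and skips dotted names), and the field names checked in the key file are the ones found in standard service account JSON keys; verify both against current Google documentation. The function names are hypothetical.

```python
import json
import re
from typing import List

# Naming rules as we understand Google Cloud's documentation; verify before relying on them.
PROJECT_ID = re.compile(r"[a-z][a-z0-9-]{4,28}[a-z0-9]")
DATASET_ID = re.compile(r"[A-Za-z0-9_]{1,1024}")
# Simplified: ignores dotted bucket names, which may be longer than 63 characters.
BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]")


def check_destination(project_id: str, dataset_id: str, bucket: str) -> List[str]:
    """Return a list of problems with the BigQuery Destination fields."""
    problems = []
    if not PROJECT_ID.fullmatch(project_id):
        problems.append(f"invalid project ID: {project_id!r}")
    if not DATASET_ID.fullmatch(dataset_id):
        problems.append(f"invalid dataset ID: {dataset_id!r}")
    if not BUCKET_NAME.fullmatch(bucket):
        problems.append(f"invalid GCS bucket name: {bucket!r}")
    return problems


def check_key_file(text: str) -> List[str]:
    """Check that a key file looks like a JSON service account key."""
    try:
        key = json.loads(text)
    except json.JSONDecodeError:
        return ["key file is not valid JSON"]
    if not isinstance(key, dict):
        return ["key file must contain a JSON object"]
    problems = []
    if key.get("type") != "service_account":
        problems.append('"type" should be "service_account"')
    for field in ("project_id", "private_key", "client_email"):
        if not key.get(field):
            problems.append(f"missing {field!r}")
    return problems
```

An empty list from both functions means the values at least have the right shape; permissions are still only verified by Hevo’s connection test.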

Method 2: Using Custom Code for Heroku for PostgreSQL to BigQuery Migration

In this Heroku for PostgreSQL to BigQuery integration method, you’ll first move data from Heroku for PostgreSQL to Amazon Redshift, and then from Redshift to BigQuery.

Moving Data from Heroku for PostgreSQL to Amazon Redshift

You can move data from Heroku for PostgreSQL to Amazon Redshift with or without SSL validation. To establish the connection with SSL validation, you must first create a Heroku database. Next, add the Amazon RDS SSL certificate you want your application to use. Once you’ve dumped the database and loaded the dump into Amazon RDS, test the connection.
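The dump-and-load step above is usually done with PostgreSQL’s own pg_dump and pg_restore tools. The following is a minimal Python sketch that only builds those command lines, assuming a custom-format dump, a Heroku source that uses sslmode=require, and an RDS target verified against the RDS CA bundle with sslmode=verify-full. The connection strings, certificate path, and helper names are hypothetical; nothing is executed.

```python
import shlex
from typing import List
from urllib.parse import quote, urlencode


def with_ssl(url: str, root_cert: str) -> str:
    """Add libpq options that verify the server certificate against a CA bundle."""
    separator = "&" if "?" in url else "?"
    options = urlencode(
        {"sslmode": "verify-full", "sslrootcert": root_cert}, quote_via=quote, safe="/"
    )
    return url + separator + options


def dump_command(source_url: str, dump_file: str) -> List[str]:
    """pg_dump in custom format, skipping owners and grants tied to Heroku roles."""
    return ["pg_dump", "--format=custom", "--no-acl", "--no-owner",
            f"--file={dump_file}", f"--dbname={source_url}"]


def restore_command(target_url: str, dump_file: str) -> List[str]:
    """pg_restore into the target database, again skipping owners and grants."""
    return ["pg_restore", "--verbose", "--no-acl", "--no-owner",
            f"--dbname={target_url}", dump_file]


if __name__ == "__main__":
    # Hypothetical connection strings and file paths, for illustration only.
    heroku_url = "postgres://user:password@heroku-host:5432/heroku_db?sslmode=require"
    rds_url = with_ssl("postgresql://admin:password@rds-host:5432/app_db",
                       "/certs/global-bundle.pem")
    for cmd in (dump_command(heroku_url, "heroku.dump"),
                restore_command(rds_url, "heroku.dump")):
        print(" ".join(shlex.quote(part) for part in cmd))
```

Passwords embedded in a command line can be visible to other users on the machine, so a ~/.pgpass file or the PGPASSWORD environment variable is usually the better choice in practice.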

For more details on the connection, refer to the Heroku for PostgreSQL to Redshift article.

Moving Data from Redshift to BigQuery

You’ll use the BigQuery Data Transfer Service to copy your data from an Amazon Redshift data warehouse to Google BigQuery. The service runs migration agents in Google Kubernetes Engine (GKE) and triggers an unload operation from Amazon Redshift to a staging area in an Amazon S3 bucket. Your data is then moved from the Amazon S3 bucket to BigQuery.

Here are the steps involved:

- Step 1: Go to the BigQuery page in your Google Cloud Console.

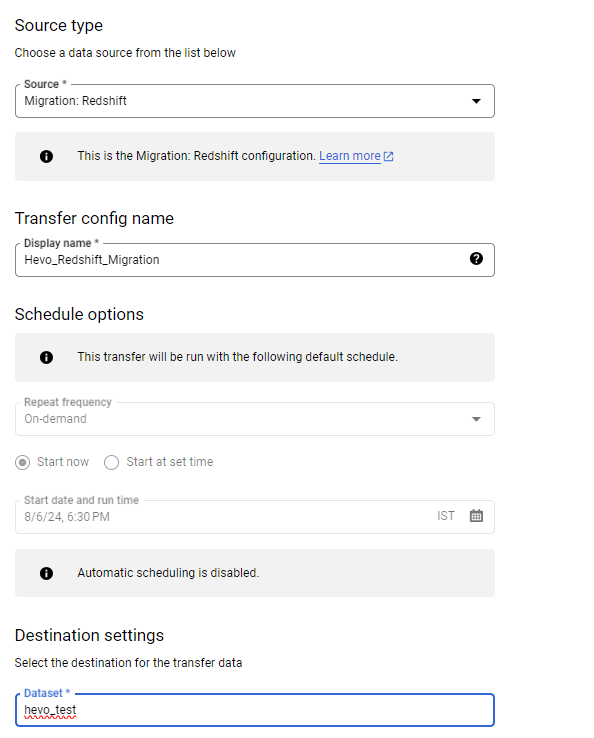

- Step 2: Click “Transfers”. On the New Transfer page, make the following choices:

- For Source, you can pick Migration: Amazon Redshift.

- For Display name, enter a name for the transfer. It can be any value that lets you easily identify the transfer if you need to modify it later.

- For Destination dataset, choose the appropriate dataset.

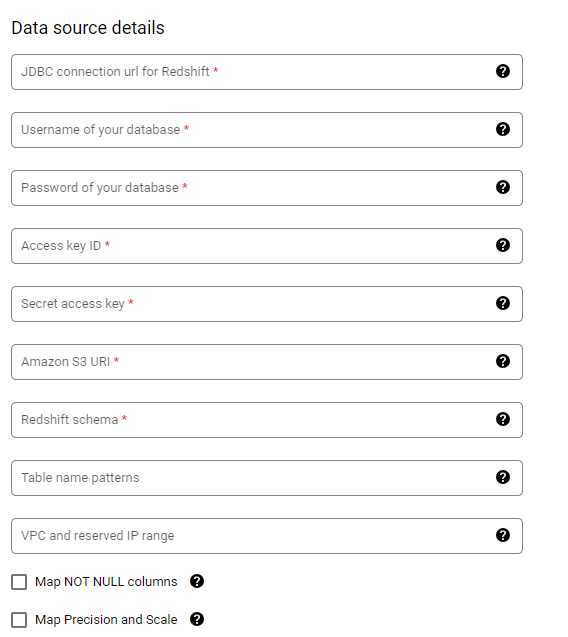

- Step 3: In Data Source Details, enter the details for your Amazon Redshift transfer:

- For JDBC connection URL for Amazon Redshift, enter the JDBC URL used to access the cluster.

- Next, you’ll have to enter the username for the Amazon Redshift database you want to migrate.

- You’ll also have to provide the database password.

- For Access Key ID and Secret Access Key, enter the AWS access key pair that has been granted access to your S3 bucket.

- For Amazon S3 URI, you must enter the URI of the S3 Bucket you’ll leverage as a staging area.

- Under Amazon Redshift Schema, you can enter the schema you want to migrate.

- For Table Name Patterns, you can either specify a pattern or a name to match the table names in the Schema. You can leverage regular expressions to specify the pattern in the following form: <table1Regex>;<table2Regex>. The pattern needs to follow Java regular expression syntax.

- Step 4: Click on “Save”.

- Step 5: The Google Cloud console displays all the transfer setup details, including a Resource name for this transfer.
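Two of the Data Source Details fields are easy to get wrong: the JDBC URL and the Table Name Patterns. The following is a minimal Python sketch, assuming Redshift’s standard jdbc:redshift://endpoint:port/database URL form and the default Redshift port 5439, that builds the URL and previews which tables a semicolon-separated pattern string would select. Python’s re module is close to, but not identical to, the Java regex syntax the transfer expects, and treating each pattern as a whole-name match is our assumption; the helper names and endpoint are hypothetical.

```python
import re
from typing import List


def redshift_jdbc_url(endpoint: str, database: str, port: int = 5439) -> str:
    """Build a JDBC URL for an Amazon Redshift cluster (5439 is Redshift's default port)."""
    return f"jdbc:redshift://{endpoint}:{port}/{database}"


def tables_matching(patterns: str, tables: List[str]) -> List[str]:
    """Return the tables matched by any regex in a '<table1Regex>;<table2Regex>' string."""
    # Python's re is close to, but not identical to, Java regex syntax.
    compiled = [re.compile(p) for p in patterns.split(";") if p]
    # Assumption: a pattern must match the whole table name, not just part of it.
    return [t for t in tables if any(rx.fullmatch(t) for rx in compiled)]


if __name__ == "__main__":
    # Hypothetical cluster endpoint and table names.
    print(redshift_jdbc_url("examplecluster.abc123.us-west-2.redshift.amazonaws.com", "dev"))
    print(tables_matching("orders_.*;customers", ["orders_2023", "orders", "customers"]))
```

Previewing the match list this way helps catch a pattern that is too broad before the transfer unloads unwanted tables to S3.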

What is the Importance of Heroku for PostgreSQL BigQuery Integration?

Integrating Heroku for PostgreSQL with BigQuery offers two benefits: you can query your data offline, away from your production database, and you can analyze it more easily for data-driven decision-making. As a fully managed service, Google BigQuery lets you get the most out of your data without administrative overhead.

Database forks and follower instances in Heroku for PostgreSQL make your data an agile resource that you can use for safe experimentation across various use cases. With Heroku Postgres ETL tools, developers can efficiently migrate and synchronize data across different platforms, and deploying on Heroku Postgres simplifies database management tasks.

With Heroku for PostgreSQL, new data strategies, instances dedicated to analytics, development instances, and data warehousing are ready to go in just a few clicks.

Additional Resources on PostgreSQL to BigQuery Integration

- How to Load Data from PostgreSQL to BigQuery

- PostgreSQL on Google Cloud SQL to BigQuery

- Migrate Data from Postgres to MySQL

- How to migrate Data from PostgreSQL to SQL Server

- Connecting PostgreSQL to Power BI

Conclusion

In this guide, we’ve walked you through two effective methods to integrate Heroku for PostgreSQL with BigQuery: custom scripts and Hevo’s no-code Data Pipeline solution. While custom scripts offer flexibility, they can be complex and time-consuming. On the other hand, Hevo simplifies the process, enabling real-time data synchronization with minimal effort.

Sign up for a 14-day free trial with Hevo for seamless data integration. Also, check out Hevo’s pricing to choose the best plan for your needs!

FAQ Heroku for PostgreSQL to BigQuery

How to Transfer Data from Postgres to BigQuery?

To transfer data from PostgreSQL to Google BigQuery, you can use several methods depending on your requirements and resources:

1. Using data transfer services such as Hevo, Fivetran

2. Custom ETL Pipeline with Python and Google BigQuery

3. Manual Import and Export
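For option 2, a common pattern is to read rows with a PostgreSQL driver, write them as newline-delimited JSON, and load that file with a BigQuery load job. The following is a minimal Python sketch of the serialization step only, assuming rows arrive as dictionaries and that NUMERIC values are sent as strings, timestamps as ISO-style strings, and BYTEA values as base64, which is how we understand BigQuery’s JSON loading to handle those types. The function names are hypothetical.

```python
import base64
import datetime as dt
import decimal
import json
from typing import Any, Dict, Iterable


def _to_bq_json(value: Any) -> Any:
    """Convert driver values that json cannot serialize into JSON-friendly forms."""
    if isinstance(value, dt.datetime):  # check before date: datetime subclasses date
        return value.isoformat(sep=" ")
    if isinstance(value, (dt.date, dt.time)):
        return value.isoformat()
    if isinstance(value, decimal.Decimal):
        # A string keeps NUMERIC precision that a float would lose.
        return str(value)
    if isinstance(value, (bytes, bytearray, memoryview)):
        return base64.b64encode(bytes(value)).decode("ascii")
    raise TypeError(f"cannot serialize {type(value).__name__}")


def rows_to_ndjson(rows: Iterable[Dict[str, Any]]) -> str:
    """Render rows as newline-delimited JSON, one object per line."""
    return "".join(json.dumps(row, default=_to_bq_json) + "\n" for row in rows)
```

The resulting file can then be loaded with the google-cloud-bigquery client, for example via load_table_from_file with a LoadJobConfig whose source_format is NEWLINE_DELIMITED_JSON.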

Can I Use PostgreSQL in BigQuery?

BigQuery does not natively support PostgreSQL as a backend database engine. However, you can use BigQuery’s federated (external) query capabilities with PostgreSQL databases hosted on Cloud SQL, or transfer data from PostgreSQL to BigQuery for analysis. Either way, you can use BigQuery’s data processing and analytics features while keeping PostgreSQL as the source of your data.
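Federated queries go through BigQuery’s EXTERNAL_QUERY table function, which takes a connection ID and a query written in the source database’s own SQL dialect. The following is a minimal Python sketch that builds such a statement, assuming a BigQuery connection to a Cloud SQL for PostgreSQL instance already exists; the connection ID, table, and helper names are hypothetical. It escapes backslashes, double quotes, and newlines so the PostgreSQL query survives as a BigQuery string literal.

```python
def bigquery_string_literal(text: str) -> str:
    """Quote text as a double-quoted BigQuery string literal."""
    escaped = (
        text.replace("\\", "\\\\")  # backslashes first, so later escapes survive
        .replace('"', '\\"')
        .replace("\n", "\\n")
    )
    return f'"{escaped}"'


def external_query_sql(connection_id: str, postgres_sql: str) -> str:
    """Wrap a PostgreSQL query in BigQuery's EXTERNAL_QUERY table function."""
    return (
        "SELECT * FROM EXTERNAL_QUERY("
        f"{bigquery_string_literal(connection_id)}, "
        f"{bigquery_string_literal(postgres_sql)});"
    )


if __name__ == "__main__":
    # Hypothetical connection ID in project.location.connection form.
    print(external_query_sql("my-project.us.my-pg-connection",
                             "SELECT id, email FROM customers"))
```

The inner query runs on the PostgreSQL side, so filtering there keeps the amount of data pulled into BigQuery small.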

Can You Host a PostgreSQL Database on Heroku?

Yes, you can host a PostgreSQL database on Heroku. Heroku offers a managed PostgreSQL service called Heroku Postgres. It provides fully managed PostgreSQL databases, including automatic backups, scaling, and high availability.


Comment below to tell us about your experience of connecting Heroku for PostgreSQL to BigQuery!