Key Takeaways

Transferring data from AWS RDS Oracle to Snowflake can be done either by staging the data in Amazon S3 and loading it with the COPY command, or in two simple steps using an automated tool like Hevo.

Here’s a detailed breakdown of the steps:

Method 1: Using Hevo Data

Step 1: Configuring AWS RDS Oracle as the Source

Step 2: Configuring Snowflake as the Destination

Method 2: Using Amazon S3

Step 1: Migrating Data from AWS RDS Oracle to S3

Step 2: Data Transfer from Amazon S3 to Snowflake


Integrating the data held in operational databases into a data warehouse has become an essential part of many business workflows. Doing so lets organizations take a more data-driven approach when deciding what steps will improve business performance.

Amazon RDS Oracle is a popular relational database service that companies use to store data. Moving this data into a data warehousing environment like Snowflake can enable you to generate valuable insights that benefit your business. This article will discuss some popular methods to move your data from AWS RDS Oracle to Snowflake.


Why Integrate AWS RDS Oracle to Snowflake?

- Integrating data from AWS RDS Oracle to Snowflake can help you apply advanced data analytics and machine learning to your data.

- It can be a cost-effective way to get data management features such as ACID compliance and multi-partitioning.

- You can use Snowflake to scale your data beyond the limits of Amazon RDS Oracle.

Method 1: Migrate AWS RDS Oracle to Snowflake Using Hevo Data

In this method, you migrate your data using an automated, no-code data pipeline in just two steps. It is efficient and less prone to errors.

Method 2: Convert AWS RDS Oracle to Snowflake Table Using Amazon S3

This method involves moving the data into an S3 bucket and then importing it into Snowflake. It is more time-consuming than the first method.

An Overview of AWS RDS for Oracle

Amazon Relational Database Service (RDS) Oracle is a cloud relational database service enabling you to create, manage, and scale your database.

Amazon RDS for Oracle offers hourly pricing, so you pay according to your requirements without any upfront fee. It saves you time by handling database administration tasks such as backups, monitoring, and software patching.

The Amazon RDS Multi-AZ feature provides high availability and durability, with availability of up to 99.95%. To learn more about it, read Amazon RDS for Oracle.

An Overview of Snowflake

Snowflake is a fully managed data warehouse platform providing a single interface for data lakes, warehouses, application development, and real-time data sharing. It offers maintenance, supervision, and upgrade features in a cloud environment.

With its Elastic Multi-Cluster Compute feature, Snowflake can manage any workload with a single scalable engine. You can use Snowpark to create AI models, develop pipelines, and securely deploy applications on the cloud. To learn more about Snowflake, refer to Snowflake Architecture and Concepts.

Method 1: Migrate AWS RDS Oracle to Snowflake Using Hevo Data

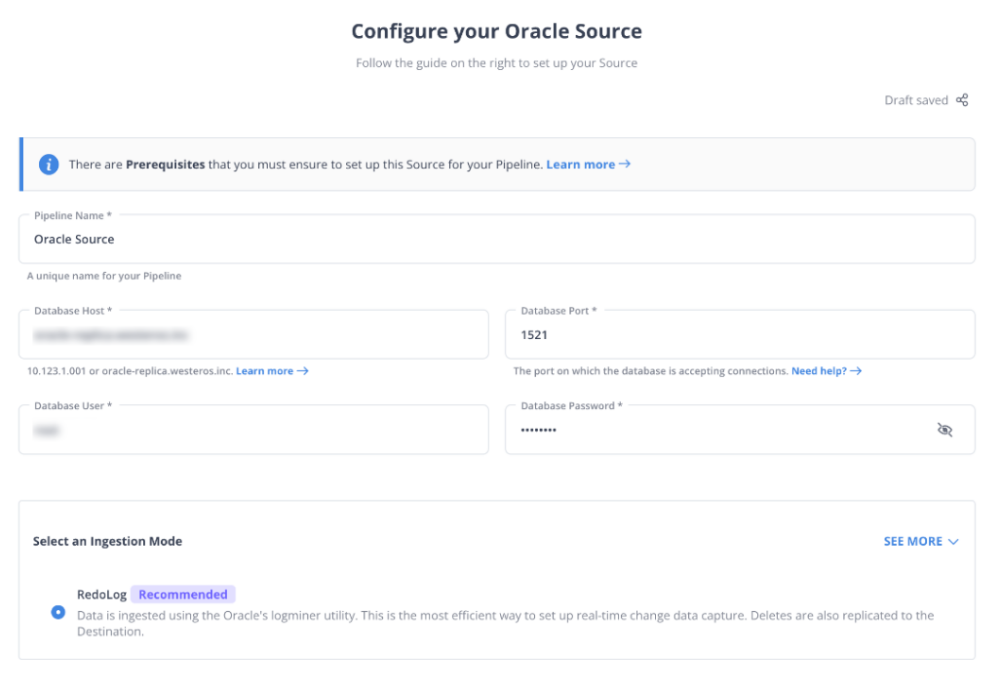

Step 1: Configuring AWS RDS Oracle as the Source

Before proceeding with the steps involved in setting up AWS RDS Oracle as a source, you must ensure that all the prerequisites are satisfied.

Prerequisites

- Oracle 12c+.

- Enable Redo Log replication, if you plan to use it.

- Whitelist Hevo IPs and make sure the RDS security group allows connections from them.

- Grant SELECT privileges.

- Have the database port and hostname.

- Be a Team Admin, Pipeline Admin, or Collaborator in Hevo.
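As an illustration of the "Grant SELECT privileges" prerequisite, the following minimal sketch generates one `GRANT SELECT` statement per table for the database user that the pipeline connects as. The user, schema, and table names are hypothetical, and the exact set of privileges Hevo needs is defined in its own documentation; this only shows the general shape of the statements you would run as a privileged Oracle user.

```python
import re

# Oracle unquoted identifiers: start with a letter, then letters, digits, _, $ or #.
_IDENTIFIER = re.compile(r"^[A-Za-z][A-Za-z0-9_$#]*$")


def build_select_grants(user, tables):
    """Return one GRANT SELECT statement per (schema, table) pair."""
    statements = []
    for schema, table in tables:
        # Reject anything that is not a plain identifier before building SQL text.
        for name in (user, schema, table):
            if not _IDENTIFIER.match(name):
                raise ValueError(f"unsafe identifier: {name!r}")
        statements.append(f"GRANT SELECT ON {schema}.{table} TO {user}")
    return statements


if __name__ == "__main__":
    # HEVO_USER, SALES, ORDERS and CUSTOMERS are hypothetical names.
    tables = [("SALES", "ORDERS"), ("SALES", "CUSTOMERS")]
    for statement in build_select_grants("HEVO_USER", tables):
        print(statement + ";")
```

Generating the statements from a list keeps the grants limited to the tables you actually intend to replicate.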

After satisfying all the prerequisite conditions, configure the source.

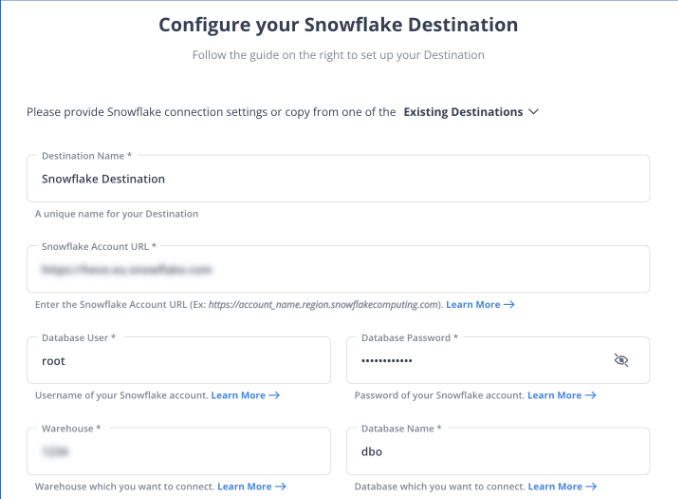

Step 2: Configuring Snowflake as the Destination

In this section, you will configure Snowflake as your destination. But before proceeding, make sure the prerequisites are satisfied.

Prerequisites

- Active Snowflake account.

- ACCOUNTADMIN, SECURITYADMIN, and SYSADMIN roles as needed.

- Hevo requires various USAGE, CREATE, and MODIFY permissions.

- Team Collaborator or any admin role (except Billing Admin) in Hevo.

- Obtain your Snowflake Account URL.

- Optionally, create a Snowflake warehouse for Hevo.
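The optional warehouse and the permission prerequisites can be sketched as a handful of Snowflake statements. The example below only builds the SQL text; the warehouse, database, and role names are hypothetical, and the privileges shown are an illustrative subset rather than the exact list Hevo requires.

```python
def build_snowflake_setup(warehouse, database, role):
    """Return SQL statements that create a small warehouse and grant access to a role."""
    return [
        # A small warehouse that suspends itself after 60 idle seconds.
        f"CREATE WAREHOUSE IF NOT EXISTS {warehouse} "
        "WITH WAREHOUSE_SIZE = 'XSMALL' AUTO_SUSPEND = 60 AUTO_RESUME = TRUE",
        # Let the role run queries on that warehouse.
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role}",
        # Let the role use the database and create schemas in it.
        f"GRANT USAGE, MODIFY, CREATE SCHEMA ON DATABASE {database} TO ROLE {role}",
    ]


if __name__ == "__main__":
    # HEVO_WH, ANALYTICS_DB and HEVO_ROLE are hypothetical names.
    for statement in build_snowflake_setup("HEVO_WH", "ANALYTICS_DB", "HEVO_ROLE"):
        print(statement + ";")
```

You would typically run statements like these with a role that is allowed to create warehouses and grant privileges, such as the admin roles listed in the prerequisites.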

After satisfying the prerequisites, configure the destination.

Following these steps, you can easily sync AWS RDS Oracle to Snowflake. To learn more about the steps involved, refer to Hevo Data Snowflake Documentation.

Method 2: Convert AWS RDS Oracle to Snowflake Table Using Amazon S3

With this method, you first move your data from Amazon RDS Oracle to Amazon S3 and then load it into Snowflake using the COPY command. If you would rather automate the second leg, read Amazon S3 to Snowflake ETL to migrate data from Amazon S3 to Snowflake using Hevo Data.

Step 1: Migrating Data from AWS RDS Oracle to S3

In this section, you must move your data from the AWS RDS Oracle instance to Amazon S3.

- You must create an IAM policy for your Amazon RDS role.

- Optionally, you can create an IAM policy for your S3 bucket.

- You must create an IAM role and attach your policies to it.

- You must associate your IAM role with your RDS Oracle DB instance.

- You must add the Amazon S3 integration option to allow data transfer between AWS RDS Oracle and S3.

- You can then move your data from RDS Oracle to S3 by following the steps for transferring files between RDS Oracle and S3.
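The IAM policy from the first step can be sketched as a JSON document. The example below builds a policy that lets the RDS role list a bucket and read and write objects in it; the bucket name and the statement ID are hypothetical, and you may want to narrow the resources to a specific prefix.

```python
import json


def build_s3_integration_policy(bucket):
    """Return an IAM policy document granting list, read and write access to one bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "RdsOracleS3Integration",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket", "s3:PutObject"],
                # ListBucket applies to the bucket, Get/PutObject to the objects in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


if __name__ == "__main__":
    # "my-export-bucket" is a hypothetical bucket name.
    print(json.dumps(build_s3_integration_policy("my-export-bucket"), indent=2))
```

The printed JSON can be pasted into the IAM console when you create the policy, or saved to a file and passed to your usual provisioning tooling.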

Following the above-mentioned steps, you can quickly move your data from AWS RDS to S3. For more information about the steps involved, read Amazon RDS Oracle and S3 integration documentation.

Step 2: Data Transfer from Amazon S3 to Snowflake

Following the steps mentioned in this section, you can quickly move your data from S3 to Snowflake.

- You need to create an external (S3) stage that specifies the location of the stored data files to access and load the data into a table. To do so, follow the steps mentioned in creating an S3 stage.

- You can use the COPY INTO <table> command to load the data from the files in the stage you created in the previous step.
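The two steps above can be sketched as a pair of Snowflake statements. The example builds the SQL text for an external stage and for the load itself. The stage, table, bucket, and storage integration names are hypothetical, and it assumes the files in S3 are CSV files with a header row and that a storage integration for the bucket already exists.

```python
def build_stage_sql(stage, bucket, prefix, storage_integration):
    """Return a CREATE STAGE statement pointing at an S3 location."""
    return (
        f"CREATE STAGE IF NOT EXISTS {stage} "
        f"URL = 's3://{bucket}/{prefix}' "
        f"STORAGE_INTEGRATION = {storage_integration} "
        # Assumes CSV files with one header line and optionally quoted fields.
        "FILE_FORMAT = (TYPE = CSV FIELD_OPTIONALLY_ENCLOSED_BY = '\"' SKIP_HEADER = 1)"
    )


def build_copy_sql(table, stage):
    """Return a COPY INTO statement that loads every file in the stage."""
    return f"COPY INTO {table} FROM @{stage} ON_ERROR = 'ABORT_STATEMENT'"


if __name__ == "__main__":
    # oracle_stage, my-export-bucket, s3_int and orders are hypothetical names.
    print(build_stage_sql("oracle_stage", "my-export-bucket", "oracle/", "s3_int") + ";")
    print(build_copy_sql("orders", "oracle_stage") + ";")
```

`ON_ERROR = 'ABORT_STATEMENT'` stops the load at the first bad record, which is a cautious choice for a first migration. The target table must already exist with columns that match the files.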

You can refer to the Bulk Loading Guide from Amazon S3 to learn more about the steps involved.

Limitations of Using Amazon S3 to Transfer Data from Amazon RDS Oracle to Snowflake

Although both methods mentioned above transfer data efficiently, there are certain limitations associated with using Amazon S3 to transfer data from Amazon RDS Oracle to Snowflake.

- Lack of Automation: This method lacks the automation essential for seamless data integration. You are required to perform manual tasks to transfer large datasets, which can consume valuable time.

- Lack of Real-Time Data Integration: Manual transfers cannot keep the destination in sync with the source in real time. You must reload the Snowflake tables regularly so that changes made at the source appear at the destination.
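To make the manual effort concrete, here is a minimal sketch of the kind of bookkeeping you would have to maintain yourself with this method: comparing the files currently in S3 with the ones you have already loaded, so that each scheduled run only copies what is new. The function name and the example keys are hypothetical.

```python
def pending_files(staged_keys, loaded_keys):
    """Return the S3 keys that are staged but not yet loaded, in sorted order."""
    loaded = set(loaded_keys)
    return sorted(key for key in staged_keys if key not in loaded)


if __name__ == "__main__":
    # Hypothetical object keys for three daily exports.
    staged = ["oracle/orders_0102.csv", "oracle/orders_0101.csv", "oracle/orders_0103.csv"]
    already_loaded = ["oracle/orders_0101.csv"]
    for key in pending_files(staged, already_loaded):
        print(key)
```

Every piece of this, from listing the bucket to recording what was loaded and scheduling the next run, is work an automated pipeline would otherwise do for you.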

Use Cases of Integrating AWS RDS Oracle to Snowflake

- By loading data from AWS RDS Oracle to Snowflake, you can perform advanced statistical analysis on the data to produce valuable insights.

- Migrating data from AWS RDS Oracle to Snowflake can help you use features like schema evolution, table snapshots, and hidden partitioning.

- Integrating AWS RDS Oracle to Snowflake allows you to analyze your data cost-effectively. Snowflake's cost management features help you optimize performance while minimizing costs.


Conclusion

In this article, you reviewed two of the most popular methods for inserting AWS RDS Oracle data into a Snowflake table. Although both methods are efficient in moving data from AWS RDS Oracle to Snowflake, the second method has certain limitations.

To overcome these limitations, you can use Hevo Data. It automates your data pipeline, reducing the manual effort required. You can connect to 150+ data sources through its easy-to-use interface.

Want to take Hevo for a spin? Explore the 14-day free trial and experience the feature-rich Hevo suite firsthand. Also check out our unbeatable pricing to choose the best plan for your organization.

Share your experience of AWS RDS Oracle to Snowflake integration in the comments section below!

FAQs

1) How to migrate data from RDS to Snowflake?

1. Configure RDS and Snowflake environments.

2. Create an Amazon S3 bucket.

3. Take a snapshot of the RDS instance.

4. Export the snapshot to the S3 bucket.

5. Load the data into Snowflake.

2) How to migrate Oracle DB to Snowflake?

1. Open Hevo.

2. Configure the source as Oracle DB.

3. Configure the destination as Snowflake.

4. Establish the connection.

3) How do I upload data from a local file into Snowflake?

1. Open Snowflake’s home page.

2. Create a database or choose an existing one.

3. Choose an existing table or create a new one.

4. After that, click Upload and select the local file from your computer.