Unlock the full potential of your Intercom data by integrating it seamlessly with Databricks. With Hevo’s automated pipeline, you can get data flowing with minimal effort.

So, you’re trying to replicate data from Intercom to Databricks, right? It’s always a pleasure talking to someone who gives utmost priority to the customer experience. Being focused on providing top-notch support to your customers is what makes you a great player.

At times, you will need to move your support and customer engagement data from Intercom to a data warehouse. That’s where you come in. You take responsibility for replicating data from Intercom to a centralized repository so that analysts and key stakeholders can make fast, business-critical decisions.

What is Intercom?

Intercom is a customer messaging platform. It helps businesses connect with their customers so that they can communicate in a more personal and efficient way. It offers numerous ways to engage customers, including live chat, email campaigns, and targeted messaging. It also provides valuable customer data and insights, helping businesses understand their audience and make better decisions.

What is Databricks?

Databricks is a cloud-based data analytics platform, built by the creators of Apache Spark, that helps you build a data lakehouse architecture. Its lakehouse uses Delta Lake, an open-source storage layer, to combine the speed of data warehousing with the cost-effectiveness of a data lake. Databricks works seamlessly with your existing data lake and is fully compatible with Apache Spark, an open-source engine for processing large data sets.

Getting your Intercom data into Databricks doesn’t have to be a hassle. Hevo makes it smooth and straightforward.

From Intercom to Insights: All in a Few Clicks!

- Seamless Setup: With Hevo’s user-friendly interface, you can transfer your data without writing any code.

- Always Fresh: Hevo syncs your data in near real time without missing a beat.

- Save Time: No need for manual uploads. Automate your data transfers and focus on what really matters to you.

Let Hevo do the heavy lifting for you. Try Hevo’s 14-day trial and experience a one-stop data pipeline solution.

How to Replicate Data From Intercom to Databricks?

To replicate data from Intercom to Databricks, you can use either of the two methods described below.

Prerequisites

- Access to your Intercom and Databricks accounts.

- Permissions to export data from Intercom.

- A Databricks workspace set up to import CSV files (Method 2).

- A Hevo account to configure and manage the data pipeline (Method 1).

Method 1: Replicate Data from Intercom to Databricks Using Hevo

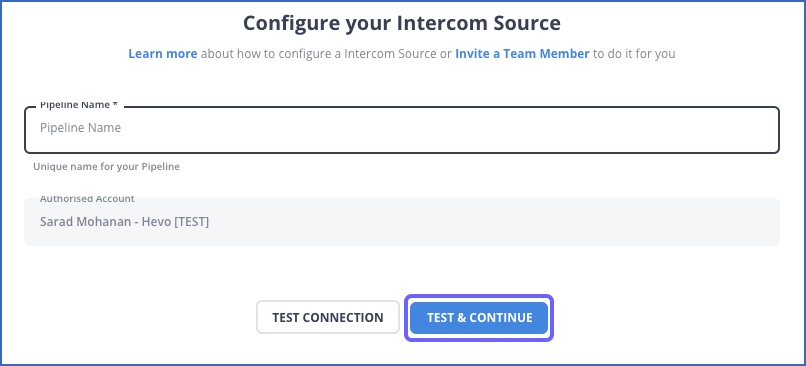

Step 1: Configure Intercom as your Source

- You can select “Intercom App” or “Intercom Webhook” based on your source requirements.

- Fill in the credentials required to configure Intercom as your source.

Step 2: Configure Databricks as your Destination

Now, you need to configure Databricks as the destination.

After you complete these two steps, Hevo builds the pipeline for replicating data from Intercom to Databricks based on the settings you provided for the source and the destination.

Hevo’s fault-tolerant architecture is designed to protect against data loss and keep your data secure. It can also enrich your data and transform it into an analysis-ready form without you having to write a single line of code.

Why You Should Consider Hevo

- Fully Managed: You don’t need to spend time building pipelines. With Hevo’s easy-to-use dashboard, you can keep an eye on every process and have complete control over your data flow.

- Ease of Data Transformation: Clean and transform your data effortlessly using drag-and-drop features or Python scripts. It’s built to handle various use cases with both pre-load and post-load transformation options.

- Faster Insights: Get near real-time data replication, so you can access insights and make decisions faster.

- Automatic Schema Management: Hevo takes care of schema mapping for you, automatically detecting the schema of incoming data and mapping it to your destination schema.

- Scalable Infrastructure: Hevo scales automatically as your data volumes and number of sources grow.

- Clear Pricing: Choose a plan that suits your needs. Hevo’s pricing is straightforward, and you can set credit limits and get alerts for increased data flow.

- 24/7 Live Support: In case of any queries or help, Hevo’s support team is available around the clock via chat, email, and calls.

Method 2: Replicate Data from Intercom to Databricks Using CSV Files

Intercom, being a cloud-based support platform, stores data about conversations, leads, customers, and their engagement. You have to run separate exports for different types of data.

You can also export reports in CSV format.

Follow these steps to replicate data from Intercom to Databricks using CSV files:

Step 1: Export CSV Files from Intercom

- For exporting User or Company data:

  - First, select the users or companies whose data you want to export.

  - Select the column icon in the top-right corner, then select the attributes you want to export.

  - Click the “More” button at the top of the page and choose the “Export” option from the drop-down menu.

  - In the dialog box that appears, choose either “Export with the currently displayed columns” or “Export with all the columns.”

  - The CSV file is delivered to you by email. Note that the link to your CSV file expires after one hour.

- For exporting data from Reports:

  - Go to the Reports tab and select the report you want to export. By default, there are three reports: Lead Generation, Customer Engagement, and Customer Support. Your workspace may also contain custom reports.

  - In the left navigation pane, click the “Export” button.

  - Select the date range. To apply more filters, click the “Add Filter” option and add the filters you need.

  - Select the fields you want to export.

  - Click the “Export CSV” button.
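Before uploading an export, it can help to tidy its column headers. Exported attribute names may contain spaces and punctuation, and Delta tables (the default table format in Databricks) reject some characters, such as spaces and parentheses, in column names unless column mapping is enabled. The sketch below is a minimal Python example, assuming you have the exported CSV text available locally; the function names `to_column_name` and `normalize_headers` and the snake_case convention are our own illustrative choices, not part of Intercom or Databricks. The Databricks upload UI may also let you rename columns, so treat this as an optional pre-processing step.

```python
import csv
import io
import re


def to_column_name(header: str) -> str:
    """Turn a header such as 'Last seen (UTC)' into 'last_seen_utc' (our own convention)."""
    # Collapse every run of non-alphanumeric characters into a single underscore.
    name = re.sub(r"[^0-9a-zA-Z]+", "_", header.strip().lower()).strip("_")
    # Prefix names that are empty or start with a digit, which many SQL dialects dislike.
    if not name or name[0].isdigit():
        name = "col_" + name
    return name


def normalize_headers(csv_text: str) -> str:
    """Return the same CSV with normalized, unique header names; data rows are unchanged."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return csv_text
    used = set()
    header = []
    for raw in rows[0]:
        base = to_column_name(raw)
        name, n = base, 1
        # Append _2, _3, ... until the name no longer collides with an earlier column.
        while name in used:
            n += 1
            name = f"{base}_{n}"
        used.add(name)
        header.append(name)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows[1:])
    return out.getvalue()


if __name__ == "__main__":
    sample = "Name,Email,Last seen (UTC)\nAda,ada@example.com,2024-01-01\n"
    print(normalize_headers(sample))
```

Running the usage example prints the sample with the header `name,email,last_seen_utc`. The sample row is made up for illustration; the headers in your real export depend on the attributes you selected.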

Step 2: Import CSV Files into Databricks

- In the Databricks UI, go to the side navigation bar and click the “Data” option.

- Click the “Create Table” option.

- Drag the required CSV files to the drop zone. Alternatively, browse your local file system and upload them.

Once the CSV files are uploaded, your file path will look like:

/FileStore/tables/<fileName>-<integer>.<fileType>

Step 3: Modify & Access the Data

- Click the “Create Table with UI” button.

- The data is now uploaded to Databricks. You can access it from the Import & Explore Data section on the landing page.

- To modify the data, select a cluster and click the “Preview Table” option.

- Change the attributes as needed and select the “Create Table” option.

This 3-step Intercom to Databricks process works best in the following scenarios:

- Small Data Volumes: This method is appropriate when you have only a few reports and none of them contains a very large number of rows.

- One-Time Data Replication: This method suits your requirements if your business teams need the data only once in a while.

- Limited Data Transformation Options: Manually transforming data in CSV files is difficult and time-consuming. Hence, this method is ideal if the data in your spreadsheets is already clean, standardized, and analysis-ready.

- Dedicated Personnel: If your organization has dedicated people to manually download and upload the CSV files, this task is manageable.
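To judge whether an export is small and clean enough for this manual route, you could run a quick check before uploading it. The minimal Python sketch below counts rows, flags duplicate identifiers, and counts blank values in columns you consider required. It assumes the export has an identifier column; the column name `id`, the `required` tuple, and the `ExportReport`/`check_export` names are hypothetical placeholders to adjust to your actual export.

```python
import csv
import io
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ExportReport:
    row_count: int
    duplicate_ids: list = field(default_factory=list)
    blank_cells: dict = field(default_factory=dict)  # column name -> number of blank values

    @property
    def is_clean(self) -> bool:
        # "Clean" here only means: no duplicate IDs and no blanks in required columns.
        return not self.duplicate_ids and not self.blank_cells


def check_export(csv_text: str, id_column: str = "id", required: tuple = ("id",)) -> ExportReport:
    """Summarize basic quality problems in an exported CSV (hypothetical column names)."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    id_counts = Counter(row.get(id_column) for row in rows)
    duplicates = sorted(key for key, n in id_counts.items() if key and n > 1)
    blanks = {}
    for column in required:
        # A missing or whitespace-only value counts as blank.
        missing = sum(1 for row in rows if not (row.get(column) or "").strip())
        if missing:
            blanks[column] = missing
    return ExportReport(len(rows), duplicates, blanks)


if __name__ == "__main__":
    sample = "id,email\n1,a@example.com\n2,\n1,c@example.com\n"
    report = check_export(sample, required=("id", "email"))
    print(report, report.is_clean)
```

If the report shows many rows or repeated problems on every export, that is a sign the manual CSV route will not scale for you.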

However, as you need to replicate data from Intercom more frequently, this process becomes highly monotonous, and it gets worse when you have to transform the raw data every single time. As the number of data sources grows, you would have to spend a significant portion of your engineering bandwidth creating new data connectors. Just imagine building custom connectors for each source, transforming and processing the data, tracking each data flow individually, and fixing issues. Doesn’t it sound exhausting?

How about focusing on more productive tasks instead of repeatedly writing custom ETL scripts and downloading, cleaning, and uploading CSV files? Sounds good, right?

What Can You Achieve by Replicating Your Data from Intercom to Databricks?

Here’s a little something for the data analyst on your team. We’ve mentioned a few core insights you could get by replicating data from Intercom to Databricks. Does your use case make the list?

- Which in-app issues have the highest average response time for the support tickets they generate?

- In which geography does a particular message have the most engaged customers?

- What percentage of signups from your targeted emails converted into active customers?

- What are the different segments of customers you should target for a particular message?

- Which marketing channels bring in leads that can be targeted with a customized message?
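As an illustration of the first and third questions, here is a minimal Python sketch of the arithmetic involved, run on rows you might query from the replicated tables. The field names (`issue_type`, `first_response_seconds`, `signed_up`, `became_active`) are hypothetical; the actual schema of your Intercom data in Databricks will differ. In practice you would more likely express this as a SQL query or Spark aggregation inside Databricks; plain Python is used here only to show the calculation.

```python
from collections import defaultdict
from statistics import mean


def avg_response_by_issue(tickets):
    """Average first-response time (seconds) per issue type, slowest first."""
    times = defaultdict(list)
    for ticket in tickets:
        times[ticket["issue_type"]].append(ticket["first_response_seconds"])
    averages = {issue: mean(values) for issue, values in times.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


def signup_to_active_rate(contacts):
    """Percentage of email-driven signups that became active customers (0.0 if no signups)."""
    signups = [c for c in contacts if c["signed_up"]]
    if not signups:
        return 0.0
    active = sum(1 for c in signups if c["became_active"])
    return 100.0 * active / len(signups)


if __name__ == "__main__":
    tickets = [
        {"issue_type": "billing", "first_response_seconds": 120},
        {"issue_type": "billing", "first_response_seconds": 60},
        {"issue_type": "login", "first_response_seconds": 300},
    ]
    print(avg_response_by_issue(tickets))
```

With the made-up sample above, `login` (300 seconds) ranks ahead of `billing` (an average of 90 seconds).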

Summing It Up

Exporting and uploading CSV files is reasonable if your data analyst needs fresh data from Intercom only occasionally. However, as the frequency increases, this method becomes tedious and impractical. To solve this issue, you can consider Hevo, an automated data pipeline tool that handles data replication.

Hevo offers an automated, no-code data pipeline with over 150 plug-and-play integrations and transformation capabilities to streamline data replication.

Try Hevo’s 14-day trial to build a data pipeline from Intercom to Databricks and see the results for yourself.

We hope you have found the appropriate answer to the query you were searching for. Happy to help!

FAQs

1. How do I transfer data to Databricks?

With Hevo, it’s super easy! Simply pick Databricks as your destination, select your data source, and Hevo will automatically handle the transfer for you with no coding needed.

2. How do I extract data from Intercom?

You can choose Intercom as a source in Hevo, and it will automatically fetch and sync the data in near real time.

3. How do I give access to Databricks?

Simply select Databricks as your destination in Hevo, then enter the server hostname, HTTP path, and access token. Hevo will securely connect and start transferring your data.