The journey that a customer takes through an eCommerce platform is complicated. They use various devices, such as smartphones and computers, to research items before making a purchase. This research includes visiting numerous websites, utilizing review platforms, and looking into various products. If you want to target a specific group of people, use marketing solutions like Outbrain that leverage emails, SMS, search engine ads, and remarketing to efficiently target potential buyers. You can learn more about product demand trends by looking at ad impressions, click-through rates, conversion rates, and search histories on marketing platforms.

In this article, you will learn how to integrate Outbrain to BigQuery. You will also learn about Outbrain and BigQuery and their key features.

Method 1: Outbrain to BigQuery Integration Using Hevo

Hevo's no-code platform lets you move your Outbrain data to BigQuery without writing any code, with real-time sync and configurable transformations. You can set up the integration in only two steps.

Method 2: Manual Outbrain to BigQuery Integration

Connect Outbrain to BigQuery manually using APIs. This approach requires coding skills and setup, but it allows extensive customization of your data transfer process.

What is Google BigQuery?

Google BigQuery is a Data Warehouse hosted on the Google Cloud Platform that helps enterprises with their analytics activities. This Software as a Service (SaaS) platform is serverless and has outstanding data management, access control, and Machine Learning features (Google BigQuery ML). Google BigQuery excels in analyzing enormous amounts of data and quickly meets your Big Data processing needs with capabilities like exabyte-scale storage and petabyte-scale SQL queries.

Key Features of Google BigQuery

- Fully Managed: An in-house setup is not required since Google BigQuery is a fully managed Data Warehouse. To use Google BigQuery, you only need a web browser to log in to the Google Cloud project.

- Exceptional Performance: Google BigQuery offers efficient storage and fast data scans. Its support for nested tables reduces slot consumption, query time, and the amount of data read.

- Security: Google BigQuery offers column-level security, verifies identity and access status, and lets you establish security policies. All data is encrypted at rest and in transit by default.

- Partitioning: Google BigQuery's decoupled storage and compute architecture uses column-based partitioning to reduce the amount of data that slot workers read from disk.

What is Outbrain?

Outbrain is a large content discovery and native advertising platform that connects publishers with advertisers to increase audience engagement. Its algorithms assess the behavior and interests of users and deliver recommended content on publisher websites. Readers see relevant articles, videos, and other media as non-intrusive native ads, which tend to be more appealing and to achieve higher engagement rates.

Key Features of Outbrain

- Data-Driven Insights: With advanced algorithms and analytics, Outbrain ensures that the relevant content gets recommended to its users based on their behavior.

- Customized targeting options: Users can personalize their advertising by segmenting audiences and running campaigns targeted at each segment's interests.

- Performance Tracking: Advertisers can monitor the performance of their campaign live to make relevant adjustments to maximize engagement and return on investment.

Why Integrate Outbrain to BigQuery?

For marketers, the most challenging aspect of running Outbrain campaigns is the money wasted on redundant adverts. Advertisements for products that are temporarily unavailable, for example, represent a significant financial loss. Solving this problem depends on combining Outbrain data with vital data from other platforms. One reason your Outbrain campaigns may not be producing more income is that you do not have enough specific data, and at this time that information cannot be sent to Outbrain in its original format. Loading Outbrain data into BigQuery lets you analyze it alongside data from those other platforms.

Methods to Integrate Outbrain to BigQuery

Method 1: Outbrain to BigQuery Integration Using Hevo

Hevo supports Google BigQuery as a Destination for loading data from any Source system, including Outbrain.

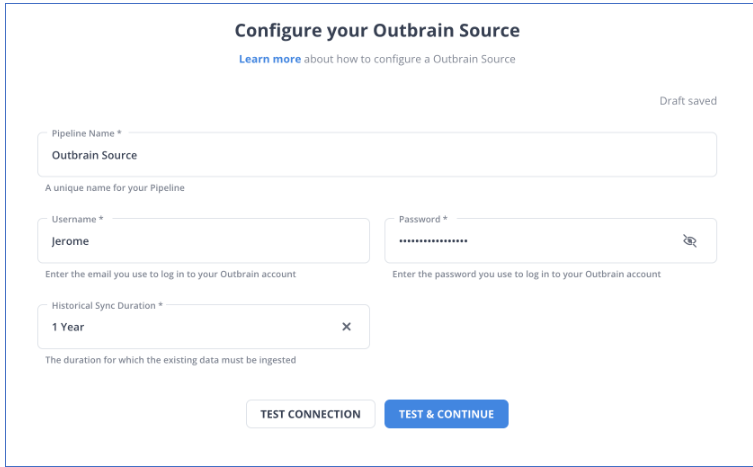

Step 1.1: Configure Outbrain as a Source

Configure Outbrain as the Source in your Pipeline, as shown below.

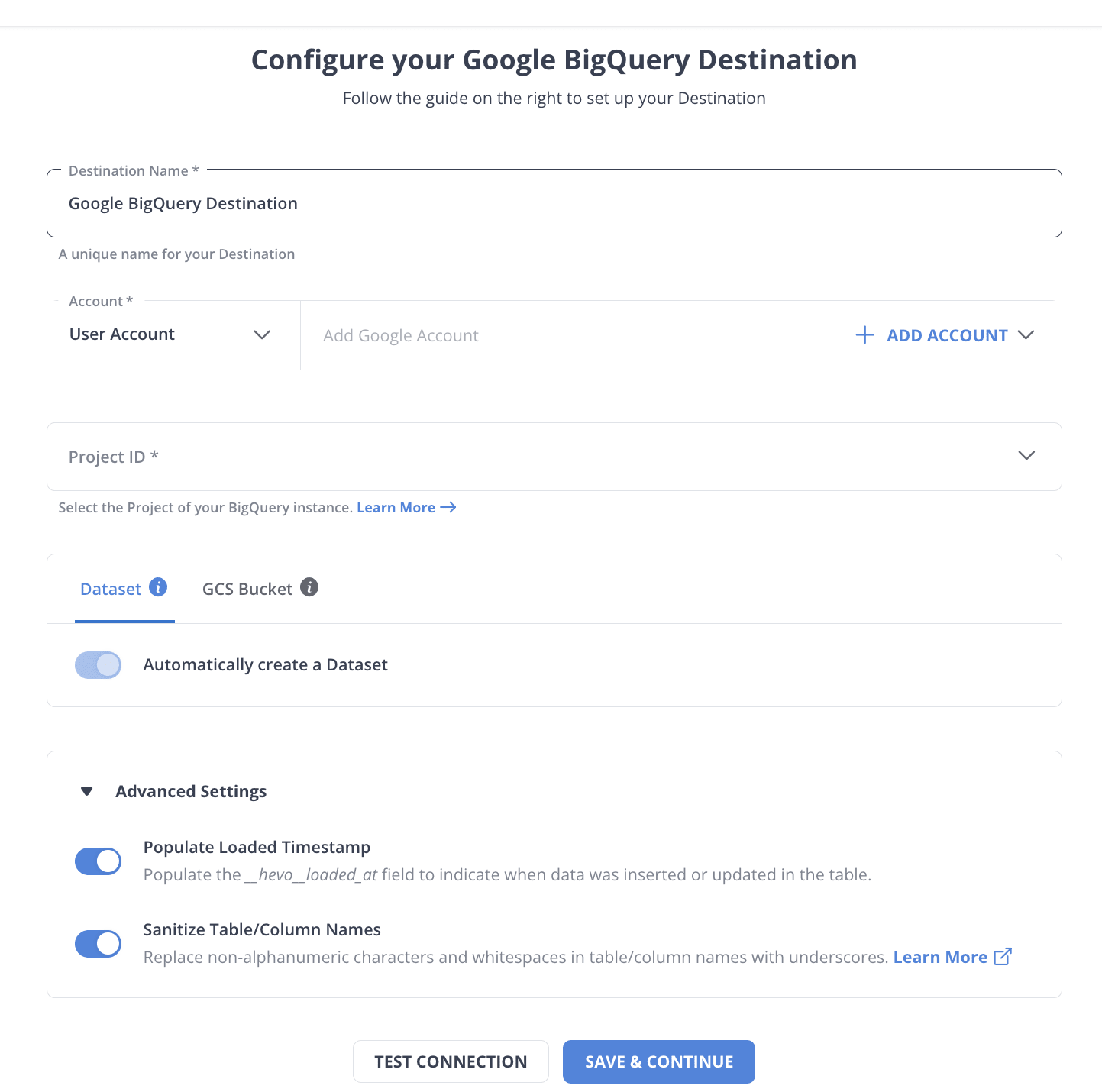

Step 1.2: Configure BigQuery as a Destination

Select BigQuery as your desired destination.

Click TEST CONNECTION to test the connection, and then click SAVE DESTINATION to finish the setup and complete the Outbrain to BigQuery integration.

Method 2: Outbrain to BigQuery Integration Manually

Step 2.1: Get Data out of Outbrain

Outbrain's RESTful Amplify API lets you extract data about marketers, campaigns, performance, and more. With a call such as GET /reports/marketers/[id]/content, you can request performance metrics like impressions, clicks, click-through rate, and cost. To limit, filter, and sort the results, you can use any of a dozen optional parameters.
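As a rough illustration of the call described above, the sketch below assembles the request with Python's standard library without sending it. The base URL, the `OB-TOKEN-V1` header name, and the `from`, `to`, and `limit` query parameters are assumptions made for this example and should be checked against the current Amplify API documentation. The marketer ID and token are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed base URL for the Amplify API; confirm it in Outbrain's documentation.
BASE_URL = "https://api.outbrain.com/amplify/v0.1"


def build_content_report_request(marketer_id, token, start, end, limit=50):
    """Build (but do not send) a GET request for a marketer's content report."""
    # The query parameter names are assumptions for illustration.
    query = urlencode({"from": start, "to": end, "limit": limit})
    url = f"{BASE_URL}/reports/marketers/{marketer_id}/content?{query}"
    # The token header name is also an assumption.
    return Request(url, headers={"OB-TOKEN-V1": token}, method="GET")


request = build_content_report_request(
    "MARKETER_ID", "YOUR_TOKEN", "2017-11-01", "2017-11-30"
)
print(request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would send the request. The response body could then be parsed with `json.load`.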

Step 2.2: Sample the Data

The second step in Outbrain to BigQuery Connection is sampling the data. The following is an example of a JSON response from an API query for performance data:

    {
      "results": [
        {
          "metadata": {
            "id": "00f4b02153ee75f3c9dc4fc128ab041962",
            "text": "Yet another promoted link",
            "creationTime": "2017-11-26",
            "lastModified": "2017-11-26",
            "url": "http://money.outbrain.com/2017/11/26/news/economy/crash-disaster/",
            "status": "APPROVED",
            "enabled": true,
            "cachedImageUrl": "http://images.outbrain.com/imageserver/v2/s/gtE/n/plcyz/abc/iGYzT/plcyz-f8A-158x114.jpg",
            "campaignId": "abf4b02153ee75f3cadc4fc128ab0419ab",
            "campaignName": "Boost 'ABC' Brand",
            "archived": false,
            "documentLanguage": "EN",
            "sectionName": "Economics"
          },
          "metrics": {
            "impressions": 18479333,
            "clicks": 58659,
            "conversions": 12,
            "spend": 9187.16,
            "ecpc": 0.16,
            "ctr": 0.32,
            "conversionRate": 0.02,
            "cpa": 765.6
          }
        }
      ],
      "totalResults": 27830,
      "summary": {
        "impressions": 1177363701,
        "clicks": 2615150,
        "conversions": 2155,
        "spend": 455013.97,
        "ecpc": 0.17,
        "ctr": 0.22,
        "conversionRate": 0.08,
        "cpa": 211.14
      },
      "totalFilteredResults": 1,
      "summaryFiltered": {
        "impressions": 18479333,
        "clicks": 58659,
        "conversions": 12,
        "spend": 9187.16,
        "ecpc": 0.16,
        "ctr": 0.32,
        "conversionRate": 0.02,
        "cpa": 765.6
      }
    }

Step 2.3: Prepare the Data

If you don't already have a data structure in which to store the data you retrieve, you'll need to design a schema for your data tables. For each value returned in the response, identify a predefined datatype (such as INTEGER or DATETIME) and create a table that can store it. The documentation for each endpoint should describe the available fields and their data types.
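One way to approach this step is sketched below: a column list pairs field names from the sample response with BigQuery types, and a small helper flattens each nested result into a single row. The selection of columns and the types assigned to them are assumptions made for illustration, not a schema published by Outbrain.

```python
import json

# Hypothetical column list: names follow the sample response, and the types are
# BigQuery types picked for illustration. Confirm them in the endpoint docs.
SCHEMA = [
    ("id", "STRING"),
    ("campaignId", "STRING"),
    ("campaignName", "STRING"),
    ("status", "STRING"),
    ("lastModified", "DATE"),
    ("impressions", "INTEGER"),
    ("clicks", "INTEGER"),
    ("conversions", "INTEGER"),
    ("spend", "FLOAT"),
    ("ctr", "FLOAT"),
]


def flatten_result(result):
    """Merge the nested "metadata" and "metrics" objects into one flat row."""
    merged = {**result.get("metadata", {}), **result.get("metrics", {})}
    # Keep only the columns named in SCHEMA; absent values become None (NULL).
    return {name: merged.get(name) for name, _type in SCHEMA}


# A trimmed copy of the sample response shown earlier.
sample = json.loads("""
{"results": [{"metadata": {"id": "00f4b02153ee75f3c9dc4fc128ab041962",
                           "campaignId": "abf4b02153ee75f3cadc4fc128ab0419ab",
                           "campaignName": "Boost 'ABC' Brand",
                           "status": "APPROVED",
                           "lastModified": "2017-11-26"},
              "metrics": {"impressions": 18479333, "clicks": 58659,
                          "conversions": 12, "spend": 9187.16, "ctr": 0.32}}]}
""")

rows = [flatten_result(r) for r in sample["results"]]
print(rows[0]["campaignName"], rows[0]["clicks"])
```

Keeping the column list in one place means the same definition can drive both the table creation and the row flattening, so the two are less likely to drift apart.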

Step 2.4: Load Data into BigQuery

- You can perform bulk inserts into an existing table using statements from the data manipulation language (DML), or you can store the results of a query in a new table.

- When you want to create a new table based on the results of a query, use the CREATE TABLE… AS statement.

- Execute a query, and then save the results of that query to a table. You have the option of writing the results to a new table or appending them to an existing table.
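The options in the list above could be scripted roughly as follows. This sketch builds a CREATE TABLE ... AS SELECT statement as text and passes it to a client object. The dataset and table names are hypothetical, and `run_statement` only assumes that the client behaves like `google.cloud.bigquery.Client`, whose `query()` method returns a job with a `result()` method.

```python
def ctas_statement(new_table, source_table, min_impressions):
    """Return a CREATE TABLE ... AS SELECT statement as a string."""
    return (
        f"CREATE TABLE `{new_table}` AS\n"
        f"SELECT id, campaignId, impressions, clicks, spend\n"
        f"FROM `{source_table}`\n"
        f"WHERE impressions >= {int(min_impressions)}"
    )


def run_statement(client, sql):
    """Run one statement and wait for it to finish.

    `client` is expected to behave like google.cloud.bigquery.Client:
    query(sql) returns a job object, and job.result() blocks until done.
    """
    return client.query(sql).result()


# Hypothetical dataset and table names, used only for illustration.
sql = ctas_statement(
    "marketing.outbrain_top_content", "marketing.outbrain_content", 1000
)
print(sql)
```

Building the statement separately from running it makes the SQL easy to inspect or log before it touches the warehouse. Table names are interpolated directly here, so they should come from trusted configuration rather than user input.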

Step 2.5: Keeping Outbrain Data Up to Date

After gathering the data and transferring it to the data warehouse, the next task is adding new or updated data from Outbrain to BigQuery without creating duplicates. Instead of re-importing everything from Outbrain, track changes using fields that increase over time, such as “updated at” or “created at” timestamps. Your script can then run as a cron job or in a continuous loop to fetch new updates. The code also needs ongoing maintenance, because changes to the Outbrain API or to user requirements may require updates to keep it running without errors and integrating data correctly.
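A minimal sketch of this change-tracking idea, assuming the `lastModified` field from the sample response serves as the tracking field: each run keeps only the rows modified after the previous high-water mark, then advances the mark. Where the mark is stored between runs is left out.

```python
from datetime import date


def select_changed(rows, last_synced):
    """Return rows modified after `last_synced` plus the new high-water mark."""
    changed = [
        row for row in rows
        if date.fromisoformat(row["lastModified"]) > last_synced
    ]
    # Advance the watermark only as far as the newest row actually seen.
    newest = max(
        (date.fromisoformat(row["lastModified"]) for row in changed),
        default=last_synced,
    )
    return changed, newest


rows = [
    {"id": "a", "lastModified": "2017-11-24"},
    {"id": "b", "lastModified": "2017-11-26"},
]
changed, watermark = select_changed(rows, date(2017, 11, 25))
print([row["id"] for row in changed], watermark)
```

With day-level values like these, a strict comparison can miss rows that change later on the watermark day. Re-reading the most recent day and deduplicating on `id` is one common workaround.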

Conclusion

In this article, you learned two methods of integrating Outbrain to BigQuery. The first method used Hevo, and the second method transferred the data manually. You also learned why you need to integrate Outbrain to BigQuery.

However, as a developer, extracting complex data from a diverse set of data sources like databases, CRMs, project management tools, streaming services, and marketing platforms to your database can be quite challenging. If you are from a non-technical background or are new to data warehousing and analytics, Hevo can help!

Moreover, Hevo lets you directly load data to your desired destination. Try a 14-day free trial to explore all features. You can also have a look at our unbeatable pricing that will help you choose the right plan for your business needs!

Frequently Asked Questions

1. How do you load data into BigQuery?

Prepare your data in CSV or JSON format, navigate to your BigQuery project, select the target dataset, and upload the files directly or reference them in Cloud Storage. Once you have set the schema and your load options, start the load to bring the data into your table.

2. How to load data from API to BigQuery?

First, pull the data out of the API with a script or a data integration tool that can make API calls. Then transform the data into a format that BigQuery understands, such as JSON or CSV, and load it into BigQuery tables through the BigQuery Data Transfer Service or directly through the BigQuery API.
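For the transformation step, one option is newline-delimited JSON, which BigQuery accepts for JSON loads. The sketch below writes one object per line to any file-like handle. The row contents are made up for the example.

```python
import io
import json


def write_ndjson(rows, handle):
    """Write one JSON object per line and return the number of rows written."""
    count = 0
    for row in rows:
        handle.write(json.dumps(row, ensure_ascii=False) + "\n")
        count += 1
    return count


# An in-memory buffer stands in for a real file here.
buffer = io.StringIO()
written = write_ndjson(
    [{"id": "a", "clicks": 10}, {"id": "b", "clicks": 25}], buffer
)
print(written)
print(buffer.getvalue(), end="")
```

Writing to a handle rather than a fixed path lets the same function target a local file, a temporary file, or an in-memory buffer before upload.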

3. How do I merge two datasets in BigQuery?

Use the SQL MERGE statement to merge two datasets in BigQuery. MERGE inserts, updates, or deletes rows in a target table based on data from a source table. You specify the conditions that identify matching rows and the action (insert, update, or delete) to take for each case.
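As an illustration of the pattern, the helper below assembles a MERGE statement that updates rows whose key already exists in the target and inserts the rest. The table and column names are hypothetical. The function only produces the SQL text and does not run it.

```python
def merge_statement(target, source, key, columns):
    """Build a MERGE that updates matching rows and inserts the others."""
    updates = ", ".join(f"{col} = S.{col}" for col in columns)
    all_columns = [key, *columns]
    insert_cols = ", ".join(all_columns)
    insert_vals = ", ".join(f"S.{col}" for col in all_columns)
    return (
        f"MERGE `{target}` AS T\n"
        f"USING `{source}` AS S\n"
        f"ON T.{key} = S.{key}\n"
        f"WHEN MATCHED THEN\n"
        f"  UPDATE SET {updates}\n"
        f"WHEN NOT MATCHED THEN\n"
        f"  INSERT ({insert_cols}) VALUES ({insert_vals})"
    )


# Hypothetical staging and target tables.
sql = merge_statement(
    "marketing.outbrain_content",
    "marketing.outbrain_content_staging",
    "id",
    ["clicks", "spend"],
)
print(sql)
```

Loading fresh data into a staging table and merging on a stable key is one way to keep repeated loads from duplicating rows.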