Unlock the full potential of your PostgreSQL on Amazon RDS data by integrating it with Azure Synapse. With Hevo’s automated pipeline, you can get data flowing with minimal effort. Watch our 1-minute demo below to see it in action!

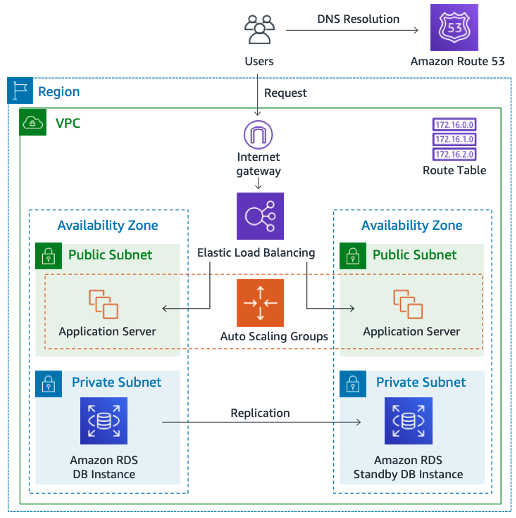

Transferring data from PostgreSQL on Amazon RDS to Azure Synapse is essential for harnessing advanced analytics and data-driven decision-making. Amazon RDS provides a robust relational database, while Azure Synapse offers powerful analytics capabilities.

By moving data into a centralized analytics platform like Synapse, you can bring together sales, inventory, and customer data. This integration enables real-time insights, allowing you to uncover sales trends, tailor marketing strategies, manage inventory levels, and improve customer experiences.

In this article, we explore two ways to seamlessly shift data, enabling you to tap into the full potential of your data for growth and insights.

Method 1: Move Data from PostgreSQL on Amazon RDS to Azure Synapse Using CSV Files

Export your data manually from PostgreSQL on Amazon RDS into CSV files, and then upload them to Azure Synapse for further processing.

Method 2: Migrate from PostgreSQL on Amazon RDS to Azure Synapse Using Hevo

Use Hevo’s automated, no-code pipeline to transfer data from PostgreSQL on Amazon RDS to Azure Synapse, with real-time sync and pre-load and post-load transformations.


Method 1: Load data from PostgreSQL on Amazon RDS to Azure Synapse using CSV Files

For this method, keep the following prerequisites in mind:

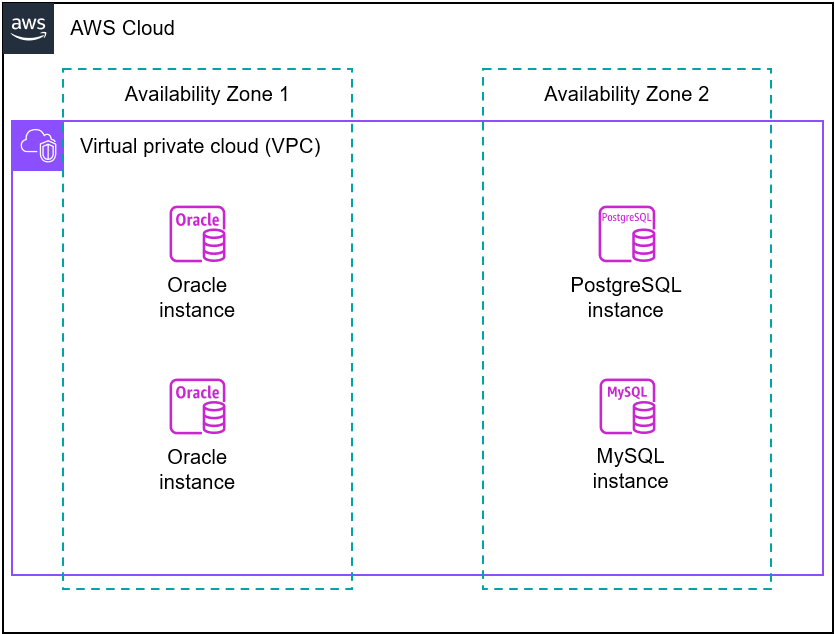

- AWS Account: You’ll need an AWS account with the necessary permissions to access your Amazon RDS for PostgreSQL instance.

- RDS Instance: An AWS RDS PostgreSQL instance set up and running with the data you want to export.

- Azure Account: You need an Azure account with access to Azure Blob Storage.

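- psql: The PostgreSQL command-line client, installed on your local machine.

- Azure CLI and AzCopy: Both installed on your local machine.

- Azure Synapse Analytics: A Synapse workspace with the target table you want to load the data into.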

Step 1.1: Export Data to CSV from AWS RDS for PostgreSQL

- Connect to your AWS RDS instance

- Log in to the AWS Management Console and go to the AWS RDS service.

- Select your PostgreSQL database instance and get the connection details, including the endpoint, port, username, and password.

- Use the psql CLI to connect to your RDS PostgreSQL instance with these details:

psql -h rds-endpoint -p 5432 -U username -d database

Replace rds-endpoint, username, and database with the appropriate values. 5432 is the default PostgreSQL port; use your instance's port if it differs.

- Export data to CSV

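- Use psql's \copy meta-command to export the results of a query into a CSV file on your local machine. A plain server-side COPY ... TO 'file' writes to the database server's file system, which Amazon RDS does not let you access; \copy instead streams the data to the client running psql. The whole \copy command must be written on a single line.

\copy (SELECT * FROM table_name) TO '/local_directory/file.csv' DELIMITER ',' CSV HEADER

- Replace table_name, local_directory, and the CSV file name with your own values.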

- DELIMITER ',' specifies that the CSV file uses a comma as the delimiter.

- CSV HEADER writes the column names as the first row of the CSV file.

- Check the local directory to confirm that the CSV file was written correctly.
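The file check in the last step can be scripted. The sketch below uses a hypothetical helper named check_csv, built on Python's standard csv module. It confirms that the header row matches the columns you expect and that every data row has the same number of fields, then returns the number of data rows, which you can compare with SELECT count(*) on the source table. It assumes the file was exported with CSV HEADER and is UTF-8 encoded.

```python
import csv
from pathlib import Path


def check_csv(path: str, expected_columns: list[str]) -> int:
    """Validate an exported CSV file and return its number of data rows.

    Raises ValueError if the header row differs from expected_columns or if
    any data row has a different number of fields than the header.
    """
    with Path(path).open(newline="", encoding="utf-8") as f:
        reader = csv.reader(f)
        header = next(reader, None)  # None for an empty file
        if header != expected_columns:
            raise ValueError(f"unexpected header: {header!r}")
        rows = 0
        for row in reader:
            if len(row) != len(header):
                # reader.line_num counts physical lines read so far, so it
                # stays accurate even when a quoted field spans several lines.
                raise ValueError(f"line {reader.line_num}: expected "
                                 f"{len(header)} fields, got {len(row)}")
            rows += 1
        return rows
```

For example, check_csv("/local_directory/file.csv", ["id", "amount"]) would return the row count for a two-column export; the column names here are placeholders for your table's own columns.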

Step 1.2: Upload CSV Files to Azure Blob Storage Container

To import CSV files into Azure Synapse Analytics with this method, you first stage them in an intermediate storage service such as Azure Blob Storage, Microsoft Azure's cloud-based object storage service. Azure Blob Storage integrates with many other Azure services, which makes it easy to build data-driven workflows within the Azure ecosystem.

To get started, transfer the CSV files from your local machine to Azure Blob Storage through Azure CLI or the Azure portal. Afterward, use the Azure Blob Storage connector to move data from Blob Storage to Synapse Analytics.

- First, you need to create an Azure Blob Storage container.

- Install the Azure CLI and sign in to your Azure account:

az login

- Create an Azure Blob Storage container using the az storage container create command:

az storage container create \
    --name $your_containerName \
    --account-name $your_storageAccount \
    --auth-mode login

Replace $your_containerName with the desired container name and $your_storageAccount with the name of your Azure Storage account.

- Ensure that the CSV files are properly formatted and ready to upload from your local machine.

- Use the AzCopy command to copy CSV files to the Azure Blob Storage container. AzCopy is a command-line utility provided by Microsoft for efficient data transfer to and from Azure Storage services, including Blob Storage.

- Refer to the following AzCopy command:

azcopy copy 'path/to/local/csv_files' 'https://your-storage-account-name.blob.core.windows.net/your-containerName' --recursive=true

- path/to/local/csv_files should point to the directory containing your CSV files on your local machine.

- https://your-storage-account-name.blob.core.windows.net/your-containerName is the URL of the Azure Blob Storage container you want to upload the data to. AzCopy must be authorized to write to it, either by running azcopy login first or by appending a SAS token to the URL.

- --recursive=true copies the contents of the directory, including any subdirectories.

- By following these steps, you’ve created an Azure Blob Storage container and loaded your CSV files into it.
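If you script these steps, it helps to check names before calling Azure, because a container name such as salesData or sales_data is rejected for its uppercase letter or underscore. The sketch below encodes the documented naming rules for blob containers and storage accounts, builds the container URL used in the AzCopy command, and assembles the AzCopy arguments without running them. The helper names and the placeholder account and container names are hypothetical; it assumes AzCopy is installed and that you either ran azcopy login or have a SAS token for the container.

```python
import re
import shlex
from typing import Optional


def is_valid_container_name(name: str) -> bool:
    """Blob container rules: 3-63 characters; lowercase letters, digits and
    hyphens only; starts and ends with a letter or digit; no '--'.
    (Special containers such as $root are not covered.)"""
    return (3 <= len(name) <= 63
            and re.fullmatch(r"[a-z0-9-]+", name) is not None
            and not name.startswith("-")
            and not name.endswith("-")
            and "--" not in name)


def is_valid_storage_account_name(name: str) -> bool:
    """Storage account names: 3-24 characters, lowercase letters and digits."""
    return re.fullmatch(r"[a-z0-9]{3,24}", name) is not None


def container_url(account: str, container: str,
                  sas_token: Optional[str] = None) -> str:
    """Blob endpoint URL of a container, optionally with a SAS token appended."""
    if not is_valid_storage_account_name(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    if not is_valid_container_name(container):
        raise ValueError(f"invalid container name: {container!r}")
    url = f"https://{account}.blob.core.windows.net/{container}"
    if sas_token:
        # A SAS token is the URL's query string; tolerate a leading '?'.
        url += "?" + sas_token.lstrip("?")
    return url


def azcopy_upload_args(local_dir: str, account: str, container: str,
                       sas_token: Optional[str] = None) -> list[str]:
    """Arguments for: azcopy copy <local_dir> <container URL> --recursive=true"""
    return ["azcopy", "copy", local_dir,
            container_url(account, container, sas_token),
            "--recursive=true"]


if __name__ == "__main__":
    # Placeholder names. Without a SAS token, run 'azcopy login' first.
    args = azcopy_upload_args("path/to/local/csv_files",
                              "yourstorageaccount", "csv-exports")
    # shlex.join quotes arguments for a POSIX shell, which matters once a
    # SAS token (containing '&') is part of the URL.
    print(shlex.join(args))
```

To run the upload from Python instead of printing the command, you could pass the list to subprocess.run(args, check=True); passing a list rather than one string avoids shell-quoting problems with the SAS token.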

Step 1.3: Upload to Azure Synapse

You can use Azure Data Factory to create data pipelines that automate the process of copying data from Azure Blob Storage to Azure Synapse Analytics. Azure Data Factory provides built-in connectors for Blob Storage and Synapse Analytics, making it simple to orchestrate data transfer.

- Create an Azure Data Factory Instance

- Log in to your Azure portal.

- Click Create a resource, then search for Data Factory.

- Follow the prompts to create an Azure Data Factory instance: choose a subscription, resource group, region, and name, then review and create it.

- In your Azure Data Factory, create two linked services: one for Azure Blob Storage and the other for Synapse Analytics.

- Configure both linked services with the connection details they need to access your storage account and your Synapse workspace, respectively.

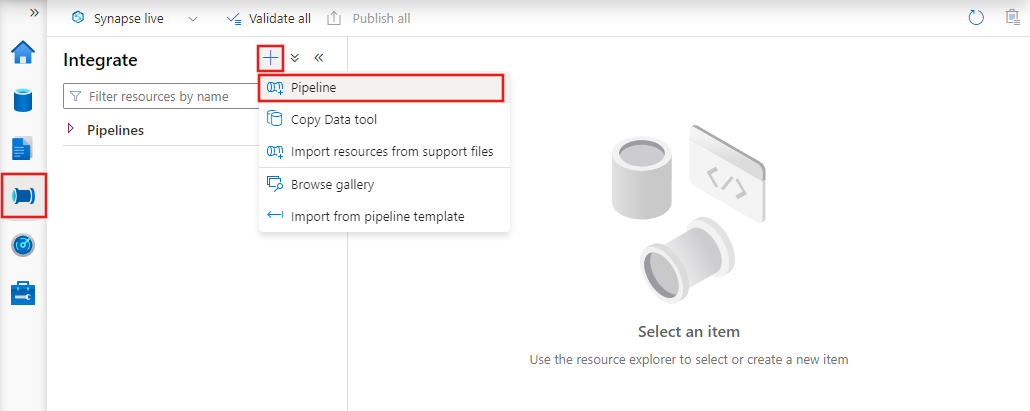

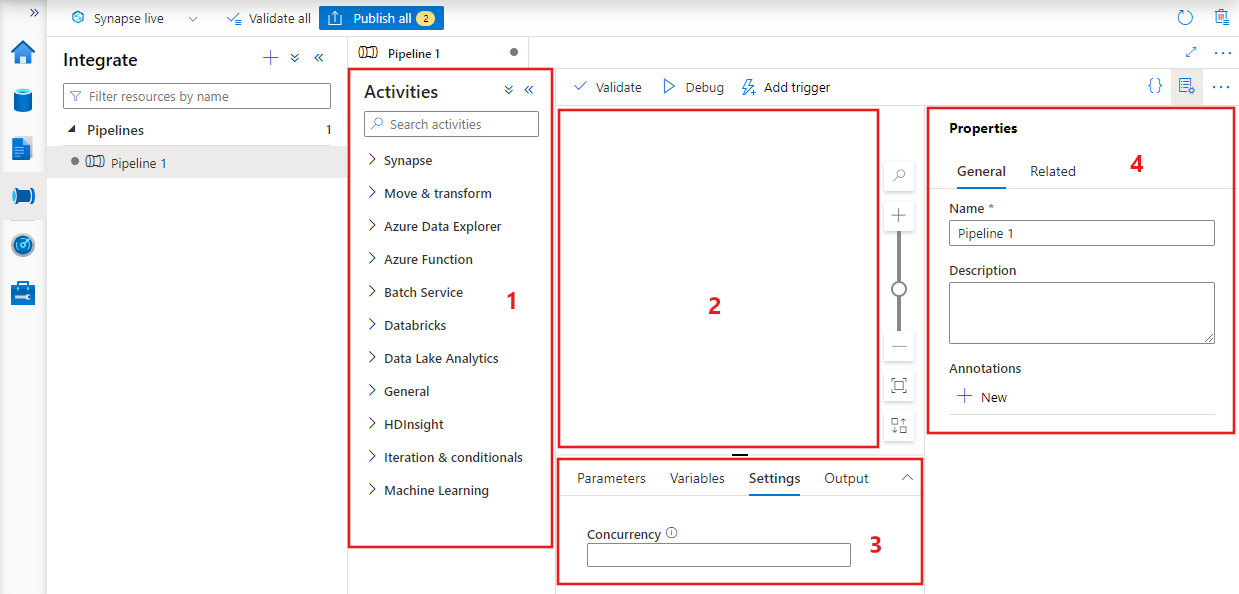
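Behind the portal forms, Data Factory stores each linked service as a JSON definition. The sketch below builds minimal definitions for the two linked services using ADF's AzureBlobStorage type and its AzureSqlDW type (used for Synapse dedicated SQL pools), each configured with a connection string. The names and placeholder connection strings are hypothetical, real setups usually keep secrets in Azure Key Vault rather than in the definition, and newer connector versions may expect different properties, so compare the output with the JSON ADF shows for your own linked services.

```python
import json


def blob_linked_service(name: str, connection_string: str) -> dict:
    """Minimal ADF linked service definition for Azure Blob Storage."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


def synapse_linked_service(name: str, connection_string: str) -> dict:
    """Minimal ADF linked service definition for a Synapse dedicated SQL pool."""
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDW",
            "typeProperties": {"connectionString": connection_string},
        },
    }


if __name__ == "__main__":
    # Placeholder values only: never commit real keys or passwords.
    blob = blob_linked_service(
        "BlobCsvStaging",
        "DefaultEndpointsProtocol=https;AccountName=<account>;AccountKey=<key>")
    synapse = synapse_linked_service(
        "SynapseTarget",
        "Server=tcp:<workspace>.sql.azuresynapse.net,1433;Database=<pool>;"
        "User ID=<user>;Password=<password>;Encrypt=True")
    print(json.dumps([blob, synapse], indent=2))
```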

- Create a Data Pipeline

- Open Azure Data Factory Studio (the Author & Monitor option in older versions of the portal).

- Click the Author tab, create a new pipeline, and add a Copy Data activity to it.

- In the Copy Data activity, configure the source dataset to point to your Azure Blob Storage linked service and specify the CSV files’ location. Configure the destination dataset to point to your Synapse Analytics linked service and specify the target table.

- You can schedule your pipeline to run at specific intervals (hourly, daily) if you want automated, regular data transfers. You can also manually trigger the pipeline to test the data transfer process.

- Use the monitoring and debugging feature to monitor the progress of your data pipeline.
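The Copy Data activity and schedule configured in the UI are also stored by Data Factory as JSON. The sketch below generates a simplified version of both: a pipeline with one Copy activity that reads delimited text (DelimitedTextSource) and writes to Synapse (SqlDWSink), plus a schedule trigger for that pipeline. The pipeline, dataset, and trigger names are hypothetical, the two datasets are assumed to exist already, and real definitions usually carry more settings (such as format and store settings), so treat this as an outline to compare against what ADF Studio generates rather than a complete deployment template.

```python
import json

# Recurrence frequencies accepted by ADF schedule triggers.
_FREQUENCIES = {"Minute", "Hour", "Day", "Week", "Month"}


def copy_pipeline(name: str, source_dataset: str, sink_dataset: str) -> dict:
    """Pipeline with a single Copy activity: CSV in Blob -> Synapse table."""
    return {
        "name": name,
        "properties": {
            "activities": [{
                "name": "CopyCsvFromBlobToSynapse",
                "type": "Copy",
                "inputs": [{"referenceName": source_dataset,
                            "type": "DatasetReference"}],
                "outputs": [{"referenceName": sink_dataset,
                             "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "SqlDWSink"},
                },
            }],
        },
    }


def schedule_trigger(name: str, pipeline: str, frequency: str,
                     interval: int, start_time: str) -> dict:
    """Trigger that runs `pipeline` every `interval` units of `frequency`."""
    if frequency not in _FREQUENCIES:
        raise ValueError(f"unsupported frequency: {frequency!r}")
    if interval < 1:
        raise ValueError("interval must be a positive integer")
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {"frequency": frequency, "interval": interval,
                               "startTime": start_time, "timeZone": "UTC"},
            },
            "pipelines": [{"pipelineReference": {
                "referenceName": pipeline, "type": "PipelineReference"}}],
        },
    }


if __name__ == "__main__":
    pipeline = copy_pipeline("CopyCsvToSynapse", "BlobCsvDataset",
                             "SynapseTableDataset")
    # Daily run at 02:00 UTC, starting from a placeholder date.
    trigger = schedule_trigger("DailyCsvLoad", "CopyCsvToSynapse", "Day", 1,
                               "2024-01-01T02:00:00Z")
    print(json.dumps(pipeline, indent=2))
    print(json.dumps(trigger, indent=2))
```

In ADF Studio, a new or changed trigger takes effect only after you publish it; Trigger now runs the pipeline once, which is the quicker way to test the copy before scheduling it.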

These steps will help you transfer data from PostgreSQL on Amazon RDS to Azure Synapse.

PostgreSQL on Amazon RDS to Azure Synapse data replication using CSV files is suitable for the following scenarios:

- One-Time Backups: This method is particularly well-suited for scenarios where you only require a single, one-time backup operation with small to moderate data volumes.

- Data Security: Manually loading data allows you to implement data security measures, ensuring sensitive information is protected during both import and export processes. As you manually export specific AWS RDS PostgreSQL data into CSV files, you have the flexibility to customize the data selection. You can also enforce extra security features, like encryption and secure FTP, to maintain confidentiality during the transfer process.

Limitations of using CSV files for PostgreSQL on Amazon RDS to Azure Synapse Migration

- Human Error: The CSV approach is susceptible to human errors during extraction, transformation, and loading. For example, exporting the same rows more than once leads to duplicate data in the target table, mishandled format conversions can cause data type mismatches or data loss, and inaccurate data validation rules can let incorrect data into the target system.

- Latency: Exporting data from RDS PostgreSQL in CSV format and storing it in Azure Blob Storage before loading it into Synapse introduces extra steps. This can potentially cause delays, especially when dealing with larger datasets. It necessitates ongoing manual interventions for data updates, schema mapping, and maintaining data quality, which could affect data accessibility. This approach may not be suitable for real-time analysis or immediate reporting needs.
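A cheap guard against the duplication risk described under Human Error is to check the exported files for repeated primary-key values before uploading them. This matters because Synapse dedicated SQL pools only support PRIMARY KEY and UNIQUE constraints as NOT ENFORCED, so the warehouse will not reject duplicates on its own. The sketch below assumes every CSV has a header row and that the table has a single-column key whose name you pass in; it reports each key value that appears more than once across all the files.

```python
import csv
from collections import Counter
from pathlib import Path
from typing import Iterable


def duplicate_keys(paths: Iterable[str], key_column: str) -> dict[str, int]:
    """Return {key value: occurrences} for keys seen more than once.

    Counts are taken across all files, so a row exported twice into two
    different CSV files is caught as well.
    """
    counts = Counter()
    for path in paths:
        with Path(path).open(newline="", encoding="utf-8") as f:
            reader = csv.DictReader(f)
            # fieldnames comes from the header row; None means an empty file.
            if reader.fieldnames is None or key_column not in reader.fieldnames:
                raise ValueError(f"{path}: no column named {key_column!r}")
            for row in reader:
                counts[row[key_column]] += 1
    return {key: n for key, n in counts.items() if n > 1}
```

An empty result means every key value is unique across the files; a non-empty result points at rows that were exported more than once and would otherwise be loaded twice. The key column name (for example, a hypothetical id column) is whatever your table actually uses.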

Method 2: Using a No-Code Tool Like Hevo Data to Build PostgreSQL on Amazon RDS to Azure Synapse ETL Pipeline

Step 2.1: Connect to Amazon RDS PostgreSQL as a Data Source

Step 2.2: Connect to Azure Synapse as a Destination

Features Offered by Hevo

- Pre-built Connectors: Hevo offers 150+ built-in connectors to cater to diverse data integration needs. These connectors help you collect data from multiple sources and load it into your desired warehouse or destination. Hevo provides connectors for cloud storage, databases, APIs, event streaming, SaaS applications, and custom sources, which simplifies the data integration process.

- Drag-and-Drop Transformation: You can use Hevo’s drag-and-drop interface to design and configure data pipelines. Drag-and-drop transformations give you a code-free way to clean and enrich the ingested data before it is sent to the destination. For complex transformations, you can use Python code-based transformations instead.

- Customer Support: Hevo Data offers customer support through various channels, including email, chat, and phone, along with comprehensive documentation and technical assistance to help you with data integration challenges.

What Can You Achieve with PostgreSQL on Amazon RDS to Synapse Integration?

Integrating PostgreSQL on Amazon RDS with Azure Synapse can give you insights into sales, customer, and team performance. Here are some of them:

Sales Analytics

- Combine data from AWS RDS PostgreSQL and Azure Synapse to analyze sales performance metrics such as revenue, profit margins, and sales growth trends.

- Use historical sales data to develop predictive models for sales forecasting, helping with inventory planning and resource allocation.

- You can assess the performance of your sales team by analyzing sales quotas, conversion rates, and individual contributions.

Customer Analytics

- Segment customers based on purchase behavior, demographics, and interests. Identify high-value, regular customers and tailor marketing strategies accordingly.

- You can utilize customer data to create personalized marketing campaigns, product recommendations, and shopping experiences.


Conclusion

This blog discusses two approaches for seamlessly connecting PostgreSQL on Amazon RDS to Azure Synapse. If your data transfers from Amazon RDS PostgreSQL to Azure Synapse are infrequent, the first method is suitable. However, if you need real-time data replication, an automated solution like Hevo is the way to go. The best part is that Hevo simplifies the process of moving data between various sources and destinations while reducing the risk of human errors.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Frequently Asked Questions

1. How do I migrate a PostgreSQL database to Azure SQL Database?

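You can export the data (for example, as CSV files or SQL scripts) and import it into Azure SQL Database, converting the schema and any PostgreSQL-specific data types along the way. Note that Azure Database Migration Service (DMS) migrates PostgreSQL to Azure Database for PostgreSQL rather than to Azure SQL Database.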

2. What is the Azure Synapse equivalent in AWS?

The AWS equivalent of Azure Synapse is Amazon Redshift. Both are cloud-based data warehousing solutions designed for analytics.

3. How do I migrate PostgreSQL on RDS to Aurora?

You can use Hevo to seamlessly migrate data from PostgreSQL RDS to Aurora, ensuring real-time replication without coding. Alternatively, you can use AWS DMS for the migration.