Unlock the full potential of your PostgreSQL on Amazon RDS data by integrating it seamlessly with Snowflake. With Hevo’s automated pipeline, get data flowing effortlessly—watch our 1-minute demo below to see it in action!

Are you struggling to connect PostgreSQL on Amazon RDS to Snowflake? Do you find it challenging to integrate the two platforms and leverage the powerful analytics features of Snowflake? You’re not alone. To analyze the vast amounts of data stored in PostgreSQL databases on Amazon RDS, integration with Snowflake becomes a viable solution.

In this guide, we will explore two simple methods for migrating data from PostgreSQL Amazon RDS to Snowflake.

Let’s dive in!

Method 1: Move Data from PostgreSQL on Amazon RDS using CSV files

This method has two steps: exporting the data to CSV files and then importing the CSV files into Snowflake. Though both methods are simple compared to the second one, this one can be pretty time-consuming.

Method 2: Using an automated data pipeline solution – Hevo

It is an easy way to load data in minutes; all that is to be done is to configure the source and the destination. It is a no-code method that any user can understand and use.

Discover why BeepKart chose Hevo with a 14-day free trial.

Get Started with Hevo for FreeTable of Contents

Prerequisites To How to Connect PostgreSQL on Amazon RDS to Snowflake?

Keep the following prerequisites in mind for the PostgreSQL on Amazon RDS to Snowflake migration process:

- Access to PostgreSQL database on Amazon RDS.

- An active Snowflake account.

Method 1: Move Data from PostgreSQL on Amazon RDS using CSV files

Step 1: Export Data from PostgreSQL on Amazon RDS to a CSV File

- Use the psql to connect to your Amazon RDS PostgreSQL database. You’ll require the necessary credentials like, the hostname (endpoint) of your RDS instance, database name, username, and password. To access your Amazon RDS PostgreSQL database, enter the following command:

psql -h rds_endpoint -U username -d database_nameReplace rds_endpoint, username, and database_name with the appropriate values.

- Now, you can execute the COPY TO command to export data from a table to a CSV file.

COPY table_name TO 'file_path/file_name.CSV' WITH CSV HEADER;Replace table_name with the name of the table from where you want to export data. Replace file_path/file_name.CSV with the name and path of the CSV file.

- After executing the above command, specified table data will be copied into a CSV file. Make sure the CSV files are in the correct format and that the structure matches the target Snowflake table. Verify and clean the data as per the requirement before loading it into the Snowflake table.

Step 2: Create a Snowflake Internal Stage

- To load CSV files from a local machine into a Snowflake table, you would need to create an internal stage. This stage will map to a location within your Snowflake account. It’ll be used to temporarily store the CSV files during the data loading process. You can create an internal stage using the following command in SnowSQL CLI:

CREATE STAGE internal_stage_nameReplace internal_stage_name with the name of your choice for the stage.

- Now you need to upload CSV files from your local machine into the Snowflake internal stage. This is achieved by using the PUT command. Execute the below command to upload CSV files into the internal stage:

PUT file:///path_to_local_csv_file/file.csv @internal_stage_name;Replace /path_to_local_csv_file/file.csv with the path and name of the CSV file on your local machine, and @internal_stage_name with the internal stage name that you’ve just created.

Step 3: Upload CSV Files to Snowflake

- Before uploading the data, ensure you have a target table in Snowflake with the correct schema that matches the CSV file’s structure. You can create the table in Snowflake using a CREATE TABLE statement.

- Finally, use the COPY INTO command to load data from the internal stage into the Snowflake table.

COPY INTO snowflake_table_name

FROM <internal_stage_name>/<path_to_csv_file>

FILE_FORMAT = (TYPE = ‘CSV’);Replace snowflake_table_name with the name of the target table you want to load data into. Also, update the path and CSV filename you uploaded to the internal stage.

That’s it! You’ve successfully loaded data from your PostgreSQL on Amazon RDS into Snowflake using the CSV approach.

Limitations of Manual Migration from PostgreSQL on Amazon RDS to Snowflake

There are several drawbacks to performing PostgreSQL on Amazon RDS to Snowflake ETL using CSV files. Here are some of them:

- Time-Consuming: Downloading PostgreSQL data from Amazon RDS to CSV and uploading it to Snowflake can be slow, especially with large datasets.

- Data Consistency and Validation: It is critical to ensure consistency between PostgreSQL and Snowflake. Without proper testing and validation, data integrity issues may arise, leading to inaccuracies.

- Data Security: While Snowflake offers strong security, careful access control during manual migration is essential to prevent vulnerabilities and ensure secure data transfer.

Method 2: Automating the Data Replication Process Using a No-Code Tool

Automating data replication from your PostgreSQL on Amazon RDS to Snowflake using a no-code tool provides significant advantages compared to using CSV files. Here are some of them:

- User-Friendly: No-code tools are easy to use, allowing non-technical users to manage data replication without writing code, making synchronization efficient.

- Integration with Multiple Data Sources: These tools provide pre-built connectors for seamless integration of multiple data sources, simplifying data consolidation and management.

- Real-Time or Scheduled Updates: No-code tools support real-time or scheduled updates, ensuring your analytics platform remains up to date without manual effort.

Hevo Data is a popular no-code tool for automating data replication. It provides a simple and intuitive interface for setting up data pipelines between PostgreSQL on Amazon RDS and Snowflake.

To migrate data from PostgreSQL on Amazon RDS to Snowflake using Hevo Data, follow these steps:

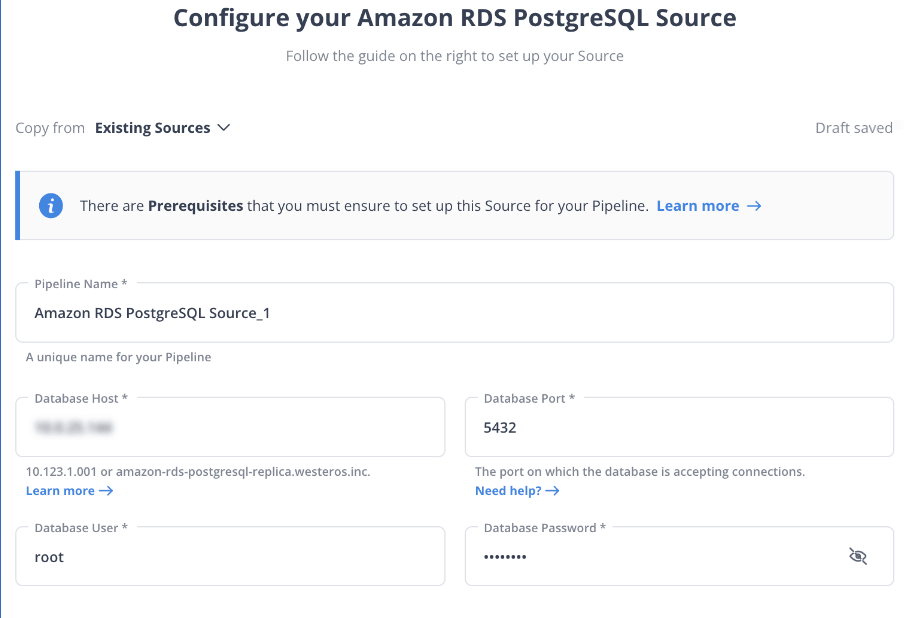

Step 1: Configure Amazon RDS PostgreSQL as Source

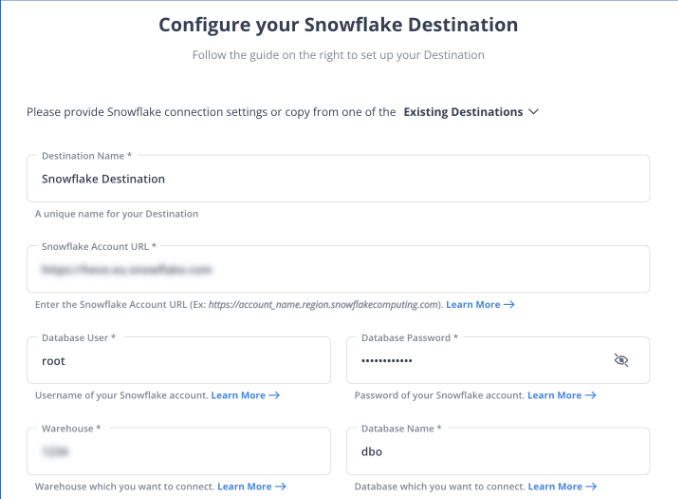

Step 2: Configure Snowflake as Destination

You have successfully connected your PostgreSQL database on Amazon RDS to Snowflake. Now you can use Snowflake to work with your PostgreSQL data, allowing you to explore and analyze your data with ease.

What can you Achieve by Migrating Data from PostgreSQL on Amazon RDS to Snowflake?

Here are some of the analyses you can perform after PostgreSQL on Amazon RDS to Snowflake replication:

- Explore sales funnel stages for valuable insights.

- Analyze email touchpoints to segment highly engaged customers.

- Evaluate employee performance using data from project management and HR platforms.

- Integrate data from multiple departments (Sales, Marketing, HR) to answer key questions, such as identifying high-conversion marketing campaigns, monitoring social media engagement, and assessing financial performance for overall company growth.

Conclusion

- PostgreSQL on Amazon RDS to Snowflake integration can be achieved by using CSV files or with an automated no-code tool.

- With CSV files you get more control and flexibility, allowing you to tailor data transformations to your needs. However, it requires a higher level of expertise and effort to implement.

- On the other hand, no-code tools like Hevo Data are an excellent choice for a simple and efficient data integration solution.

- Hevo’s user-friendly interface and pre-built connectors make the integration process easy and accessible to users of all levels without coding expertise.

With real-time data streaming and transformation capabilities, Hevo enables seamless integration and data synchronization between PostgreSQL on Amazon RDS and Snowflake. This functionality ensures that you can work with up-to-date data, facilitating timely and data-driven decision-making.

If you don’t want SaaS tools with unclear pricing that burn a hole in your pocket, opt for a tool that offers a simple, transparent pricing model. Explore the 14-day free trial to check out the rich features of Hevo.

Schedule a demo to see if Hevo would be a good fit for you, today!

FAQs

1) How do we migrate data from RDS to Snowflake?

1. Configure Snowflake and RDS environments.

2. Next, create a S3 Bucket.

3. Take a snapshot of the Amazon RDS instance.

4. Export the snapshot to S3 Bucket.

5. Load the data into Snowflake.

2) How to load data from the database to Snowflake?

Using the web interface:

1. In your Snowflake account, create a new table or choose an existing one to load your data into.

2. In the table details, select the Load button to open the Data Wizard, load the desired file into your table, and select the data warehouse you need.

3) Why is Snowflake faster than PostgreSQL?

This is due to the Snowflake architecture, which allows for independent scaling of compute resources and scaling, ensuring optimal performance tailored to your needs.