One of Snowflake’s greatest strengths is how fast it can ingest structured and unstructured data. But, understanding different aspects of handling Snowflake requires effort. You need a guide to figure out how Snowflake handles its JSON objects and arrays in JSON data. This is precisely what we are going to cover in this blog.

In this post, you will look at how to work with JSON files in Snowflake and how to directly query the Snowflake JSON data and copy it into a Snowflake table. Let’s get started!

Table of Contents

Basic JSON Syntax

Rules:

- <KEY>:<VALUE> Data is in key/value pairs

- Data is separated by commas

- Objects are enclosed in curly braces ({})

- Arrays are enclosed in square brackets ([])

"movies":[

{"movie":"Iron Man", "producer":"Avi Arad"},

{"movie":"Sherlock Holmes", "producer":"Joel Silver"},

{"movie":"Dolittle", "producer":"Joe Roth"}

]JSON Syntax Rewrite :

- Key/Value Pairs: JSON data is written as key/value pairs. It consists of a field name (in double quotes), followed by a colon, then the value. For example: “firstName”:”John”.

- Objects: In JSON, objects are a collection of name/value pairs and are enclosed in curly braces {}. An object can contain multiple name/value pairs. Each name is followed by a colon and the name/value pairs are separated by commas. For example: {“firstName”:”John”, “lastName”:”Doe”}.

- Arrays: Arrays in JSON are ordered collections and are enclosed in square brackets []. An array can contain multiple values, which can be strings, numbers, objects, arrays, boolean, null, etc. The values in an array are separated by commas. For example:

"movies":[

{"movie":"Iron Man", "producer":"Avi Arad"},

{"movie":"Sherlock Holmes", "producer":"Joel Silver"},

{"movie":"Dolittle", "producer":"Joe Roth"}

]4. Values: JSON values can be:

- A number (integer or floating point). For example: 100, 3.14.

- A string (must be in double quotes). For example: “Hello”.

- A Boolean (true or false). For example: true, false.

- An array (enclosed in square brackets). For example: [“apple”, “banana”, “cherry”].

- An object (enclosed in curly braces). For example: {“name”:”John”, “age”:30}.

Looking for an easy way to centralize all your data to Snowflake? Hevo Data, a No-Code Data Pipeline platform, helps to transfer data from 150+ Data sources to Snowflake. Hevo completely automates the process of not only exporting data from your desired source but also transforming it into an analysis-ready form. Why choose Hevo?

- Provides real-time sync for better insights.

- Get 24/7 live chat support.

- Provides an automapping feature to automatically map your schema.

Experience why Ebury chose Hevo over Stitch and Fivetran to build complex pipelines with ease and after factoring in the excellent customer service and reverse ETL functionality.

Effortlessly Load your data into Snowflake with HevoWhy Query Snowflake JSON Data?

- In Snowflake, you can natively ingest semi-structured data in JSON and XML, Parquet, Avro, ORC, and other formats. This means that in Snowflake, you can efficiently store JSON data and access it using SQL.

- Snowflake JSON allows you to parse json into columns. You can then query this data with SQL and join it to other structured data without doing any transformations.

- This will enable organizations to reduce the complexity of their data pipelines and increase the speed at which this data becomes available for analysis.

- The ease of Snowflake JSON parsing makes it a preferred choice for organizations seeking streamlined data extraction and analysis from JSON files.

- In addition to this, Snowflake understands nesting, data types, and the difference between objects and lists. Thus, Snowflake offers native support to JSON.

How does Snowflake handle Snowflake JSON Objects?

Snowflake does this using a data type they call a VARIANT. This data type allows semi-structured data to be loaded, as is, into a column called VARIANT in a relational table as you shall see later. As this data is ingested, Snowflake automatically discovers the attributes, keys, and structure that exists in the JSON document. Statistics are also collected in Snowflake’s metadata repository which enables optimization.

Consider an employee table with different employee attributes created using statements as shown below:

create or replace table employee (

id number,

name string.

age number

dob date,

height_in_ft number (3,1),

married boolean,

has_kids boolean

stock_options number.

phone text.

created_at timestamp

);How to Parse JSON in Snowflake?

When working with complex Snowflake JSON data, the ‘snowflake parse JSON into columns’ functionality simplifies the process of extracting structured information. Parse_JSON Snowflake function is a powerful tool that allows you to effortlessly extract and manipulate JSON data within your data warehouse.

Below is an example of using VARIANT Data types to Parse and Insert JSON objects in the Snowflake table.

--a function called parse_json

insert into json_tbl(json_col)

select parse_json("{"firstName":"John", "empid":1001}’);

select from json_tbl;

delete from json_tbl;

insert into json_tbl (json_col)

select parse_json (Column1) from values

('{"firstName": "Name-1", "empid":1001}"),

(‘{"firstName":"Name-2", "empid":1002}'),

(‘{"firstName":"Name-3", "empid":1003}'),

('{"firstName":"Name-4", "empid":1004}'),

(‘{"firstName": "Name-5", "empid":1005}’)Follow the steps below to parse Snowflake JSON data in Snowflake:

Step 1: Create a Table

- Execute a simple create statement.

create or replace table json_table (v variant);- This command creates a single-column table with a column “v”.

Step 2: Load JSON Data

- Load a sample JSON document using a simple

INSERTstatement and Snowflake’sPARSE_JSONfunction.

insert into json_table

select

parse_json(

'{

"fullName":"Robert Downey",

"age":55,

"gender":"Male",

"address": {

"areaCode":"91506",

"suite":"916 West Burbank Blvd"

},

"movies": [

{"name":"Iron Man","budget":"$150M","producer":"Avi Arad"},

{"name":"Sherlock Holmes","budget":"$200M","producer":"Joel Silver"},

{"name":"Dolittle","budget":"$175M","producer":"Joe Roth"}

]

}');In this example, you have an array of highly nested objects. In a production environment, you would use snowpipe or a copy command e.g.

copy into json_table from '@demo_stage/path_to_file/file1.json;OR

copy into json_table from 's3://demo_bucket/file1.json;- Snowflake parses the JSON into sub-columns based on the key-value pairs at load time. These keys are recorded as pointers in metadata for optimization.

- Structural information is dynamically derived based on the schema definition embedded in the JSON string (Schema-on-Read).

- No other characters except colon (:) are allowed after column names, especially for the root element. Also, keys in JSON files are case-sensitive.

Consider another example of an employee table in Snowflake. On execution, the record looks as shown below:

– Let me insert one single record insert into employee_tbl(emp_json)

select parse_json(

{

"name": "John",

"age": 30,

"height_in_ft": 5.11,

"married": true,

"has_kids": false,

"stock_options": null

}');

select * from employee_tbl;Step 3: Start Pulling Data

Let’s get the data from the sub-column “fullName”.

select v:fullName from json_table;| 1 Row Produced | |

| row # | V:FULLNAME |

| 1 | “Robert Downey” |

A colon is used to reference the JSON sub-columns where:

- v = the column name in the json_table table

- fullName = attribute in the JSON schema

- v:fullName = notation to indicate which attribute in column “v” we want to select

Step 4: Casting the Data

The table that was returned in the previous step came back with double quotes around it and now you are going to cast that data into an actual data type.

select v:fullName::string as full_name from json_table;| 1 Row Produced | |

| row # | V:FULL_NAME |

| 1 | Robert Downey |

You used :: to cast to a string data type and added an alias with “as” just like in regular SQL.

select

v:fullName::string as full_name,

v:age::int as age,

v:gender::string as gender

from json_table| 1 Row Produced | |||

| row # | FULL_NAME | AGE | GENDER |

| 1 | Robert Downey | 55 | Male |

On execution, it shows the data type of each attribute

Handling Arrays in Snowflake JSON

You can embed arrays inside Snowflake JSON documents, for example;

"movies": [

{"name": "Iron Man", "budget": "$150", "producer": "Avi Arad"},

{"name": "Sherlock Homes", "budget": "$200", "producer": "Joel Silver"},

{"name": "Dolittle", "budget": "$175", "producer": "Joe Roth"}

]“movies” is a sub-column with an array. There are 3 rows in the array and each row has 3 sub-columns (name, budget, and producer). To pull the data of each row, use FLATTEN with the FROM clause and give it a table alias. FLATTEN takes an array and returns a row for each element in the array. It selects all the data in the array as though it were rows in the table.

select

f.value:name::string as movie_name,

f.value:budget::string as movie_budget,

f.value:producer::string as movie_producer

from json_table, table(flatten(v:movies)) f;f = table alias for the flattened array

name = sub-column in the array

value = returns the value of the sub-column

| 3 Rows Produced | |||

| row # | MOVIE_NAME | MOVIE_BUDGET | MOVIE_PRODUCER |

| 1 | Iron Man | $150M | Avi Arad |

| 2 | Sherlock Holmes | $200M | Joel Silver |

| 3 | Dolittle | $175M | Joe Roth |

Method 1: Parsing JSON Arrays Directly from a Staged JSON File

You can access the first entry in the JSON object array within the JSON data file uploaded as json_sample_data2 like so:

SELECT 'The First Employee Record is '||

S.$1:root[0].employees[0].firstName||

' '||S.$1:root[0].employees[0].lastName

FROM @~/json/json_sample_data2 (file_format => 'json') as S;The returned output would be: The First Employee Record Is: John Doe.

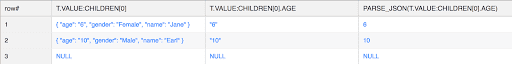

Method 2: Using the PARSE_JSON Function

This method parses text as a JSON document and returns a VARIANT value. If the input is NULL, the output is also NULL. If the input text is ‘null,’ it is processed as a JSON null value; therefore, the output is not a SQL NULL but a legal VARIANT value containing null (the distinction is obvious when displaying this VARIANT value).

SELECT t.value:children[0],

t.value:children[0].age,

parse_json(t.value:children[0].age)

FROM @~/json/json_sample_data.gz (file_format => 'json') as S, table(flatten(S.$1,'root')) t;

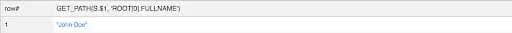

Method 3: Using the GET_PATH Function

The GET_PATH method extracts a value from structured data based on a path name. A GET variation accepts a variation, OBJECT, or ARRAY value as the first parameter. Then, it extracts the VARIANT value of the field or element using the path name as the second argument.

Path name notation is a typical JavaScript notation consisting of a concatenation of field names (identifiers) followed by dots and index operators [index]. The leading dot in the first field name is optional. The index operations accept non-negative decimal integers (for arrays) or single or double-quoted text literals (for objects).

GET_PATH represents a chain of GET functions. If the path name matches any element, it returns NULL.

SELECT GET_PATH(S.$1, 'root[0].fullName')

FROM @~/json/json_sample_data.gz (file_format => 'json') as S;

SELECT t.value:fullName

FROM @~/json/json_sample_data.gz (file_format => 'json') as S

, table(flatten(S.$1,'root')) t;

SELECT

t.value[0]:children as First_person_children,

t.value[1]:children as Second_person_children,

t.value[2]:children as Third_Person_children

FROM @~/json/json_sample_data.gz (file_format => 'json') as S

, table(flatten(S.$1,'')) t;Output

The SELECT command returns the whole contents of the json_sample_data file (some results are shown):):

+-----------------------------------+

| VALUE |

|-----------------------------------|

| [ |

| { |

| "age": 22, |

| "children": [ |

| { |

| "age": "6", |

| "gender": "Female", |

| "name": "Jane" |

| }, |

| { |

| "age": "15", |

| "gender": "Male", |

| "name": "John" |

| } |

| ], |

| "citiesLived": [ |

| { |

| "place": "Seattle", |

| "yearsLived": [ |

| "1995" |

| ] |

| }, |

| { |

| "place": "Stockholm", |

| "yearsLived": [ |

| "2005" |

| ] |

| } |

| ], |

| "fullName": "John Doe", |

| "gender": "Male", |

| "kind": "person", |

| "phoneNumber": { |

| "areaCode": "206", |

| "number": "1234567" |

| } |

| }, |

..

+-----------------------------------SELECT t.value

FROM @~/json/json_sample_data.gz (file_format => 'json') as S, table(flatten(S.$1,'root')) t;Output

The three data arrays representing the three workers’ records and their dependant data are returned.

SELECT

array_size(t.value)

FROM @~/json/json_sample_data.gz (file_format => 'json') as S, table(flatten(S.$1,'')) t;Output

In the JSON example file used in this lesson, there are three employee records and all of their dependent entries (children’s names, ages, and so on).

SELECT emps.value:fullName, citliv.value:place, citliv.value:yearsLived , yrliv.value

FROM @~/json/json_sample_data.gz (file_format => 'json') as S,

table(flatten(S.$1,'root')) emps,

table(flatten(emps.value:citiesLived,'')) citliv ,

table(flatten(citliv.value:yearsLived,'')) yrliv;Output:

SELECT emps.value:fullName, citliv.value:place, count(yrliv.value) as Years_Count

FROM @~/json/json_sample_data.gz (file_format => 'json') as S,

table(flatten(S.$1,'root')) emps,

table(flatten(emps.value:citiesLived,'')) citliv,

table(flatten(citliv.value:yearsLived,'')) yrliv

group by emps.value:fullName, citliv.value:place

order by 1,2;Conclusion

In summary, you can see that the VARIANT data type offers native support for querying Snowflake JSON without analyzing the structure ahead of time or performing complex transformations. Querying Snowflake JSON data performs like all the standard relational data types.

Extract targeted text segments with the Snowflake SUBSTRING function. Explore details at Snowflake SUBSTRING Overview. Use the SUBSTRING function in Snowflake for precise text extraction. More at Snowflake SUBSTRING.Hevo is the only real-time ELT No-code Data Pipeline platform that cost-effectively automates flexible data pipelines to your needs. With integration with 150+ Data Sources (60+ free sources), we help you not only export data from sources & load data to the destinations but also transform & enrich your data, & make it analysis-ready. Try a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also, check out our unbeatable pricing to choose the best plan for your organization.

FAQs

1. What data formats are supported in Snowflake?

Snowflake accepts all data formats-from structured formats such as CSV, JSON, XML, and Parquet to semi-structured data like Avro and ORC, and then moves to unstructured data formats as images, PDFs, and videos.

2. Can Snowflake store JSON data?

Yes, Snowflake supports the storing of JSON data by using its VARIANT data type. This allows the storage and querying of semi-structured data like JSON data. Even functions for parsing and querying JSON are offered.

3. What is a nested JSON?

A nested JSON is just a JSON object with other JSON objects or arrays as their values, which suggests nesting of data at multiple levels.