Are you looking to execute operational database activities on your BigQuery data by transferring it to a PostgreSQL database? Well, you’ve come to the right place. Data replication from BigQuery to PostgreSQL is now much more straightforward.

This article provides a quick introduction to PostgreSQL and Google BigQuery. You’ll also learn how to set up the integration using three different techniques. You can migrate your data automatically using Hevo or explore the manual approaches using Cloud Data Fusion or Apache Airflow. After reading this article, you will know how to migrate your BigQuery data into the PostgreSQL database effortlessly.


Introduction to PostgreSQL

PostgreSQL is a high-performance, enterprise-level open-source relational database that enables SQL (relational) and JSON (non-relational) querying. It’s a reliable database management system with more than two decades of community work to thank for its high resiliency, integrity, and accuracy. Many online, mobile, geospatial, and analytics applications utilize PostgreSQL as their primary data storage or data warehouse.

Introduction to Google BigQuery

Google BigQuery is a serverless, scalable, and cost-effective data warehouse with built-in machine learning and fast SQL processing. It helps manage business transactions, database integration, and access controls using Google’s powerful infrastructure. Companies like UPS, Twitter, and Dow Jones rely on BigQuery for tasks like forecasting shipments, managing ad changes, and processing millions of data points in real time.

Ditch the manual process of writing long commands to connect your BigQuery data to PostgreSQL, and choose Hevo’s no-code platform to streamline your data migration.

With Hevo:

- Easily migrate different data types like CSV, JSON, etc.

- 150+ connectors, such as Postgres and BigQuery, including 60+ free sources.

- Eliminate the need for manual schema mapping with the auto-mapping feature.

Experience Hevo and see why 2000+ data professionals, including customers such as ThoughtSpot, Postman, and many more, have rated us 4.4/5 on G2.

Why You Should Migrate Your Google BigQuery Data to PostgreSQL

- Cost Optimization: Depending on your workload, a PostgreSQL deployment with predictable infrastructure costs can be cheaper for storing and managing data than BigQuery’s on-demand pricing, which bills for the bytes each query processes.

- On-Premises Deployment: If your business requires an on-premises solution, PostgreSQL offers the flexibility that BigQuery, as a cloud-only platform, lacks.

- Custom Application Needs: PostgreSQL’s compatibility with a wide range of applications makes it ideal for building and managing custom software solutions.

- Open-Source Flexibility: PostgreSQL’s open-source nature allows for greater control, customization, and community support.

- Transactional Workloads: PostgreSQL is built for transactional (OLTP) operations, such as frequent single-row inserts, updates, and deletes with full ACID guarantees. This makes it a better choice for applications that depend on those operations.

- Hybrid Database Environment: Migrating data to PostgreSQL can support a hybrid environment where you leverage both cloud-based and on-premises systems efficiently.

Method 1: Using Hevo Data to Set Up BigQuery to PostgreSQL Integration [Recommended]

Step 1.1: Configure Your BigQuery Source

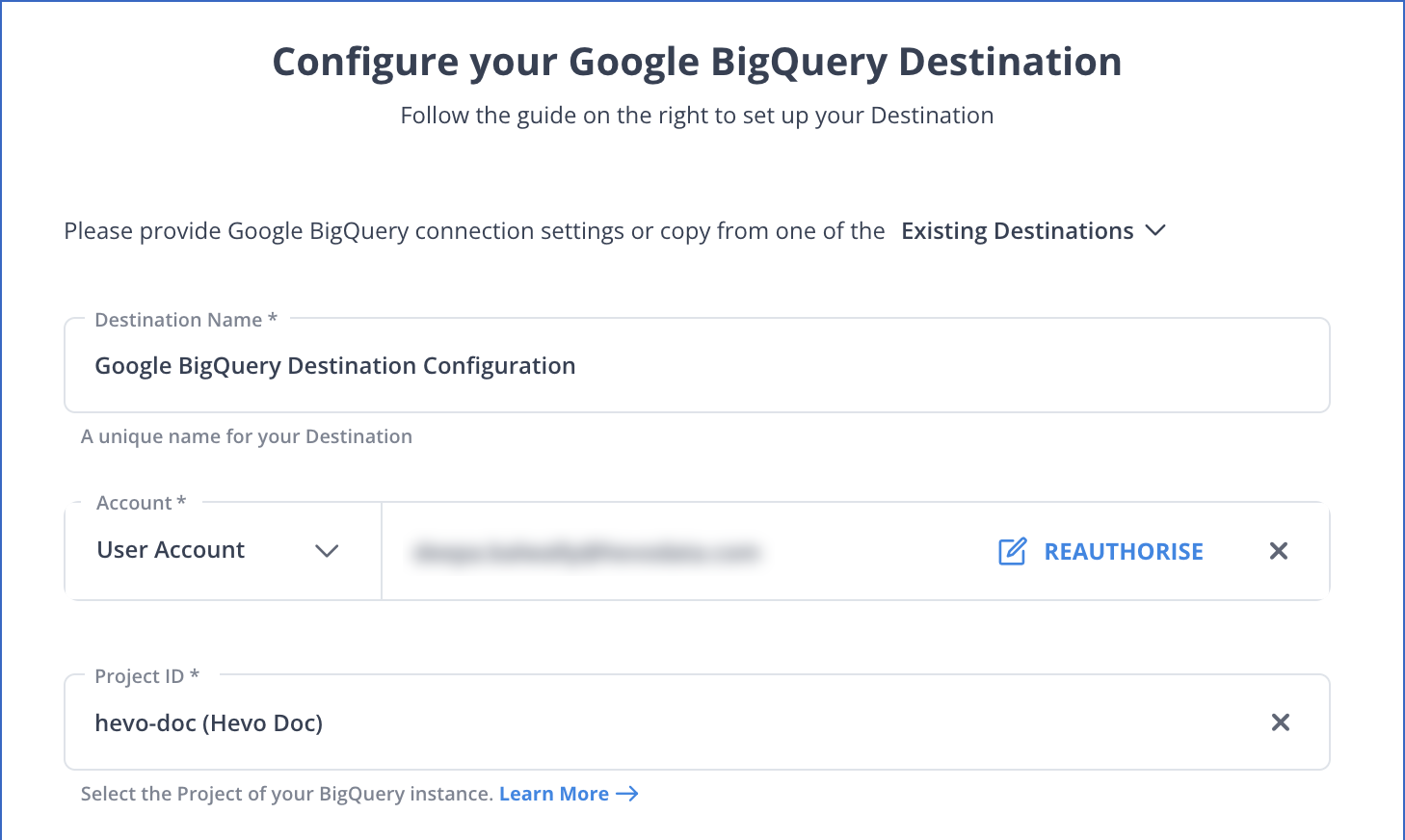

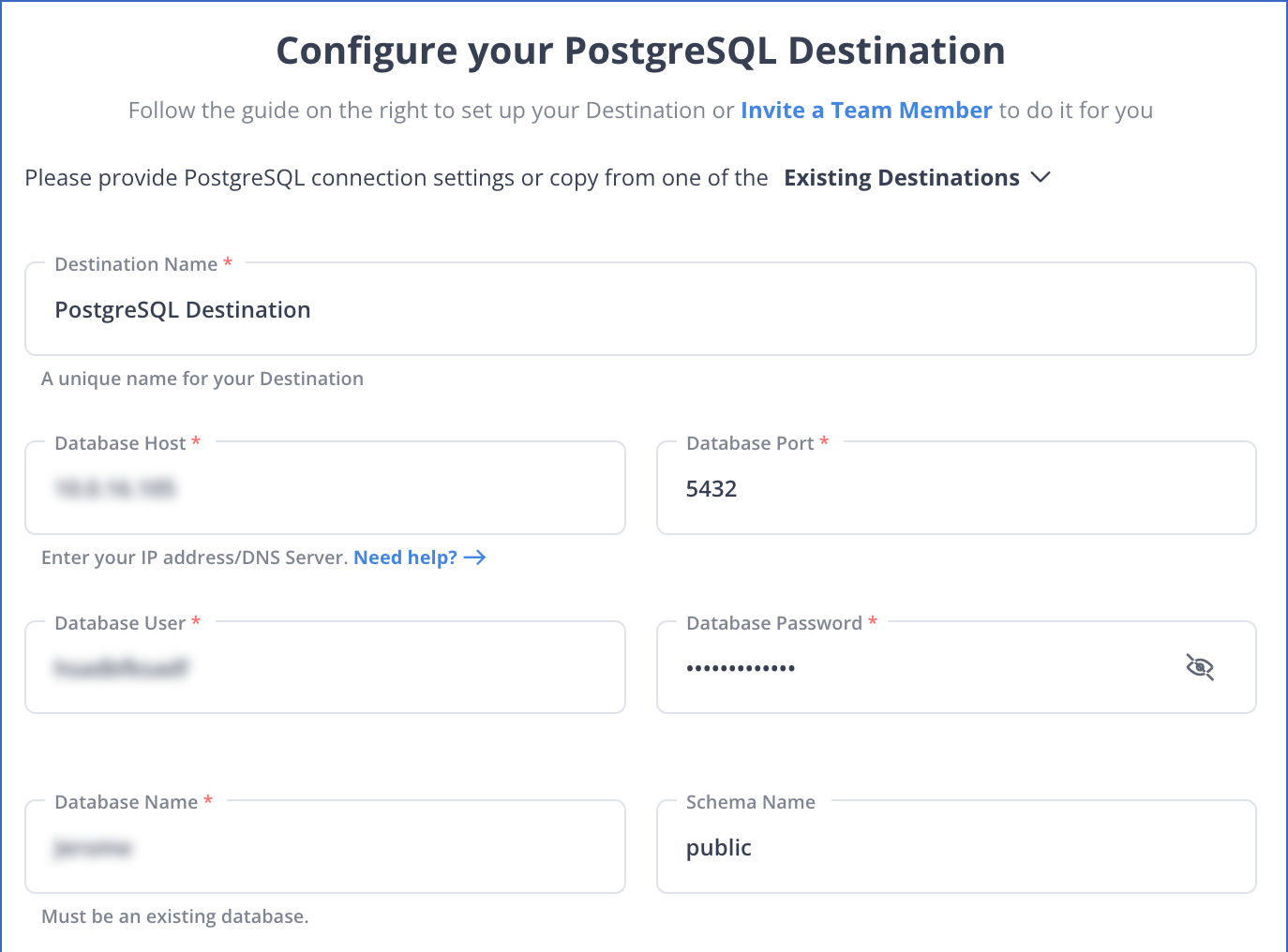

Step 1.2: Configure Your PostgreSQL Destination

When I saw Hevo, I was amazed by the smoothness it works with so many different sources with zero data loss.

– Swati Singhi, Lead Engineer, Curefit

Method 2: Manual ETL Process To Set Up the Integration Using Cloud Data Fusion

Note: Enable your PostgreSQL database to accept connections from Cloud Data Fusion before you begin. It is recommended to use a private Cloud Data Fusion instance to perform this safely.

Step 2.1: Open your Cloud Data Fusion instance.

You will store your PostgreSQL password in your Cloud Data Fusion instance as a secure key so that it is encrypted. See Cloud KMS for additional information on keys.

- Go to the Cloud Data Fusion Instances page in the Google Cloud console.

- To open your instance in the Cloud Data Fusion UI, click View instance.

Step 2.2: Save your PostgreSQL password as a protected key.

- Click “System Admin > Configuration” in the Cloud Data Fusion UI.

- Click the “Make HTTP Calls” button.

- Select “PUT” from the dropdown list.

- Enter `namespaces/default/securekeys/pg_password` in the path field.

- Enter `{"data": "POSTGRESQL_PASSWORD"}` in the Body field, replacing POSTGRESQL_PASSWORD with your PostgreSQL password.

- Click the “Send” button.

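The “Make HTTP Calls” page sends requests to the CDAP REST API that backs Cloud Data Fusion. You can also store the key from a script by calling that API directly. The sketch below assumes two things. First, the UI path maps to `/v3/namespaces/<namespace>/securekeys/<key>` under your instance's API endpoint. Second, you authenticate with an OAuth access token (for example, the output of `gcloud auth print-access-token`). The endpoint URL and token are placeholders you supply, and the function only builds the request without sending it.

```python
import json
import urllib.request


def build_secure_key_request(api_endpoint: str, key_name: str, secret: str,
                             access_token: str,
                             namespace: str = "default") -> urllib.request.Request:
    """Build (but do not send) a PUT request that stores `secret` as a secure key.

    Assumption: the CDAP path /v3/namespaces/<namespace>/securekeys/<key>
    under the instance API endpoint; `api_endpoint` is a placeholder.
    """
    url = f"{api_endpoint.rstrip('/')}/v3/namespaces/{namespace}/securekeys/{key_name}"
    # Same JSON body that is entered in the Body field of the UI.
    body = json.dumps({"data": secret}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {access_token}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="PUT")


if __name__ == "__main__":
    req = build_secure_key_request(
        api_endpoint="https://YOUR_INSTANCE_API_ENDPOINT/api",  # hypothetical placeholder
        key_name="pg_password",
        secret="POSTGRESQL_PASSWORD",
        access_token="ACCESS_TOKEN",  # hypothetical placeholder
    )
    print(req.get_method(), req.full_url)
    # After reviewing the request, send it with: urllib.request.urlopen(req)
```

Building the request separately from sending it lets you inspect the URL and body before anything touches your instance.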

Step 2.3: Connect to Cloud SQL for PostgreSQL.

- Open the Cloud Data Fusion UI menu and go to the Wrangler page.

- Click the “Add Connection” button.

- To connect, select “Database” as the source type.

- Click Upload under Google Cloud SQL for PostgreSQL.

- Upload a JAR file containing your PostgreSQL driver. The file name must follow the format NAME-VERSION.jar, so rename your JAR file before uploading if it doesn’t.

- Click the “Next” button.

- Fill in the fields with the driver’s name, class, and version. For the PostgreSQL JDBC driver, the class name is org.postgresql.Driver.

- Click the “Finish” button.

- In the “Add Connection” window that appears, click “Google Cloud SQL for PostgreSQL.” Your JAR name should be displayed under it.

- Fill in the required connection fields. In the Password field, select the secure key you saved earlier. This ensures that Cloud KMS retrieves your password.

- In the “Connection string” field, enter your connection string.

- Replace the following:

  - DATABASE_NAME: the Cloud SQL database name as listed in the Databases tab of the instance details page.

  - INSTANCE_CONNECTION_NAME: the Cloud SQL instance connection name as displayed in the Overview tab of the instance details page.

Example:
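The helper below is a hedged sketch of how the DATABASE_NAME and INSTANCE_CONNECTION_NAME placeholders combine into a connection string. It assumes the Cloud SQL JDBC socket-factory URL format: `jdbc:postgresql://google/DATABASE_NAME?cloudSqlInstance=INSTANCE_CONNECTION_NAME&socketFactory=com.google.cloud.sql.postgres.SocketFactory&useSSL=false`. Check these parameters against the current Cloud Data Fusion documentation before relying on them. The function name and sample values are hypothetical.

```python
from urllib.parse import urlencode


def cloudsql_postgres_jdbc_url(database_name: str, instance_connection_name: str) -> str:
    """Assemble a JDBC URL for Cloud SQL for PostgreSQL (assumed socket-factory format).

    instance_connection_name looks like PROJECT_ID:REGION:INSTANCE_ID.
    """
    if instance_connection_name.count(":") < 2:
        raise ValueError("expected an instance connection name like PROJECT_ID:REGION:INSTANCE_ID")
    params = {
        "cloudSqlInstance": instance_connection_name,
        "socketFactory": "com.google.cloud.sql.postgres.SocketFactory",
        "useSSL": "false",
    }
    # safe=":" leaves the colons of the instance connection name unescaped.
    return f"jdbc:postgresql://google/{database_name}?{urlencode(params, safe=':')}"


if __name__ == "__main__":
    # Hypothetical database and instance names.
    print(cloudsql_postgres_jdbc_url("sales", "my-project:us-central1:pg-instance"))
```

The colon check is deliberately loose, because domain-scoped project IDs add an extra colon to the instance connection name.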

- Enable the Cloud SQL Admin API.

- Grant the service-PROJECT_NUMBER@gcp-sa-datafusion.iam.gserviceaccount.com service account the following IAM roles, replacing PROJECT_NUMBER with your project number:

- Cloud SQL Admin (roles/cloudsql.admin)

- Cloud Data Fusion Admin (roles/datafusion.admin)

- Cloud Data Fusion API Service Agent (roles/datafusion.serviceAgent)

See Manage Access for additional information on granting roles.
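If you prefer the command line to the console, you can script the API enablement and role grants above with the gcloud CLI. This sketch only builds the commands as argument lists, using `gcloud services enable` and `gcloud projects add-iam-policy-binding`. PROJECT_ID and PROJECT_NUMBER values are placeholders. Once you've reviewed the lists, you could run each one with `subprocess.run(cmd, check=True)`.

```python
from typing import List

# The roles listed above for the Cloud Data Fusion service agent.
ROLES = [
    "roles/cloudsql.admin",
    "roles/datafusion.admin",
    "roles/datafusion.serviceAgent",
]


def setup_commands(project_id: str, project_number: str) -> List[List[str]]:
    """Return gcloud argv lists: enable the Cloud SQL Admin API, then grant each role."""
    member = (
        f"serviceAccount:service-{project_number}"
        "@gcp-sa-datafusion.iam.gserviceaccount.com"
    )
    cmds = [["gcloud", "services", "enable", "sqladmin.googleapis.com",
             f"--project={project_id}"]]
    for role in ROLES:
        cmds.append(["gcloud", "projects", "add-iam-policy-binding", project_id,
                     f"--member={member}", f"--role={role}"])
    return cmds


if __name__ == "__main__":
    # Hypothetical project ID and number.
    for cmd in setup_commands("my-project", "123456789"):
        print(" ".join(cmd))
```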

- To check that the database connection has been made, click “Test Connection.”

- Click the “Add Connection” button.

Limitations of Using Cloud Data Fusion

- JDBC Connection Failures: The first time you use Cloud Data Fusion in a project, you must peer your VPC network with the Cloud Data Fusion tenant project. Otherwise, you will receive connection failure errors, even if private access is enabled in your VPC.

- Worker Node Count: To run a single-node Dataproc cluster, set the number of worker nodes to 0. A multi-node cluster requires at least two worker nodes.

- Minimum Memory: Both the master and worker nodes of the Dataproc cluster need at least 3.5 GB of memory.
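You can check the two sizing rules above before saving a compute profile. The hypothetical helper below encodes only the constraints stated in this list: either 0 workers or at least 2, and at least 3.5 GB of memory per master and worker node. It is not part of any Google Cloud API.

```python
from typing import List

MIN_NODE_MEMORY_GB = 3.5  # minimum per node, as stated in the limitations above


def dataproc_profile_problems(workers: int, master_memory_gb: float,
                              worker_memory_gb: float) -> List[str]:
    """Return rule violations for a Dataproc sizing; an empty list means it passes."""
    problems = []
    # A single-node cluster uses 0 workers; a multi-node cluster needs 2 or more.
    if workers < 0 or workers == 1:
        problems.append("worker count must be 0 (single node) or at least 2 (multi-node)")
    if master_memory_gb < MIN_NODE_MEMORY_GB:
        problems.append(f"master node needs at least {MIN_NODE_MEMORY_GB} GB of memory")
    # Worker memory only matters when the cluster actually has workers.
    if workers > 0 and worker_memory_gb < MIN_NODE_MEMORY_GB:
        problems.append(f"worker nodes need at least {MIN_NODE_MEMORY_GB} GB of memory")
    return problems


if __name__ == "__main__":
    print(dataproc_profile_problems(workers=1, master_memory_gb=4, worker_memory_gb=4))
```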

You can also check out how to migrate data from PostgreSQL to BigQuery in just two steps!

Use Cases of BigQuery to Postgres Migration

- Manufacturing: Migrating to PostgreSQL can help manufacturers optimize the supply chain, foster innovation, and make manufacturing customer-centric.

- Data Analytics: PostgreSQL helps maintain data integrity and supports a wide range of data types, which can benefit analytics workloads.

- Web Applications: PostgreSQL is a go-to choice for building web applications alongside other open-source technologies such as Linux, Apache, and PHP or Perl. This combination is often called the LAPP stack, a variant of LAMP with PostgreSQL in place of MySQL.

- E-commerce: Because of the scalability and reliability of PostgreSQL, it is well-suited for e-commerce applications that handle large volumes of product data and transaction records.

Read more about Migrating from MySQL to PostgreSQL to seamlessly load your other sources of data to the PostgreSQL destination.

Conclusion

This article offered an overview of PostgreSQL and BigQuery and their features. It also described three approaches for transferring data from BigQuery to PostgreSQL.

Although the manual approaches work, they take considerable time and resources. Migrating data from BigQuery to PostgreSQL by hand is a time-consuming and tedious operation, but with a data integration solution like Hevo, it can be done with little effort and in no time.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

FAQs

1. How do you copy data from BigQuery to Postgres?

To copy data from BigQuery to Postgres, first export the data to a file format such as CSV, for example with the bq extract command or an EXPORT DATA statement that writes to Cloud Storage. Then import the files with PostgreSQL’s COPY command or psql’s \copy meta-command. Optionally, transform the data beforehand to ensure type compatibility.
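For the load side of this process, the sketch below generates two statements from a BigQuery schema: a PostgreSQL CREATE TABLE statement, and the matching COPY statement for a CSV export with a header row. You could pass the COPY statement to, for example, psycopg2's `cursor.copy_expert`. The type mapping is an assumption that covers common scalar types only. BYTES is left out because BigQuery writes it to CSV as base64, and nested or repeated fields would need flattening first. Table and column names in the example are hypothetical.

```python
from typing import List, Tuple

# Assumed mapping for common BigQuery scalar types (standard and legacy names).
BQ_TO_PG = {
    "STRING": "TEXT",
    "INT64": "BIGINT", "INTEGER": "BIGINT",
    "FLOAT64": "DOUBLE PRECISION", "FLOAT": "DOUBLE PRECISION",
    "NUMERIC": "NUMERIC", "BIGNUMERIC": "NUMERIC",
    "BOOL": "BOOLEAN", "BOOLEAN": "BOOLEAN",
    "DATE": "DATE",
    "DATETIME": "TIMESTAMP",
    "TIME": "TIME",
    "TIMESTAMP": "TIMESTAMPTZ",
    "JSON": "JSONB",
}


def quote_ident(name: str) -> str:
    """Double-quote a PostgreSQL identifier, escaping embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def create_table_sql(table: str, schema: List[Tuple[str, str]]) -> str:
    """Build CREATE TABLE from (column_name, bigquery_type) pairs."""
    cols = []
    for name, bq_type in schema:
        pg_type = BQ_TO_PG.get(bq_type.upper())
        if pg_type is None:
            raise ValueError(f"no mapping for BigQuery type {bq_type!r} (column {name!r})")
        cols.append(f"{quote_ident(name)} {pg_type}")
    return f"CREATE TABLE {quote_ident(table)} ({', '.join(cols)});"


def copy_sql(table: str, columns: List[str]) -> str:
    """Build a COPY ... FROM STDIN statement for a CSV file that has a header row."""
    col_list = ", ".join(quote_ident(c) for c in columns)
    return f"COPY {quote_ident(table)} ({col_list}) FROM STDIN WITH (FORMAT csv, HEADER true);"


if __name__ == "__main__":
    schema = [("id", "INT64"), ("amount", "NUMERIC"), ("created_at", "TIMESTAMP")]
    print(create_table_sql("orders", schema))
    print(copy_sql("orders", [name for name, _ in schema]))
```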

2. Does BigQuery support PostgreSQL?

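Not as a direct integration. BigQuery can read from Cloud SQL for PostgreSQL through federated queries (the EXTERNAL_QUERY function), but it cannot write data into a PostgreSQL database. Moving data from BigQuery to PostgreSQL therefore requires an export-and-load process or an integration tool.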

3. Is BigQuery faster than Postgres?

Due to its distributed computing architecture and parallel processing capabilities, BigQuery is typically faster than PostgreSQL for large-scale analytical queries over massive datasets.

4. How to connect to PostgreSQL in GCP?

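To connect to PostgreSQL in GCP, for example a Cloud SQL for PostgreSQL instance, first open the instance details in the Google Cloud console. Next, allow your client’s network to reach the instance: use authorized networks for Cloud SQL, or firewall rules for PostgreSQL running on a Compute Engine VM. Finally, connect with a client tool such as psql or pgAdmin.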
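Client tools like psql also accept a libpq connection URI, for example `psql "postgresql://..."`. The minimal sketch below builds one with Python's standard library. The host, user, password, and database values are hypothetical, and `sslmode=require` is an assumption to adjust to your instance's SSL settings. Percent-encoding matters because characters such as `@` or `/` in a password would otherwise break the URI.

```python
from urllib.parse import quote, urlencode


def postgres_uri(host: str, user: str, password: str, dbname: str,
                 port: int = 5432, sslmode: str = "require") -> str:
    """Build a postgresql:// URI accepted by psql and other libpq-based clients."""
    # safe="" percent-encodes every reserved character, including "@", ":" and "/".
    userinfo = f"{quote(user, safe='')}:{quote(password, safe='')}"
    query = urlencode({"sslmode": sslmode})
    return f"postgresql://{userinfo}@{host}:{port}/{quote(dbname, safe='')}?{query}"


if __name__ == "__main__":
    # Hypothetical connection details.
    print(postgres_uri("10.0.0.5", "app_user", "p@ss/word", "sales"))
```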