dbt’s extensive features have made it the central hub for transformations. It is an open-source tool that data analysts and engineers to transform data in a data warehouse efficiently. You can not only transform data but also test, deploy, and document your tasks.

To transform data, you need to build dbt models, which are simple SQL SELECT statements. Since SQL is popular among professionals, dbt became the go-to choice for users. dbt also supports Python models to allow complex data science and machine learning workflows.

Let’s understand ways to build dbt Python Models.

Table of Contents

Why Run Python in dbt

dbt tool was initially developed to perform transformations in SQL. However, data engineers couldn’t solve all the use cases with SQL. Although SQL language is simple to understand and query, it has limited capabilities for machine learning workflows.

Python contains packages and libraries which allow data engineers to perform transformations efficiently. Along with transformation, Python can also incorporate data science techniques. Therefore, Python extended the capabilities of SQL data models, and it became a preferred choice among data engineers.

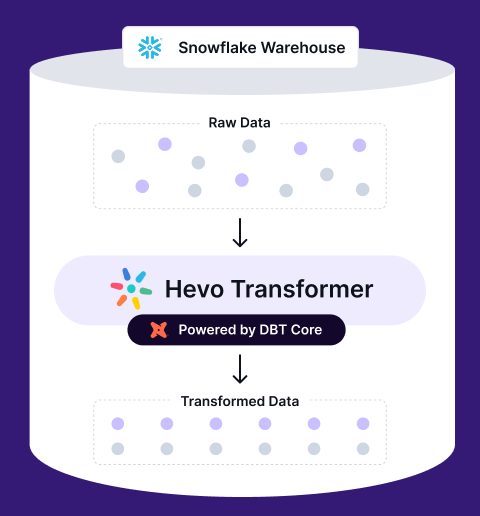

Say goodbye to long lines of code and complex setups! With Hevo Transformer (Powered by dbt Core), you can use a plug-and-play solution to seamlessly integrate and manage your dbt workflows.

With Hevo Transformer, enjoy:

🚀 Seamless Warehouse Integration – Auto-fetch schemas, no setup hassle

⚡ Effortless dbt Workflow Automation – Build, test, and deploy in one place

📂 Built-in Version Control – Track every change with Git

What is dbt Python Model?

Unlike dbt SQL models, dbt Python models are defined in the .py files instead of the .sql files. Python models are functions named models that return dataframes. A dataframe is a 2D table of rows and columns like a spreadsheet. With Python models, you can reference dbt sources, apply transformations, and return the transformed data.

In dbt, SQL models return the output of the SELECT statement, whereas Python models return a final dataframe. And the type of dataframe depends on the data platform. Python dbt models are supported across three data platforms: Snowflake, Databricks, and BigQuery. Currently, dbt supports Python via the three adapters: dbt-Snowflake, dbt-BigQuery, and dbt-Databricks.

Defining Python Models

Whenever data engineers or analysts use dbt Python models, they need cloud services to run their models. This is because dbt Python models cannot run on the machine. Therefore, Python dbt models run on data platforms. Data platform services are enhanced for running big data processing workloads. They can run distributed data processing tasks across multiple nodes in a cluster.

As a result, before creating dbt Python models, you need an adapter for a data platform to support fully featured Python runtime.

In the models/ folder, every Python model defines a function called model() that consists of two parameters.

- dbt: It is a class compiled by dbt Core, which allows you to run Python code in the context of your project.

- session: It refers to the data platform’s connection.

Every dbt Python model looks like this:

In dbt Python models, you can reference other models and sources using the dbt.ref() and dbt.source() functions. Python models only support two materialization options: table and incremental.

When you run the dbt Python model using the command <a href="https://hevodata.com/data-transformation/dbt-run-command/" target="_blank" rel="noreferrer noopener">dbt run</a> --select python_model, dbt will prepare and pass in both arguments dbt and session.

When defining a dbt Python model function, you can import other functions into the model, as shown in the below example:

Here, add_one() is a custom function that is referenced in the Python model.

Note: Currently, Python dbt models defined in one dbt model cannot be imported and reused in other models.

Referencing Models

You can reference other models in the Python model using the dbt.ref() method. It is similar to how you use ref() in SQL models. However, if you want to read data directly from the source table, you can use dbt.source() in the Python model.

The dbt.source() method returns dataframes pointing to the upstream source, model, snapshot, or seed. The dbt.ref() and <a href="https://hevodata.com/learn/dbt-sources/" target="_blank" rel="noreferrer noopener">dbt.source()</a> method is used as follows:

You can also use the ref() function to reference the dbt Python model in the downstream SQL models, as follows:

Configuring dbt Python Models

There are three ways to configure dbt Python models:

- You can configure many models at once with dbt_project.yml.

- In the models/ directory, you can configure dbt Python models with .yml files.

- You can configure model.py by using the db.config() method as well.

You can set the configurations for your Python models by calling the dbt.config() method.The dbt.config() method is similar to the {{config()}} macro in .sql model files. The dbt.config() method accepts only literal values like strings, boolean, and numeric. It cannot support any other data structure because dbt statistically analyzes the arguments to config() while parsing the dbt model without executing the Python code.

For a deeper look into how you can design, structure, and optimize models programmatically, check out our guide on data modelling in Python

Limitations of dbt Python Model

Although dbt Python models have capabilities that SQL models lack, they also have some disadvantages compared to SQL models:

- Python only supports three data platforms Snowflake, Databricks, and BigQuery.

- Dbt users find it easier to write code that is performant at scale. If you try to translate the well-written SQL models into Python, it can make your model slow.

- There are several syntax differences between dbt SQL and Python-based models. Python offers extensive ways to code. If there are five ways to write something in SQL language, there can be 500 ways to write it in Python with varying performance and standards. Many options can be overwhelming and can confuse the dbt users.

Data Platforms Using dbt Python Models

Since dbt supports Python through three data platforms, Snowflake, BigQuery, and Databricks, let’s understand each of them in detail.

How to Build dbt Python Models in Snowflake

Follow the steps mentioned below to build dbt Python models in Snowflake:

- Prerequisites

- A Snowflake account.

- A Snowflake database named DEMO_DB.

- A Snowflake user having appropriate permissions.

- Anaconda is installed on your machine.

- dbt installed on your machine.

- Your favorite IDE.

- After setting the prerequisites, you need to run the

dbt initcommand to initialize the project. - Follow the prompt to create the dbt project and enter the values appropriate to your environment. But, for schema and database, provide the following details:

- You will end up with the following dbt models:

- Open a terminal, move to the dbt project, and execute the

dbt run. This is to verify that everything is configured correctly. - After creating the dbt project, you will now create a simple Python model. In the models/example folder. Create a new file,‘

my_first_python_model.py, and copy the below code into it.

- Save and execute the

dbt runcommand. If everything is executed correctly, you have successfully built your first dbt Python model in Snowflake. You do not have to import the Snowpark Python library explicitly, dbt does that for you.

Pros and Cons using Snowflake

| Pros | Cons |

| Excellent scalability and performance. | Costs can escalate with heavy usage. |

| Seamless integration with other data tools. | Learning curve for new users. |

How to build dbt Python Models in Databricks

Follow the steps mentioned below to build a dbt Python model in Databricks.

- Create a virtual environment to isolate the packages from conflict. Run the below-mentioned command to create an empty directory named dbt_project:

mkdir ~/dbt_project

cd ~/dbt_project- Create a file named Pipfile using the code mentioned below. Pipfile is used by the Python virtual environment to manage project and library dependencies:

[[source]]

url = "https://pypi.org/simple"

verify_ssl = true

name = "pypi"[packages]

dbt-databricks = "*"[requires]

python_version = "3.9.12”- Now, install all the packages defined in the Pipfile containing the dbt Databricks adapter package. dbt-databricks automatically installs dbt Core and other library dependencies required:

pipenv install- Activate the virtual environment using the following command:

pipenv shellOutput:

- After the initial setup and configuration of the virtual environment, your next step is to create a dbt project. In the activated virtual environment, run the

dbt initcommand followed by the name of your dbt project:

dbt init my_first_dbt_project- You need to enter the details like number 1 to select Databricks, HTTP path, personal access token, default schema name, and number 4 when prompted for the number of threads.

- To verify the Databricks connections settings, run the following commands:

dbt debug --config-dir

open /Users/<your-username>/.dbt- Now, run build the project by running the following command:

dbt debugOutput:

- Create a dbt Python model. You can create a dbt Python model in Databricks, using the following command:

In Databricks, dataframes are Apache Spark dataframes by default. Therefore, you need to use PySpark.

Import PySpark as follows:

pyspark lib import pyspark.sql.functions as F.

In Databricks, you need to specify the cluster for the Python code to run inside the config function. You can run your code faster by setting the ‘create_notebook’ parameter to ‘False.’

You can know more about the above parameters in dbt’s documentation. Run the following command:

$<a href="https://hevodata.com/data-transformation/dbt-build-command/" target="_blank" rel="noreferrer noopener">dbt build</a>Output:

You have completed building your first Python models in dbt with Databricks.

Pros and Cons using Databricks

| Pros | Cons |

| Unified analytics with ML capabilities. | Higher costs compared to traditional solutions. |

| Strong support for big data processing. | Complexity in setup and management. |

How to build dbt Python Models in BigQuery

Follow the steps mentioned below to build dbt Python models with BigQuery:

- Create the Google Cloud Platform account. Create a new project named ‘jaffle-shop’ in the console.

- Let’s create a Service Account which can be used by dbt. Go to IAM & Admin > Service Accounts to create Service Account.

- Give a name to the Service Account as ‘dbt-python.’

- Now, you need to define roles for the Service Account. We will be giving resource admin permissions for simplicity.

- Create the Service Account and go to Action > Manage Keys to create the key file.

- Click on Add Key > Create a New Key, select JSON, and click on create. The private key is created, and the .JSON key file is downloaded.

- You will now need to create a bucket. Search for ‘Cloud Storage’ in the console and click ‘Create Bucket.’ Name your bucket and select the region. You need to remember the name of your bucket and region, as it will be required in the following steps:

- Go to the Marketplace and search for ‘Dataproc API.’ You need to enable the Cloud Dataproc API.

- You can select the components like Anaconda in the cluster. You need to change the Image Type and Version to do so.

- Go to ‘Dataproc’ in your console and click ‘Create Cluster.’ Name your cluster, and select the same region as your bucket.

- You must change the Series and Machine type of Manager and Worker nodes if you use your account’s default quotas. Select n1-standard-2 to prevent exceeding quotas. Do the same for the Worker nodes.

- Now, define the initialization actions and the cluster metadata. Paste the following content into the initialization action:

gs://goog-dataproc-initialization-actions-${REGION}/connectors/connectors.sh- Paste the following into the metadata:

bigquery-connector-version=1.2.0

spark-bigquery-connector-version=0.21.0

- The Google recommendation for production environments is to put the above information in the bucket and read the cluster from there. In this tutorial, you are pasting the information into the cluster properties for simplicity.

- After completing the GCP configuration, let’s start the dbt setup. Install the BigQuery adapter using the below command:

$ pip3 install dbt-bigquery- As shown in the following example, you need to create a new profile inside the ~/.dbt/profiles.yml file:

- You will require project_id, information in your JSON key file, the name of the region, and a bucket for creating dbt models in Python.

- The dbt Python models created in BigQuery are similar to those in Databricks, as the Dataproc cluster also uses PySpark. The parameters of the dbt.config() function are similar. You can read more about the config for Python models over here.

- Run the following command.

$ dbt buildOutput:

You have now built your first Python model in dbt using BigQuery.

Pros and Cons using BigQuery

| Pros | Cons |

| Serverless architecture simplifies operations. | Pricing can be unpredictable based on usage. |

| Fast query performance with large datasets. | Limited support for advanced analytics compared to others. |

Conclusion

It is recommended to use SQL language if you are unfamiliar with Python programming to create dbt data models. However, if you have expertise in Python, you can use it to create dbt models. This article discusses different ways of building dbt Python models in data warehouses like Snowflake, Databricks, and BigQuery.

In the future, dbt will support other platforms with Python programming language. Besides three data warehouses, there is a powerful third-party tool called dbt-fal to run Python code locally instead of relying on cloud resources. The dbt-fal tool runs with any abt adapter like BigQuery, Snowflake, or Databricks.

To simplify the implementation of data pipelines, you can opt for cloud-based automated ETL tools like Hevo Data, which offers more than 150 plug-and-play integrations.

Saving countless hours of manual data cleaning & standardizing, Hevo Data’s pre-load data transformations get it done in minutes via a simple drag n-drop interface or your custom python scripts. No need to go to your data warehouse for post-load transformations. You can run complex SQL transformations from the comfort of Hevo’s interface and get your data in the final analysis-ready form.

Sign up for a 14-day free trial and manage your workflows seamlessly.

FAQ

Can dbt be used with Python?

Yes, dbt can integrate Python through the use of Python models, allowing users to leverage Python’s capabilities alongside SQL for data transformations.

What are dbt models?

dbt models are SQL files that define transformations and data models within a data warehouse. They allow users to structure their data workflows and enable efficient querying.

Is dbt SQL or Python?

dbt primarily uses SQL for data modeling, but it also supports Python for certain tasks, such as data transformations, enhancing flexibility in data processing.