Google Analytics helps organizations track user activity and measure the effectiveness of their content. Marketers and advertisers worldwide use it to gain insights from their marketing campaigns. Many teams also copy Google Analytics data into SQL Server, both to back up important business data and to make it available for further analysis. This blog post introduces two approaches for transferring data from Google Analytics to SQL Server.


Methods to Connect Google Analytics to SQL Server

Prerequisites

The following checklist will help you stay prepared for the data transfer:

- A valid Google Analytics account with admin privileges.

- Basic knowledge of Python and how to use Python libraries.

- An SQL Server installation with write permission.

You can use the following methods to establish a connection from Google Analytics to SQL Server in a seamless fashion:

Method 1: Export Google Analytics Data to SQL Server Using Hevo

Hevo streamlines the process, offering smooth data integration between Google Analytics and SQL Server. Enjoy automated, real-time syncing with our no-code platform—saving you time and eliminating manual errors.

Method 2: Using Manual ETL Scripts to Connect Google Analytics to SQL Server

In this method, you write the ETL scripts yourself, which requires solid technical knowledge of the Google Analytics API and SQL Server. Automating the connection between Google Analytics and SQL Server is also difficult with this method.

Method 1: Export Google Analytics Data to SQL Server Using Hevo

The data migration from Google Analytics to SQL server can be carried out in 2 simple steps:

Step 1: Configure the Source

Connect Hevo to Google Analytics to set it up as your source by filling in the Pipeline Name, Account Name, Property Name, View Name, Metrics, Dimensions, and Historical Import Duration.

Step 2: Configure the Destination

Configure SQL Server as a destination and start moving data instantly by entering the Destination Name, Database Host, Database Port, Database User, Database Password, Database Name, and Database Schema.

You have successfully exported Google Analytics data to SQL Server using Hevo.

For a data pipeline tool, we expect to be able to seamlessly integrate our data sources with custom scheduling, logging, and alerts. Hevo provides all these features and allows us to get access to data when we critically need it.

– Chris Lockhart, Data Science Manager, Scratchpay

Method 2: Using Manual ETL Scripts to Connect Google Analytics to SQL Server

This section will help you build a custom script to migrate data from Google Analytics to SQL Server.

Google Analytics provides data through the Reporting API v4. This API returns aggregated analytics data organized by metrics and dimensions. A metric is a quantitative measurement, such as the number of users. A dimension is an attribute by which metrics are grouped; for example, a time window is a dimension over which the user count can be aggregated.
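To make the distinction concrete, here is a minimal sketch of aggregating a metric by a dimension in plain Python. The `hits` records and the `sessions_by` helper are made up for illustration; the Reporting API performs this kind of aggregation on Google's side and returns only the totals.

```python
from collections import Counter

# Hypothetical raw records: one entry per session, tagged with dimension values.
hits = [
    {"country": "India", "date": "2024-01-01"},
    {"country": "India", "date": "2024-01-02"},
    {"country": "Germany", "date": "2024-01-01"},
]


def sessions_by(dimension, records):
    """Count sessions (the metric) for each value of the given dimension."""
    return dict(Counter(record[dimension] for record in records))


by_country = sessions_by("country", hits)
by_date = sessions_by("date", hits)
```

The same session count produces different tables depending on which dimension it is grouped by, which is why every report request names both.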

The API uses the OAuth 2.0 protocol for authorization and requires a service account to access the data. Below are the steps for developing a custom script to load data from Google Analytics to SQL Server.

Step 1: Generating Account Keys on Google Analytics

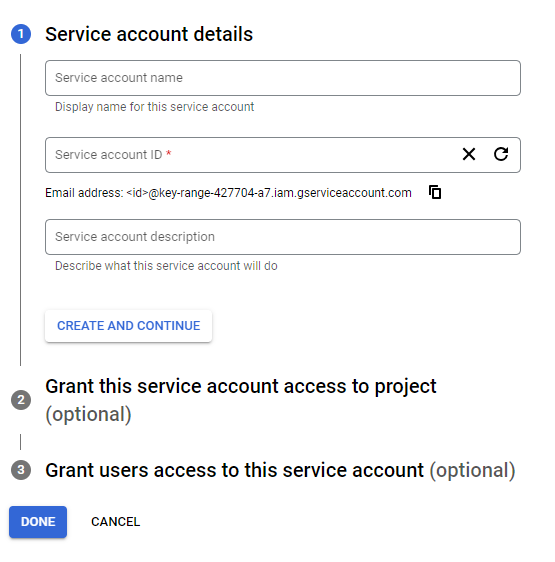

Go to the Google API console and create a project. In the ‘credential’ section of the project click ‘Create Credentials’. Select ‘Service Accounts’ and click ‘Create Key’. Select JSON and save the key on your computer. We will be using this key in our next steps.
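Before moving on, it can help to sanity-check the downloaded key. The sketch below assumes the usual layout of a service account key file, which includes `type`, `client_email`, and `private_key` fields; the helper name `read_service_account_email` is hypothetical.

```python
import json

REQUIRED_FIELDS = ("type", "client_email", "private_key")


def read_service_account_email(key_path):
    """Return the service account's email after basic checks on the key file."""
    with open(key_path, encoding="utf-8") as handle:
        key = json.load(handle)
    missing = [field for field in REQUIRED_FIELDS if field not in key]
    if missing:
        raise ValueError(f"Key file is missing fields: {missing}")
    if key["type"] != "service_account":
        raise ValueError(f"Expected a service account key, got type {key['type']!r}")
    return key["client_email"]
```

The returned email address is the one that needs read access to the Google Analytics view; without that access, API calls made with this key are likely to fail with a permission error.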

Step 2: Installing the Required Python Libraries

Google provides client libraries in Python, Java, JavaScript, and other languages to access its APIs. Calling the API with command-line utilities like curl is tedious because of the complexity of the JSON structure and the authentication process.

Use the following command to install the Google API Python client library, along with the oauth2client and pandas packages that the script below imports.

pip install --upgrade google-api-python-client oauth2client pandas

The above command needs a Python environment to be pre-installed. Let us now proceed to build the script.

Step 3: Building a Python Script

This section deals with building a Python script using the installed Google API client library. We will start by importing the required libraries. The stages involved in this step are as follows:

1. Import the Required Libraries

Use the following statements to import the Python libraries.

from googleapiclient.discovery import build
from oauth2client.service_account import ServiceAccountCredentials

2. Initialize the Keys and Configuration Parameters

SCOPES = ['https://www.googleapis.com/auth/analytics.readonly']
KEY_FILE_LOCATION = '<JSON_FILE_WITH_KEY>'
VIEW_ID = '<VIEW_ID>'

credentials = ServiceAccountCredentials.from_json_keyfile_name(KEY_FILE_LOCATION, SCOPES)

# Build the service object.
analytics = build('analyticsreporting', 'v4', credentials=credentials)

The JSON file is the one that was saved in the first step. The VIEW_ID can be found in the admin section. Go to the Admin section, under the ‘views’ column, and click view settings. Under basic settings, you will find the VIEW_ID.

3. Request the Reports JSON from the API

response = analytics.reports().batchGet(
    body={
        'reportRequests': [
            {
                'viewId': VIEW_ID,
                'dateRanges': [{'startDate': '7daysAgo', 'endDate': 'today'}],
                'metrics': [{'expression': 'ga:sessions'}],
                'dimensions': [{'name': 'ga:country'}]
            }
        ]
    }
).execute()

The above code snippet requests a report for a specific date range, here the last seven days. The metric we access is the session count and the dimension is ‘country’.
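A single call returns only one page of rows. The sketch below shows one way to follow the `pageSize`, `pageToken`, and `nextPageToken` fields of the Reporting API v4 until all rows are collected. The helper name `fetch_all_rows` is hypothetical, and it assumes `analytics` is the service object built earlier and `report_request` is one entry of `reportRequests`.

```python
def fetch_all_rows(analytics, report_request, page_size=1000):
    """Collect rows from every page of a single Reporting API v4 report request."""
    rows = []
    page_token = None
    while True:
        # Copy the request so the caller's dictionary is left unchanged.
        request = dict(report_request, pageSize=page_size)
        if page_token:
            request["pageToken"] = page_token
        response = analytics.reports().batchGet(
            body={"reportRequests": [request]}
        ).execute()
        report = response["reports"][0]
        rows.extend(report.get("data", {}).get("rows", []))
        # The API omits nextPageToken on the last page.
        page_token = report.get("nextPageToken")
        if not page_token:
            return rows
```

Each page is a separate API call, so large exports count against the API quota more quickly than the single request shown above.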

4. Parse the JSON to Extract the Relevant Fields

import pandas as pd

ga_reports = response['reports'][0]

# Column names: dimension names first, then metric names.
dimension_names = ga_reports['columnHeader']['dimensions']
metric_entries = ga_reports['columnHeader']['metricHeader']['metricHeaderEntries']
report_columns = dimension_names + [metric['name'] for metric in metric_entries]

# Each row holds a list of dimension values and one metrics entry per date range.
rows = ga_reports['data']['rows']
g_dimensions = pd.DataFrame([row['dimensions'] for row in rows])
g_metrics = pd.DataFrame([row['metrics'][0]['values'] for row in rows])

result = pd.concat([g_dimensions, g_metrics], axis=1, ignore_index=True)
result.columns = report_columns

# Write the DataFrame row index as an 'id' column.
result.to_csv('reports.csv', index_label='id')

The above code snippet uses pandas data frames to process the JSON and save it as a CSV with dimensions and metrics. Execute the script to generate the CSV file. The first column is the data frame's row index, so the resulting CSV will look like this:

id,ga:country,ga:sessions
0,India,23
1,Germany,34

With this, you have seen how to export data from Google Analytics. The next step is to load this data into SQL Server.
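The parsing stage can also be sketched with only the standard library, which makes it easy to try on a hand-written response. The `report_to_rows` helper and the `sample_report` values below are illustrative, not real API output; the dictionary only mimics the shape of a Reporting API v4 report.

```python
import csv
import io


def report_to_rows(report):
    """Flatten one Reporting API v4 report into column names plus data rows."""
    header = report["columnHeader"]
    columns = list(header.get("dimensions", []))
    columns += [m["name"] for m in header["metricHeader"]["metricHeaderEntries"]]
    rows = []
    for row in report.get("data", {}).get("rows", []):
        # 'metrics' holds one entry per requested date range; take the first.
        rows.append(list(row.get("dimensions", [])) + list(row["metrics"][0]["values"]))
    return columns, rows


# Hand-written sample in the shape of a v4 report (values are illustrative).
sample_report = {
    "columnHeader": {
        "dimensions": ["ga:country"],
        "metricHeader": {
            "metricHeaderEntries": [{"name": "ga:sessions", "type": "INTEGER"}]
        },
    },
    "data": {
        "rows": [
            {"dimensions": ["India"], "metrics": [{"values": ["23"]}]},
            {"dimensions": ["Germany"], "metrics": [{"values": ["34"]}]},
        ]
    },
}

columns, rows = report_to_rows(sample_report)
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(columns)
writer.writerows(rows)
csv_text = buffer.getvalue()
```

Note that the API returns metric values as strings, so any numeric conversion has to happen either here or when the data is loaded into SQL Server.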

Step 4: Loading Data from Google Analytics to SQL Server

You will now load the above CSV to SQL Server using the below command.

BULK INSERT GaSessions
FROM 'C:\CSVData\reports.csv'
WITH (
    FIRSTROW = 2,
    FIELDTERMINATOR = ',',
    ROWTERMINATOR = '\n',
    TABLOCK
);

That concludes the steps to load data from Google Analytics to SQL Server. The task required us to build a custom script, generate a CSV using it, and then load the CSV to SQL Server. In reality, there are a lot more challenges in executing such a load process. The below section will give you a good idea about the limitations of this approach.
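If the CSV file is not on a path the SQL Server machine can read, the rows can be inserted from Python instead. The following is a minimal sketch, not part of the original walkthrough: it assumes the pyodbc package, a `GaSessions` table with hypothetical `country` and `sessions` columns, and a placeholder connection string that you would replace with your own.

```python
import csv

# Column names are assumptions; adjust them to match your GaSessions table.
INSERT_SQL = "INSERT INTO GaSessions (country, sessions) VALUES (?, ?)"


def load_csv(cursor, csv_path):
    """Insert each CSV data row through a DB-API cursor and return the row count."""
    with open(csv_path, newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle)
        params = [(row["ga:country"], int(row["ga:sessions"])) for row in reader]
    if params:
        cursor.executemany(INSERT_SQL, params)
    return len(params)


def main():
    import pyodbc  # imported here so load_csv stays usable without the driver

    # Placeholder connection string; fill in driver, host, and credentials.
    connection = pyodbc.connect(
        "DRIVER={ODBC Driver 17 for SQL Server};"
        "SERVER=<HOST>;DATABASE=<DATABASE>;UID=<USER>;PWD=<PASSWORD>"
    )
    try:
        cursor = connection.cursor()
        inserted = load_csv(cursor, "reports.csv")
        connection.commit()
        print(f"Inserted {inserted} rows")
    finally:
        connection.close()
```

Calling `main()` runs the load. Because `load_csv` only needs an object with an `executemany` method, it can be exercised with a stand-in cursor before pointing it at a real database.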

Limitations of using Manual ETL Scripts to Connect Google Analytics to SQL Server

There are a few limitations of using Manual ETL Scripts to Connect Google Analytics to SQL Server:

- The first and foremost one is that this method requires you to write a lot of custom code. If you have a transformation requirement before loading to SQL Server, be prepared to spend even more time writing custom code.

- This approach is suitable for a one-time bulk insert. In most cases, you will need to execute a continuous load process to ensure that SQL Server always has the latest data. In that case, you will need logic to handle the deletes and duplicates.

- The above approach only deals with one API. Google Analytics provides a large amount of data in various APIs, each of which follows different JSON structures. The developer must be familiar with all these and write custom code to accommodate them.

- Google APIs are rate-limited. The extraction logic therefore has to stay within the quota limits, for example by throttling requests and retrying after a delay, so that it does not trigger rate-limit errors.
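As an illustration of the rate-limit point, one common workaround is to retry failed calls with exponential backoff. The sketch below is generic: `call_with_backoff` and its parameters are hypothetical names, and deciding which errors count as retryable is left to the caller.

```python
import random
import time


def call_with_backoff(func, is_retryable, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call func(); on a retryable error wait base_delay * 2**attempt plus jitter, then retry."""
    for attempt in range(max_attempts):
        try:
            return func()
        except Exception as error:
            # Give up on the last attempt or when the error is not worth retrying.
            if attempt == max_attempts - 1 or not is_retryable(error):
                raise
            sleep(base_delay * (2 ** attempt) + random.uniform(0, 1))
```

With the Google client library, `func` could wrap the `.execute()` call of a request, and `is_retryable` could inspect the error raised by the library to check whether it signals a quota or rate-limit problem. The random jitter keeps several workers from retrying at exactly the same moment.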

Use Cases of Google Analytics to SQL Server Migration

Below are some practical applications of Google Analytics to SQL Server Migration:

- Marketing: SQL is used to determine marketing metrics such as cost per acquisition, customer lifetime value, etc. You can analyze your marketing campaign and sales to identify trends and patterns.

- Finance: SQL is used to prepare financial reports and to ensure regulatory compliance. It is also used to analyze portfolios with risks and market trends and retrieve data of assets and liabilities.

- Cybersecurity: SQL is used to detect threats and analyze malware. It is also used to manage sensitive data and investigate security breaches.

- Social Media: SQL is used to track social media metrics and to support tasks such as performance tracking, user profiling, and social network analysis.

Before wrapping up, let’s cover some basics.

Introduction to Google Analytics

Google Analytics helps companies keep track of user details and advertising campaigns using a simple JavaScript plugin. It provides an excellent reporting dashboard and the ability to run various analyses on user activity data. It also offers machine learning capabilities that organizations can use to make better decisions without having to know the concepts or implementation details of machine learning algorithms.

Google Analytics also makes raw user activity data available to companies that want to run custom algorithms and reports. This requires the raw clickstream data to be loaded into the organization’s own data store.

Introduction to SQL Server

SQL Server is a relational database system provided by Microsoft. It can hold petabytes of data and run on hundreds of parallel cores. It has a comprehensive querying layer that aids in business analysis and integrates tightly with reporting tools like Power BI. Recent versions have Spark support built in, which means large amounts of data can be analyzed using Spark SQL without much additional setup.

It supports ANSI SQL, the standard form of SQL (Structured Query Language). SQL Server also comes with its own proprietary extension of the language, called T-SQL (Transact-SQL). SQL Server Management Studio (SSMS) is the main interface tool. SQL Server follows a client-server model: a client is an application that sends requests to the SQL Server instance installed on a given machine, and the server processes those requests and returns the results.

SQL Server provides enterprise-grade security and support, which makes it a favorite among organizations with strict compliance requirements. This post is about loading data from Google Analytics to SQL Server.

Relational databases store and manage data in a traditional table format. Businesses use relational databases to handle data from their applications and ensure they always have access to critical information.


Conclusion

This blog talks about the two methods you can deploy to connect Google Analytics to SQL Server seamlessly. It also briefly introduces Google Analytics and SQL Server, including their salient features and benefits. Extracting complex data from a diverse set of data sources can be challenging; this is where Hevo saves the day!

Sign Up for a 14-day free trial and experience the feature-rich Hevo suite first hand. You can also look at our unbeatable pricing that will help you choose the right plan for your business needs!

FAQ on Google Analytics to SQL Server

1. Can Google Analytics connect to a database?

Google Analytics does not directly connect to databases. It primarily integrates with web and app data for tracking and analysis. However, you can extract data from Google Analytics and then load it into a database using third-party tools or custom solutions.

2. Can I extract data from Google Analytics?

Yes, you can extract data from Google Analytics. Google Analytics offers various methods to export data, including:

1. Exporting Reports

2. Google Analytics API

3. Google Data Studio

3. How do I connect Google Analytics to SQL?

To connect Google Analytics data to a SQL database, you typically follow these steps:

1. Extract data from Google Analytics

2. Load into SQL