Easily move your data from PostgreSQL on Google Cloud SQL to BigQuery to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real time.

Businesses are increasingly adopting cloud solutions to leverage benefits such as enhanced scalability, cost-efficiency, and cutting-edge analytics. Google Cloud SQL for PostgreSQL is one such cloud solution that serves as a robust platform for managing relational data. By integrating PostgreSQL on Google Cloud SQL to BigQuery, you can harness the potential of marketing, sales, and customer data stored on the cloud.

This allows you to derive actionable insights, predict future trends, and make informed decisions, which will help drive organizational growth and innovation.

BigQuery is a popular choice for its lightning-fast query performance across petabyte-scale datasets. With a seamless transfer of data from Google Cloud PostgreSQL to BigQuery, your business can harness the full potential of cloud-based data warehouse solutions.

Overview of PostgreSQL on Google Cloud SQL

PostgreSQL on Google Cloud SQL (Cloud SQL for PostgreSQL) is a fully managed database service. It enables you to set up and administer your PostgreSQL relational databases on the Google Cloud Platform.

Method 1: Using CSV file to Integrate Data from PostgreSQL on Google Cloud SQL to BigQuery

This method is time-consuming and somewhat tedious to implement. You have to carry out two processes manually: exporting data from PostgreSQL on Google Cloud SQL as CSV files and loading those files into BigQuery. This method is suitable for users with a technical background.

Method 2: Using Hevo Data to Integrate PostgreSQL on Google Cloud SQL to BigQuery

Hevo Data, an Automated Data Pipeline, provides you with a hassle-free solution to connect PostgreSQL on Google Cloud SQL to BigQuery within minutes through an easy-to-use no-code interface. Hevo is fully managed and automates the process of loading data from PostgreSQL on Google Cloud SQL to BigQuery. It also enriches the data and transforms it into an analysis-ready form without you having to write a single line of code.

Key Features of PostgreSQL on Google Cloud SQL

- Automation: GCP automates administrative database tasks such as storage management, backup and redundancy management, capacity management, and data access provisioning.

- Less Maintenance Cost: PostgreSQL on Google Cloud SQL automates most administrative tasks related to maintenance. This significantly reduces the time and resources your team spends on them, leading to lower overall costs.

- Security: GCP provides powerful security features, such as encryption at rest and in transit, identity and access management (IAM), and compliance certifications, to protect sensitive data.

Overview of Google BigQuery

Google BigQuery was created as a flexible, fast, and powerful Data Warehouse that is tightly integrated with the other Google Cloud Platform services. It has a Serverless Model and usage-based pricing, and it is cost-effective.

The Analytics and Data Warehouse platform of Google BigQuery uses a built-in Query Engine on top of the Serverless Model to process terabytes of data in seconds. With Google BigQuery, you can run analytics at scale with a three-year TCO that is 26 percent to 34 percent lower than that of other Cloud Data Warehouse alternatives.

Key Features of Google BigQuery

Here are a few of Google BigQuery’s key features:

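- Serverless Computing: In general, organizations in a Data Warehouse environment must commit to and specify the server hardware on which computations will run. Administrators must then plan for performance, dependability, elasticity, and security. Google BigQuery’s Serverless Model removes this burden: Google provisions and manages the underlying resources, so you can run queries without planning server capacity.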

- Support for SQL and Programming Languages: Users can connect to Google BigQuery using Standard SQL. Aside from that, Google BigQuery has client libraries for writing data-accessing applications in Python, C#, Java, PHP, Node.js, Ruby, and Go.

- Tree Architecture: By structuring computations as an Execution Tree, Google BigQuery and Dremel can easily scale to thousands of machines. A Root Server receives incoming queries and forwards them to branches known as Mixers.

- Multiple Data Types: Google BigQuery offers support for a vast array of data types, including strings, numeric, boolean, struct, array, and a few more.

- Security: Data in Google BigQuery is automatically encrypted both in transit and at rest. Google BigQuery can also isolate jobs and handle security for multi-tenant activity. Since Google BigQuery is integrated with other GCP products’ security features, organizations can take a holistic view of Data Security.
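The Execution Tree described in the feature list above can be illustrated with a toy aggregation. This is only a sketch of the general idea, not BigQuery's actual implementation: the leaf level, the shard data, and the function names are assumptions made for illustration. Each leaf computes a partial result over its own shard, the Mixers merge the partial results from below, and the root merges the Mixers' outputs.

```python
def leaf_partial(rows):
    """Hypothetical leaf: scan one local shard and return a partial (sum, count)."""
    return sum(rows), len(rows)


def combine(partials):
    """Mixer or root: merge partial aggregates received from the level below."""
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total, count


# Illustrative shards of a single numeric column (made-up values).
shards = [[3, 5], [10], [2, 4, 6], [8, 2]]

leaf_results = [leaf_partial(shard) for shard in shards]

# Two mixers each merge half of the leaves; the root merges the mixers.
mixer_a = combine(leaf_results[:2])
mixer_b = combine(leaf_results[2:])
total, count = combine([mixer_a, mixer_b])

average = total / count
print(total, count, average)
```

Because every level only passes small partial aggregates upward, adding more shards means adding more leaves and Mixers rather than making any single machine do more work.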

Prerequisites

- PostgreSQL version 9.4 or higher.

- The host name or IP address of your PostgreSQL server.

- A GCP project, with essential roles for the project assigned to the connecting Google account.

- An active billing account associated with the GCP project.

Method 1: Use CSV Files to Move Data from PostgreSQL on Google Cloud SQL to BigQuery

You can export data from PostgreSQL on Google Cloud SQL as CSV files and then load these files to BigQuery tables. This will help in seamless data transfers between both platforms. Here are the steps involved in this process:

Step 1.1: Export Data from PostgreSQL on Google Cloud SQL as a CSV File

To export data from a PostgreSQL instance on Google Cloud SQL to a CSV file, follow these steps:

- Log in to your Google Cloud account.

- Navigate to the Google Cloud Console > Cloud SQL Instances.

- Open the instance you want to export from, click on Export, and select Offload export.

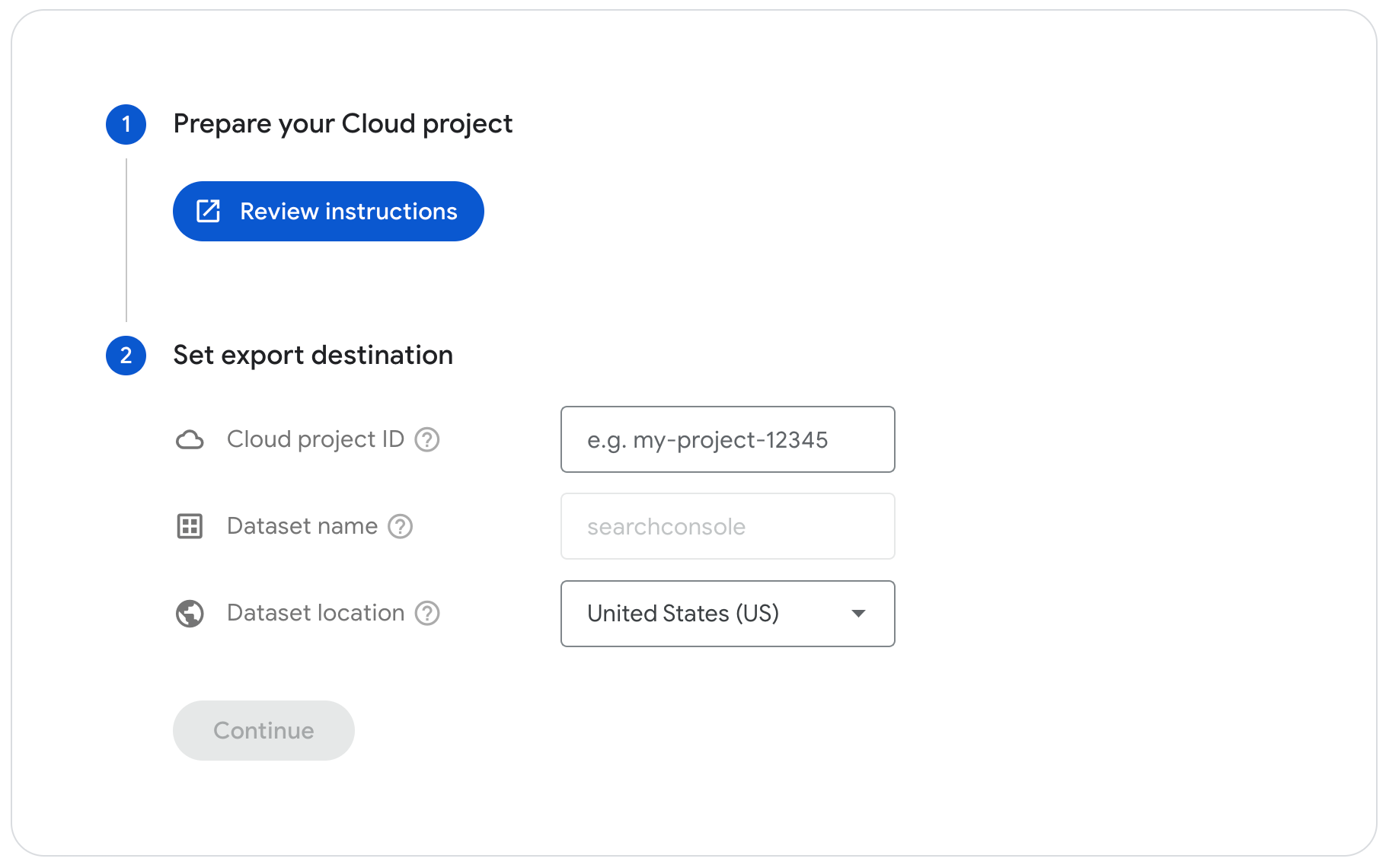

- In the Cloud Storage export location section, specify the name of the bucket, folder, or file that you want to export to. Alternatively, click on Browse to create or find a bucket, folder, or file.

If you select Browse:

- For Location, select a GCS bucket or folder for the export.

- In the Name box, add a name for the CSV file. You can also use the Location section to select an existing file.

- Click Select.

- For Format, click on CSV.

- In the Database to export section, use the drop-down menu to select the name of the database.

- Click on Export to start the export.

This will export data from the PostgreSQL instance on Google Cloud SQL as a CSV file to a GCS bucket.
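For readers who prefer to script this step, the same export can be requested through the Cloud SQL Admin API. The sketch below only builds the request URL and JSON body; it does not send anything. The project, instance, bucket, database, and query values are placeholders, and the helper name `build_csv_export_request` is hypothetical. Note that the API's CSV export takes a `selectQuery`, so you export the result of a query rather than a whole database.

```python
import json

SQLADMIN_BASE = "https://sqladmin.googleapis.com/v1"


def build_csv_export_request(project, instance, bucket_uri, database,
                             select_query, offload=True):
    """Return (url, body) for a Cloud SQL Admin API instances.export call."""
    if not bucket_uri.startswith("gs://"):
        raise ValueError("export target must be a gs:// URI")
    url = f"{SQLADMIN_BASE}/projects/{project}/instances/{instance}/export"
    body = {
        "exportContext": {
            "fileType": "CSV",
            "uri": bucket_uri,
            "databases": [database],
            # Mirrors the "Offload export" option in the console.
            "offload": offload,
            "csvExportOptions": {"selectQuery": select_query},
        }
    }
    return url, body


# Placeholder values; replace them with your own project details.
url, body = build_csv_export_request(
    project="my-project",
    instance="my-instance",
    bucket_uri="gs://mybucket/exports/orders.csv",
    database="appdb",
    select_query="SELECT * FROM orders",
)
print(url)
print(json.dumps(body, indent=2))
```

To run the export, you would send the body as an authenticated POST request to the printed URL, for example with an OAuth access token for an account that has the required Cloud SQL and Cloud Storage permissions.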

Step 1.2: Load the CSV File to BigQuery

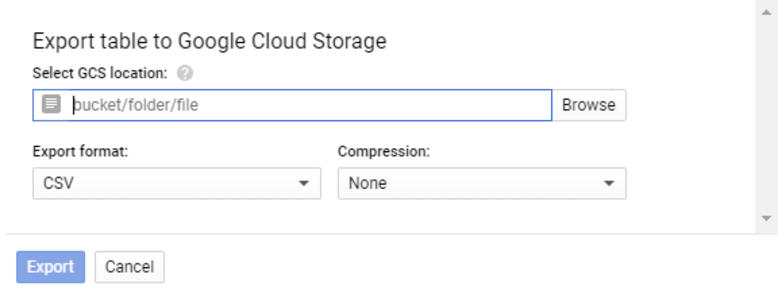

Now, you must load the CSV file into a BigQuery table. Here are the steps involved in this process:

- Navigate to the BigQuery Console.

- If you don’t already have a dataset in BigQuery, create one. Use the Create dataset option of a project to proceed with this step.

- In the dataset to which you want to migrate your data, click on Create table. Repeat this and the following steps for each table you want to load into the dataset.

- In the Source section of the Create table pane, select the Google Cloud Storage option.

- Click on BROWSE to select the file to load from the GCS bucket. Alternatively, you can provide a URI, like gs://mybucket/fed-samples/*.csv. This allows you to select only files with a .csv extension in the folder fed-samples and any of its subfolders.

- Specify the File format as CSV.

- Provide a name in the Table box.

- For Schema, you can select Auto-detect to automatically generate the schema.

- Click on CREATE TABLE to start the load job.

This will load the CSV files containing data from your Google Cloud PostgreSQL instance to a BigQuery table.
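The load settings chosen in the console correspond to a BigQuery load job configuration. As a rough sketch, the snippet below builds the JSON body that the BigQuery REST API's `jobs.insert` method accepts for such a job. The table ID and bucket path are placeholders, the helper names are hypothetical, and `WRITE_EMPTY` is an assumption chosen to mimic creating a new table (the job fails if the table already contains data).

```python
import json


def parse_table_id(table_id):
    """Split 'project.dataset.table' into a BigQuery table reference."""
    parts = table_id.split(".")
    if len(parts) != 3:
        raise ValueError("expected table ID in the form project.dataset.table")
    project, dataset, table = parts
    return {"projectId": project, "datasetId": dataset, "tableId": table}


def build_csv_load_job(source_uri, table_id, autodetect=True,
                       skip_leading_rows=0):
    """Return a jobs.insert body that loads CSV files from GCS into a table."""
    if not source_uri.startswith("gs://"):
        raise ValueError("source must be a gs:// URI")
    return {
        "configuration": {
            "load": {
                "sourceUris": [source_uri],
                "sourceFormat": "CSV",
                # Same effect as selecting Auto-detect for Schema.
                "autodetect": autodetect,
                # Set to 1 if your CSV files start with a header row.
                "skipLeadingRows": skip_leading_rows,
                "destinationTable": parse_table_id(table_id),
                "writeDisposition": "WRITE_EMPTY",
            }
        }
    }


# Placeholder values; the URI matches the wildcard example above.
job = build_csv_load_job(
    source_uri="gs://mybucket/fed-samples/*.csv",
    table_id="my-project.my_dataset.orders",
)
print(json.dumps(job, indent=2))
```

You would submit this body as an authenticated POST request to `https://bigquery.googleapis.com/bigquery/v2/projects/my-project/jobs`, with your own project ID in place of `my-project`. If auto-detection guesses a column type incorrectly, you can supply an explicit schema instead.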

Benefits of using CSV export/import for a PostgreSQL on Google Cloud SQL to BigQuery integration

- This method is ideal for one-time or infrequent data transfers, especially of smaller datasets, between the platforms. The associated latencies of the data export or import won’t impact operations significantly.

- You don’t require any scripting or programming knowledge to use this method. Both platforms provide simple UIs, making it easy to export or import data.

- There is no need for ongoing maintenance efforts since there is no pipeline, system, or tool involved in the process. This also reduces the costs associated with this migration.

- The comprehensive documentation of Google Cloud PostgreSQL and BigQuery will help simplify the process of handling CSV exports and imports.

Limitations of the CSV export/import method to connect PostgreSQL on Google Cloud SQL to BigQuery

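- The CSV export process in Cloud SQL can take an hour or more for large databases. During a standard export, you cannot perform any other operations on the instance. The Offload export option avoids this, but the export itself takes longer to complete.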

- This method isn’t suitable for real-time or near-real-time data migrations owing to the latencies involved in the export and manual import.

- You cannot automate the migration of data from PostgreSQL on Google Cloud SQL to BigQuery. Every time you want to move data, you must perform the repetitive tasks manually, making it effort-intensive and time-consuming.

Method 2: Use a No-Code Tool to Automate the Migration Process from PostgreSQL on Google Cloud SQL to BigQuery

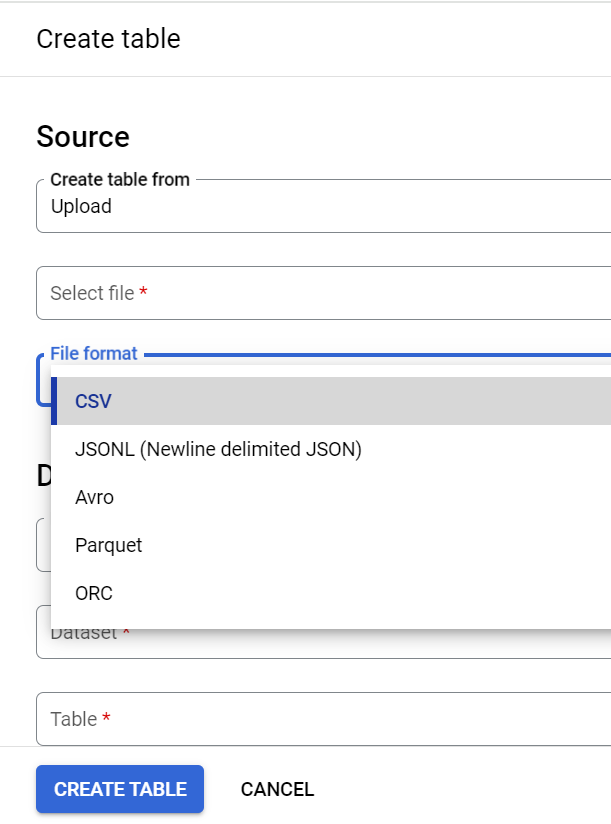

Step 2.1: Configure Google Cloud PostgreSQL as your Data Source

Step 2.2: Configure BigQuery as your Data Destination

With these two simple steps, you can connect PostgreSQL on Google Cloud SQL with BigQuery. There are numerous reasons to choose Hevo as your no-code ETL tool for integrating PostgreSQL on Google Cloud SQL with BigQuery.

Significant Features of Hevo

- Transformation: Hevo provides a drag-and-drop transformation feature, a user-friendly method of performing simple data transformations. Alternatively, you can use the Python interface for specific data transformations.

- Fully Managed Service & Live Support: Hevo manages the entire ETL process, from data extraction to loading, ensuring flawless execution. Additionally, Hevo provides round-the-clock support for your data integration queries via email, call, or live chat.

- Pre-Built Connectors: Hevo offers 150+ pre-built connectors for various data sources, enabling you to establish an ETL pipeline quickly.

- Live Monitoring: Hevo provides live monitoring support, allowing you to check the data flow at any point in time. You can also receive instant notifications about your data transfer pipelines across devices.

What Can You Achieve With PostgreSQL on Google Cloud SQL to BigQuery Integration?

A PostgreSQL on Google Cloud SQL to BigQuery integration can help data analysts answer questions such as:

- Are there any recurring patterns in customers’ purchase behavior, such as a correlation with certain marketing campaigns or seasonality?

- Which teams have the quickest response times?

- How often do customer complaints or queries get escalated, and which teams handle the highest number of escalations?

- What is the lifetime value of customers acquired through different marketing channels?

- Which marketing channels have the highest customer acquisition rate?
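As an illustration of how one of these questions could be answered once the data is available for analysis, the sketch below computes the average lifetime value per acquisition channel. The rows, column names, and channel names are made up for the example; in practice you would run the equivalent aggregation as a query over your BigQuery tables.

```python
from collections import defaultdict

# Hypothetical order rows; real data would come from your BigQuery tables.
orders = [
    {"customer_id": 1, "channel": "email", "amount": 120.0},
    {"customer_id": 1, "channel": "email", "amount": 80.0},
    {"customer_id": 2, "channel": "paid_search", "amount": 50.0},
    {"customer_id": 3, "channel": "email", "amount": 100.0},
]


def lifetime_value_by_channel(rows):
    """Average total revenue per distinct customer, grouped by channel."""
    revenue = defaultdict(float)
    customers = defaultdict(set)
    for row in rows:
        revenue[row["channel"]] += row["amount"]
        customers[row["channel"]].add(row["customer_id"])
    return {ch: revenue[ch] / len(customers[ch]) for ch in revenue}


ltv = lifetime_value_by_channel(orders)
print(ltv)
```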

Additional Resources on PostgreSQL to BigQuery Integration

- How to Load Data from PostgreSQL to BigQuery

- Heroku for PostgreSQL to BigQuery

- Migrate Data from Postgres to MySQL

- How to Migrate Data from PostgreSQL to SQL Server

Conclusion

Migrating data from PostgreSQL on Google Cloud SQL to BigQuery can provide your business with real-time insights to stay ahead of the competition.

To enhance your PostgreSQL performance, check out our blog on query optimization tricks and techniques.

You can integrate these two platforms using two methods. The first method involves exporting Google Cloud PostgreSQL data as CSV files and loading these files into BigQuery tables. Its drawbacks are that it is time-consuming and effort-intensive, and it lacks real-time and automation capabilities.

Consider using no-code tools like Hevo Data to overcome these limitations of the CSV export/import method. It is a fully managed tool and includes benefits like readily available built-in integrations, auto schema management, and scalability to simplify the migration process.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Frequently Asked Questions (FAQs)

1. How to transfer data from PostgreSQL to BigQuery?

You can use Hevo to easily transfer data from PostgreSQL to BigQuery in real-time without coding, ensuring seamless integration. Other options include using BigQuery Data Transfer Service or writing custom scripts.

2. Can I use PostgreSQL in BigQuery?

No, BigQuery doesn’t directly run PostgreSQL, but you can load PostgreSQL data into BigQuery for analysis.

3. How do I sync Cloud SQL with BigQuery?

You can sync Cloud SQL with BigQuery using BigQuery’s Data Transfer Service or set up custom pipelines with tools like Cloud Dataflow.