Unlock the full potential of your Azure MySQL data by integrating it seamlessly with Snowflake. With Hevo’s automated pipeline, get data flowing effortlessly—watch our 1-minute demo below to see it in action!

In today’s digital era, businesses continually look for ways to manage their data assets. Azure Database for MySQL is a robust storage solution that manages relational data. However, as your business grows and data becomes more complex, managing and analyzing it becomes more challenging.

- This is where Snowflake comes in. Snowflake enables you to analyze complex data from multiple sources, giving you a complete view of your business operations.

- Migrating data from Azure MySQL to Snowflake is a strategic move to generate data-driven insights and make informed decisions for your business.

This article is a comprehensive guide on how to connect and load data from Azure MySQL to Snowflake using different methods.

Table of Contents

Why Integrate Data from Azure MySQL to Snowflake?

Integrating data from Azure MySQL to Snowflake helps you optimize your data processing and analysis. Here are two important reasons to move your data from Azure MySQL to Snowflake:

- Multi-Cluster Architecture: Snowflake is designed to meet your storage, processing, and analytics needs. Its multi-cluster architecture processes every query independently by its virtual cluster, ensuring it runs without impacting other users’ performance.

- Support Complex Analysis: You can perform complex analytics tasks, such as predictive modeling, machine learning, statistical analysis, and more, to optimize your processes, resources, and decisions.

Overall, the integration enables you to work efficiently on your data even in high concurrent environments.

Use Hevo’s no-code data pipeline platform, which can help you automate, simplify, and enrich your data replication process in a few clicks. You can extract and load data from 150+ Data Sources, including Azure MySQL straight into your Data Warehouse, such as Snowflake or any Database.

Why Hevo?

- Incremental Data Load: Hevo allows the transfer of data that has been modified in real time. This ensures efficient utilization of bandwidth on both ends.

- Live Support: Our team is available round the clock to extend exceptional support to our customers through Chat, Email, and Support Calls.

- Automapping: Hevo provides you with an automapping feature to automatically map your schema.

Explore Hevo’s features and discover why it is rated 4.3 on G2 and 4.7 on Software Advice for its seamless data integration. Try out the 14-day free trial today to experience hassle-free data integration.

Get Started with Hevo for FreeOverview of Azure MySQL

- Azure Database for MySQL is a fully managed relational database that is easy to set up and scale.

- It provides automated maintenance of your MySQL database server, including routine updates, backups, and security checkups.

- Azure MySQL supports SQL-based querying, making it suitable for various applications.

- Also, it has a pay-as-you-go model, where you only pay for resources you use, making it cost-effective for all users and businesses.

Overview of Snowflake

- Snowflake is a fully managed SaaS data warehousing, analysis, and sharing platform.

- It allows you to create databases within data warehouses and can be hosted on different platforms, such as Microsoft Azure, AWS, and Google Cloud Platform.

- Its unique architecture separates storage and computing layers, allowing you to pay for the services you actively use.

- Thus, Snowflake provides data scalability, making it a sound choice among modern-day businesses.

Methods to Migrate Data from Azure MySQL to Snowflake

There are two ways to migrate Azure MySQL to Snowflake smoothly:

- Method 1: Migrating Data from Azure MySQL to Snowflake Using Hevo

- Method 2: Migrating Data from Azure MySQL to Snowflake Using CSV Files

Method 1: Migrating Data from Azure MySQL to Snowflake Using Hevo

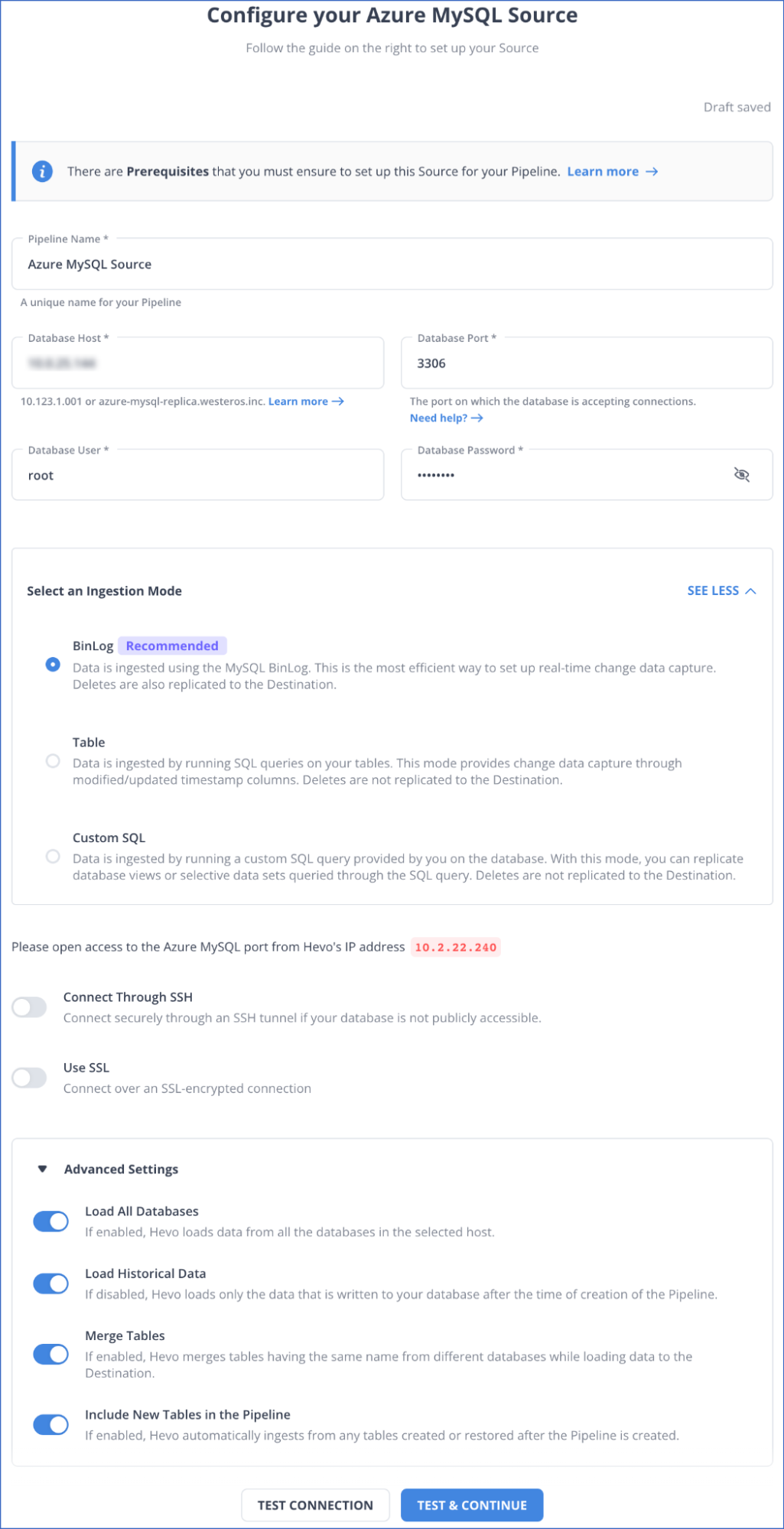

Step 1: Configure Azure MySQL as Your Source

Prerequisites

- Check if your Azure MySQL database instance is running

- Set up MySQL Binary log and enable replication.

- Whitelist Hevo’s IP Addresses.

- Create a database user to configure your source and grant permissions.

- You must be a Team Collaborator, Team Administrator, or Pipeline Administrator to create a data pipeline in Hevo.

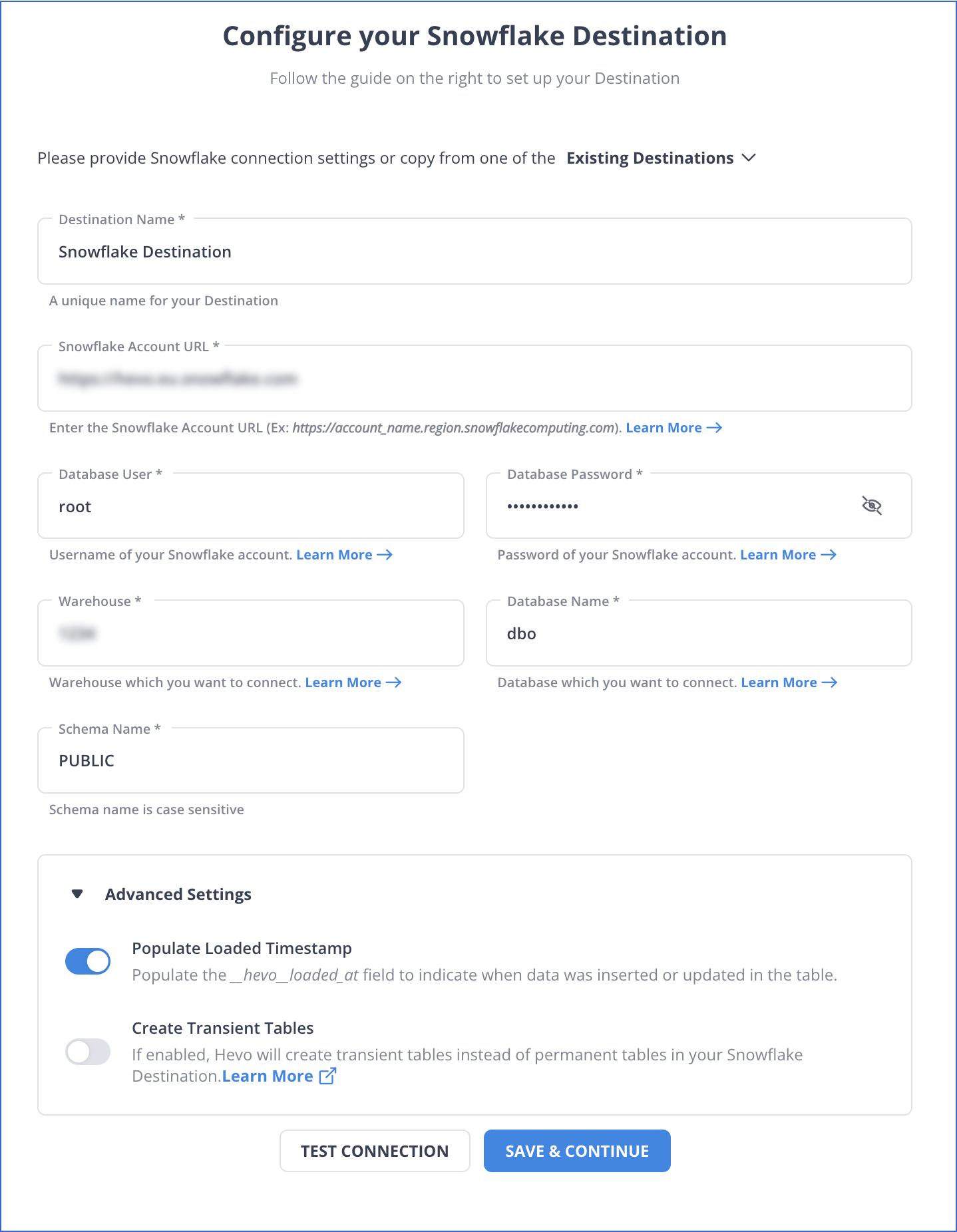

Step 2: Configure Snowflake as Your Destination

Prerequisites

- Create a Snowflake account that should be active.

- Create your Snowflake warehouse.

- You must have either SECURITYADMIN or ACCOUNTADMIN role in Snowflake to generate a new role or Hevo.

- Hevo must be assigned USAGE and CREATE SCHEMA permissions on databases.

- Hevo must be assigned USAGE, MONITOR, CREATE, and MODIFY permissions for the current and future schema.

- You must obtain your Snowflake Account URL.

- To create the destination in Hevo, you must be assigned any administrator role except for the Billing Administrator.

Hence, your data will be replicated from the source to the desired destination.

Method 2: Load Data from Azure MySQL to Snowflake Using CSV Files

With this method, you will learn how to load Azure MySQL to Snowflake using CSV Files.

Prerequisites

- Connect your MySQL Workbench to your MySQL database hosted on Azure.

- Provide details on setting the connection, such as the username and password.

Step 1: Export Data from Azure MySQL to a CSV File

First, you must use MySQL Workbench and the Data Export Wizard to export your Azure MySQL data into a CSV file. Then, you can load this CSV file into the Snowflake table using the COPY command.

There are two methods to export data in a CSV file using MySQL Workbench:

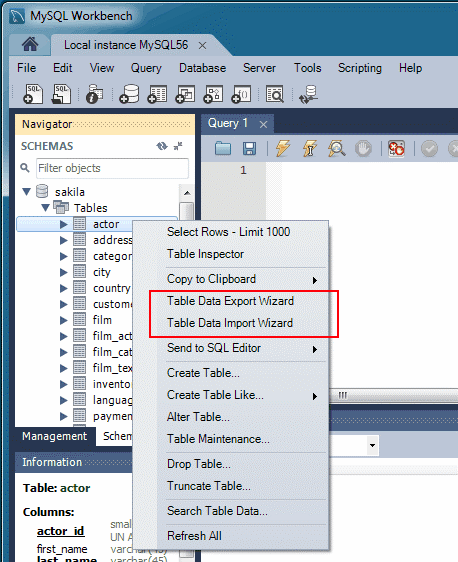

Method 1: Using a Data Export Wizard from Object Browser

You can access the wizard from the Object Browser. Go to the Context Menu, right-click on the table you want to export, and then click Table Data Export Wizard.

Follow the steps to export the table to a CSV file:

- Right-click on the table you want to export.

- Click on Table Data Export Wizard. You can select columns, row offset, and count (if any).

- On Select data for export window, select Next and then choose the file path and CSV file type.

- Also, choose the method for enclosing strings and line and field separators.

- On the Select output file location window, select Next. This will lead to the Export data pane. Select Next to export the table to a CSV file.

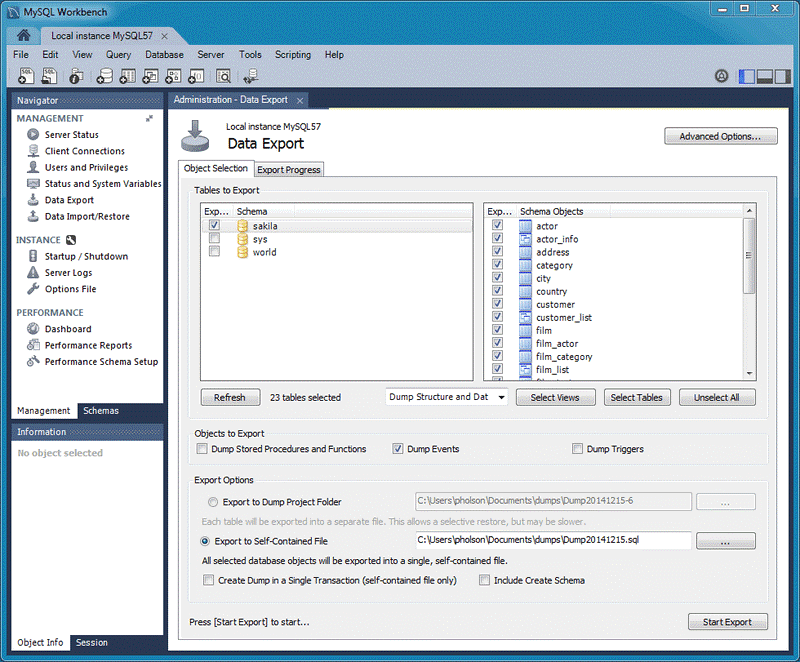

Method 2: Using a Data Export Wizard from Navigator Pane

You can access the Data Export Wizard directly from the Navigator pane to export Azure MySQL data to a CSV file.

Follow the steps below to export data from the MySQL database in Azure:

- In the Navigator pane, select Data Export.

- On the Data Export window, select the CSV file schemas you want to export.

- Choose the database object for exporting and configuring the related options.

- Refresh to load present objects.

- Select the Start Export option to start the export process.

Step 2: Export Data from CSV File to Snowflake

You can load your local CSV files into Snowflake using the COPY command.

Prerequisites

- Create a Snowflake account with the necessary privileges to create databases, tables, and objects.

- Install SnowSQL.

Follow the steps below to copy data to target tables in Snowflake:

Step 2.1: Create a File Format Object for Your CSV Data

Run the CREATE FILE FORMAT command to create a ‘mycsvformat’ file format.

CREATE OR REPLACE FILE FORMAT mycsvformat

TYPE = 'CSV'

FIELD_DELIMITER = '|'

SKIP_HEADER = 1;Step 2.2: Create the Stage Objects

Execute the CREATE STAGE command to create a ‘my_csv_stage’ stage.

CREATE OR REPLACE STAGE my_csv_stage

FILE_FORMAT = mycsvformat;Step 2.3: Stage Your CSV Data Files

You must use the PUT command to upload your sample data files from the local system to the stage you created earlier.

For Linux or macOS

PUT file:///tmp/load/contacts*.csv @my_csv_stage AUTO_COMPRESS=TRUE;For Windows

PUT file://C:\temp\load\contacts*.csv @my_csv_stage AUTO_COMPRESS=TRUE;Step 2.4: Copy the Data in the Target Table

Execute the COPY INTO<table> to load the staged data to the target table. This example uses contact information uploaded to Snowflake.

COPY INTO mycsvtable

FROM @my_csv_stage/contacts1.csv.gz

FILE_FORMAT = (FORMAT_NAME = mycsvformat)

ON_ERROR = 'skip_file';After copying data to the target tables, you must remove copied data files from the intimidatory staged table using the Remove command.

Limitations to Load Azure MySQL to Snowflake Using CSV Files

- When using the Data Export Wizard to export data to a CSV file, you must manually set up configuration options like separators and mapping. This can be confusing if you are unfamiliar with the wizard’s functionalities.

- If the COPY command skips files when loading the staged CSV file into the target table, errors, losses, and inconsistencies are generated. These losses and inconsistencies can be especially challenging when dealing with large datasets.

Use Cases

- Operations Optimization: Integrating data from Azure MySQL to Snowflake enables you to set up automated alerts, dashboards, and reports within Snowflake. It helps you detect anomalies and take proactive steps to address them promptly.

- Advanced Analytics and Machine Learning: Snowflake supports programming different languages, such as SQL, Python, R, etc. Using these languages, you can perform advanced analytics tasks on Azure MySQL data, such as analyzing historical data to recognize trends and patterns.

Here are some other migrations that you may need:

Conclusion

- Migrating data from Azure MySQL to Snowflake allows you to handle your varying workloads efficiently.

- To export and read Azure MySQL in Snowflake, you can either use Hevo, a third-party data integration tool, or CSV files.

- Hevo offers automation and real-time capabilities, whereas utilizing CSV files leans towards manual processes.

Try a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also, check out our unbeatable pricing to choose the best plan for your organization.

FAQs (Frequently Asked Questions)

1. Is there a method to export MySQL data to Snowflake without a third-party connector?

You can use CSV files to extract data from the MySQL database and move it into your Snowflake tables. However, this process is lengthy.

2. What are some of the best tools to automate integration between Azure MySQL database and Snowflake?

Hevo is one of the best tools for integrating data between the Azure MySQL database and Snowflake. It is a real-time ELT platform that simplifies your data integration process with its automated data pipeline.