Quick Takeaway

Quick TakeawayManually migrating data from SQL Server to BigQuery involves extracting, transforming, and loading data using custom scripts, but it is time-consuming and prone to errors. Other methods like Cloud Data Fusion Web Interface offer a flexible but code-driven solution. No-code tool like Hevo simplifies the process by automating ETL and real-time syncing.

Detailed breakdown:

Method 1: Using Custom Scripts

- Step 1: Export the Data from SQL Server using SQL Server Management Studio (SSMS)

- Step 2: Upload to Google Cloud Storage

- Step 3: Upload to BigQuery from Google Cloud Storage (GCS)

- Step 4: Update the Target Table in BigQuery

Method 2: Using Cloud Data Fusion

- Step 1: Create and Deploy a Job in the Cloud Data Fusion Web Interface

- Step 2: Start and Monitor the Job

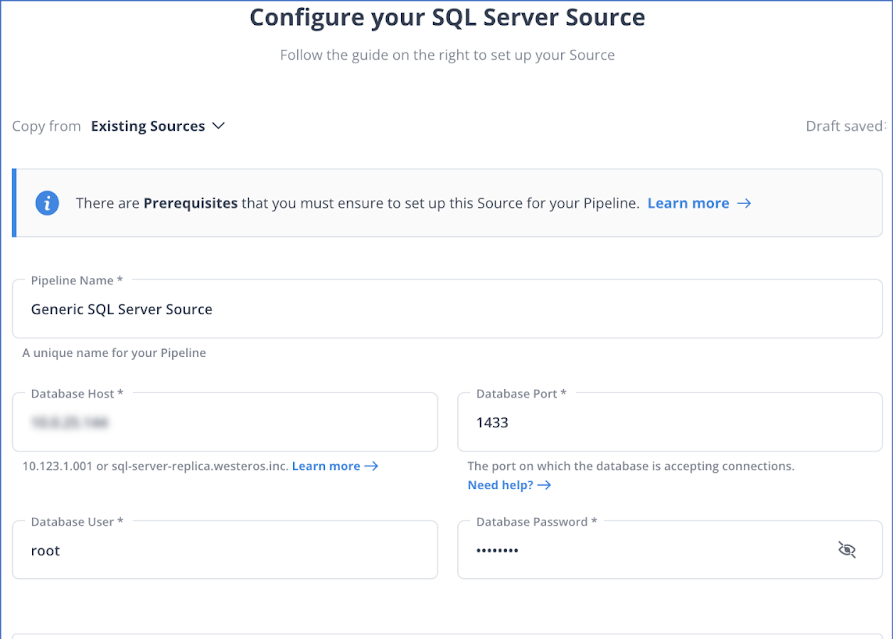

- Step 1: Configuring SQL Server as your Source

- Step 2: Connecting BigQuery as your Destination

Migrating data from SQL Server to BigQuery can unlock powerful analytics capabilities, but it also comes with a unique set of challenges. This article will walk you through the end-to-end process of setting up the integration, highlight common pitfalls, and help you overcome them with ease. We’ll explore three popular methods to move your data efficiently and ensure a smooth transition to BigQuery.

Table of Contents

Overview of SQL Server

SQL Server is a relational database management system (RDBMS) developed by Microsoft. It is designed to store, retrieve, and manage data in a structured format using SQL (Structured Query Language). SQL Server provides a robust platform for data management and analytics, with features supporting high performance, scalability, and security. It is ideal for both small-scale applications and large enterprise environments.

Key Features of SQL Server

- TSQL: Powerful language for running complex queries and creating stored procedures.

- Scalability: Supports huge databases and multiple concurrent users.

- High Availability: Features of SQL Server High Availability include Always On and Failover clustering.

- Security: Tight security through solid encryption, auditing, and row-level security.

- Integration: Integrates very well with other Microsoft services and third-party tools.

- Data Tools: In-depth tools for ETL, reporting, and data analysis.

- Cloud Integration: Comparatively much easier to integrate with Azure services.

What is BigQuery?

BigQuery is Google’s cloud enterprise data warehouse that primarily serves business agility in running complex SQL queries and performing analysis on huge datasets efficiently. It is based on Google technology called Dremel, using columnar storage and tree architecture to support high-speed scanning of data for querying efficiency.

Key Features of BigQuery

- Serverless Architecture: BigQuery manages servers and storage in the background, so a user does not need to.

- High Scalability: It scales seamlessly to handle petabytes of data.

- SQL Compatibility: It supports ANSI SQL, which is useful for people who already know SQL and want to write and run queries. This also allows a user to combine various BI tools for data visualization.

- Machine Learning: BigQuery ML allows users to train and run machine learning models in BigQuery using only SQL syntax.

Facing challenges migrating your customer and product data from SQL Server into BigQuery? Migrating your data can become seamless with Hevo’s no-code intuitive platform. With Hevo, you can:

- Automate Data Extraction: Effortlessly pull data from SQL Server(and other 60+ free sources).

- Transform Data effortlessly: Use Hevo’s drag-and-drop feature to transform data with just a few clicks.

- Seamless Data Loading: Quickly load your transformed data into your desired destinations, such as BigQuery.

Try Hevo and join a growing community of 2000+ data professionals who rely on us for seamless and efficient migrations.

Get Started with Hevo for FreeHow To Set Up Microsoft SQL Server BigQuery Integration?

Method 1: Using Hevo Data [Recommended]

Step 1.1: Configure MS SQL Server As Your Source

Step 1.2: Configure BigQuery As Your Destination

With this, you have successfully set up Microsoft SQL Server BigQuery Integration using Hevo Data.

Method 2: Connecting SQL Server and BigQuery Using JDBC Driver and Cloud Data Fusion Web Interface

Prerequisites:

- Download the SQL Server JDBC driver on your local machine.

- Create a public Cloud Data Fusion instance in version 6.3.0 or later. If you create a private instance, set up VPC network peering.

- Enable Cloud Data Fusion, BigQuery account, and Cloud Storage APIs.

- Enable CDC in your SQL Server database

Step 2.1: Create and Deploy a Job in the Cloud Data Fusion Web Interface

- Click ‘Replication’ in the Cloud Data Fusion interface, then create a replication job.

- Enter a Job Name and click ‘Next’.

- Configure Source:

- Select Microsoft SQL Server as the source.

- Enter the Host, Port (1433), and Database Name (e.g., Hevo_test).

- Choose the JDBC plugin Name and provide the required credentials.

- Click ‘Next’.

- Configure Destination

- Select BigQuery as the target.

- Use the default Project ID and Service Account Key, or customize options in the Advanced section (e.g., Cloud Storage bucket, load-interval).

- Click ‘Next’.

- Choose tables from your database and specify events like Insert, Update, and Delete and click Next.

- Review mappings for schema issues or connectivity problems and resolve them.

- Review the replication job details and click ‘Deploy Replication Job’.

Step 2.2: Start and Monitor the Job

- From the replication page, click the replication job name and then click ‘Monitoring’.

- Review the replication job details and deploy the replication job.

Method 3: Manual ETL Process to Set Up Data Integration

The steps to execute the custom code are as follows:

- Step 3.1: Export the Data from SQL Server using SQL Server Management Studio (SSMS)

- Step 3.2: Upload to Google Cloud Storage

- Step 3.3: Upload to BigQuery from Google Cloud Storage (GCS)

- Step 3.4: Update the Target Table in BigQuery

Step 3.1: Export the Data from SQL Server using SQL Server Management Studio (SSMS)

SQL Server Management Studio(SSMS) is a free tool built by Microsoft to enable a coordinated environment for managing any SQL infrastructure. SSMS is used to query, design, and manage your databases from your local machine. You can use the SSMS to extract the data in comma separated value (CSV) format in the steps below.

- Install SSMS if you don’t have it on your local machine.

- Open SSMS and connect to a structured query language (SQL) instance. Select a database from the object explorer window, right-click the tasks sub-menu, and choose the ‘Export’ data option.

- The welcome page of the Server Import and Export Wizard will be opened. Click the ‘Next’ icon to proceed with exporting the required data.

- You will now see a window to choose a data source. Select your preferred data source.

- In the Server name dropdown list, select a SQL Server instance.

- In the Authentication section, choose the authentication method for the data source connection. Next, from the Database dropdown list, select a database from which data will be copied. Once you have filled out the required fields, click Next.

- The following window is the Choose the Destination window. Here, you need to specify the location where the data will be copied into the SQL Server. Under the Destination dropdown list, select the Flat File destination option.

- In the File name box, specify the CSV file where the data from the SQL database will be exported, and then click Next.

- The next window is the Specify Table Copy or Query window, where you can choose to copy data from one or more tables or views to get all the data from the selected table(s).

- Next, you will see a Configure Flat File Destination window. Select the table from the source table to export the data to the CSV file you specified earlier.

- At this point, your file would have been exported. To view the exported file, click ‘Preview’.

- Complete the exportation process by hitting ‘Next‘. The Save and Run package window will pop up. Click on ‘Next‘.

- The Complete Wizard window will appear next. It gives you an overview of your choices during the exporting process. To complete the exportation process, hit on ‘Finish‘.

- The exported CSV file will be found in Local Drive, where you specified it would be exported.

Step 3.2: Upload to Google Cloud Storage

After completing the exporting process to your local machine, the next step is to transfer the CSV file to Google Cloud Storage(GCS). Let’s discuss the following methods:

Method 3.2.1: Using gsutil

gsutil is a GCP tool that uses Python and gives you access to GCS from the command line. To initiate gsutil, refer to the quickstart documentation. gsutil provides a unique way to upload a file to GCS from your local machine. To create a bucket in which you copy your file to:

gsutil mb gs://my-new-bucketThe new bucket created is called “my-new-bucket“. Your bucket name must be globally unique. If successful the command returns:

Creating gs://my-new-bucket/...To copy your file to GCS:

gsutil cp export.csv gs://my-new-bucket/destination/export.csv

In this command, “export.csv” refers to the file you want to copy. “gs://my-new-bucket” represents the GCS bucket you created earlier. Finally, “destination/export.csv” specifies the destination path and filename in the GCS bucket where the file will be copied to.

Method 3.2.2: Using Web Console

The web console is another alternative to upload your CSV file to the GCS from your local machine. The steps to use the web console are outlined below.

- First, you will have to log in to your GCP account. Toggle on the hamburger menu, which displays a drop-down menu. Select Storage and click Browser on the left tab.

- In order to store the file you want to upload from your local machine, create a new bucket. You need to ensure the name chosen for the browser is globally unique.

- The bucket you just created will appear in the window. Click on it and select ‘Upload Files’. This action will redirect you to your local drive, where you will need to choose the CSV file you want to upload to GCS.

- As soon as you start uploading, a progress bar is shown. The bar disappears once the process has been completed. You will be able to find your file in the bucket.

Step 3.3: Upload Data to BigQuery From GCS

BigQuery is where the data analysis you need will be carried out. Hence, you need to upload your data from GCS to BigQuery.

- The first point of call when using the Web UI method is to select BigQuery under the hamburger menu on the GCP home page.

- Select the “Create a new dataset” icon and fill in the corresponding drop-down menu.

- Create a new table under the data set you just created to store your CSV file.

- In the create table page select GCS to browse your bucket and select the CSV file you uploaded to GCS – make sure your file format is set to CSV.

- Fill in the destination tab and the destination table.

- Under schema, click on the auto-detect schema.

- Select create a table.

- After creating the table, click on the destination table name you created to view your exported data file.

- Using the Command Line Interface, the Activate Cloud Shell icon will take you to the command-line interface. You can also use the auto-detect feature to specify your schema.

Your schema can be specified using the command line. An example is shown below:

bq load --autodetect --source_format=CSV --schema=schema.json your_dataset.your_table gs://your_bucket/your_file.csv

In the above example, schema.json refers to the file containing the schema definition for your CSV file. You can customize the schema by modifying the schema.json file to match the structure of your data.

There are three ways to write to an existing table on BigQuery. You can make use of any of them to write to your table. Illustrations of the options are given below

A. Overwrite the data

To overwrite the data in an existing table, you can use the --replace flag in the bq command. Here’s an example code:

bq load --replace --source_format=CSV your_dataset.your_table gs://your_bucket/your_file.csv

In the above code, the --replace flag ensures that the existing data in the table is replaced with the new data from the CSV file.

B. Append the table

To append data to an existing table, you can use the --noreplace flag in the bq command. Here’s an example code:

bq load --noreplace --source_format=CSV your_dataset.your_table gs://your_bucket/your_file.csv

The --noreplace flag ensures that the new data from the CSV file is appended to the existing data in the table.

C. Add a new field to the target table. An extra field will be added to the schema.

To add a new field (column) to the target table, you can use the bq update command and specify the schema changes. Here’s an example code:

bq update your_dataset.your_table --schema schema.json

In the above code, schema.json refers to the file containing the updated schema definition with the new field. You need to modify the schema.json file to include the new field and its corresponding data type.

Please note that these examples assume you have the necessary permissions and have set up the required authentication for interacting with BigQuery.

Step 3.4: Update the Target Table in BigQuery

GCS (Google Cloud Storage) acts as a staging area for BigQuery. When using the command line to upload data to BigQuery, the data is first stored in an intermediate table. For the changes to reflect in the target table, the data in the intermediate table must be updated.

There are two ways to update the target table in BigQuery.

3.4.1] Update the rows in the final table and insert new rows from the intermediate table.

UPDATE final_table t SET t.value = s.value

FROM intermediate_data_table s

WHERE t.id = s.id;

INSERT INTO final_table (id, value)

SELECT id, value

FROM intermediate_data_table

WHERE id NOT IN (SELECT id FROM final_table);

In the above code, final_table refers to the name of your target table, and intermediate_data_table refers to the name of the intermediate table where your data is initially loaded.

3.4.2] Delete all the rows from the final table which are in the intermediate table.

DELETE FROM final_table

WHERE id IN (SELECT id FROM intermediate_data_table);

In the above code, final_table refers to the name of your target table, and intermediate_data_table refers to the name of the intermediate table where your data is initially loaded.

Make sure to replace final_table and intermediate_data_table with the actual table names you are working with.

This marks the completion of the SQL Server BigQuery connection. Now, you can seamlessly sync your CSV files into the GCP bucket in order to integrate SQL Server with BigQuery and supercharge your analytics to get insights from your SQL Server database.

Limitations of Manual ETL Process to Set Up Microsoft SQL Server BigQuery Integration

Businesses need to put systems in place that will enable them to gain the insights they need from their data. These systems have to be seamless and rapid. Using custom ETL scripts to connect has limitations that will affect the reliability and speed of these systems:

- Writing custom code is only ideal if you’re looking to move your data once from Microsoft SQL Server into BigQuery.

- Custom ETL code does not scale well with stream and real-time data. You will have to write additional code to update your data, which is not ideal.

- When there’s a need to transform or encrypt your data, custom ETL code fails as it will require you to add additional processes to your pipeline.

- Maintaining and managing a running data pipeline using custom scripts will require you to invest heavily in engineering resources.

- BigQuery does not ensure data consistency for external data sources, as changes to the data may cause unexpected behavior while a query is running.

- The data set’s location must be in the same region or multi-region as the Cloud Storage Bucket.

- CSV files cannot contain nested or repetitive data since the format does not support it.

- When utilizing a CSV, including compressed and uncompressed files in the same load job is impossible.

- The maximum size of a gzip file for CSV is 4 GB.

While writing code to move data from SQL Server into BigQuery looks like a no-brainer in the beginning, however, the implementation and management are much more nuanced than that. The process has a high propensity for errors, which will, in turn, greatly impact the data quality and consistency.

What Are The Use Cases For Migrating From Sql Server To Bigquery?

Integrating data from SQL Server into BigQuery offers several advantages. Here are a few usage scenarios:

- Advanced Analytics: The BigQuery destination’s extensive data processing capabilities allow you to run complicated queries and data analyses on your SQL Server data, deriving insights that would not be feasible with SQL Server alone.

- Data Consolidation: If you’re using various sources in addition to SQL Server, synchronizing to a BigQuery destination allows you to centralize your data for a complete picture of your operations, as well as set up a change data collection process to ensure that there are no discrepancies in your data again.

- Historical Data Analysis: SQL Server has limitations with historical data. Syncing data into BigQuery enables long-term data retention and study of historical trends over time.

- Data Security and Compliance: BigQuery includes sophisticated data security capabilities. Syncing SQL Server data to a BigQuery destination secures your data and enables comprehensive data governance and compliance management.

- Scalability: BigQuery can manage massive amounts of data without compromising speed, making it a perfect solution for growing enterprises with expanding SQL Server data.

Best Practices For SQL Server BigQuery Integration

- Plan Your Data Model: Before transferring data, ensure your data model is aligned between SQL Server and BigQuery to avoid issues during migration.

- Optimize Data Types: Match data types between SQL Server and BigQuery to avoid data loss or errors. Be mindful of differences in data type handling.

- Use Efficient Data Transfer Methods: Leverage bulk data transfer methods like Google Cloud Storage or use tools like Hevo to simplify and automate the process.

- Automate Data Transfers: Set up automated pipelines to regularly sync data, reducing manual work and improving efficiency.

- Monitor and Maintain Performance: Regularly monitor the performance of your BigQuery queries and optimize them by using partitioned tables and clustering to improve query speed and cost.

- Ensure Data Security: Protect sensitive data by using encryption, both in transit and at rest, and implement proper access controls.

- Test and Validate: Always test data transfers and validate that the data in BigQuery matches the original data in SQL Server to avoid discrepancies.

Conclusion

This article gave you a comprehensive guide to setting up Microsoft SQL Server BigQuery integration using three popular methods. It also gave a brief overview of Microsoft SQL Server and Google BigQuery. The limitations associated with the custom ETL method to connect SQL Server with BigQuery are also discussed in this article.

With Hevo, you can achieve simple and efficient data replication from Microsoft SQL Server into BigQuery. Hevo can help you move data from SQL Server and other 150+ data sources. Sign up for Hevo’s 14-day free trial and experience seamless data migration.

FAQs

1. Can you connect SQL Server to BigQuery?

Yes, you can connect SQL Server to BigQuery and migrate data between them.

2. How to migrate data from SQL Server to BigQuery?

a) Using Google Cloud Dataflow with Apache Beam

b) Using Google Cloud Storage (GCS) as an Intermediary

c) Using Data Migration Tools like Hevo

3. Can I use SQL in BigQuery?

Yes, you can use SQL in BigQuery.