With growing volumes of data, is your SQL Server getting slow for analytical queries? Or are you simply migrating data from MS SQL Server to Redshift? Whatever your use case, moving data from MS SQL Server to Redshift is a smart move.

This article covers, in detail, the various approaches you can use to load data from SQL Server to Redshift. This includes the steps involved in writing custom code to load data from SQL Server to Amazon Redshift, along with the limitations of that approach.

Note: When migrating from MS SQL Server to Redshift, make sure that the transferred SQL Server workloads are compatible with Redshift and optimized for its performance.


What is MS SQL Server?

Microsoft SQL Server is a relational database management system (RDBMS) developed by Microsoft. It is designed to store and retrieve data as requested by other software applications, which can run on the same computer or connect to the database server over a network.

Some key features of MS SQL Server:

- It is primarily used for online transaction processing (OLTP) workloads, which involve frequent database updates and queries.

- It supports a variety of programming languages, including T-SQL (Transact-SQL), .NET languages, Python, R, and more.

- It provides features for data warehousing, business intelligence, analytics, and reporting through tools like SQL Server Analysis Services (SSAS), SQL Server Integration Services (SSIS), and SQL Server Reporting Services (SSRS).

- It offers high availability and disaster recovery features like failover clustering, database mirroring, and log shipping.

- It supports a wide range of data types, such as XML and spatial data, as well as memory-optimized (in-memory) tables.

Using specialized ETL tools for SQL Server and Redshift can simplify the migration process to Redshift, enabling smooth data extraction, transformation, and loading.

What is Amazon Redshift?

Amazon Redshift is a cloud-based data warehouse service offered by Amazon Web Services (AWS). It’s designed to handle massive amounts of data, allowing you to analyze and gain insights from it efficiently. Here’s a breakdown of its key features:

- Scalability: Redshift can store petabytes of data and scale to meet your needs.

- Performance: It uses a parallel processing architecture to analyze large datasets quickly.

- Cost-effective: Redshift offers pay-as-you-go pricing, so you only pay for what you use.

- Security: Built-in security features keep your data safe.

- Ease of use: A fully managed service, Redshift requires minimal configuration.

Understanding the Methods to Connect SQL Server to Redshift

A good understanding of the different methods to migrate SQL Server to Redshift can help you make an informed choice.

- Method 1: Using Hevo Data to Connect SQL Server to Redshift

Hevo Data provides an automated, no-code solution for SQL Server replication. Hevo not only replicates your data from SQL Server but also enriches and transforms it into an analysis-ready format, all without the need for code.

- Method 2: Using Custom ETL Scripts to Connect SQL Server to Redshift

This method offers two ways to load your data: a one-time load, and an incremental load for when the data volume is high.

- Method 3: Using AWS Database Migration Service (DMS) to Connect SQL Server to Redshift

AWS DMS is a fully managed service designed to minimize downtime and handle large-scale migrations with ease.

Method 1: Using Hevo Data to Connect SQL Server to Redshift

Hevo helps you directly transfer data from SQL Server and various other sources to a Data Warehouse, such as Redshift, or a destination of your choice in a completely hassle-free & automated manner. Hevo is fully managed and completely automates the process of not only loading data from your desired source but also enriching the data and transforming it into an analysis-ready form without having to write a single line of code. Its fault-tolerant architecture ensures that the data is handled securely and consistently with zero data loss.

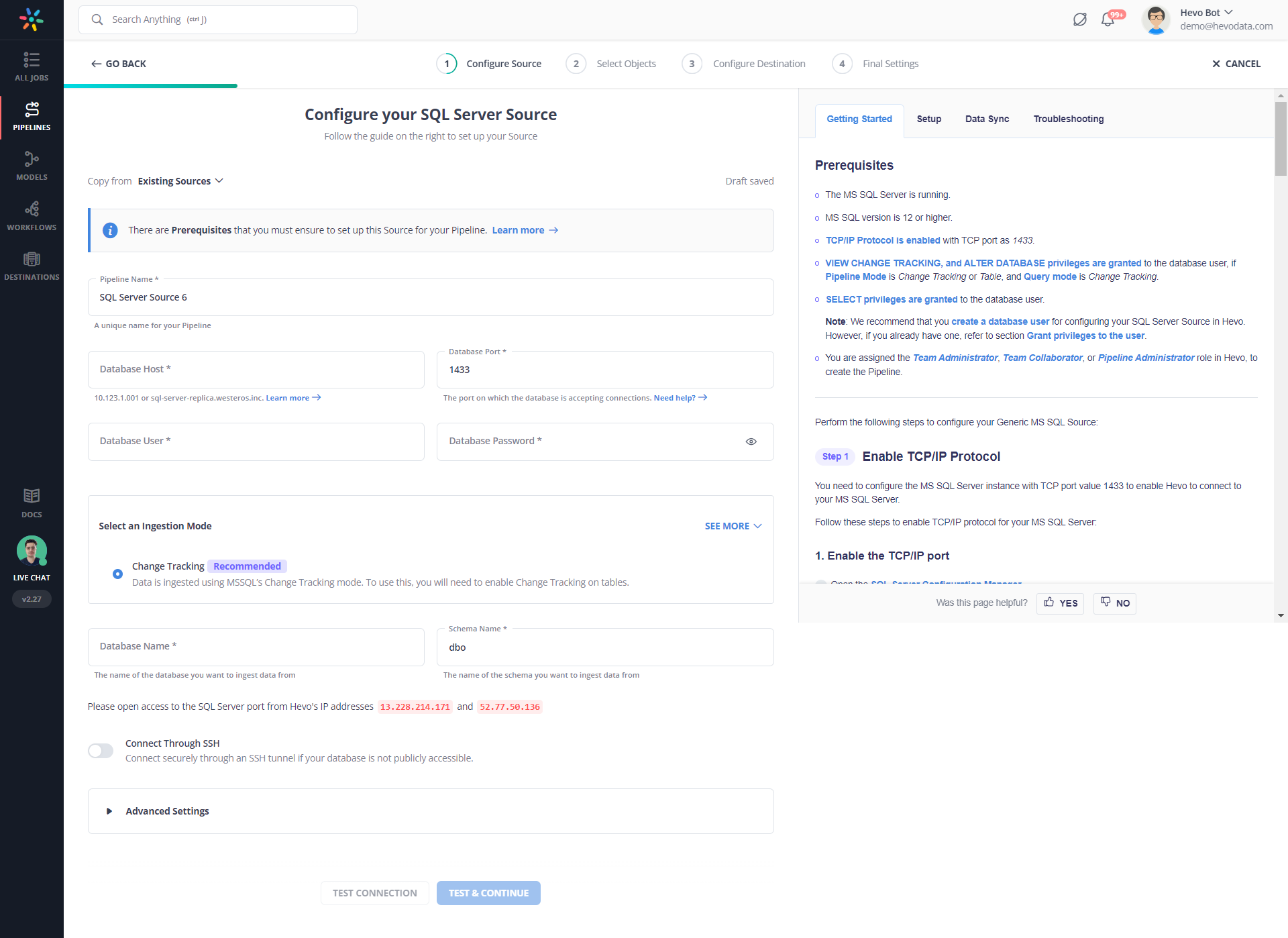

Step 1: Configure MS SQL Server as your Source

- Click PIPELINES in the Navigation Bar.

- Click + CREATE in the Pipelines List View.

- In the Select Source Type page, select the SQL Server variant.

- In the Configure your SQL Server Source page, specify the following:

Step 2: Select the Replication Mode

Select the replication mode: (a) Full Dump and Load, (b) Incremental load for append-only data, or (c) Incremental load for mutable data.

Step 3: Integrate Data into Redshift

- Click DESTINATIONS in the Navigation Bar.

- Click + CREATE in the Destinations List View.

- In the Add Destination page, select Amazon Redshift.

- In the Configure your Amazon Redshift Destination page, specify the following:

As you can see, you only need to enter the corresponding credentials to set up this fully automated data pipeline, without writing any code.

Check out what makes Hevo amazing:

- Real-Time Data Transfer: With its strong integration with 150+ sources, Hevo allows you to transfer data quickly and efficiently, making efficient use of bandwidth on both ends.

- Data Transformation: It provides a simple interface to perfect, modify, and enrich the data you want to transfer.

- Secure: Hevo has a fault-tolerant architecture that ensures that the data is handled securely and consistently with zero data loss.

- Tremendous Connector Availability: Hevo houses a large variety of connectors and lets you bring in data from numerous marketing and SaaS applications, databases, and more, such as Google Analytics 4, Google Firebase, Airflow, HubSpot, Marketo, MongoDB, Oracle, Salesforce, and Redshift, in an integrated and analysis-ready form.

- Simplicity: Using Hevo is easy and intuitive, ensuring that your data is exported in just a few clicks.

- Completely Managed Platform: Hevo is fully managed. You need not invest time and effort in maintaining or monitoring the infrastructure involved in executing code.

Method 2: Using Custom ETL Scripts to Connect SQL Server to Redshift

As a prerequisite to this process, you need to have the Microsoft bcp (bulk copy program) command-line utility installed. If you have not installed it, you can download it from Microsoft.

For demonstration, let us assume that we need to move the ‘orders’ table from the ‘sales’ schema into Redshift. This table is populated with the customer orders that are placed daily.

There are two cases you might consider when transferring data:

- Move data into Redshift once (one-time load).

- Load data into Redshift incrementally (when the data volume is high).

Let us look at the one-time load in detail:

One-Time Load

First, export the required SQL Server table to a .txt file using the bcp command.

Open the command prompt and go to the following path, where the bcp utility is installed:

C:\Program Files (x86)\Microsoft SQL Server\Client SDK\ODBC\130\Tools\Binn

Run the bcp command to export the sales.orders table to an output file:

```
bcp "sales.orders" out D:\out\orders.txt -S "ServerName" -d Demo -U UserName -P Password -c
```

Note: Several transformations might be required before you load this data into Redshift, and achieving them in code can become extremely hard. A tool like Hevo, which provides an easy environment for writing transformations, might be the right choice for you. The remaining steps are:

- Step 1: Upload Generated Text File to S3 Bucket

- Step 2: Create Table Schema

- Step 3: Load the Data from S3 to Redshift Using the Copy Command
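If you run the export regularly, the bcp command from the previous step can be wrapped in a small script. The sketch below is illustrative only and not part of the original walkthrough. It builds the same bcp arguments as a list for `subprocess.run` and reads the password from an environment variable. The variable name `MSSQL_PASSWORD` is a hypothetical choice, and the script assumes `bcp` is on the PATH or is run from the Binn directory above.

```python
import os
import subprocess
from typing import List


def build_bcp_export(table: str, out_file: str, server: str, database: str,
                     user: str, password: str) -> List[str]:
    """Return the bcp export command from this step as an argument list.

    Passing a list to subprocess.run avoids shell-quoting problems with
    Windows paths and with passwords that contain special characters.
    """
    return [
        "bcp", table, "out", out_file,
        "-S", server,
        "-d", database,
        "-U", user,
        "-P", password,
        "-c",  # character mode: fields are tab-separated by default
    ]


def export_table(table: str, out_file: str, server: str, database: str,
                 user: str) -> None:
    """Run the export. Raises CalledProcessError if bcp exits non-zero."""
    # MSSQL_PASSWORD is a hypothetical environment variable name.
    password = os.environ["MSSQL_PASSWORD"]
    args = build_bcp_export(table, out_file, server, database, user, password)
    subprocess.run(args, check=True)
```

For the example table, you would call `export_table("sales.orders", r"D:\out\orders.txt", "ServerName", "Demo", "UserName")` on a machine where bcp is installed.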

Step 1: Upload Generated Text File to S3 Bucket

There are several ways to upload files from a local machine to AWS. One simple and intuitive way is to use the file upload feature of the Amazon S3 console.

You can also do this with the AWS CLI, which provides simple commands for uploading files from a local machine to an S3 bucket.

As a prerequisite, you will need to install and configure the AWS CLI if you have not already done so. You can refer to the AWS CLI user guide to learn more about installing it.

Run the following command to upload the file to S3 from the local machine:

```
aws s3 cp D:\out\orders.txt s3://s3bucket011/orders.txt
```

Step 2: Create Table Schema
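Redshift column types do not always match SQL Server's. For example, Redshift has no TINYINT or MONEY type, so porting more than a couple of tables by hand gets tedious. The sketch below is a minimal, illustrative helper and not part of the original walkthrough. It maps a partial, assumed set of SQL Server types to Redshift types and builds a CREATE TABLE statement from (name, type, length/precision/scale) tuples. In practice you might read those tuples from INFORMATION_SCHEMA.COLUMNS on SQL Server. Here, the sales.orders columns are written out by hand.

```python
from typing import Optional, Sequence

# Partial map of SQL Server types to Redshift types (assumption: common
# types only; extend it for the types your tables actually use).
SIMPLE_TYPES = {
    "int": "INTEGER",
    "bigint": "BIGINT",
    "smallint": "SMALLINT",
    "tinyint": "SMALLINT",           # Redshift has no TINYINT
    "bit": "BOOLEAN",
    "float": "DOUBLE PRECISION",
    "real": "REAL",
    "money": "DECIMAL(19,4)",
    "date": "DATE",
    "datetime": "TIMESTAMP",
    "datetime2": "TIMESTAMP",
    "smalldatetime": "TIMESTAMP",
    "datetimeoffset": "TIMESTAMPTZ",
    "uniqueidentifier": "CHAR(36)",  # GUID stored as its 36-character text form
}

MAX_VARCHAR = 65535  # largest Redshift VARCHAR, measured in bytes
MAX_CHAR = 4096      # largest Redshift CHAR, measured in bytes


def map_type(sql_type: str, length: Optional[int] = None,
             precision: Optional[int] = None, scale: Optional[int] = None) -> str:
    t = sql_type.lower()
    if t in ("char", "varchar", "nchar", "nvarchar"):
        # INFORMATION_SCHEMA reports (MAX) columns with a length of -1.
        if length is None or length == -1:
            return f"VARCHAR({MAX_VARCHAR})"
        if t == "char":
            return f"CHAR({min(length, MAX_CHAR)})"
        # Redshift lengths are bytes and a UTF-8 character can take up to
        # 4 bytes, so Unicode (n...) columns get 4 bytes per character.
        size = length * 4 if t.startswith("n") else length
        return f"VARCHAR({min(size, MAX_VARCHAR)})"
    if t in ("decimal", "numeric"):
        return f"DECIMAL({precision or 18},{scale or 0})"
    if t in SIMPLE_TYPES:
        return SIMPLE_TYPES[t]
    raise ValueError(f"no Redshift mapping defined for {sql_type!r}")


def create_table_ddl(table: str, columns: Sequence[tuple]) -> str:
    """Each column is (name, sql_server_type, *optional length/precision/scale)."""
    lines = [f"    {name} {map_type(sql_type, *extra)}"
             for name, sql_type, *extra in columns]
    return f"CREATE TABLE {table} (\n" + ",\n".join(lines) + "\n);"


ORDERS = [
    ("order_id", "int"),
    ("customer_id", "int"),
    ("order_status", "int"),
    ("order_date", "date"),
    ("required_date", "date"),
    ("shipped_date", "date"),
    ("store_id", "int"),
    ("staff_id", "int"),
]

if __name__ == "__main__":
    print(create_table_ddl("sales.orders", ORDERS))
```

For sales.orders, the generated statement uses INTEGER, which Redshift treats the same as the INT in the hand-written CREATE TABLE statement that follows.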

Run the following DDL in Redshift to create the target schema and table:

```sql
-- Create the schema first if it does not already exist in Redshift.
CREATE SCHEMA IF NOT EXISTS sales;

CREATE TABLE sales.orders (
    order_id INT,
    customer_id INT,
    order_status INT,
    order_date DATE,
    required_date DATE,
    shipped_date DATE,
    store_id INT,
    staff_id INT
);
```

Running the above query creates an empty table structure in Redshift. To check this, run the following query:

```sql
SELECT * FROM sales.orders;
```

Step 3: Load the Data from S3 to Redshift Using the Copy Command

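```sql
-- Role_ARN is a placeholder for the ARN of an IAM role that is associated
-- with your cluster and can read the S3 bucket.
-- '\t' matches the tab-separated output of bcp -c.
COPY sales.orders FROM 's3://s3bucket011/orders.txt'
IAM_ROLE 'Role_ARN'
DELIMITER '\t';
```

Next, confirm that the data loaded successfully by running:

```sql
SELECT COUNT(*) FROM sales.orders;
```

This should return the total number of records inserted.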
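To cross-check that count against what was actually exported, you can profile the bcp output file before or after the upload. The sketch below is illustrative only. It assumes the default `bcp -c` layout (tab-separated fields, one row per line) and UTF-8 or ASCII content, and it flags rows whose field count differs from the 8 columns of sales.orders. Such a mismatch usually means that a value contained a tab or a line break.

```python
from typing import List, Tuple


def profile_export(path: str, expected_fields: int,
                   delimiter: str = "\t") -> Tuple[int, List[int]]:
    """Count rows in a bcp -c export and flag rows with the wrong field count.

    Returns (row_count, bad_lines), where bad_lines holds the 1-based line
    numbers whose field count differs from expected_fields.
    """
    rows = 0
    bad_lines: List[int] = []
    # Text mode with default newline handling reads \r\n and \n line endings alike.
    with open(path, encoding="utf-8", errors="replace") as f:
        for lineno, line in enumerate(f, start=1):
            rows += 1
            fields = line.rstrip("\n").split(delimiter)
            if len(fields) != expected_fields:
                bad_lines.append(lineno)
    return rows, bad_lines
```

For example, `rows, bad = profile_export(r"D:\out\orders.txt", expected_fields=8)`. Here `rows` should match the COUNT(*) result, and a non-empty `bad` list points to lines worth inspecting before you rerun the COPY.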

Limitations of using Custom ETL Scripts to Connect SQL Server to Redshift

- In cases where data needs to be moved once or only in batches, the custom ETL script method works well. This approach becomes extremely tedious if you have to copy data from MS SQL Server to Redshift in real time.

- If you are dealing with huge amounts of data, you will need to perform an incremental load. Incremental loading (change data capture) is hard because it requires additional steps, such as tracking which rows have changed since the last load and merging them into the existing table.

- Transforming data before you load it into Redshift is extremely hard to achieve.

- Scripts that extract a subset of data often break as the source schema changes or evolves, which can result in data loss.

The process described above is fragile, error-prone, and often hard to implement and maintain. This affects the consistency and availability of your data in Amazon Redshift.
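The custom-script walkthrough covers only the one-time load. For reference, an incremental load is commonly built around a high-watermark column. You export only the rows changed since the last run with `bcp ... queryout`, copy them into a staging table, and then merge them into the target with a delete-then-insert, a common upsert pattern in Redshift. The sketch below only generates the commands and makes several assumptions. The `updated_at` column is hypothetical (the sample sales.orders table has no such column), `order_id` is assumed to be the primary key, and rows deleted on the source are not handled.

```python
from datetime import datetime
from typing import List


def build_incremental_export(table: str, watermark_column: str,
                             last_watermark: datetime, out_file: str,
                             server: str, database: str, user: str,
                             password: str) -> List[str]:
    """bcp 'queryout' exports a query result instead of a whole table."""
    # Values are interpolated into SQL, so they must come from trusted config.
    # The ISO 8601 'T' format is read the same way regardless of language settings.
    query = (f"SELECT * FROM {table} "
             f"WHERE {watermark_column} > '{last_watermark:%Y-%m-%dT%H:%M:%S}'")
    return ["bcp", query, "queryout", out_file,
            "-S", server, "-d", database, "-U", user, "-P", password, "-c"]


def build_merge_sql(target: str, key: str, s3_uri: str, iam_role: str) -> List[str]:
    """Redshift statements that upsert one delta file through a staging table."""
    name = target.split(".")[-1]      # e.g. "orders"
    staging = f"{name}_staging"       # temp tables take no schema prefix
    return [
        # LIKE copies the column definitions of the target table.
        f"CREATE TEMP TABLE {staging} (LIKE {target});",
        f"COPY {staging} FROM '{s3_uri}' IAM_ROLE '{iam_role}' DELIMITER '\\t';",
        "BEGIN TRANSACTION;",
        # Remove the old versions of changed rows, then insert the new ones.
        f"DELETE FROM {target} USING {staging} "
        f"WHERE {name}.{key} = {staging}.{key};",
        f"INSERT INTO {target} SELECT * FROM {staging};",
        "END TRANSACTION;",
    ]
```

After the merge succeeds, you would store `MAX(updated_at)` of the loaded rows as the next `last_watermark`. A strict `>` comparison can miss rows written with exactly the watermark timestamp after the export ran, so some pipelines re-read a small overlap window instead.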

Method 3: Using AWS Database Migration Service (DMS)

AWS Database Migration Service (DMS) offers a seamless pathway for transferring data between databases, making it an ideal choice for moving data from SQL Server to Redshift. This fully managed service is designed to minimize downtime and can handle large-scale migrations with ease.

For those looking to implement SQL Server CDC (Change Data Capture) for real-time data replication, we provide a comprehensive guide that delves into the specifics of setting up and managing CDC within the context of AWS DMS migrations.

Detailed Steps for Migration:

- Setting Up a Replication Instance: The first step involves creating a replication instance within AWS DMS. This instance acts as the intermediary, facilitating the transfer of data by reading from SQL Server, transforming the data as needed, and loading it into Redshift.

- Creating Source and Target Endpoints: After the replication instance is operational, you’ll need to define the source and target endpoints. These endpoints act as the connection points for your SQL Server source database and your Redshift target database.

- Configuring Replication Settings: AWS DMS offers a variety of settings to customize the replication process. These settings are crucial for tailoring the migration to fit the unique needs of your databases and ensuring a smooth transition.

- Initiating the Replication Process: With the replication instance and endpoints in place, and settings configured, you can begin the replication process. AWS DMS will start the data transfer, moving your information from SQL Server to Redshift.

- Monitoring the Migration: It’s essential to keep an eye on the migration as it progresses. AWS DMS provides tools like CloudWatch logs and metrics to help you track the process and address any issues promptly.

- Verifying Data Integrity: Once the migration concludes, it’s important to verify the integrity of the data. Conducting thorough testing ensures that all data has been transferred correctly and is functioning as expected within Redshift.
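Part of configuring the replication settings is telling DMS which tables to replicate, using table-mapping rules written in JSON. The sketch below is a minimal example that builds a single selection rule. It assumes you only want the sales.orders table from the earlier example and leaves out transformation rules and other task settings.

```python
import json
from typing import Dict, List


def selection_rule(rule_id: int, schema: str, table: str = "%",
                   action: str = "include") -> Dict:
    """One DMS selection rule; '%' is the DMS wildcard for all tables."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": str(rule_id),
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": action,
    }


def table_mappings(rules: List[Dict]) -> str:
    """Serialize rules into the JSON document DMS uses for table mappings."""
    return json.dumps({"rules": rules}, indent=2)


if __name__ == "__main__":
    # Replicate only sales.orders, matching the example used earlier.
    print(table_mappings([selection_rule(1, "sales", "orders")]))
```

You can paste the resulting JSON into the table-mappings JSON editor when you create the DMS task in the console. You can also pass it as the `TableMappings` argument of boto3's `create_replication_task`.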

The duration of the migration depends mainly on the size of the dataset, and it generally takes anywhere from a few hours to a few days. AWS DMS simplifies the SQL Server to Redshift migration by handling the transfer of database objects and data.

For a step-by-step guide, please refer to the official AWS documentation.

Limitations of Using DMS:

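- Not all SQL Server features are supported by DMS. Notably, SQL Server Agent jobs, FILESTREAM, and Full-Text Search are not available when using this service. The CDC configuration itself is not migrated either, although DMS relies on SQL Server's MS-Replication or MS-CDC on the source to capture ongoing changes.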

- The initial setup and configuration of DMS can be complex, especially for migrations that involve multiple source and target endpoints.

Conclusion

That’s it! You are all set. Hevo will take care of fetching your data incrementally and will upload that seamlessly from MS SQL Server to Redshift via a real-time data pipeline.

Find out how to move data from AWS RDS MSSQL to Redshift to improve your data analysis. Our resource provides clear steps for efficient data transfer and setup.

Extracting complex data from a diverse set of data sources can be a challenging task and this is where Hevo saves the day!

Hevo offers a faster way to move data from Databases or SaaS applications like SQL Server into your Data Warehouse like Redshift to be visualized in a BI tool. Hevo is fully automated and hence does not require you to code.

Sign Up for a 14-day free trial to try Hevo for free. You can also have a look at the unbeatable pricing that will help you choose the right plan for your business needs.

FAQs on MS SQL Server to Redshift

1. How to connect RDS to Snowflake?

To migrate data from Amazon RDS to Snowflake, you can use an automated data pipeline like Hevo. Alternatively, you can write custom scripts that manually create and export DB snapshots before loading the data into Snowflake.

2. What is a Snowflake in simple terms?

Snowflake is a cloud-based data warehousing platform that allows organizations to store, manage, and analyze large amounts of data efficiently. It is known for its scalability, performance, and ease of use.

3. What is the equivalent of Snowflake in AWS?

Amazon Redshift is the equivalent of Snowflake in AWS, offering a cloud-based data warehousing solution for big data analytics.

Tell us in the comments about data migration from SQL Server to Redshift!