Quick Takeaway

Quick TakeawayMigrating from Oracle to BigQuery involves transferring your data, schema, and application logic to optimize for cloud-native scalability and performance. This migration enables your organization to leverage BigQuery’s advanced analytics and cost-efficient storage.

You can migrate your data from Oracle to BigQuery using three methods – Choose your pick!

Method 1: Using no-code Hevo Platform

- Step 1: Connect Oracle as your Source

- Step 2: Connect BigQuery as your Destination

Method 2: Using Cloud Data Fusion Web Interface

- Step 1: Create a Replication Job

- Step 2: Configure Source(Oracle)

- Step 3: Configure Destination(BigQuery)

- Step 4: Start the Job and Monitor

Method 3: Using Custom ETL Scripts

- Step 1: Export Data from Oracle DB to CSV Format

- Step 2: Extract Data from Oracle DB

- Step 3: Upload to Google Cloud Storage

- Step 4: Upload to BigQuery from GCS

- Step 5: Update the Target Table in BigQuery

In a time where data is being termed the new oil, businesses need to have a data management system that suits their needs perfectly and positions them to be able to take full advantage of the benefits of being data-driven.

Data is being generated at rapid rates and businesses need database systems that can scale up and scale down effortlessly without any extra computational cost.

Enterprises are exhausting a huge chunk of their data budgets in just maintaining their present physical database systems instead of directing the said budget towards gaining tangible insights from their data.

This scenario is far from ideal and is the reason why moving your Oracle data to a cloud-based Data Warehouse like Google BigQuery is no longer a want but a need.

This post provides a step-by-step walkthrough on how to migrate data from Oracle to BigQuery.

Table of Contents

Why is Oracle the Backbone of Many Enterprise Applications?

Oracle database is a relational database system that helps businesses store and retrieve data. Oracle DB(as it’s fondly called) provides a perfect combination of high-level technology and integrated business solutions which is a non-negotiable requisite for businesses that store and access huge amounts of data. This makes it one of the world’s trusted database management systems.

Key Features of Oracle that Make it Popular

- Recovery Operations: Oracle supports redo logs for ensuring data integrity and enabling robust recovery mechanisms.

- Database Management: Oracle Database provides robust and secure data management for data-driven decision-making in the enterprise.

- High Performance and Scalability: It can handle high transaction rates and scale up for growing data and users.

- CDC Support: Oracle supports CDC for real-time data integration.

- Integration: Oracle products integrate with many applications and technologies to simplify across departments. You can seamlessly integrate it with other warehouses such as Snowflake, Redshift, etc.

What makes BigQuery a Go-To Data Warehouse?

Google BigQuery is a fully managed and serverless enterprise cloud data warehouse. It uses Dremel technology, which transforms SQL queries into tree structures. BigQuery provides an outstanding query performance owing to its column-based storage system.

Key Features:

- Machine Learning: BigQuery ML allows users to train and run machine learning models in BigQuery using only SQL syntax.

- High Scalability: It scales seamlessly to handle petabytes of data.

- Serverless Architecture: BigQuery manages servers and storage in the background, so a user does not need to.

- SQL Compatibility: It supports ANSI SQL, which is useful for people who already know SQL and want to write and run queries. This also allows a user to combine various BI tools for data visualization.

Leverage BigQuery’s features like machine learning, search, geospatial analysis, and business intelligence by migrating your data to it using Hevo. Skip long and tedious manual setup and choose Hevo’s no-code platform to:

- Effortlessly extract data from Oracle and other 150+ connectors.

- Transform and map data easily with drag-and-drop features.

- Real-time data migration to leverage AI/ML features of BigQuery.

Still not sure? See how Postman, the world’s leading API platform, used Hevo to save 30-40 hours of developer efforts monthly and found a one-stop solution for all its data integration needs.

Get Started with Hevo for FreeWhat are the Methods to Connect Oracle to BigQuery?

Here are the methods you can use to set up Oracle BigQuery migration in a seamless fashion:

Method 1: Easiest two step Method for replicating data from Oracle to BigQuery- Using Hevo

Step 1: Configure Source(Oracle)

Connect to your Oracle database by providing the Pipeline Name, Database Host, Database Port, Database User, Database Password, and Service Name.

Step 2: Configure Destination(BigQuery)

Connect to BigQuery by providing the Destination Name, Project ID, GCS Bucket, Dataset ID, Enabling Stream Inserts, and Sanitize Table/Column Names.

Method 2: Performing BigQuery Oracle Migration Using Cloud Data Fusion Web Interface

Prerequisites:

- A public Cloud Data Fusion instance created in version 6.3.0 or later. If you create a private instance, set up VPC network peering.

- Enable the Cloud Data Fusion, Dataproc, Datastream, BigQuery, and Cloud Storage APIs.

- Ask your administrator to grant you the following IAM roles:

- Dataproc Worker

- Cloud Data Fusion Runner on the Dataproc service account in the project that contains the cluster

- DataStream Admin on the Cloud Data Fusion service account and Dataproc service account

Step 1: Create a Replication Job

- Go to “Replication” in Cloud Data Fusion and click “Create a replication job.”

- Provide a job name and click “Next.”

Step 2: Configure Source(Oracle)

- Select “Oracle (by Datastream)” as the source.

- Choose a connectivity method (IP allowlisting or VPC peering).

- Enter your Oracle server’s host, port, username, and password.

- Click “Next.”

Step 3: Configure Destination(BigQuery)

Select BigQuery as the destination and click “Next.”

Step 4: Select Tables

- Choose the tables to replicate from Oracle to BigQuery. (Important: Supplemental logging must be enabled on selected tables.)

- Click “Next.”

Step 5: Review and Deploy

Review the job configuration and mappings. Resolve any issues identified in the assessment and click “Deploy replication job.”

Step 6: Start the Job and Monitor

- Go to the replication job details page and click “Start.”

- Monitor the job status (Provisioning -> Starting -> Running).

- Go to the replication job details page and click “Monitoring” to track progress.

You can go to your BigQuery account and check whether your data is getting replicated or not. You can also query your data to ensure the accuracy and quality of data.

Limitations of Using Google Cloud Fusion

- As a Google Cloud service, Data Fusion might have some vendor lock-in associated with it.

- Although it supports CDC, the capabilities are limited in comparison to other third party tools.

- For large-scale migrations or high-volume data streams, performance can be a challenge. Careful tuning and optimization might be necessary to avoid delays.

- It can get very expensive for high-volume usage.

If Google Cloud Fusion is not the right fit for your organisation, check out the 5 Best Oracle Replication Tools.

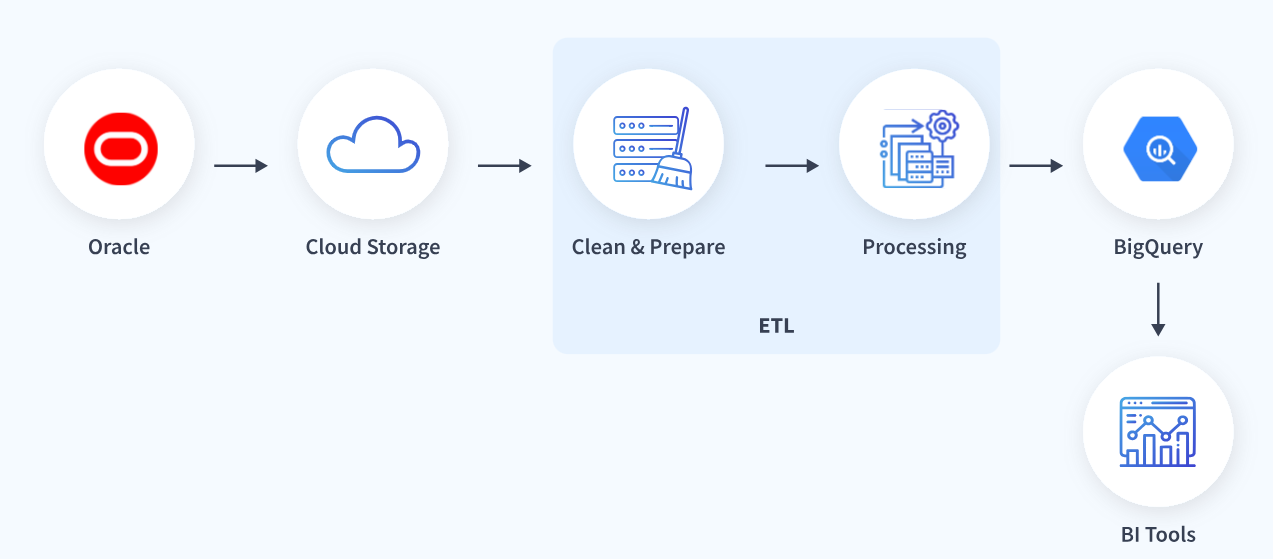

Method 3: Using Custom ETL Scripts for Oracle to BigQuery Migration

Let’s take a step-by-step look at each of the steps mentioned above.

Step 1: Export Data from Oracle DB to CSV Format

- BigQuery does not support the binary format produced by Oracle DB. Hence, we must export our data to a CSV(comma-separated value) file.

- Oracle SQL Developer is the preferred tool for carrying out this task. It is a free, integrated development environment. This tool makes developing and managing Oracle databases exceptionally simple on-premise and on the cloud. It is a migration tool for moving your database to and from Oracle. Oracle SQL Developer can be downloaded for free from here.

- Open the Oracle SQL Developer tool and right-click the table name in the object tree view.

- Click on Export.

- Select CSV, and the export data window will pop up.

- Select the format tab and select the format as CSV.

- Enter the preferred file name and location.

- Select the columns tab and verify the columns you wish to export.

- Select the Where tab and add any criteria you wish to use to filter the data.

- Click on apply.

Step 2: Extract Data from Oracle DB

- The

COPY_FILEprocedure in theDBMS_FILE_TRANSFERpackage is used to copy a file to a local file system. The following example copies a CSV file named client.csv from the /usr/admin/source directory to the /usr/admin/destination directory as client_copy.csv on a local file system. - The SQL command CREATE DIRECTORY is used to create a directory object for the object you want to create the CSV file. For instance, if you want to create a directory object called source for the /usr/admin/source directory on your computer system, execute the following code block

CREATE DIRECTORY source AS '/usr/admin/source';- Use the SQL command CREATE DIRECTORY to create a directory object for the directory into which you want to copy the CSV file. An illustration is given below

CREATE DIRECTORY dest_dir AS '/usr/admin/destination';Where dest_dir is the destination directory

- Grant required access to the user who is going to run the COPY_FILE procedure. An illustration is given below:

GRANT EXECUTE ON DBMS_FILE_TRANSFER TO admin;

GRANT READ ON DIRECTORY source TO admin;

GRANT WRITE ON DIRECTORY client TO admin;- Connect as an admin user and provide the required password when required:

CONNECT admin- Execute the COPY_FILE procedure to copy the file:

BEGIN

DBMS_FILE_TRANSFER.COPY_FILE(

source_directory_object => 'source',

source_file_name => 'client.csv',

destination_directory_object => 'dest_dir',

destination_file_name => 'client_copy.csv');

END;Step 3: Upload to Google Cloud Storage

Once the data has been extracted from Oracle DB the next step is to upload it to GCS. There are multiple ways this can be achieved. The various methods are explained below.

- Using Gsutil

GCP has built Gsutil to assist in handling objects and buckets in GCS. It provides an easy and unique way to load a file from your local machine to GCS.

To copy a file to GCS:

gsutil cp client_copy.csv gs://my-bucket/path/to/folder/To copy an entire folder to GCS:

gsutil dest_dir -r dir gs://my-bucket/path/to/parent/- Using Web console

An alternative means to upload the data from your local machine to GCS is using the web console. To use the web console alternative follow the steps laid out below.

- Login to the GCP using the link. You ought to have a working Google account to make use of GCP. Click on the hamburger menu which produces a drop-down menu. Hit on storage and navigate to the browser on the left tab.

- Create a new bucket to which you will migrate your data. Make sure the name you choose is globally unique.

- Click on the bucket you created and select Upload files. This action takes you to your local directory where you choose the file you want to upload.

- The data upload process starts immediately and a progress bar is shown. Wait for completion, after completion the file will be seen in the bucket.

Step 4: Upload to BigQuery from GCS

To upload to BigQuery you make use of either the web console UI or the command line. Let us look at a brief on both methods.

First, let’s let look into uploading the data using the web console UI.

- The first step is to go to the BigQuery console under the hamburger menu.

- Create a dataset and fill out the drop-down form.

- Click and select the data set created by you. An icon showing ‘create table’ will appear below the query editor. Select it.

- Fill in the drop-down list and create the table. To finish uploading the table, the schema has to be specified. This will be done using the command-line tool. When using the command line interacting with GCS is a lot easier and straightforward.

To access the command line, when on the GCS home page click on the Activate cloud shell icon shown below.

The syntax of the bq command line is shown below:

bq --location=[LOCATION] load --source_format=[FORMAT] [DATASET].[TABLE] [PATH_TO_SOURCE] [SCHEMA]

[LOCATION] is an optional parameter that represents your Location.

[FORMAT] is to be set to CSV.

[DATASET] represents an existing dataset.

[TABLE] is the table name into which you're loading data.

[PATH_TO_SOURCE] is a fully-qualified Cloud Storage URI.

[SCHEMA] is a valid schema. The schema must be a local JSON file or inline.

Note: Instead of using supplying a schema definition, there is an autodetect flag that can be used.You can specify your scheme using the bq command line. An illustration is shown below using a JSON file

bq --location=US load --source_format=CSV your_dataset.your_table gs://your_bucket/your_data.csv ./your_schema.jsonThe schema can also be auto-detected. An example is shown below:

bq --location=US load --autodetect --source_format=CSV your_dataset.your_table gs://mybucket/data.csv- BigQuery command-line interface offers us 3 options to write to an existing table. This method will be used to copy data to the table we created above.

The options are:

a) Overwrite the table

bq --location=US load --autodetect --replace --source_format=CSV your_dataset_name.your_table_name gs://bucket_name/path/to/file/file_name.csv

b) Append the table

bq --location=US load --autodetect --noreplace --source_format=CSV your_dataset_name.your_table_name gs://bucket_name/path/to/file/file_name.csv ./schema_file.json

c) Add a new field to the target table. In this code, the schema will be given an extra field.

bq --location=asia-northeast1 load --noreplace --schema_update_option=ALLOW_FIELD_ADDITION --source_format=CSV your_dataset.your_table gs://mybucket/your_data.csv ./your_schema.json

Step 5: Update the Target Table in BigQuery

The data that was joined in the steps above have not been fully updated to the target table.

The data is stored in an intermediate data table, this is because GCS is a staging area for BigQuery upload. Hence, the data is stored in an intermediate table before being uploaded to BigQuery:

There are two ways of updating the final table as explained below.

- Update the rows in the final table and insert new rows from the intermediate table.

UPDATE final_table t SET t.value = s.value FROM intermediate_data_table s WHERE t.id = s.id; INSERT final_table (id, value) SELECT id, value FROM intermediate_data_table WHERE NOT id IN (SELECT id FROM final_table);

- Delete all the rows from the final table which are in the intermediate table.

DELETE final_table f WHERE f.id IN (SELECT id from intermediate_data_table); INSERT data_setname.final_table(id, value) SELECT id, value FROM data_set_name.intermediate_data_table;Limitations of Using Custom ETL Scripts to Connect Oracle to BigQuery

- Writing custom code would add value only if you are looking to move data once from Oracle to BigQuery.

- When a use case that needs data to be synced on an ongoing basis or in real-time from Oracle into BigQuery arises, you would have to move it in an incremental format. This process is called Change Data Capture. The custom code method mentioned above fails here. You would have to write additional lines of code to achieve this.

- When you build custom SQL scripts to extract a subset of the data set in Oracle DB, there is a chance that the script breaks as the source schema keeps changing or evolving.

- Often, a need arises to transform the data (Eg, hide Personally Identifiable Information) before loading it into BigQuery. Achieving this would require you to add time and resources to the process.

In a nutshell, ETL scripts are fragile and highly likely to break. Therefore, we strongly recommend that you use ETL tools, check out our blog on the 10 Best Oracle ETL Tools to see which tools suits your needs.

You can also read about:

- How to migrate your data from Oracle to Redshift

- How to migrate your data from Oracle to Snowflake

- How to set up Kafka Oracle integration

- How to migrate your data from Databricks to Oracle Database

Conclusion

This blog talks about the two methods you can use to connect Oracle to BigQuery in a seamless fashion. If you rarely need to transfer your data from Oracle to BigQuery, then the first manual Method will work fine. Whereas, if you require Real-Time Data Replication and looking for an Automated Data Pipeline Solution, then Hevo is the right choice for you!

Sign up for a 14-day free trial with Hevo to experience seamless data integration. Also, check out Hevo’s pricing to choose a plan that is best for you.

FAQ on Oracle to BigQuery

How to Convert an Oracle Query to BigQuery?

Map Oracle data types and functions to their BigQuery equivalents (e.g., VARCHAR2 to STRING, NVL() to IFNULL()). Adjust syntax for differences in SQL functions and features.

How to connect Oracle to BigQuery?

Use a data migration tool like Google Cloud Dataflow, Informatica, or Fivetran, or set up an ETL pipeline to extract data from Oracle and load it into BigQuery.

What is the difference between Oracle and BigQuery?

Oracle is an on-premise, traditional relational database, while BigQuery is a fully managed, serverless data warehouse optimized for large-scale analytics on Google Cloud.