Although PostgreSQL and BigQuery are both powerful tools in their own right, integrating the two can be challenging. Ensuring performance and reliability while minimizing data delay can also be complex for teams working with massive datasets. Additionally, implementing secure connections or designing pipelines between on-premises and cloud systems can often make things more complicated.

In this blog, we will give you a brief overview of PostgreSQL and Google BigQuery. We will also walk you through 2 methods for setting up your Postgres to BigQuery integration.


What Is PostgreSQL?

PostgreSQL is a popular open-source relational database, used primarily as an OLTP database and also for analyzing data at scale. Its novel architecture, reliability at scale, robust feature set, and extensibility give it an advantage over other databases.

What is Google BigQuery?

Google BigQuery is a serverless, cost-effective, and highly scalable data warehouse with built-in machine learning capabilities. It includes BI Engine, an in-memory analysis service that accelerates queries. BigQuery combines fast SQL queries with the processing capacity of Google's infrastructure, so you can analyze business transaction data gathered from several databases and control which users can view and query that data.

Take advantage of PostgreSQL’s novel architecture, reliability at scale, and robust feature set by seamlessly connecting it with various destinations like BigQuery using Hevo. Hevo’s no-code platform empowers teams to:

- Integrate data from 150+ sources (60+ free sources).

- Simplify data mapping and transformations using features like drag-and-drop.

- Easily migrate different data types like CSV, JSON, etc., with the auto-mapping feature.

Join 2000+ happy customers like Whatfix and Thoughtspot, who’ve streamlined their data operations. See why Hevo is the #1 choice for building modern data stacks.

How to Set Up a PostgreSQL to BigQuery Connection?

Method 1: Using Hevo Data

In this method, we will see how to load data from PostgreSQL to BigQuery using a pipeline tool. For this walkthrough, we will be using Hevo as our pipeline tool.

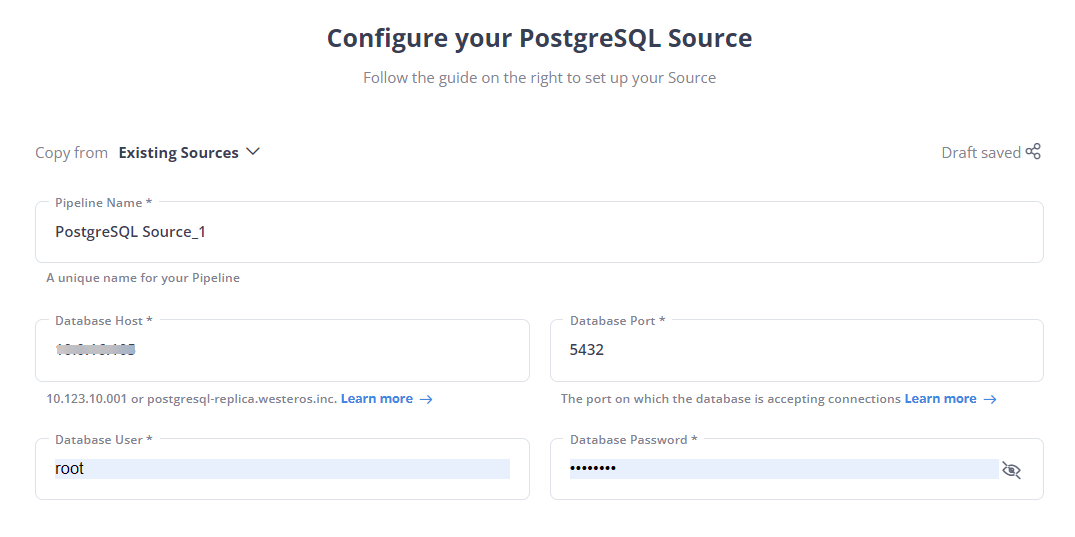

Step 1: Configure PostgreSQL As a Source

- Connect your PostgreSQL account to Hevo’s platform.

Step 2: Configure BigQuery As Destination

- Configure Google BigQuery as your destination and start moving your data.

With this, you have successfully configured Postgres to BigQuery replication using Hevo Data in only two simple steps.

Method 2: Manual ETL Process to Set Up PostgreSQL to BigQuery Integration

To execute the following steps, you require a pre-existing database and a table populated with PostgreSQL records.

Let’s take a deep dive into each step.

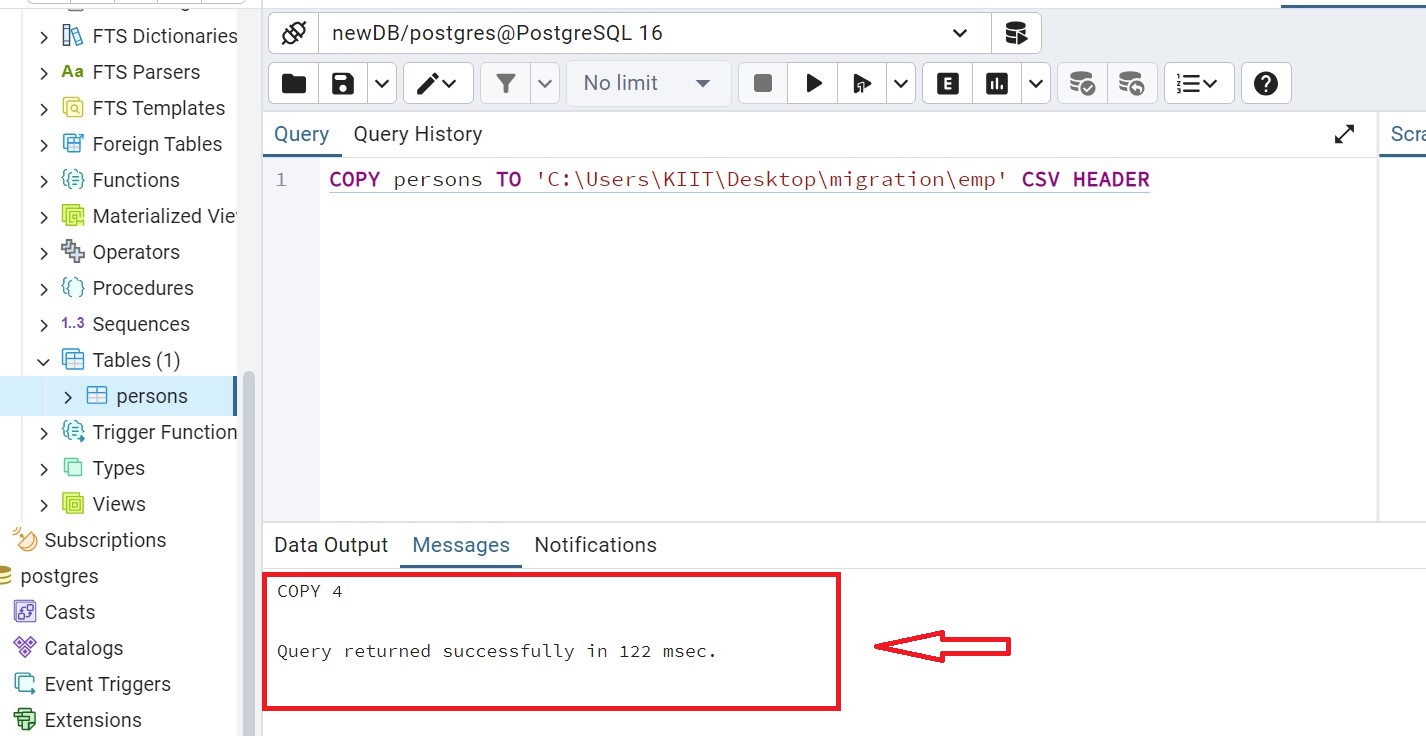

Step 1: Extract Data From PostgreSQL

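You first need to extract data from PostgreSQL and export it into a CSV file. To do this, run the following command in your PostgreSQL client. Note that COPY ... TO a file writes the file on the database server and requires the appropriate privileges; if you are working in psql and want the file on your own machine, use \copy with the same arguments instead.

COPY your_table_name TO 'new_file_location\new_file_name' CSV HEADER;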
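The same export can also be scripted instead of run by hand. The sketch below is one possible approach, not part of the original walkthrough: it assumes the psycopg2 driver, an unqualified table name, and the hypothetical helper names `build_copy_sql` and `export_table`. It uses `COPY ... TO STDOUT` so that the CSV is written on the client machine rather than on the database server.

```python
def build_copy_sql(table_name: str) -> str:
    """Return a COPY statement that streams a table as CSV with a header row."""
    # Double any embedded quotes so the identifier is safely quoted.
    # Assumption: table_name is a plain, unqualified name (no schema prefix).
    quoted = '"' + table_name.replace('"', '""') + '"'
    return f"COPY {quoted} TO STDOUT WITH (FORMAT csv, HEADER true)"


def export_table(conn, table_name: str, csv_path: str) -> None:
    """Write table_name to csv_path using an open psycopg2 connection."""
    # Opening the file as UTF-8 text matches what BigQuery expects later on;
    # newline="" leaves the line endings produced by PostgreSQL untouched.
    with open(csv_path, "w", encoding="utf-8", newline="") as out:
        with conn.cursor() as cur:
            # copy_expert is specific to psycopg2; other drivers differ.
            cur.copy_expert(build_copy_sql(table_name), out)
```

With a connection created by `psycopg2.connect(...)`, a call such as `export_table(conn, "emp", "emp.csv")` would produce the file used in the following steps; the table and file names here are placeholders.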

Once you have successfully exported the data to a CSV file, move on to cleaning and transforming it.

Step 2: Clean and Transform Data

When uploading the data to Google BigQuery, the tables and the data need to be compatible with the BigQuery format. Keep the following things in mind while migrating data to BigQuery:

- BigQuery expects CSV data to be UTF-8 encoded.

- BigQuery doesn’t enforce Primary Key and unique key constraints. Your ETL process must do so.

- Postgres and BigQuery have different column types. However, most of them are convertible.

You can visit their official page to learn more about BigQuery data types.

- DATE values must be dash (-) separated and in the form YYYY-MM-DD (year-month-day).

- Fortunately, the default date format in Postgres is the same, YYYY-MM-DD. So, if you are simply selecting date columns, they should already be in the correct format.

- The TO_DATE function: if the data is stored as a string in the table for any reason, it can be converted while selecting data.

Syntax: TO_DATE(str, format)

Example: SELECT TO_DATE('31,12,1999', 'DD,MM,YYYY');

Result: 1999-12-31

- In the TIMESTAMP type, the hh:mm:ss (hour-minute-second) portion must use a colon (:) separator.

- Similar to the DATE type, the TO_TIMESTAMP function in PostgreSQL converts strings into timestamps.

Syntax: TO_TIMESTAMP(str, format)

Example: SELECT TO_TIMESTAMP('2017-03-31 9:30:20', 'YYYY-MM-DD HH24:MI:SS');

Result: 2017-03-31 09:30:20-07 (the UTC offset shown depends on the session time zone)

- Make sure your text columns are quoted, as they can potentially have delimiter characters.
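If you prefer to clean the exported file outside the database, the checks listed above can be sketched in a short script. This is an illustrative example using only the Python standard library. The function names, the column names, and the `%d,%m,%Y` input format are assumptions, and that format string uses Python's `strptime` syntax rather than a PostgreSQL template. The script drops rows with a repeated key, rewrites date strings as YYYY-MM-DD, and quotes any field that contains a delimiter.

```python
import csv
import io
from datetime import datetime


def clean_rows(rows, key_column, date_columns, date_format="%d,%m,%Y"):
    """Drop rows with a repeated key and rewrite date strings as YYYY-MM-DD."""
    seen = set()
    cleaned = []
    for row in rows:
        key = row[key_column]
        if key in seen:
            # BigQuery will not reject duplicate keys, so filter them here.
            continue
        seen.add(key)
        fixed = dict(row)
        for col in date_columns:
            if fixed[col]:  # leave empty values (NULLs) untouched
                parsed = datetime.strptime(fixed[col], date_format)
                fixed[col] = parsed.strftime("%Y-%m-%d")
        cleaned.append(fixed)
    return cleaned


def to_csv_text(rows, fieldnames):
    """Serialize rows as CSV, quoting fields that contain delimiters."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=fieldnames,
        quoting=csv.QUOTE_MINIMAL,  # quotes any field holding a comma, quote, or newline
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Rows can be read with `csv.DictReader`, and the result written with `open(path, "w", encoding="utf-8", newline="")` so that the file handed to BigQuery is UTF-8 encoded.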

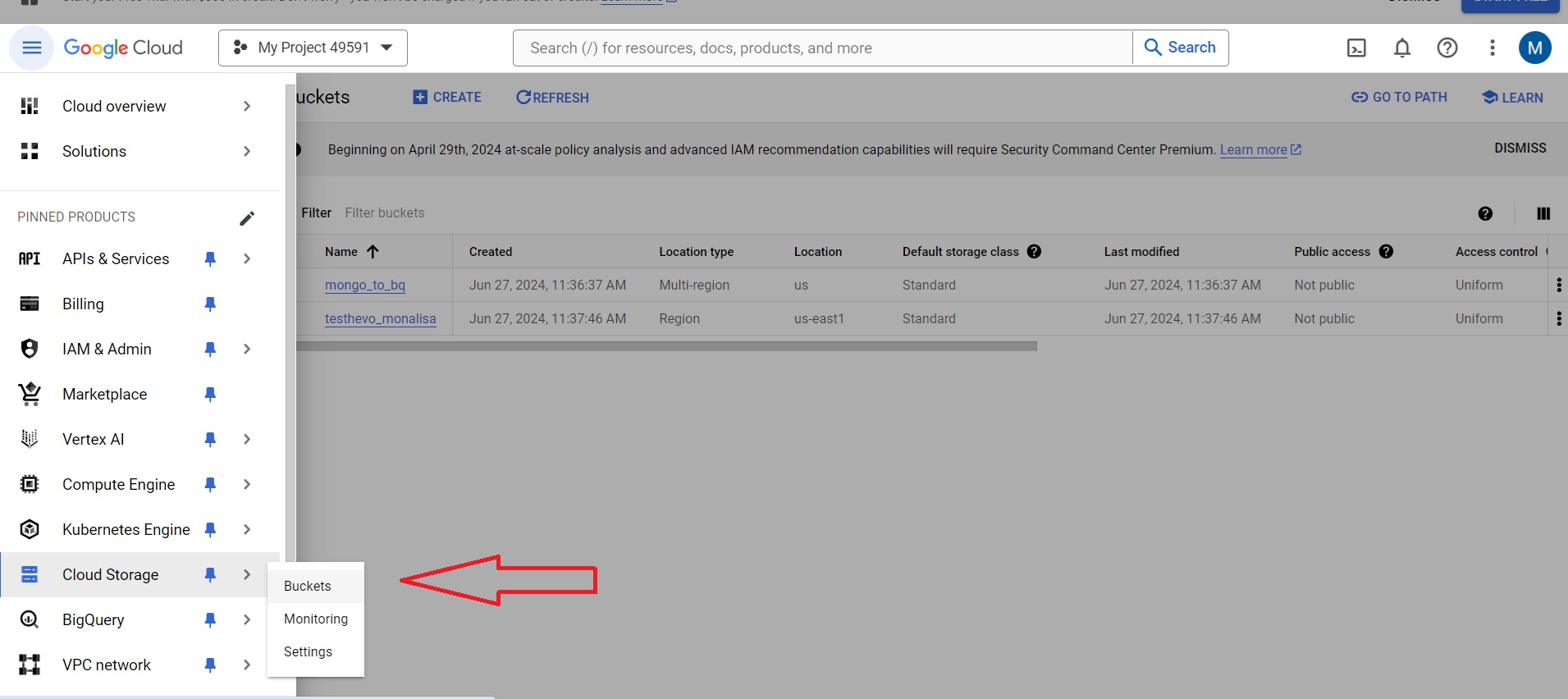

Step 3: Upload to Google Cloud Storage (GCS) bucket

If you haven't already, you need to create a storage bucket in Google Cloud for the next step.

Step 3.1: Go to your Google Cloud account and select Cloud Storage → Buckets.

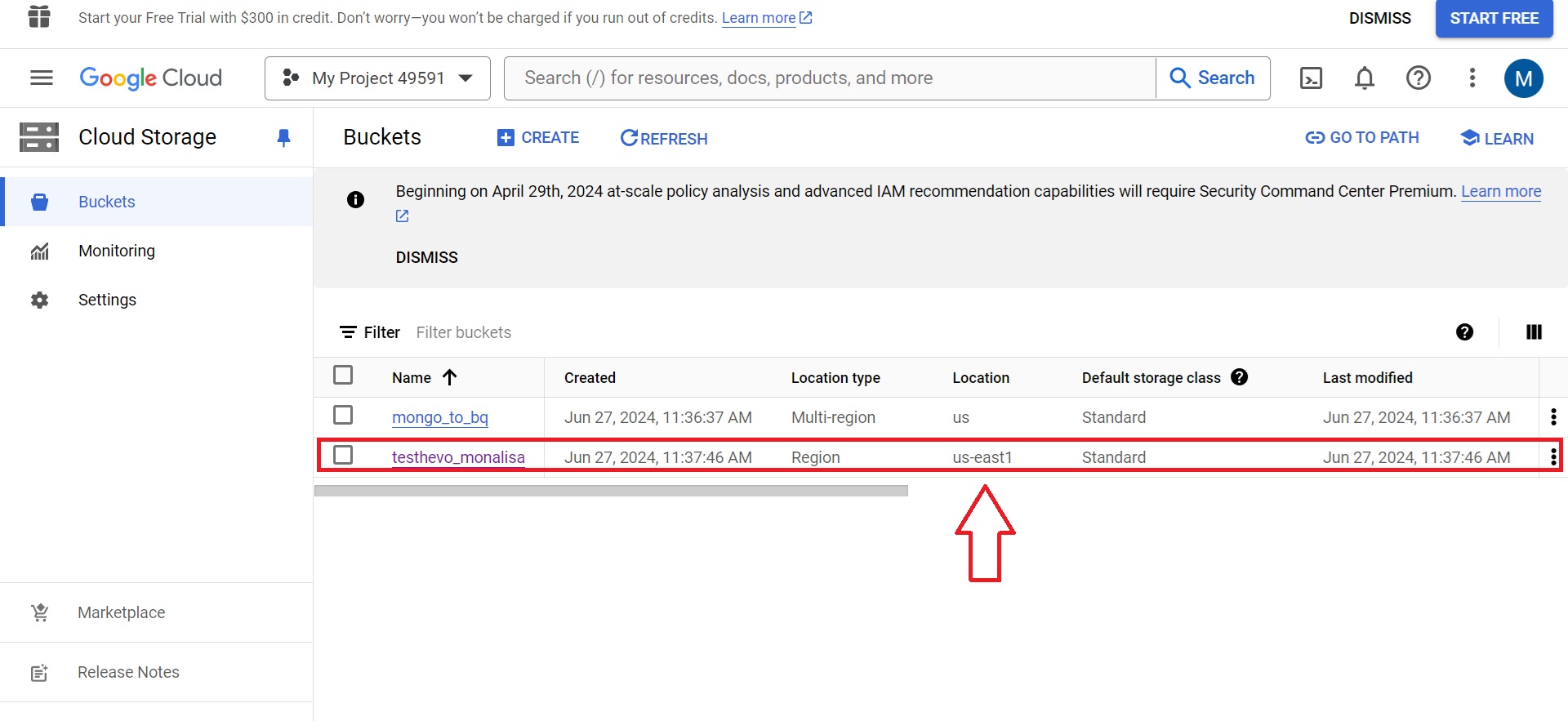

Step 3.2: Select a bucket from your existing list of buckets. If you do not have a previously existing bucket, you must create a new one.

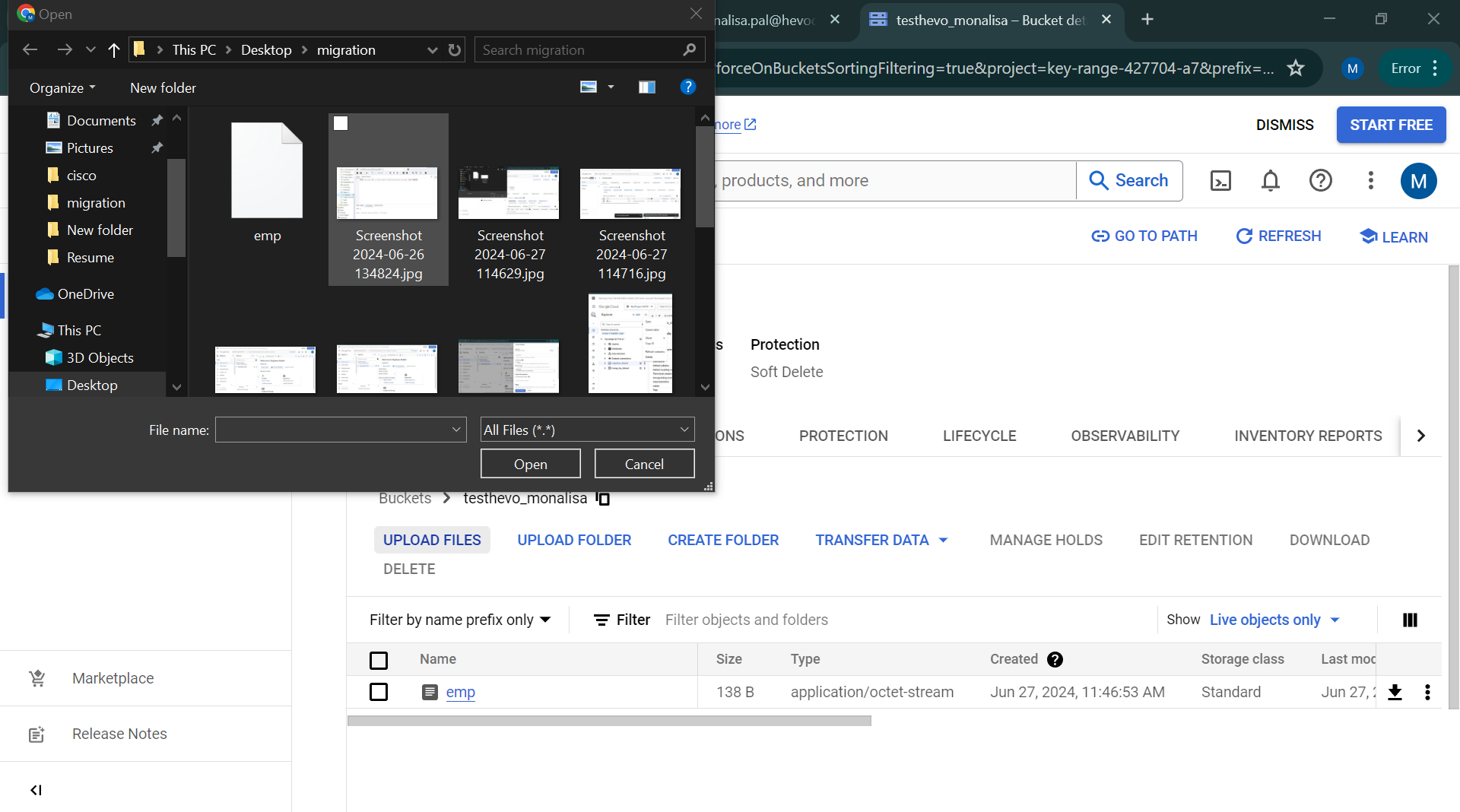

Step 3.3: Upload your .csv file into the bucket by clicking the upload file option. Select the file that you want to upload.
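The upload can also be done from a script instead of the console. The following is a minimal sketch, assuming the `google-cloud-storage` client library is installed and that credentials are available through Application Default Credentials; the bucket, file, and helper names are placeholders rather than anything prescribed by the steps above.

```python
def gcs_uri(bucket_name: str, object_name: str) -> str:
    """Build the gs:// URI that BigQuery uses to locate the uploaded file."""
    return f"gs://{bucket_name}/{object_name}"


def upload_csv(bucket_name: str, local_path: str, object_name: str) -> str:
    """Upload a local CSV file to an existing bucket and return its URI."""
    # Imported lazily so gcs_uri works even without the library installed.
    from google.cloud import storage

    client = storage.Client()  # picks up Application Default Credentials
    blob = client.bucket(bucket_name).blob(object_name)
    blob.upload_from_filename(local_path, content_type="text/csv")
    return gcs_uri(bucket_name, object_name)
```

A call such as `upload_csv("my-bucket", "emp.csv", "exports/emp.csv")` would return `gs://my-bucket/exports/emp.csv`, which is the kind of URI the load step expects.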

Step 4: Upload to BigQuery table from GCS

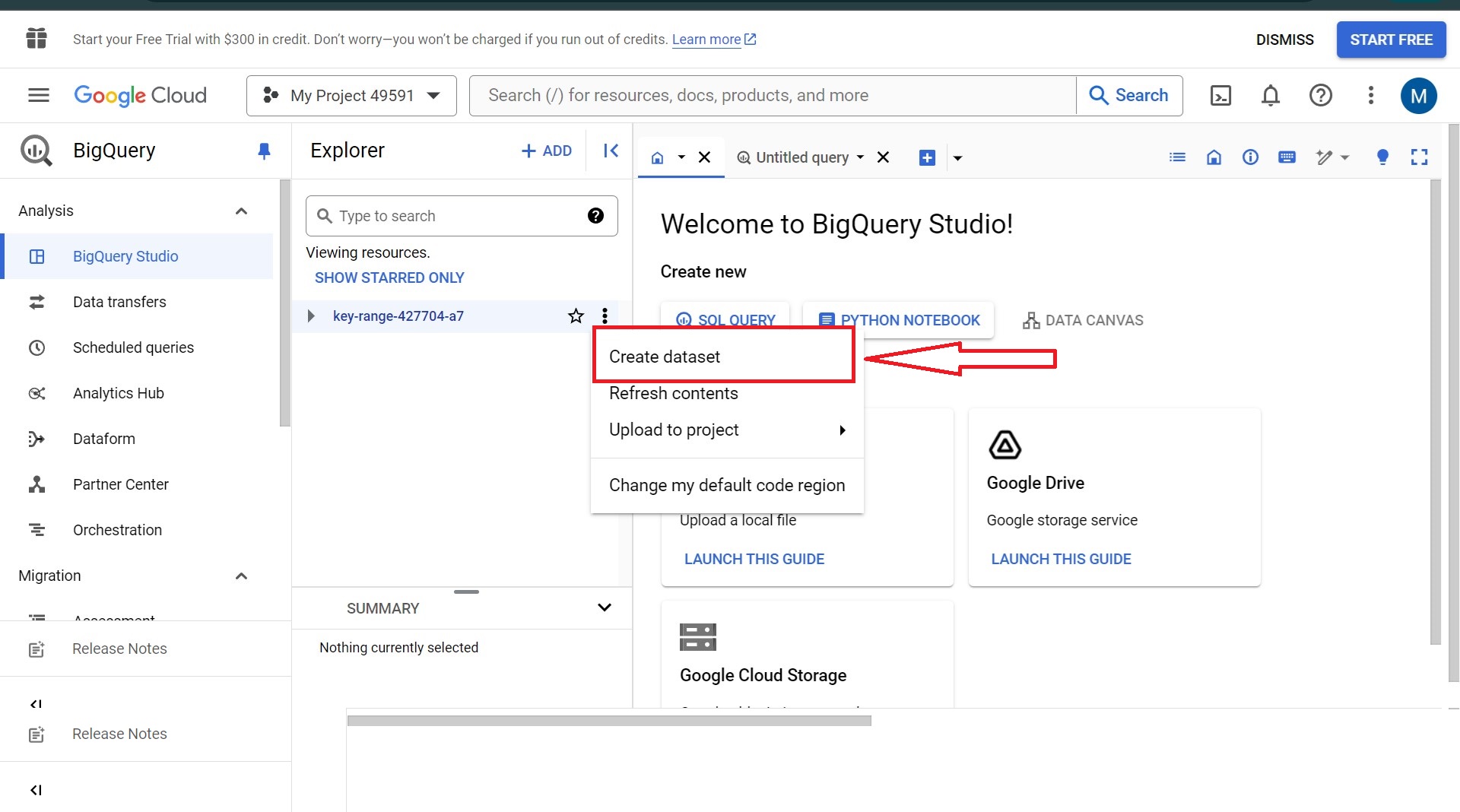

Step 4.1: Go to the Google Cloud console and select BigQuery from the dropdown. Select the Project ID you want to work with and choose Create Dataset.

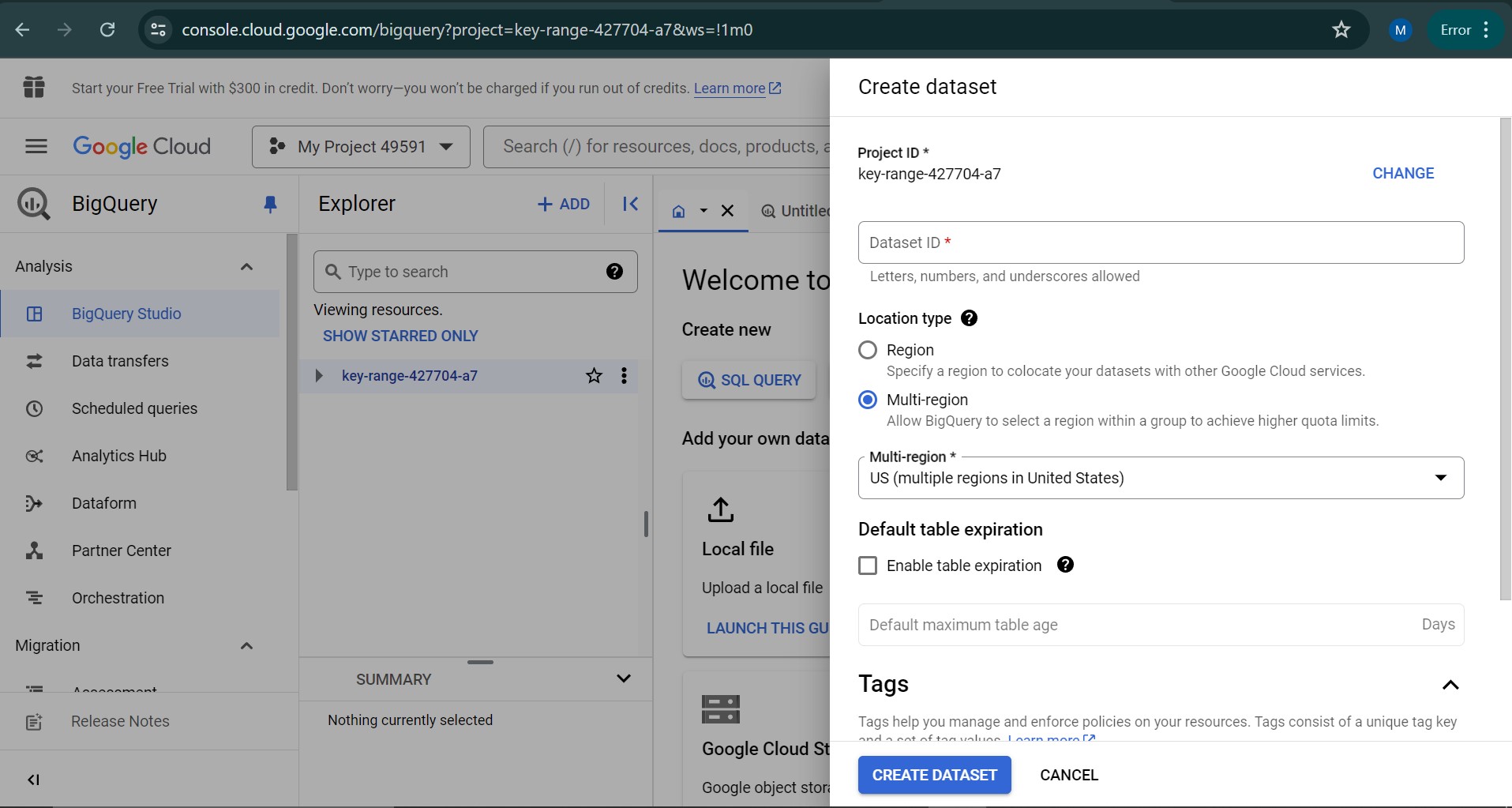

Step 4.2: Provide the configuration as per your requirements and create the dataset.

Your dataset should be successfully created after this process.

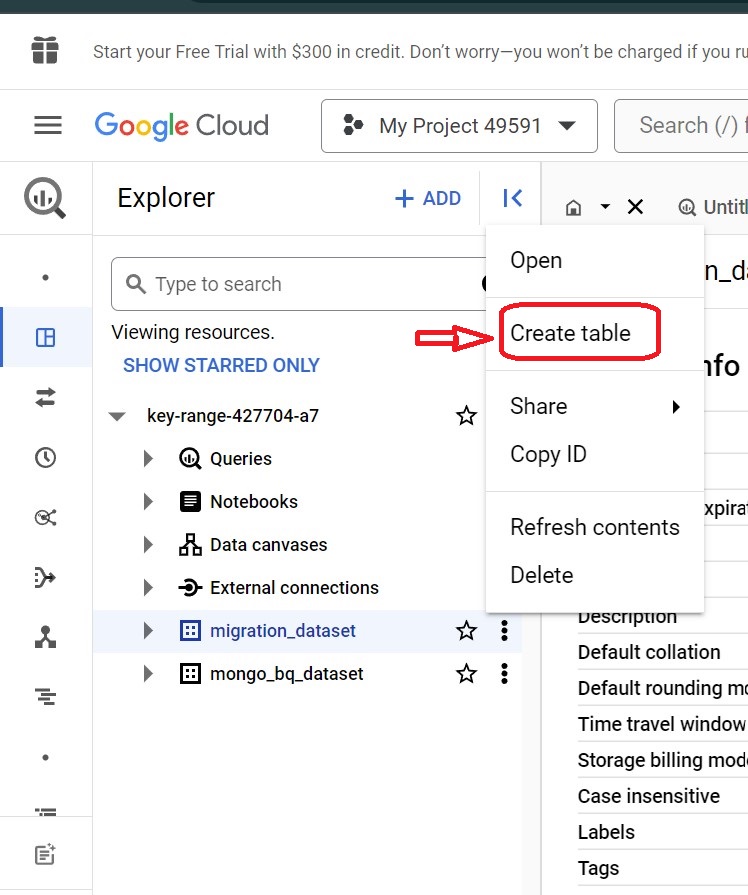

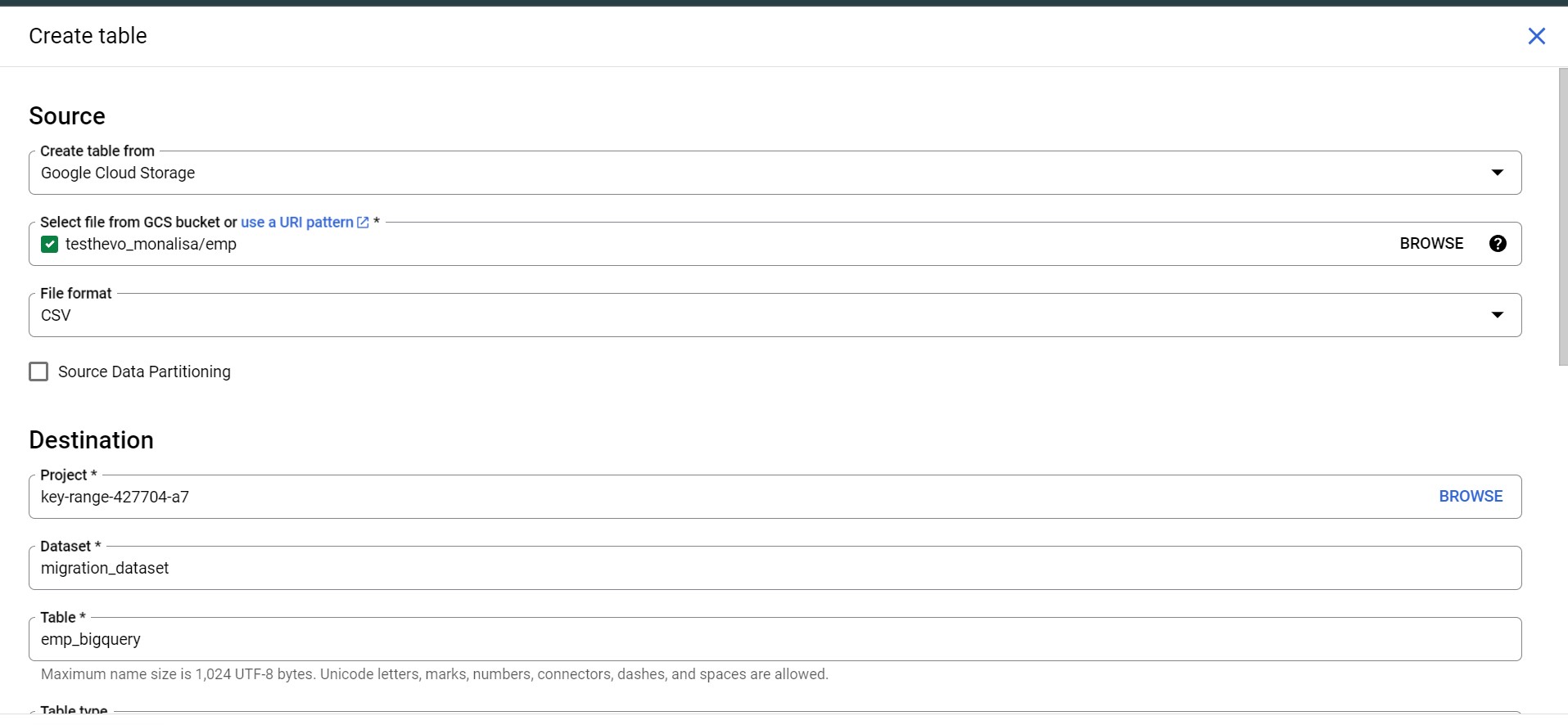

Step 4.3: To create a table, select the project ID of the dataset, choose the newly created dataset, and click ‘Create Table’ from the side menu.

Step 4.4: Select Google Cloud Storage as the source, then choose the GCS bucket and the .csv file inside it. Set the file format to match the file, which in this case is CSV. Provide a name for the table in the BigQuery dataset. If you want to migrate the data as it is, enable schema auto-detection so that the columns are mapped for you.

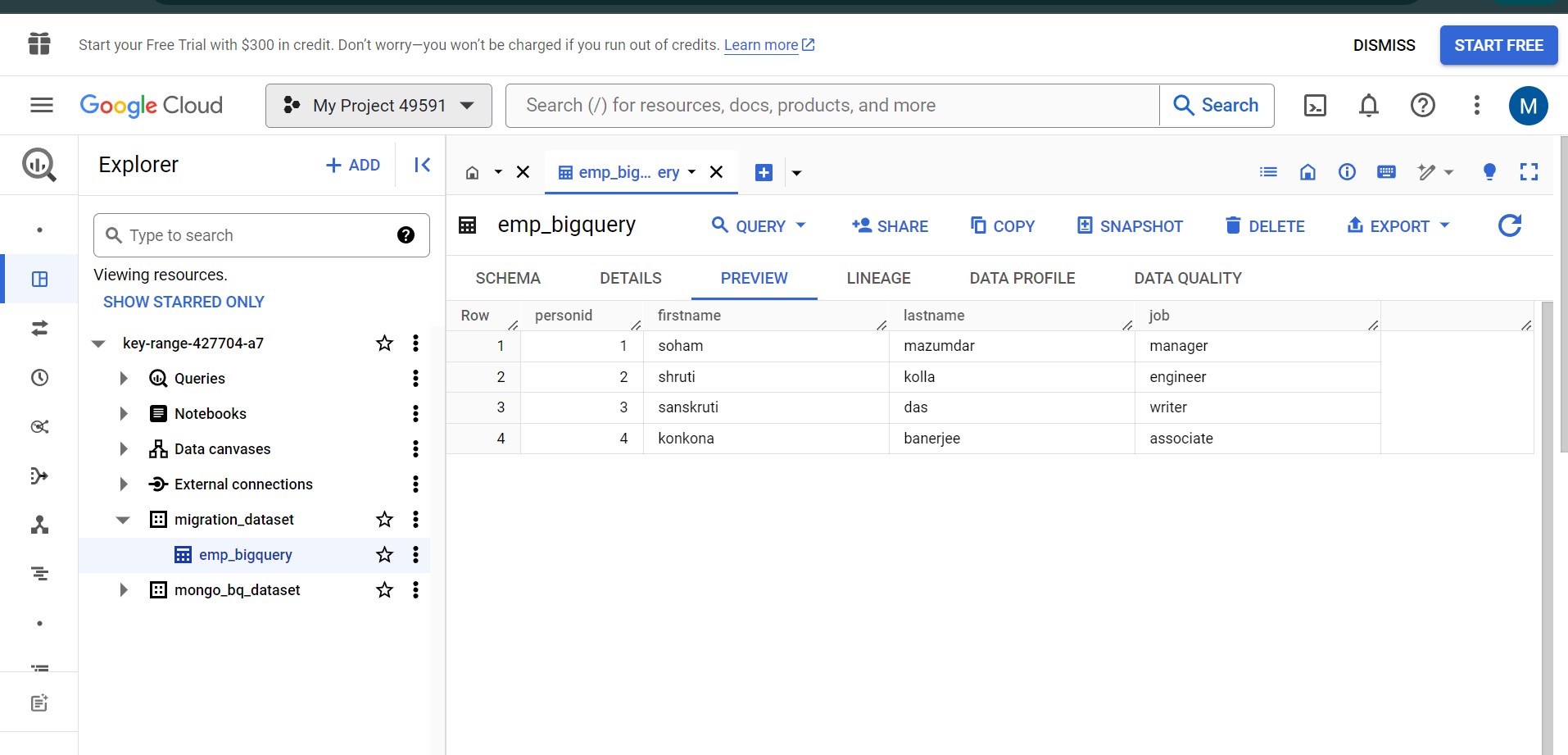

Step 4.5: Your table should be created next and loaded with the same data from PostgreSQL.
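For repeated loads, the console steps in Step 4 can be approximated in code. This sketch assumes the `google-cloud-bigquery` client library and an already existing dataset; the project, dataset, table, and helper names are hypothetical. It mirrors the choices made in the console: CSV source format, one header row to skip, and automatic schema detection.

```python
def full_table_id(project: str, dataset: str, table: str) -> str:
    """Join the three parts into the project.dataset.table form BigQuery uses."""
    return f"{project}.{dataset}.{table}"


def load_csv_from_gcs(uri: str, table_id: str) -> int:
    """Load a CSV file from GCS into a BigQuery table and return its row count."""
    # Imported lazily so full_table_id works without the library installed.
    from google.cloud import bigquery

    client = bigquery.Client()
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.CSV,
        skip_leading_rows=1,  # the export was written with a header row
        autodetect=True,      # let BigQuery infer column names and types
    )
    load_job = client.load_table_from_uri(uri, table_id, job_config=job_config)
    load_job.result()  # blocks until the job finishes; raises if it failed
    return client.get_table(table_id).num_rows
```

Auto-detection is convenient for a one-off migration; if the inferred types turn out not to match the PostgreSQL columns, passing an explicit schema in the job configuration is the usual alternative.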

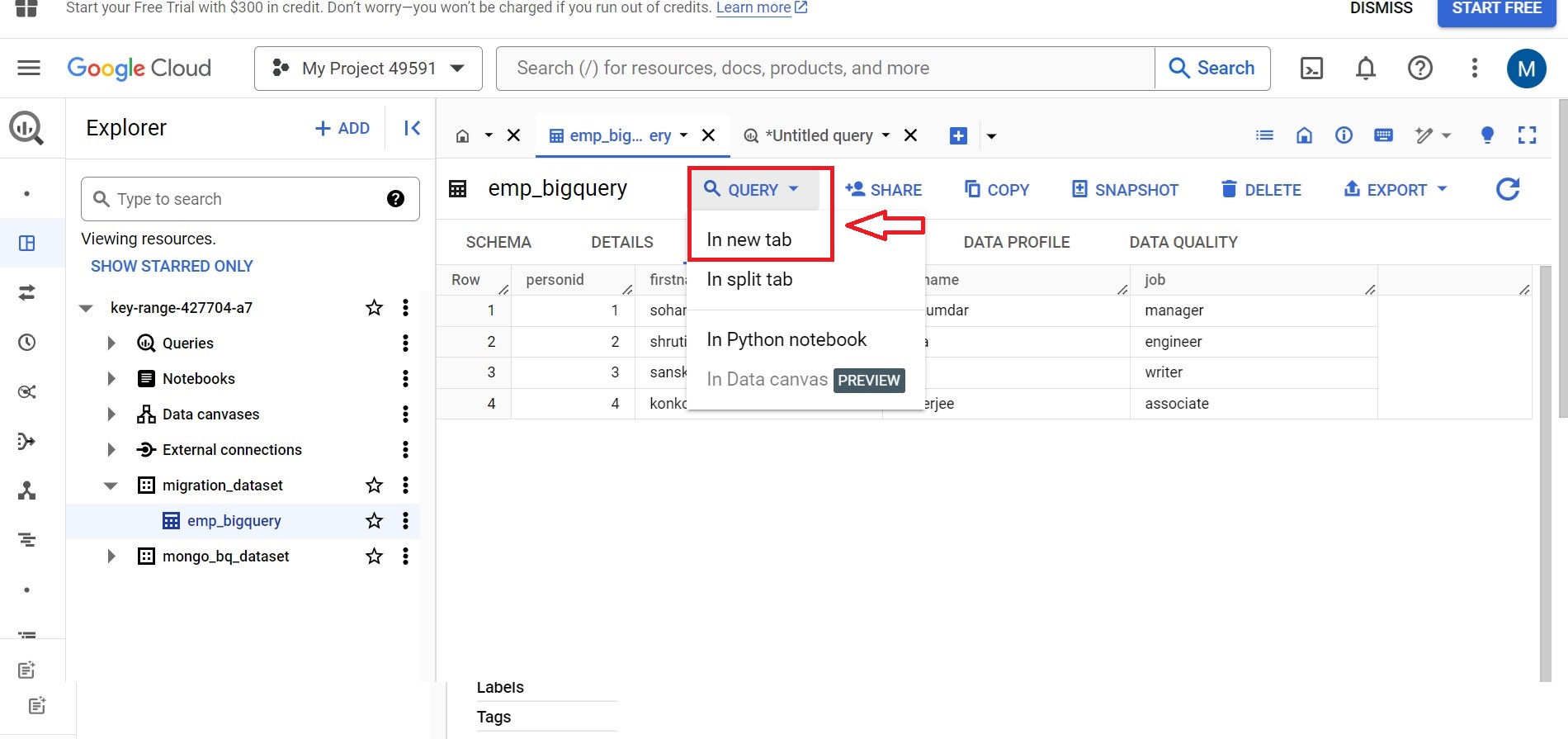

Step 5: Query the table in BigQuery

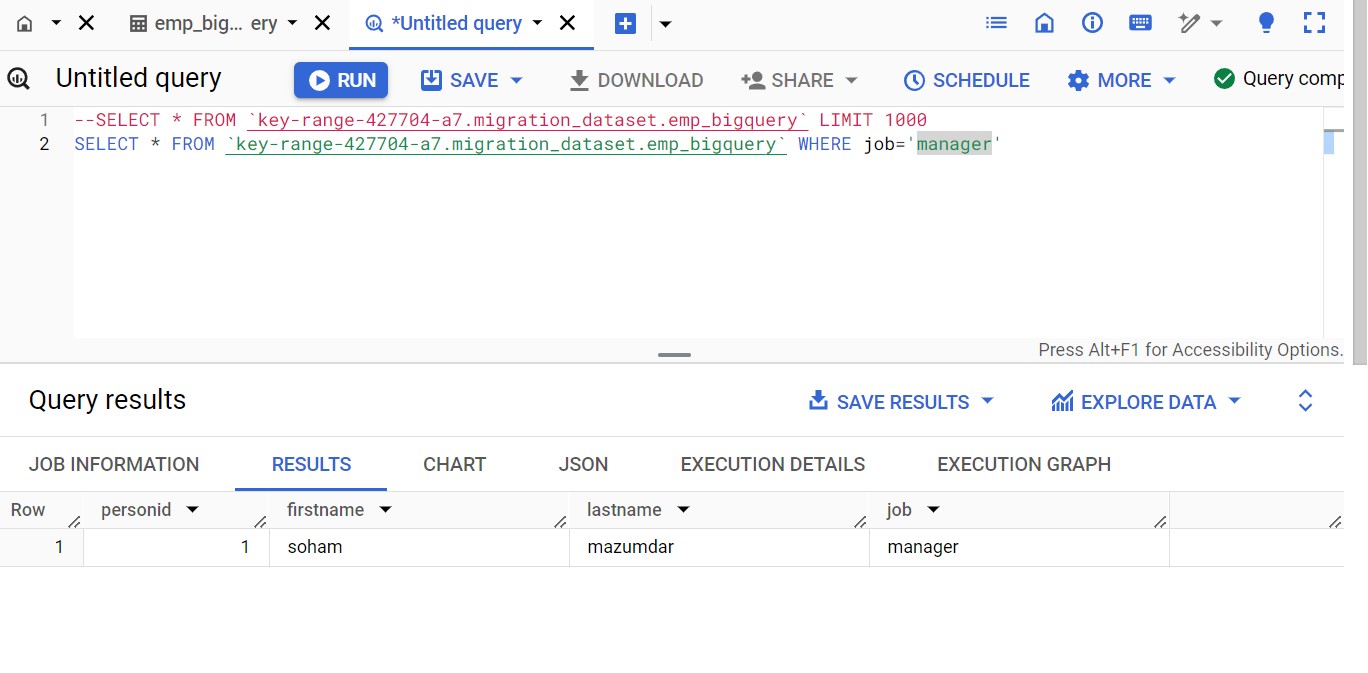

After loading the table into BigQuery, you can query it by selecting the QUERY option above the table and writing standard SQL.

Note: Reference the correct project ID, dataset name, and table name in your query.

The above query extracts records from the emp table where the job is manager.
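A query of the kind described above could also be issued from code. The example below is only a sketch: it assumes the `google-cloud-bigquery` client library, a column named `job`, and the value `MANAGER`, none of which are spelled out in the text. The filter value is passed as a query parameter rather than concatenated into the SQL string.

```python
def build_query(table_id: str) -> str:
    """Return a parameterized query against a project.dataset.table ID."""
    # Backticks allow the project ID to contain dashes.
    return f"SELECT * FROM `{table_id}` WHERE job = @job"


def fetch_by_job(table_id: str, job: str = "MANAGER") -> list:
    """Run the query and return the matching rows as dictionaries."""
    # Imported lazily so build_query works without the library installed.
    from google.cloud import bigquery

    client = bigquery.Client()
    job_config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("job", "STRING", job)]
    )
    rows = client.query(build_query(table_id), job_config=job_config).result()
    return [dict(row.items()) for row in rows]
```

For example, `fetch_by_job("my-project.my_dataset.emp")` would return the rows whose `job` column equals `MANAGER`, given a table with that column.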

Limitations of the Manual Method:

- The manual migration process can be time-consuming, requiring significant effort to export, transform, and load data, especially if the dataset is large or complex.

- Manual processes are susceptible to human errors, such as incorrect data export settings, file handling mistakes, or misconfigurations during import.

- When migration is frequent or involves multiple tables and datasets, manual processes can become repetitive, inefficient, and add to the overall workload.

- Manual migrations can be resource-heavy, taking up valuable computing power and human effort that could be better spent on more critical tasks.

Additional Read –

- Migrate Data from Postgres to MySQL

- PostgreSQL to Oracle Migration

- Connect PostgreSQL to MongoDB

- Connect PostgreSQL to Redshift

- Replicate Postgres to Snowflake

Conclusion

In this guide, we have walked you through two approaches for migrating data from PostgreSQL to BigQuery: the manual method, which requires significant configuration and effort, and the automated method using tools like Hevo Data. While manual migration can be complex, automated data pipeline tools greatly simplify the process. No matter which method you choose, following these steps will help ensure a smooth and successful migration.

With Hevo Data, you can seamlessly automate the entire migration process, eliminating the need for complex setups. Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand.

FAQ on PostgreSQL to BigQuery

How do you transfer data from Postgres to BigQuery?

To transfer data from PostgreSQL to BigQuery, export your PostgreSQL data to a format like CSV or JSON, then use BigQuery’s data import tools or APIs to load the data into BigQuery tables.

Can I use PostgreSQL in BigQuery?

No, BigQuery does not natively support PostgreSQL as a database engine. It is a separate service with its own architecture and SQL dialect optimized for large-scale analytics and data warehousing.

Can PostgreSQL be used for Big Data?

Yes, PostgreSQL can handle large datasets and complex queries effectively, making it suitable for big data applications.

How do you migrate data from Postgres to Oracle?

To migrate data from PostgreSQL to Oracle, export the PostgreSQL data as SQL scripts or CSV files (for example, with pg_dump or the COPY command), then import them into Oracle using SQL*Loader or SQL Developer.