If you’re using Salesforce to manage your customer data and Amazon Redshift for analytics, you might need to integrate the two to gain valuable insights. Salesforce is a powerhouse for CRM, but its data can be challenging to analyze without the right tools. That’s where Redshift comes in, providing a scalable data warehouse for deep analysis.

In this blog, I’ll walk you through two easy methods to load data from Salesforce to Redshift, making your integration process seamless and helping you unlock the full potential of your data.

What Is Salesforce?

Salesforce is one of the world’s most widely used customer relationship management (CRM) platforms. It has many features for managing your key accounts and sales pipelines. While Salesforce does provide built-in analytics, many businesses want to extract this data and combine it with data from other sources, such as marketing and product systems, to get deeper insights into their customers. This can be achieved by bringing the CRM data into a modern data warehouse like Redshift.

What Is Redshift?

Amazon Redshift is a fully managed data warehouse designed for big data, offering scalable clusters for parallel querying. It automates tasks like backups, maintenance, and security. Each cluster’s compute nodes are divided into slices that process portions of the data in parallel, which supports simultaneous queries and reduces waiting times.

Let’s look more closely at both of these methods. Also, before reading the methods, you can check our article on Salesforce Connect.

Are you having trouble migrating your data into Redshift? With our no-code platform and competitive pricing, Hevo makes the process seamless and cost-effective.

- Easy Integration: Connect and migrate data into Redshift without any coding.

- Auto-Schema Mapping: Automatically map schemas to ensure smooth data transfer.

- In-Built Transformations: Transform your data on the fly with Hevo’s powerful transformation capabilities.

- 150+ Data Sources: Access data from over 150 sources, including 60+ free sources.

You can see it for yourself by looking at our 2,000+ happy customers, such as Postman, DoorDash, ThoughtSpot, and Pelago.

Method 1: Best Way To Move Data From Salesforce to Redshift: Using Hevo

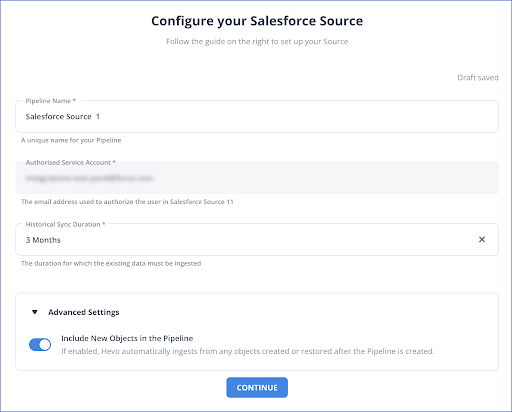

Step 1.1: Configure Salesforce As Your Source

Step 1.2: Configure Redshift As Your Destination

By automating the burdensome ETL tasks, Hevo ensures that your Salesforce data is moved to Amazon Redshift securely, reliably, and in real time.

Method 2: Using Data Loader Export Wizard To Move Data From Salesforce to Redshift

Step 2.1: Export Data From Salesforce Using Data Loader Export Wizard

- Log in to your Salesforce account. Search for “Data Export” in the search bar.

- Choose a Salesforce object from which you want to export data. If the name of your object isn’t mentioned, choose “Show all Salesforce Objects” to view every object you can access.

- You can export the data immediately, or you can schedule the export.

Step 2.2: Load Data to S3

- Open your Amazon S3 Console and click the “Create Bucket” option.

- Now, type in a unique S3 bucket name and select a region.

- Next, click “Create”.

- Now, choose the new bucket you just created, click the “Actions” button, and select “Create Folder” in the drop-down list. Name your folder.

- From the drop-down list, choose the name of the data folder you just entered.

- Now select “Files Wizard” and choose “Add Files.”

- Select the Salesforce CSV file you downloaded and click “Start Upload.”
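If you would rather script the upload than click through the console, the steps above can be sketched in Python. This is a minimal sketch, assuming the third-party `boto3` package is installed and AWS credentials are already configured. The function names `build_s3_key` and `upload_export` are hypothetical helpers made up for this example, not part of any library.

```python
from pathlib import PurePosixPath


def build_s3_key(folder: str, local_path: str) -> str:
    """Return the object key '<folder>/<file name>' for an exported CSV."""
    # Normalise Windows separators so only the file name is kept.
    file_name = PurePosixPath(local_path.replace("\\", "/")).name
    return f"{folder.strip('/')}/{file_name}"


def upload_export(local_path: str, bucket: str, folder: str) -> str:
    """Upload the exported CSV to an existing bucket and return its s3:// URI."""
    # boto3 is the AWS SDK for Python (third-party). It is imported here so
    # that build_s3_key can be used and tested without it.
    import boto3

    key = build_s3_key(folder, local_path)
    boto3.client("s3").upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"
```

For example, `upload_export("Account.csv", "my-salesforce-exports", "salesforce")` would place the file at `s3://my-salesforce-exports/salesforce/Account.csv`, where the bucket and folder names are placeholders for the ones you created above.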

Step 2.3: Move Data From S3 to Redshift

Run the Copy Command in Redshift to load data from S3 to Redshift.

COPY table_name [ column_list ] FROM data_source CREDENTIALS access_credentials [options]

After this, your data will be successfully loaded into the Redshift data warehouse.
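To make the COPY syntax more concrete, here is a minimal Python sketch that fills it in for a CSV file with a header row. It assumes you authorize with an IAM role attached to your cluster (the `IAM_ROLE` parameter, an alternative to `CREDENTIALS`). The table name, bucket path, and role ARN are placeholders, and `build_copy_statement` is a hypothetical helper written for this example.

```python
def build_copy_statement(table: str, s3_uri: str, iam_role_arn: str) -> str:
    """Build a Redshift COPY statement for a CSV file that has a header row."""
    # Values are interpolated directly into the SQL text, so they should come
    # from trusted configuration, never from user input.
    return (
        f"COPY {table}\n"
        f"FROM '{s3_uri}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        "FORMAT AS CSV\n"
        "IGNOREHEADER 1;"
    )


# Placeholder values for illustration only.
print(
    build_copy_statement(
        "salesforce_account",
        "s3://my-salesforce-exports/salesforce/Account.csv",
        "arn:aws:iam::123456789012:role/RedshiftCopyRole",
    )
)
```

You can run the printed statement from any SQL client connected to your cluster. The target table must already exist, with columns in the same order as the CSV.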

Limitations of Using the Manual Method To Move Data From Salesforce to Redshift

- Manual effort: Requires repetitive manual tasks to export data from Salesforce and load it into Redshift, making it time-consuming.

- Data size limitations: Salesforce Export Wizard has data size restrictions, making exporting large datasets difficult.

- Lack of real-time updates: Manual exports don’t support real-time data syncing, leading to outdated information in Redshift.

- Error-prone process: Manual data handling increases the chances of errors, such as missing or incorrect data during export/import.

- No automation: The process lacks automation, requiring regular human intervention, which is inefficient for continuous data loading.

For an efficient ETL process from Salesforce to Redshift, choosing the right data integration tool can streamline data transfer, reduce manual effort, and enhance data reliability.

Think about how you would:

- Know whether data in Salesforce has changed.

- Know when Redshift is not available for writing.

- Find the resources to rewrite code when needed.

- Find the resources to update the Redshift schema in response to new data requests.
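To give a sense of what the first question involves if you build this yourself, here is a minimal sketch of one common approach: query only the records modified since your last successful sync. It assumes you filter on Salesforce’s standard `SystemModstamp` field and keep track of the last sync time yourself. `build_incremental_soql` is a hypothetical helper, not a Salesforce API.

```python
from datetime import datetime, timezone


def build_incremental_soql(sobject: str, fields: list[str], last_sync: datetime) -> str:
    """Build a SOQL query for records modified after the last successful sync.

    last_sync should be timezone-aware; it is converted to UTC.
    """
    # SOQL datetime literals are unquoted ISO 8601 timestamps.
    since = last_sync.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return (
        f"SELECT {', '.join(fields)} FROM {sobject} "
        f"WHERE SystemModstamp > {since} ORDER BY SystemModstamp"
    )
```

You would still need to store the last sync time, run the query through a Salesforce API, and handle deleted records separately, which is part of the effort the questions above point to.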

Opting for Hevo cuts out all these questions. You will have fast and reliable access to analysis-ready data, allowing you to focus on finding meaningful insights.

Why Integrate Salesforce with Redshift?

By migrating your data from Salesforce into Redshift, you will be able to help your business stakeholders find the answers to these questions:

- What percentage of customer queries from a region come through email?

- Customers acquired from which channel raise the maximum number of tickets?

- What percentage of agents respond to tickets from customers acquired through the organic channel?

- Customers acquired from which channel have the maximum satisfaction ratings?

- How does customer SCR (Sales Close Ratio) vary by marketing campaign?

- How does the number of calls to the user affect the duration of activity with a product?

- How does Agent performance vary by Product Issue Severity?

Following ETL best practices can optimize data migration, ensuring data accuracy and minimizing latency during the transformation process.

Conclusion

This blog talks about the two methods you can use to seamlessly move data from Salesforce to Redshift.

Integrating Salesforce with Redshift enables seamless analysis and data-driven decision-making. While manual methods can be time-consuming and complex, using Hevo simplifies the process. With its no-code interface and automated data pipelines, Hevo ensures efficient, real-time data transfer without manual effort. It handles data mapping, transformation, and error resolution, letting you focus on insights instead of integration challenges.

You can try Hevo’s 14-day free trial. You can also have a look at the unbeatable pricing that will help you choose the right plan for your business needs!

Frequently Asked Questions

1. How Do I Export Data from Salesforce to Redshift?

- ETL tools: Use tools like Hevo Data, Fivetran, or Talend to extract data from Salesforce and load it into Redshift.

- AWS services: AWS Data Pipeline or AWS Glue can be configured to automate this process.

- Custom scripts: Write custom Python or Java programs using Salesforce APIs to export data and load it into Redshift.
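As a small taste of the custom-script route, here is a minimal Python sketch of one step: turning records returned by a Salesforce query into CSV text that could then be uploaded to S3 and loaded with COPY. It assumes each record is a dictionary that may include the `attributes` metadata entry found in Salesforce REST API query results. `records_to_csv` is a hypothetical helper written for this example.

```python
import csv
import io


def records_to_csv(records: list[dict], fields: list[str]) -> str:
    """Serialise Salesforce records to CSV text, keeping only the given fields."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    for record in records:
        # Keep only the requested fields; this drops the 'attributes' metadata
        # and writes an empty value for anything missing.
        writer.writerow({field: record.get(field, "") for field in fields})
    return buffer.getvalue()
```

A real script would also need authentication, pagination, and error handling around this step.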

2. How Do I Transfer Data to Redshift?

Use the COPY command in Redshift to load data from sources like S3.

Utilize ETL tools like Hevo Data, Fivetran, or AWS Glue to manage the transfer process.

3. Can Salesforce connect to Redshift?

Yes, Salesforce can connect to Amazon Redshift using ETL tools or data connectors.