Easily move your data from Azure SQL To Redshift to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real-time—check out our 1-minute demo below to see the seamless integration in action!

Azure SQL is a database service that facilitates high-performance querying with complete infrastructure management. However, it does not provide clarity on database transaction unit requirements and does not support connections with linked servers. All these limitations make Azure SQL a less preferred database solution.

To overcome these challenges, you can integrate it with database services like Redshift, which supports linked server connection with servers like SQL. Redshift is a data warehouse offering massively parallel processing and columnar storage facilities with high scalability at the petabyte level. You can integrate Azure SQL to Redshift to conduct advanced data processing and gain useful insights to improve your organization’s growth.

Are you wondering how do I migrate data from Azure SQL to Redshift? This article explains two methods for transferring data from Azure SQL to Redshift.

Table of Contents

Why Integrate Azure SQL to Redshift

The following reasons indicate it would be beneficial if you import data into Redshift from Azure SQL, such as:

- You can easily integrate Redshift with other AWS services to leverage its best performance and security features. Azure SQL, which is managed by Microsoft, may not be easily integrated with AWS services.

- Redshift provides massively parallel processing capabilities that allow you to query your datasets faster than that in Azure SQL.

- Azure SQL is a cost-effective option for small enterprises with a smaller volume of datasets. In contrast, Redshift is a cost-effective solution for large enterprises with a high volume of data.

- Redshift is a more suitable service for online analytical processing (OLAP), while Azure SQL is an online transactional processing (OLTP) database.

- You can store your data in a columnar format in Redshift, enabling you to store and perform complex data analytics on large volumes of datasets. Thus, you can convert Azure SQL to Redshift to handle massive datasets efficiently.

Method 1: Using Hevo Data to Integrate Azure SQL to Redshift

Hevo Data, an Automated Data Pipeline, provides you with a hassle-free solution to connect Azure SQL to Redshift within minutes with an easy-to-use no-code interface. Hevo is fully managed and completely automates the process of loading data from Azure SQL to Redshift and enriching the data and transforming it into an analysis-ready form without having to write a single line of code.

Method 2: Using CSV file to Integrate Data from Azure SQL to Redshift

This method would be time-consuming and somewhat tedious to implement. Users will have to write custom codes to enable two processes, streaming data from Azure SQL to Redshift. This method is suitable for users with a technical background.

When integrated, moving data from Azure SQL into Redshift could solve some of the biggest data problems for businesses. Hevo offers a 14-day free trial, allowing you to explore real-time data processing and fully automated pipelines firsthand. With a 4.3 rating on G2, users appreciate its reliability and ease of use—making it worth trying to see if it fits your needs.

GET STARTED WITH HEVO FOR FREE[/hevoButton]

Azure SQL Overview

Azure SQL is a PaaS (Platform as a Service) database solution offered by Microsoft Azure. As a fully managed service, it handles infrastructure management tasks such as upgradation, patching, backup, and monitoring. Azure SQL also provides high availability and performance and helps you manage your data effectively for analytics.

There are two deployment options in Azure SQL. You can use a Single Database or an Elastic Pool for Azure SQL database deployment. A single database is a fully managed, isolated database similar to the SQL Server database engine. An Elastic Pool, on the other hand, is a collection of single databases that share resources, such as CPU or memory. You can choose any deployment options according to your requirements for using Azure SQL for complex analytics.

Redshift Overview

Redshift is a cloud-based data warehouse platform managed by Amazon Web Services (AWS). It offers a petabyte level of scalability and massively parallel processing capabilities for faster querying of large amounts of data. You can also conduct predictive analytics with Redshift ML, which helps you to build and execute machine learning models within the data warehouse.

Redshift offers robust security features, such as role-based access controls, row and column-level security, and easy authentication mechanisms to secure your data. It also provides a columnar data storage format that enhances compression and provides faster query performance.

Methods to Integrate Azure SQL to Redshift

- Method 1: Using Hevo Data to Integrate Azure SQL to Redshift

- Method 2: Using CSV file to Integrate Azure SQL to Redshift

Method 1: Using Hevo Data to Integrate Azure SQL to Redshift

Step 1.1: Configuration of Azure SQL as a Source

Prerequisites

- You should have access to the MS SQL Server version 2008 or higher. Also, Whitelist Hevo’s IP address.

- If you are using Change Tracking or Table as Pipeline Mode, and the Query mode is Change Tracking, ensure that the database user is granted CHANGE TRACKING and ALTER DATABASE privileges.

- You should also grant SELECT and VIEW CHANGE TRACKING privileges to the database user.

- Check the availability of the database’s hostname and the source instance’s port number.

- Also, ensure you are assigned the Team Administrator, Team Collaborator, or Pipeline Administrator role in Hevo to create the Pipeline.

Once all the prerequisites are fulfilled, configure Azure SQL as a source in Hevo.

For further information on the configuration of Azure SQL as a source, refer to the Hevo documentation.

Step 1.2: Configuration of Redshift as Destination

Prerequisites

- You should have access to an active Amazon Redshift instance and Redshift database.

- Also, in the Amazon Redshift instance, ensure the availability of the database hostname and port number.

- Whitelist Hevo’s IP addresses and grant SELECT privileges to the database user.Further, you should be assigned the Team Collaborator or any administrator role other than the Billing Administrator role in Hevo.

After fulfilling all the prerequisites, configure Redshift as a destination in Hevo.

For more information on the configuration of Redshift as a destination, refer to the Hevo documentation.

That’s it, literally! You have connected Azure SQL to Redshift in just 2 steps. These were just the inputs required from your end. Now, everything will be taken care of by Hevo. It will automatically replicate new and updated data from Azure SQL to Redshift.

Important Features provided by Hevo Data

- Data Transformation: Hevo Data provides you the ability to transform your data for analysis with a simple Python-based drag-and-drop data transformation technique.

- Automated Schema Mapping: Hevo Data automatically arranges the destination schema to match the incoming data. It also lets you choose between Full and Incremental Mapping.

- Incremental Data Load: It ensures proper utilization of bandwidth both on the source and the destination by allowing real-time data transfer of the modified data.

With a versatile set of features, Hevo Data is one of the best tools to export data from Azure SQL to Redshift files.

Method 2: Using CSV file to Integrate Data from Azure SQL to Redshift

You can use CSV files to convert Azure SQL to Redshift as follows:

Step 2.1: Export Data from Azure SQL to a CSV file Using BCP

You can export Azure SQL data to a CSV file with the help of Bulk Copy Program (BCP) by following the below-mentioned steps:

- First, ensure that the BCP utility is installed in your system. For this, you can run the following command in the Command Prompt:

C:\WINDOWS\system32> bcp /v- The output will be the following if BCP is installed:

C:\WINDOWS\system32> bcp /v

BCP - Bulk Copy Program for Microsoft SQL Server.

Copyright (C) Microsoft Corporation. All Rights Reserved.

Version: 15.0.2000.5

- After this, run the following code to export your desired data table to a CSV file:

bcp table_name out C:\Path\target_file_name -c -U username -S Azure.database.windows.netStep 2.2: Uploading CSV file to S3 Bucket

Follow these steps to upload a CSV file in your native system to the Amazon S3 bucket.

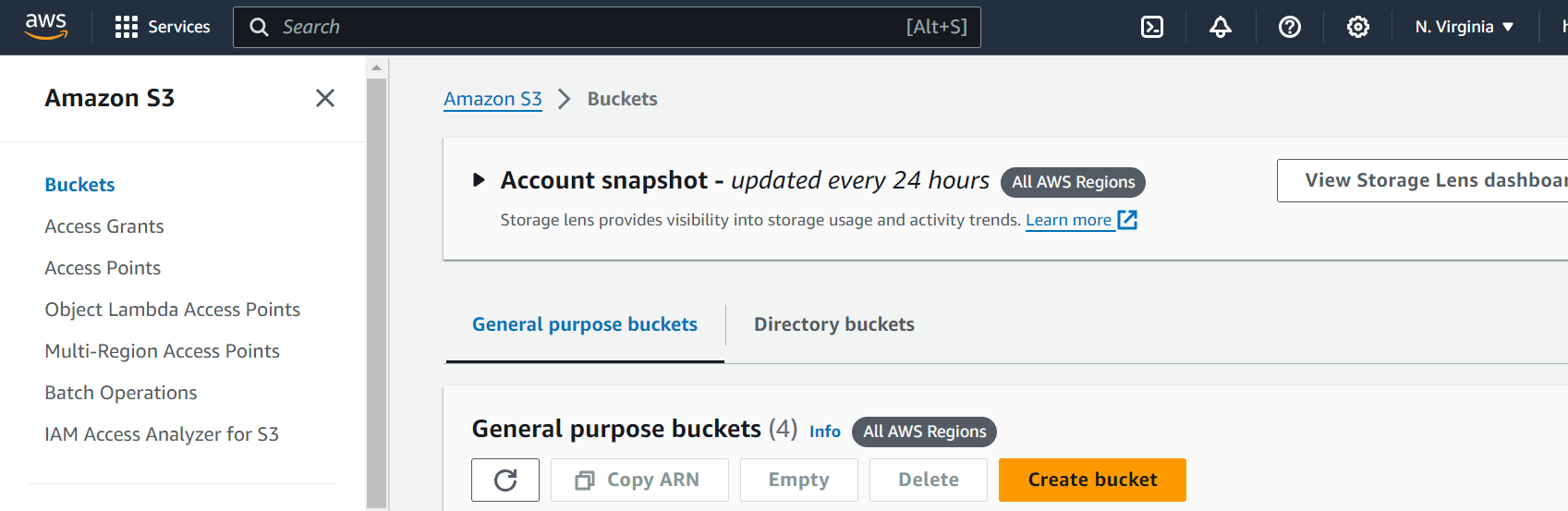

Create a Bucket

- First, sign in and go to the Amazon S3 Console in the AWS Management Console.

- Next, choose Create Bucket and the AWS region where you want to create it.

- Now, enter a unique bucket name in the Bucket Name option of the Create Bucket dialog box.

- Choose the suggested defaults for the rest of the options, and then choose Create Bucket.

- On successfully creating an Amazon S3 bucket, the Console displays your empty bucket in the Buckets panel.

Create a Folder

- First, choose the name of the new bucket.

- Then, click on the Create Folder button.

- Name the new folder as load.

Upload the CSV files to the new Amazon S3 bucket

- Choose the name of the data folder where you want to upload the CSV file.

- In the Upload wizard, choose Add Files and follow the Amazon S3 Console instructions to upload your CSV file.

- Choose Upload.

Step 2.3: Exporting Data from S3 Bucket to Redshift

After transferring the CSV file to the S3 bucket, you should use the COPY command to export the data in it to Redshift using the following code:

COPY table_name [ column_list ] FROM data_source CREDENTIALS access_credentials [options] Limitations of Using CSV File to Load Data from Azure SQL to Redshift

There are certain limitations of using a CSV file and S3 bucket to copy data from Azure SQL to Redshift, such as:

- Limited features of CSV: CSV files support basic data types such as numbers or dates but do not support complex data types like nested objects or images. Also, they do not provide data security mechanisms like access control or encryption, which may expose your data to security threats.

- Time-consuming: Redshift is suitable for handling large volumes of data. CSV files present certain constraints when dealing with massive amounts of data and may take a lot of time to transfer this data. This makes CSV files a less preferred option for data migration from Azure SQL to Redshift.

- Constraints of S3 bucket: It is not possible to transfer the ownership of the S3 bucket to an AWS account other than the one in which it was created. Also, you cannot change the name and AWS region of the S3 bucket after the initial setup. This can create constraints in seamless data integration, especially for new AWS users.

Use Cases of Azure SQL to Redshift

There are several use cases of Azure SQL to Redshift integration, such as:

- Business Intelligence: After integrating Azure SQL to Redshift, you can combine it with BI tools like Tableau, Power BI, Looker, etc., to create interactive dashboards and reports to gain useful business insights.

- Financial Sector: Redshift can help you store and analyze financial data related to stock markets, transaction data, or risk data.

- Healthcare Services: You can use Redshift to streamline the organization and analysis of healthcare data, such as patient health histories or research data related to the medical sector.

Learn More About:

- How to Migrate Azure Postgres to Redshift

- How to load data from Azure SQL to BigQuery

- Amazon Redshift vs Azure Synapse

- Azure Synapse vs Azure SQL DB

- Azure SQL to SQL Server

Conclusion

- This blog provides information on how to transfer data from Azure SQL to Redshift. It gives information on two methods for data integration.

- One involves using a CSV file to transfer data from Azure SQL to Redshift. However, it has some drawbacks because of the limitations of CSV files, such as a lack of support for complex data types and the absence of security features.

- The other method uses Hevo Data, an automated data integration platform for this integration. It offers features such as zero-code data pipeline creation capabilities, a simple interface, and an extensive connector library for seamless data transfer.

Take Hevo’s 14-day free trial to experience a better way to manage your data pipelines. You can also check out the unbeatable pricing, which will help you choose the right plan for your business needs.

FAQ on Azure SQL to Redshift

1. How is Azure SQL different from SQL Server?

Azure SQL is a cloud-based service that automates infrastructure management tasks such as upgrading or monitoring databases. You have to do these tasks manually while using the SQL Server. However, you can deploy SQL Server on a cloud platform like Azure Infrastructure as a Service (IaaS). It runs the SQL Server with the help of virtual machines while you have to take responsibility for infrastructure management tasks such as upgradation or monitoring the virtual machines.

2. What is an Amazon S3 bucket?

Amazon S3 bucket is a data storage service that stores data as objects in buckets. In addition to storage, S3 buckets are also used for data backup and delivery.

3. How can data be transferred from Azure SQL to Amazon Redshift?

Data can be transferred from Azure SQL to Amazon Redshift using ETL tools like Hevo or by setting up a custom pipeline with AWS DMS.