Imagine spending hours manually handling data tasks, only to discover that one small mistake has caused the entire process to fail. It is frustrating, and it is exactly why automation matters.

Automation is essential for efficiency and data integrity in business. According to a McKinsey report [1], task automation can cut operational costs by up to 30%, freeing businesses to invest in other important areas. This blog shows you how to automate and optimize your Airflow-Oracle operations for more productive workflows.


Overview of Apache Airflow

Apache Airflow is a tool for designing and orchestrating workflows. It lets users write and schedule workflows as Directed Acyclic Graphs (DAGs) in Python code. Its robust scheduling engine, extensible architecture, and powerful integrations let you manage your repetitive data tasks. It is among the most widely used open-source platforms for orchestrating tasks and automating complex data pipelines.
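You can see what "acyclic graph of tasks" means without installing Airflow. The sketch below uses Python's standard-library graphlib (Python 3.9+) to resolve a hypothetical four-step ETL pipeline into a run order, and shows that a cycle is rejected. The task names are invented for illustration. In a real DAG file, you would declare the same dependencies on Airflow operators with the >> operator (for example, extract >> transform >> load), and the scheduler would run the tasks in that order.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical ETL pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_employees": set(),
    "transform_salaries": {"extract_employees"},
    "load_to_oracle": {"transform_salaries"},
    "notify_team": {"load_to_oracle"},
}

def run_order(graph):
    """Return one valid execution order; raises CycleError if the graph has a cycle."""
    return list(TopologicalSorter(graph).static_order())

print(run_order(pipeline))

# Making the first task depend on the last one creates a cycle,
# which is exactly what the "acyclic" in DAG rules out.
cyclic = dict(pipeline, extract_employees={"load_to_oracle"})
try:
    run_order(cyclic)
except CycleError as err:
    print("not a DAG:", err.args[0])
```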

Overview of Oracle Database

Known for its robustness and scalability, Oracle Database is a relational database management system (RDBMS) widely used by large organizations. It handles demanding workloads, from processing and analyzing millions of transactions to storing enormous volumes of records in a data warehouse. Its advanced features make it a crucial part of many organizations’ IT infrastructure.

Are you looking for ways to connect your Oracle databases? Hevo has helped customers across 45+ countries connect their cloud storage and migrate data seamlessly. Hevo streamlines data migration by offering:

- Seamless data transfer between Amazon S3, DynamoDB, and 150+ other sources.

- A risk management and security framework for cloud-based systems, with SOC 2 compliance.

- Always up-to-date data with real-time data sync.

Don’t just take our word for it. Try Hevo and experience why industry leaders like Whatfix say, “We’re extremely happy to have Hevo on our side.”

Why Integrate Airflow with Oracle?

- Streamlining ETL Pipelines: Airflow can automate the Extract, Transform, and Load (ETL) process into Oracle Database, effectively managing schedules and dependencies.

- Automating Data Migration: Airflow can automate migrations between Oracle and other databases. This makes it easier to move large volumes of data and to replicate databases to cloud environments.

- Improved Database Automation and Integration: Airflow can schedule and automate Oracle database operations such as index maintenance, performance-tuning jobs, and backups. It also streamlines the integration of Oracle Database with other data sources.

Check out the Top 5 Oracle Replication Tools to simplify this integration

Setting Up Oracle using Airflow

Prerequisites

There are a few prerequisites you will need in your toolkit before you can integrate Oracle with Airflow:

- SQL and Python: Airflow is written in Python, and Python is also the language you will use to create your workflows. Use a Python version supported by your Airflow release; recent releases no longer support Python 3.7. SQL knowledge is essential for interacting with the Oracle Database.

- Oracle Database and Oracle Instant Client: You need access to a running Oracle Database, because this is where Airflow will execute tasks and manage your data. Oracle Instant Client is required by the cx_Oracle driver and by python-oracledb in thick mode. python-oracledb's default thin mode connects without it.

- Apache Airflow: Airflow must be installed and configured in your local or cloud environment.
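As a quick sanity check of the Python side of these prerequisites, the sketch below reports whether the interpreter meets a minimum version and whether the relevant packages can be found. The function name is hypothetical. The (3, 9) default is an assumption, so set min_python to whatever your Airflow release requires. The module names checked are airflow, the Oracle provider (airflow.providers.oracle), and the python-oracledb driver (oracledb).

```python
import importlib.util
import sys

def check_prerequisites(min_python=(3, 9)):
    """Report whether the interpreter and the packages this guide relies on are available."""
    report = {"python": sys.version_info[:2] >= min_python}
    for module in ("airflow", "airflow.providers.oracle", "oracledb"):
        try:
            report[module] = importlib.util.find_spec(module) is not None
        except ModuleNotFoundError:
            # find_spec imports parent packages first, so probing
            # "airflow.providers.oracle" raises when "airflow" itself is missing.
            report[module] = False
    return report

for name, ok in check_prerequisites().items():
    print(f"{name:28} {'OK' if ok else 'missing'}")
```

This check does not cover Oracle Instant Client, which only matters if you use a thick-mode driver.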

Configuring Oracle as Airflow’s Backend Database

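To configure Oracle as the backend (metadata) database, follow these steps:

- Open the airflow.cfg file in your Airflow home directory.
- Update the sql_alchemy_conn setting with the connection string. It lives under the [database] section in Airflow 2.3 and later, and under [core] in older releases.
- Reinitialize the database with airflow db init (airflow db migrate in newer releases).

Be aware that Oracle is not among the metadata databases Airflow officially supports (PostgreSQL and MySQL, plus SQLite for testing), so this setup is unsupported for production use. The Oracle provider connections described below are the usual way to work with Oracle data from Airflow.

[database]
sql_alchemy_conn = oracle+cx_oracle://yourusername:yourpassword@yourhostname:port/SID

Understanding Oracle Providers in Airflow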

The Oracle provider package is the key component that makes this integration possible. It includes a collection of hooks and operators for interacting with Oracle databases, enabling you to automate and orchestrate Oracle-based workflows.

Oracle Provider Package

The Oracle provider package gives Airflow the tools it needs to connect to Oracle databases, execute SQL queries, retrieve data, and perform database operations inside Oracle. Install it with pip:

pip install apache-airflow-providers-oracle

As a running example, take a table named employees, which holds records of all employees and their information.

Key Elements of the Oracle Provider Package

Oracle Hook

The Oracle Hook is an essential component that offers a direct interface for running SQL queries and interacting with Oracle databases. It streamlines the process of connecting to Oracle, executing SQL commands, fetching data, and managing transactions.

Oracle Operators

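Operators define the work to be performed. The Oracle provider includes operators for executing SQL queries and performing database operations. Transfer operators in other provider packages move Oracle data to external storage.

These are the most common operators:

- OracleOperator: executes a SQL command on an Oracle database. Recent provider releases deprecate it in favor of the generic SQLExecuteQueryOperator from the common SQL provider, which works with an Oracle connection.

- OracleToGCSOperator (Google provider): transfers data from Oracle to Google Cloud Storage.

- SqlToS3Operator (Amazon provider): runs a query against a SQL connection, such as an Oracle one, and writes the result to an Amazon S3 bucket.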

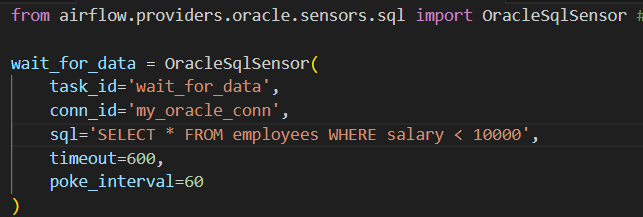

Oracle Sensors

Sensors in Airflow are a type of operator that waits for a certain condition to be true before proceeding to the next task. The Oracle provider does not ship Oracle-specific sensors. Instead, the generic SqlSensor from the common SQL provider can poll an Oracle connection with a query, for example to wait for a specific record to appear or for a database job to complete. The sensor succeeds once the first cell of the query result is truthy.

Establishing Oracle Connections via Airflow UI and CLI

Integrating Airflow with Oracle lets you build and automate complex workflows for efficient ETL and data management. Before you can create those workflows, you must connect Airflow to your Oracle database. Let’s look at how to configure Oracle connections in Airflow.

Creating Airflow Oracle Connection via Airflow UI

Step 1: Access the Airflow Web User Interface

Start the Airflow web server with airflow webserver --port 8080, then open http://localhost:8080 in your browser. You will see the Airflow dashboard, where you can manage your workflows and connections.

Step 2: Navigate to the Connections Page

You can find the “Admin” tab on the top navigation bar in the Airflow dashboard. Simply click on it, and from the drop-down, choose “Connections.” You may view all of the current connections on the Connections page.

Step 3: Create a New Oracle Connection

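Click the + button to add a new record, then fill in the connection form with the following details:

- Connection ID (for example, oracle_default)
- Connection type (Oracle)
- Host, schema, login, password, and port
- The SID or service name, which the Oracle provider reads from the Extra field as JSON, such as {"service_name": "ORCLPDB1"}

Save the form, and your Oracle connection is ready to be used in your DAGs.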
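The SID versus service-name choice often causes confusion, because the two are addressed differently in an Oracle connect string. The hypothetical helper below sketches the decision a driver has to make:

- A service name fits Oracle's Easy Connect form, host:port/service_name.
- A SID has no Easy Connect form and needs a full connect descriptor with CONNECT_DATA=(SID=...).

The string shapes follow standard Oracle Net syntax. In practice, python-oracledb's makedsn() builds such descriptors for you. Host names and identifiers below are placeholders.

```python
def build_dsn(host, port=1521, service_name=None, sid=None):
    """Build an Oracle connect string from either a service name or a SID (exactly one)."""
    if (service_name is None) == (sid is None):
        raise ValueError("provide exactly one of service_name or sid")
    if service_name is not None:
        # Easy Connect syntax addresses a service name directly.
        return f"{host}:{port}/{service_name}"
    # Easy Connect has no SID form, so fall back to a full connect descriptor.
    return (
        f"(DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST={host})(PORT={port}))"
        f"(CONNECT_DATA=(SID={sid})))"
    )

print(build_dsn("db.example.com", service_name="ORCLPDB1"))
print(build_dsn("db.example.com", sid="ORCL"))
```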

If you prefer working in the command line or need to automate the connection setup process, Airflow’s CLI provides a powerful alternative to the UI.
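As a sketch of the CLI route, the function below assembles an airflow connections add command from the same fields the UI form asks for. It uses the Airflow 2 CLI flags --conn-type, --conn-host, --conn-port, --conn-login, --conn-password, and --conn-extra. The SID or service name travels in --conn-extra as JSON, which is where the Oracle provider looks for it. The function name, the oracle_default ID, and the sample values are placeholders.

```python
import json
import shlex

def oracle_connection_command(conn_id, host, port, login, password,
                              service_name=None, sid=None):
    """Build the argv for `airflow connections add` describing an Oracle connection."""
    extra = {}
    if service_name:
        extra["service_name"] = service_name
    if sid:
        extra["sid"] = sid
    argv = [
        "airflow", "connections", "add", conn_id,
        "--conn-type", "oracle",
        "--conn-host", host,
        "--conn-port", str(port),
        "--conn-login", login,
        "--conn-password", password,
    ]
    if extra:
        # SID / service name are stored in the connection's Extra field as JSON.
        argv += ["--conn-extra", json.dumps(extra)]
    return argv

cmd = oracle_connection_command(
    "oracle_default", "db.example.com", 1521, "etl_user", "change-me",
    service_name="ORCLPDB1",
)
# Mask the password before printing the command for review.
masked = ["****" if prev == "--conn-password" else arg for prev, arg in zip([""] + cmd, cmd)]
print(shlex.join(masked))
# Where Airflow is installed, run it with: subprocess.run(cmd, check=True)
```

If a connection with the same ID already exists, the add command fails. Remove the old one first with airflow connections delete, or pick a new ID.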

Using the Oracle Connection in Your DAGs

Once the Oracle connection has been established, you may use it in your DAGs to interact with the Oracle database.

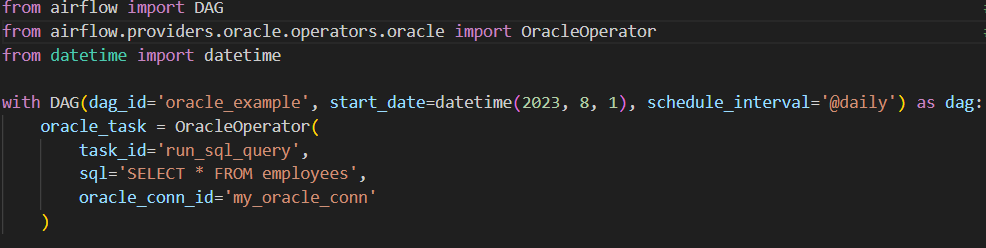

For example, a task can use the OracleOperator to execute a SQL query (SELECT employee_id, salary, department FROM employees) on the Oracle database through the oracle_default connection.

Once configured, a connection can be used by all of your DAGs. This streamlines database operations and improves the reliability and efficiency of your data processing.

Best Practices for Oracle Connections in Airflow

Integrating Airflow with Oracle is a powerful way to automate your data processes. To get the most out of it, follow these best practices.

Oracle Connection Optimization in Airflow

1. Connection Pooling

- Use Connection Pooling: A connection pool keeps a set of open connections that operations can reuse. This reduces the overhead of repeatedly opening and closing connections to Oracle and improves performance. Airflow tasks run in separate processes, so pooling mainly helps within a task. Across tasks, Airflow pools (the pool argument on operators) limit how many tasks use the database at once.

- Tune Pool Size: Size the connection pool, and the number of Airflow pool slots, according to the workload and the number of concurrent tasks. This lets the database handle peak loads without being overwhelmed.
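To illustrate what pooling buys you, here is a deliberately minimal, hypothetical pool built on queue.Queue. Connections are opened once, handed out, and returned to the pool instead of being closed. sqlite3 stands in for Oracle so the sketch runs anywhere. With python-oracledb, you would normally use its built-in oracledb.create_pool() rather than writing your own.

```python
import queue
import sqlite3
from contextlib import contextmanager

class SimpleConnectionPool:
    """Hypothetical minimal pool: opens `size` connections up front and reuses them."""

    def __init__(self, connect, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(connect())

    @contextmanager
    def connection(self, timeout=None):
        # Blocks until a connection is free; raises queue.Empty if `timeout` expires.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # hand it back instead of closing it

    def close_all(self):
        """Close every idle connection (checked-out ones are left alone)."""
        while True:
            try:
                self._idle.get_nowait().close()
            except queue.Empty:
                break

pool = SimpleConnectionPool(lambda: sqlite3.connect(":memory:"), size=2)
with pool.connection() as conn:
    print(conn.execute("SELECT 1").fetchone())  # (1,)
pool.close_all()
```

The size argument plays the role of the pool size discussed above. When every connection is checked out, callers wait, which caps the load placed on the database.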

2. Batch Processing

- Leverage Bulk Operations: Insert or update data in batches rather than row by row. This cuts the number of round trips to the database and increases efficiency. For batch inserts, use the Oracle hook or the DB-API executemany method.
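The sketch below shows the batching pattern with the DB-API executemany() call. Rows are sent in chunks and committed once per chunk, rather than with one INSERT and one commit per row. sqlite3 stands in for Oracle so the example runs without a database server. The named :bind placeholders it uses are also a style python-oracledb accepts. The provider's OracleHook offers bulk_insert_rows(), which is built on the same idea. The employees columns and sample rows are invented for illustration.

```python
import sqlite3
from itertools import islice

INSERT_SQL = (
    "INSERT INTO employees (employee_id, salary, department) "
    "VALUES (:employee_id, :salary, :department)"
)

def insert_in_batches(conn, rows, batch_size=1000):
    """Insert row dicts in chunks with executemany(), committing once per chunk.

    Returns the number of chunks sent.
    """
    rows = iter(rows)
    cur = conn.cursor()
    batches = 0
    while True:
        batch = list(islice(rows, batch_size))
        if not batch:
            break
        cur.executemany(INSERT_SQL, batch)  # one call per chunk instead of one execute() per row
        conn.commit()
        batches += 1
    return batches

# Demo against an in-memory SQLite table shaped like the hypothetical employees table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, salary REAL, department TEXT)")
sample = (
    {"employee_id": i, "salary": 50000.0 + i, "department": "SALES" if i % 2 else "HR"}
    for i in range(2500)
)
print(insert_in_batches(conn, sample, batch_size=1000))  # 3
print(conn.execute("SELECT COUNT(*) FROM employees").fetchone()[0])  # 2500
```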

3. SQL Queries Optimization and Minimal Data Retrieval

SQL queries also play a big role in performance. Apply standard tuning techniques:

- Index the columns used in filters and joins.
- Select only the columns you need.
- Use query result caching where it helps.
- Avoid unnecessary full table scans.
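Minimal data retrieval in practice means three things:

- Name only the columns you need.
- Filter in SQL with a bind variable rather than string formatting.
- Stream results in chunks with fetchmany() instead of loading everything at once with fetchall().

The sketch below uses sqlite3 as a stand-in for Oracle and a hypothetical employees table. In Oracle, bind variables have the added benefit of letting the database reuse the parsed statement across calls.

```python
import sqlite3

def salaries_for_department(conn, department, chunk_size=500):
    """Yield (employee_id, salary) rows for one department, fetching chunk_size rows at a time."""
    cur = conn.cursor()
    # Name only the needed columns and filter in the database through a bind variable.
    cur.execute(
        "SELECT employee_id, salary FROM employees "
        "WHERE department = :dept ORDER BY employee_id",
        {"dept": department},
    )
    while True:
        chunk = cur.fetchmany(chunk_size)
        if not chunk:
            break
        yield from chunk

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (employee_id INTEGER, salary REAL, department TEXT, notes TEXT)"
)
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?, ?)",
    [(1, 52000.0, "HR", "n/a"), (2, 61000.0, "SALES", "n/a"), (3, 58000.0, "HR", "n/a")],
)
print(list(salaries_for_department(conn, "HR", chunk_size=1)))  # [(1, 52000.0), (3, 58000.0)]
```

The unused notes column is never transferred, and memory use stays bounded by chunk_size no matter how many rows match.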

4. Timeout and Retries Mechanism

- Set Timeouts: Set timeouts, such as a task's execution_timeout, so that tasks cannot hang indefinitely.

- Configure Retries: Add retries to Airflow tasks so that temporary issues, such as network glitches or brief database unavailability, are handled automatically.
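In Airflow, both settings are ordinary task arguments (retries, retry_delay, and execution_timeout), usually shared through a DAG's default_args. The sketch below keeps them in a plain dict so it runs without Airflow. It also adds a hypothetical helper for a quick sanity check: how long a task could take in the worst case before Airflow finally marks it failed. The values are examples, not recommendations. The estimate assumes a fixed retry_delay (no retry_exponential_backoff) and ignores time spent queued.

```python
from datetime import timedelta

# Example values; in a DAG file this dict would be passed as DAG(..., default_args=default_args).
default_args = {
    "retries": 3,                                # retry transient failures such as network glitches
    "retry_delay": timedelta(minutes=5),         # wait between attempts
    "execution_timeout": timedelta(minutes=30),  # fail any attempt that runs longer than this
}

def worst_case_duration(args):
    """Upper bound on run time: every attempt times out, plus the waits between attempts."""
    attempts = args["retries"] + 1
    return attempts * args["execution_timeout"] + args["retries"] * args["retry_delay"]

print(worst_case_duration(default_args))  # 2:15:00
```

If that bound is longer than the window your downstream consumers can tolerate, lower the timeout or the retry count rather than letting failures surface hours late.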

Common Issues Faced

1. Connection Failures

When Airflow tries to connect to the Oracle database, the connection may fail because of incorrect credentials or parameters, or because of network issues. Double-check the connection details. Then confirm them by connecting from outside the Airflow environment with a basic database client tool.
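Before suspecting the credentials, confirm that the Airflow worker can reach the database host at all. The standard-library sketch below opens a plain TCP connection to the listener port and reports whether it succeeded. Port 1521 is Oracle's default listener port; yours may differ.

- A failure here points to networking, DNS, or firewall issues.
- A success followed by a login error points back to the credentials or connection parameters.

The host name in the usage comment is a placeholder.

```python
import socket

def can_reach(host, port=1521, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and DNS failures
        return False

# Run this on the machine where the Airflow worker executes, for example:
# print(can_reach("db.example.com", 1521))
```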

2. Long-Running Queries

If SQL queries take too long, Airflow tasks may hang or take longer than expected to complete. As discussed above, optimize and tune your queries to reduce execution time. Oracle's tools, such as EXPLAIN PLAN, let you analyze query execution plans.
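In Oracle, you inspect a plan by running EXPLAIN PLAN FOR followed by the query, then SELECT * FROM TABLE(DBMS_XPLAN.DISPLAY). The core idea is to check whether a query scans the whole table or uses an index. The self-contained sketch below shows this with SQLite's EXPLAIN QUERY PLAN, used purely as a stand-in. Its plan text differs from Oracle's, and the table and index names are invented.

```python
import sqlite3

def plan(conn, sql, params=()):
    """Return SQLite's query-plan detail strings for `sql` (a stand-in for Oracle's DBMS_XPLAN)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the human-readable detail

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (employee_id INTEGER, salary REAL, department TEXT)")
query = "SELECT employee_id, salary FROM employees WHERE department = :dept"

print(plan(conn, query, {"dept": "HR"}))  # full-table SCAN: no index on department yet
conn.execute("CREATE INDEX idx_employees_department ON employees (department)")
print(plan(conn, query, {"dept": "HR"}))  # SEARCH using idx_employees_department
```

The workflow is the same on Oracle. Read the plan, look for a full table access on a large table, and check whether an index on the filter column changes it.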

Logging and Monitoring

- You can enable more detailed logging in the airflow.cfg file, for example with the logging_level option in the [logging] section, to capture task execution and SQL query details.

- Leverage Oracle Enterprise Manager to track query performance and CPU and memory utilization.

- Set up alerts via email or integrations such as Slack to receive real-time updates on your tasks. This lets you respond quickly and minimize issues in your data pipelines.
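One common way to wire up alerts is a task's on_failure_callback, which Airflow calls with the task's context dictionary when the task fails. The sketch below only formats the message, using attributes that Airflow's TaskInstance provides (dag_id, task_id, try_number, log_url). The function names are hypothetical, and delivery (email, a Slack webhook, or a provider's notifier) is left open. A stand-in context built with SimpleNamespace lets the sketch run without Airflow.

```python
from types import SimpleNamespace

def format_failure_alert(context):
    """Build an alert message from the context Airflow passes to on_failure_callback."""
    ti = context["task_instance"]
    error = context.get("exception")
    return (
        f"Task {ti.dag_id}.{ti.task_id} failed on try {ti.try_number}"
        + (f": {error}" if error else "")
        + f"\nLogs: {ti.log_url}"
    )

def notify_failure(context):
    # Hypothetical delivery step: replace print() with your email or Slack integration.
    print(format_failure_alert(context))

# In a DAG: default_args = {"on_failure_callback": notify_failure, ...}
fake_context = {
    "task_instance": SimpleNamespace(
        dag_id="oracle_etl", task_id="load_to_oracle", try_number=2,
        log_url="http://localhost:8080/logs/placeholder",
    ),
    "exception": TimeoutError("query exceeded execution_timeout"),
}
notify_failure(fake_context)
```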

Why Choose Hevo Over Airflow for Oracle Data Integration

Although Apache Airflow is a powerful tool for handling intricate workflows and ETL procedures, it might not be the best choice in every situation. Hevo, a no-code data pipeline technology, offers an alternative that streamlines the Oracle data integration process.

1. Easy to Use and Transform

As a no-code platform, Hevo is simple to set up, makes it easy to apply data transformations, and is accessible to users with both technical and non-technical backgrounds. You can quickly set up and manage data pipelines without coding skills or extensive configuration.

2. Pre-Built Connectors

A major advantage of Hevo is its library of over 150 pre-built connectors, including connectors optimized for Oracle. By automating many aspects of data integration, Hevo makes it simpler to integrate Oracle databases with other databases, cloud services, and SaaS apps.

3. Real-Time Data Integration

Hevo streams data in real time, integrating data from Oracle into multiple destinations with minimal latency. This makes it well suited to real-time analytics and monitoring.

You can also read about:

- Oracle to MongoDB replication

- Connect Tableau to Oracle

- Load data from Oracle to Iceberg

- Migrate MySQL to Oracle

Conclusion

Apache Airflow is a great tool for automating and streamlining complex database operations, especially when integrated with Oracle. Hevo, by contrast, is designed specifically for data integration and offers a more streamlined solution for those tasks.

Hevo is a great option for companies that need to set up and maintain data pipelines with minimal technical overhead. It offers a large library of pre-built connectors, a no-code platform, real-time data streaming, and integrated transformation tools. Sign up for Hevo’s 14-day free trial and experience seamless data migration.

Resources

Frequently Asked Questions

1. Does Airflow support an Oracle database?

Yes, Airflow supports Oracle databases. The Oracle provider package enables this support and comes with hooks and operators made especially for Oracle.

2. How to connect Airflow with Oracle?

Connecting Airflow with Oracle is simple. Install the Oracle provider package by running pip install apache-airflow-providers-oracle. Then create an Oracle connection through the Airflow UI or CLI and reference its connection ID in your DAGs.

3. What is Airflow and why is it used?

Apache Airflow is a tool that allows you to design and orchestrate workflows. It allows users to write and schedule workflows as Directed Acyclic Graphs (DAGs) using Python code. You can take care of your repetitive data tasks with a robust scheduling engine, extensible architecture, and powerful integrations.

4. Is Airflow an ETL tool?

Apache Airflow is frequently used for orchestrating ETL workflows, even though it is not an ETL tool itself. Each stage of ETL procedures is scheduled and managed with Airflow. Data transformations are usually accomplished by the code or scripts that Airflow orchestrates rather than by Airflow itself.