Key Takeaways

Key TakeawaysYou can migrate data from Oracle to PostgreSQL with either a manual process or an automated tool:

Method 1: Using ora2pg

Step 1: Install Strawberry Perl, ora2pg, and set up the required drivers.

Step 2: Configure environment variables and ora2pg settings for Oracle and PostgreSQL connections.

Step 3: Scan, assess, and convert Oracle schema to PostgreSQL format.

Step 4: Export Oracle objects, compile files, and load them into PostgreSQL.

Step 5: Run tests to validate the migration.

Method 2: Using JDBC

Step 1: Install the PostgreSQL ODBC driver and create a configuration file for your target database.

Step 2: Set up Oracle’s heterogeneous services and update Oracle’s network configuration files.

Step 3: Restart the Oracle listener and create a database link to PostgreSQL.

Step 4: Query and move data from Oracle to PostgreSQL using the established connection.

Many organizations are drawn to PostgreSQL’s robust features, open-source nature, and cost-effectiveness, and hence they look to migrate their data from their existing database to PostgreSQL.

In this guide, we’ll discuss the Oracle to PostgreSQL migration process. Having done this migration several times, I understand the challenges and complexities of such a transition.

Whether you are doing this for the first time or looking to refine your process, I will walk you through two options and easy-to-follow steps to migrate your data from Oracle to PostgreSQL. Let’s begin.

Table of Contents

What is Oracle?

Oracle Database, or Oracle DB, is a relational database management system(RDBMS) primarily used for data storage, organization, and retrieval, data warehousing, and online transaction processing(OLTP). It is a commercial database(licenced by Oracle Corporation) used by the world’s largest enterprises. The Oracle Database can be run on-premise, on the Cloud, or even as a hybrid cloud installation.

What is PostgreSQL?

PostgreSQL is a free, open-source object-relational database system(ORDBMS) that supports both relational(SQL) and non-relational(JSON) querying. It is an enterprise-class database that was started as part of the POSTGRES project at the University of California, Berkeley. It is a highly extensible system where users can build many custom functions, write their own codes, and build data types.

How to Set Up Oracle to PostgreSQL Migration using ora2pg?

Ora2Pg is a free tool for migrating Oracle or MySQL databases to PostgreSQL. It automatically scans and extracts the structure or data from your Oracle database, generating SQL scripts for loading into PostgreSQL. It’s versatile, suitable for reverse engineering, large-scale migrations, or data replication. Its user-friendly interface requires minimal Oracle database knowledge, only necessitating connection parameters.

Let’s examine the steps to migrate your data from Oracle to PostgreSQL.

Before the Migration begins, you need to have the following things installed in your system :

- Oracle Database

- Strawberry Perl

- PostgreSQL

- Ora2pg

Step 1: Installation

1. a) Installing Strawberry Perl

Strawberry Perl is an MS Windows environment that contains everything you need to run and develop Perl applications. It is designed to be as close as possible to the Perl environment on UNIX systems.

You can install it from the link provided below:

- Link to install Strawberry Perl. I have downloaded the Latest Release 5.38.2.2 MSI(171.7 MB)

- After it is downloaded, Accept the license agreement and click on Next.

- Select the Destination Folder Path.

The progress bar shows the progress of your installation.

Note: To check if your Strawberry Pearl is correctly installed, open your text editor and create a new text file. While saving it, give the extension as .pl (My file name is xyz.pl). Then open your command prompt and type in:

perl filename.plIt shows the content of the text file which you just created. Now you know that Strawberry Perl is running successfully.

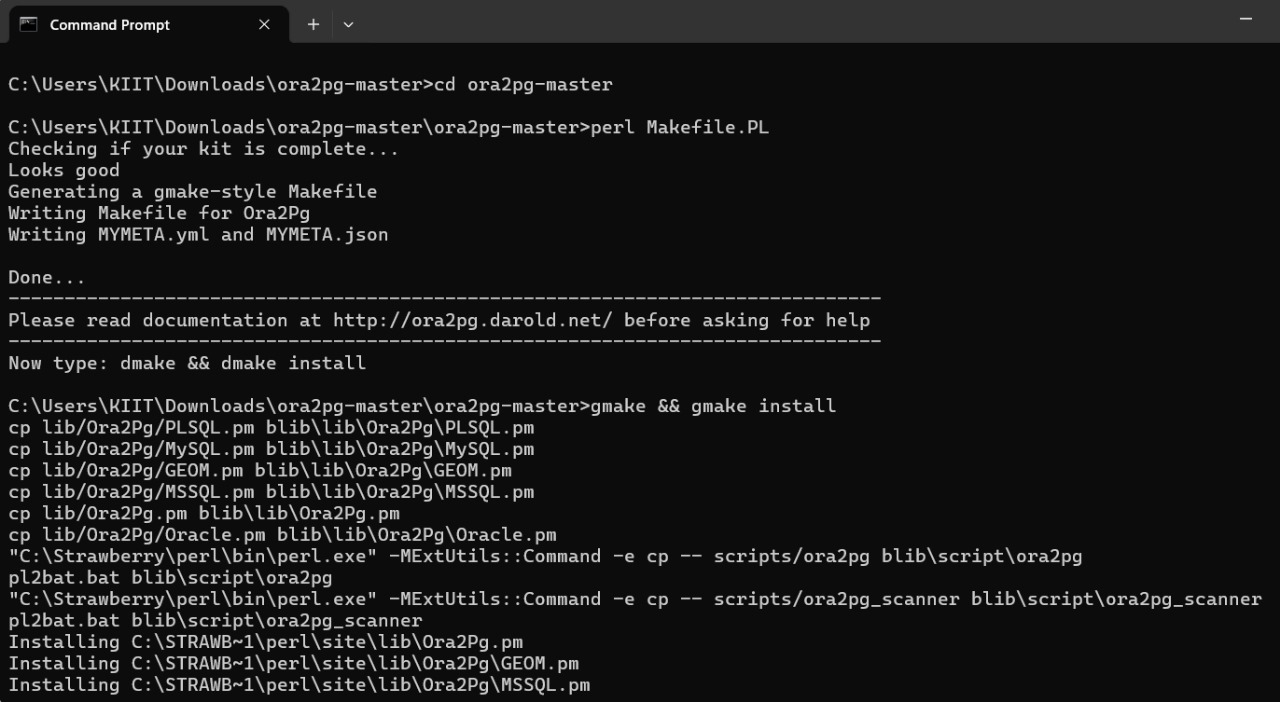

1. b) How to Install Ora2pg

- You can install Ora2pg from the link to the Github Repository.

Ora2Pg is a free tool for migrating an Oracle database to a PostgreSQL-compatible schema. It connects to your Oracle database, scans it automatically, extracts its structure or data, and generates SQL scripts that you can load into your PostgreSQL database.

- Open the command prompt and type the command cd ora2pg-master

Congratulations. We are done with the key installations. Now, we will move on to how to configure them to migrate our data from Oracle to PostgreSQL using Ora2pg successfully.

Step 2: Configurations

Ora2Pg needs the Perl module DBD::Oracle to connect to an Oracle database from Perl DBI. To get DBD::Oracle, go to CPAN, a Perl module repository.

- Install Oracle Instant Client Basic and SQL PLUS Package. Then, you need to set up the Environment Variables as follows.

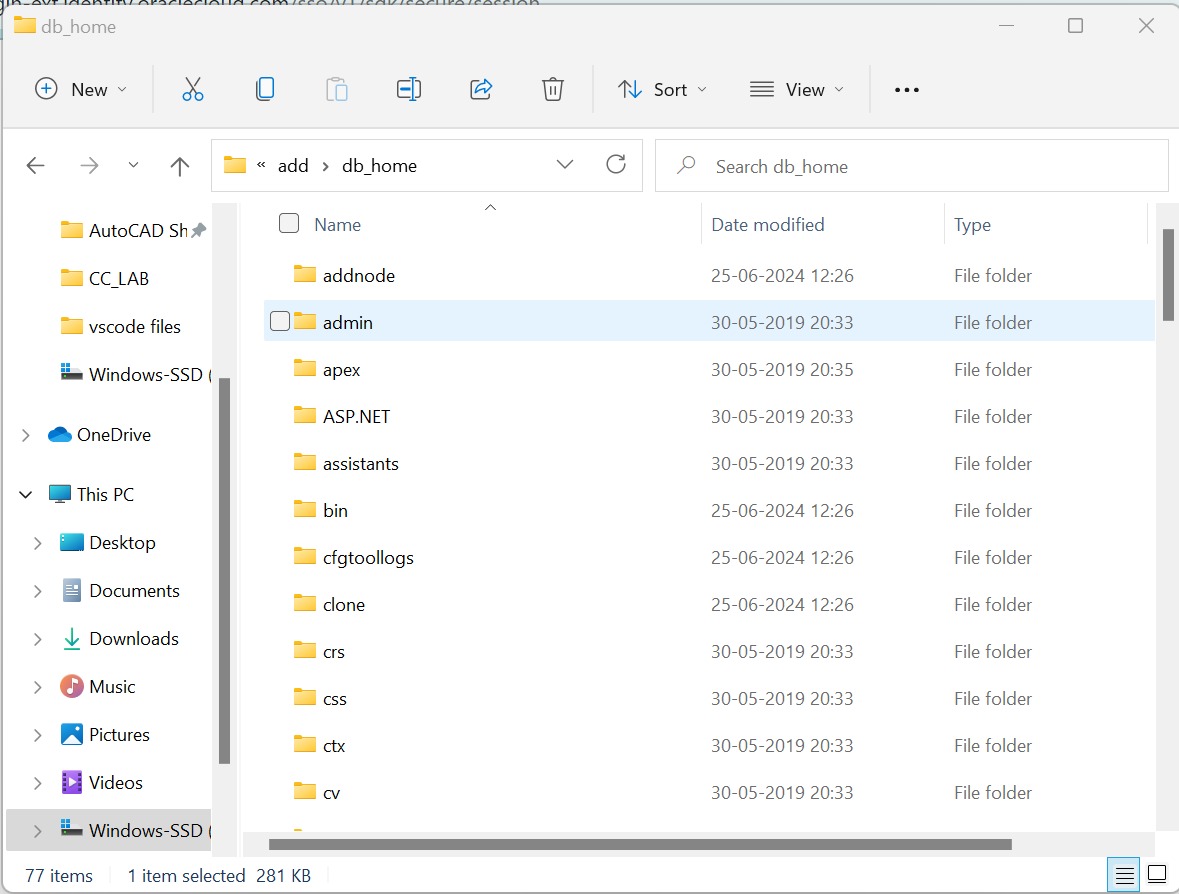

- Go to the admin folder of Instant Client.

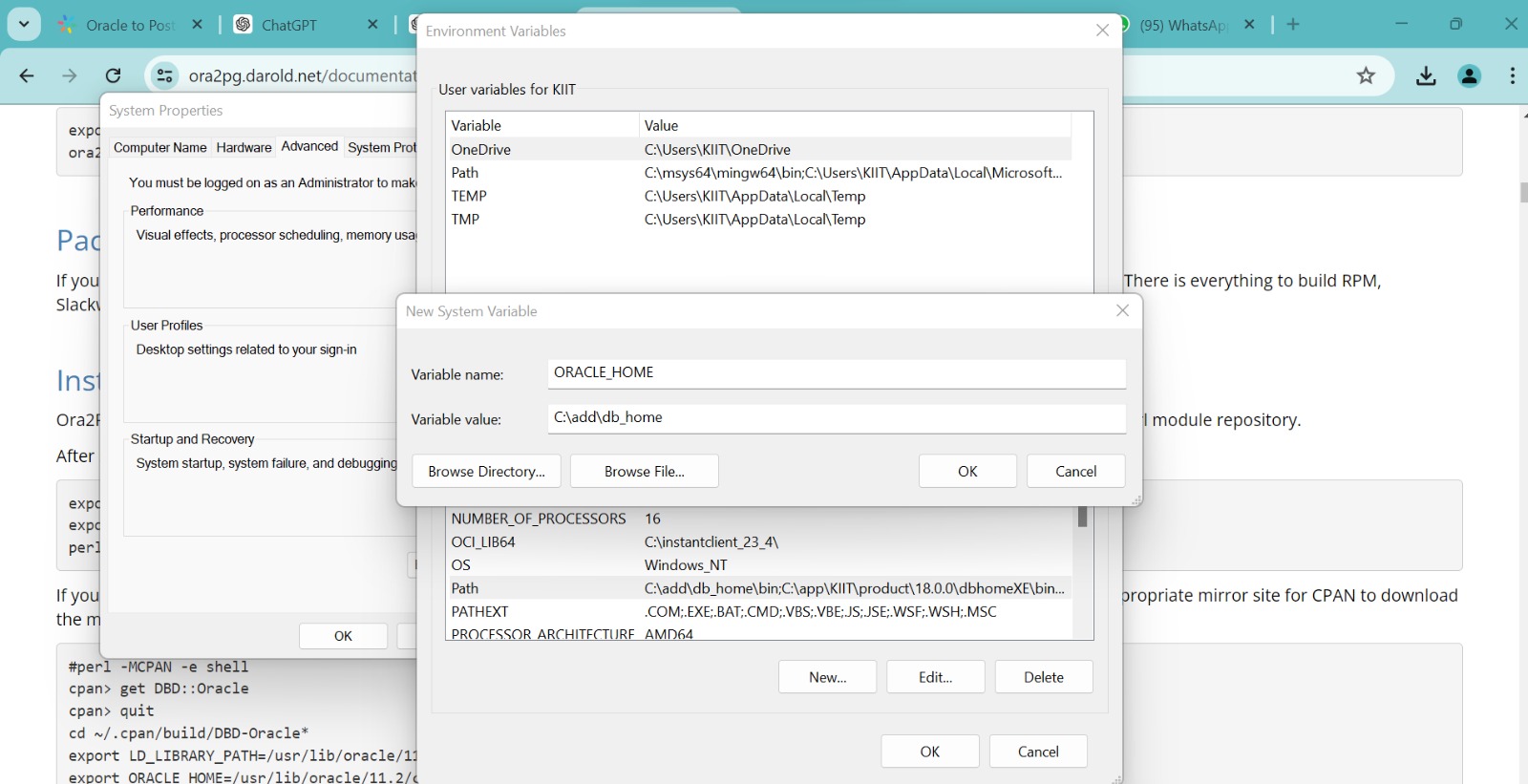

- Go to Advanced System → Environment Variables → New → New System Variable.

- Give the Variable Name ‘ORACLE_HOME’ and the Variable Value the admin folder path, which in my case is ‘C:\add\db_home.’

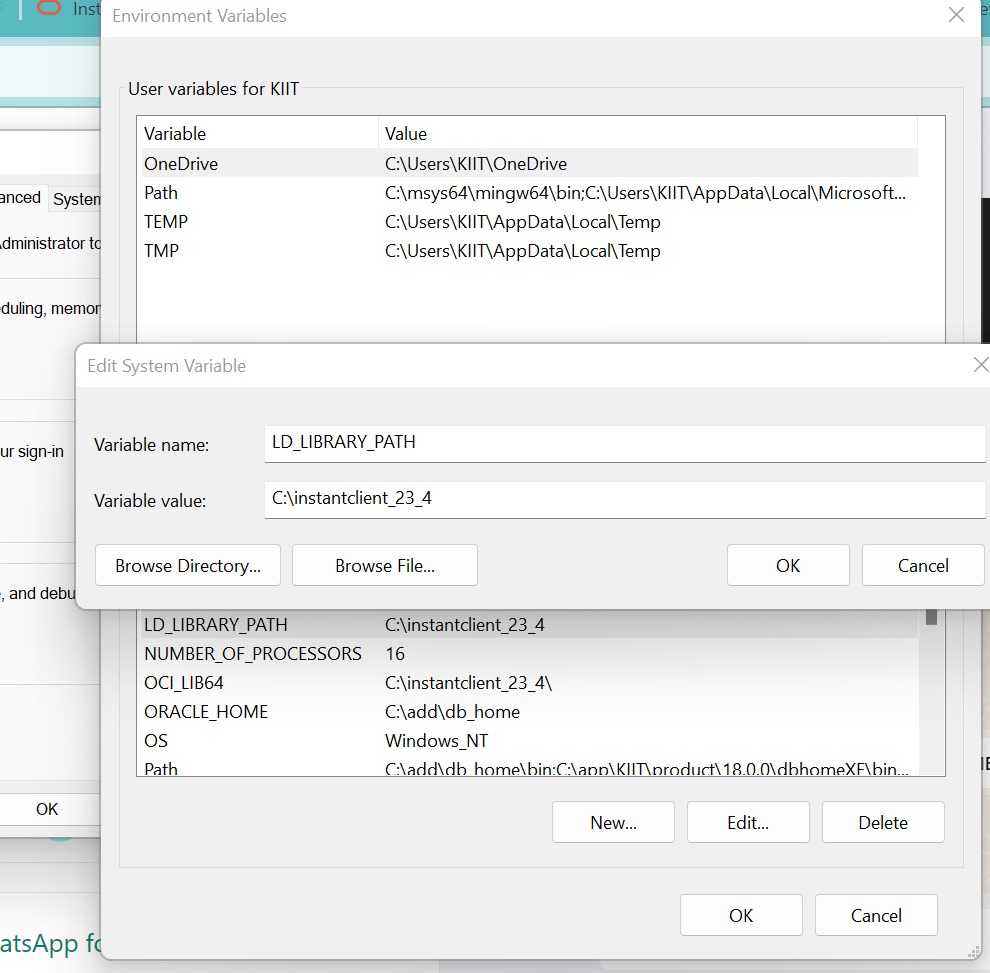

- Similarly, add another System Variable, LD_LIBRARY_PATH, with the name and value shown below.

- Open Command Prompt and type the command

echo %ORACLE_HOME%.The file directory will change to Variable Admin path, which in my case is C:\add\db_home.

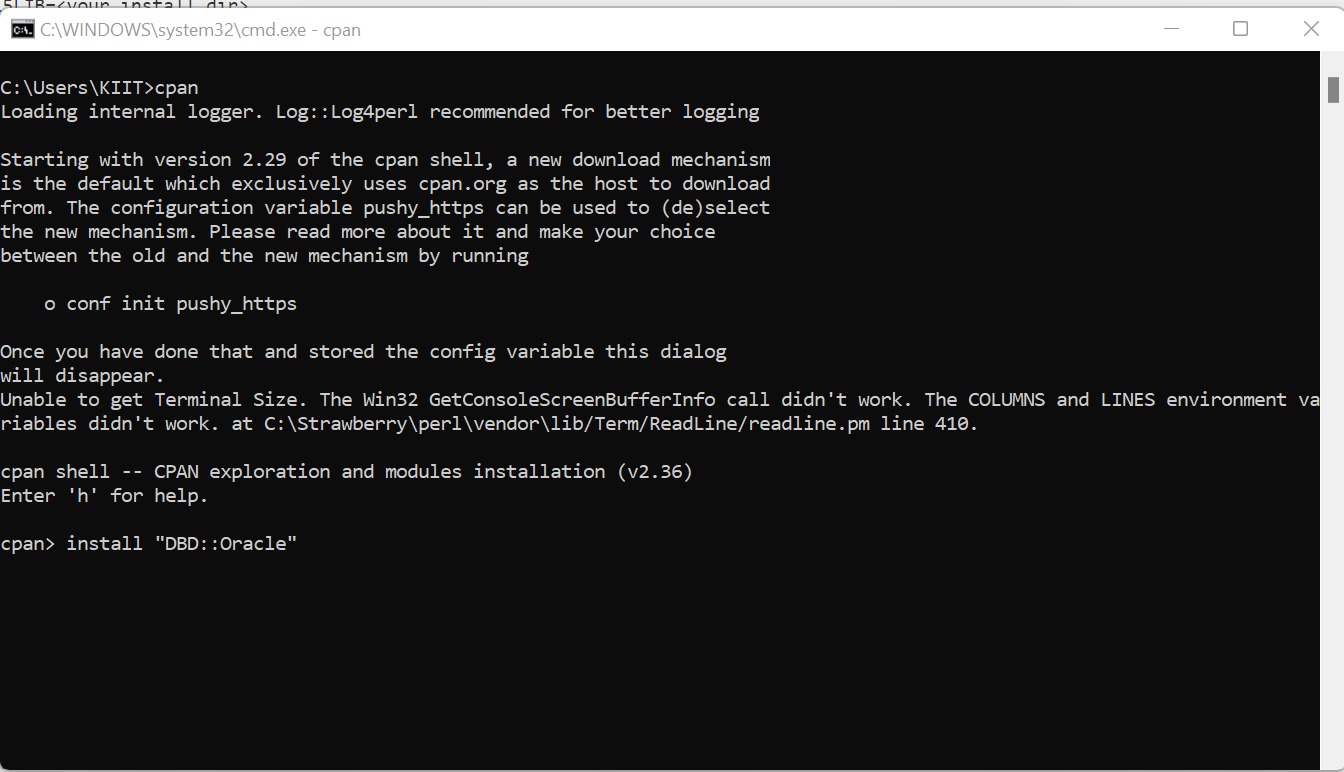

- Now, you can use the CPAN command to install DBD::Oracle. and give the command install “DBD::Oracle.”

- Then give the command install “DBD::Oracle.”

- Go to any Text Editor, such as Visual Studio Code, and create an ora2pg.conf text file in the same directory where ora2pg is downloaded.

Note: We will make changes to this file to connect to Oracle and PostgreSQL.

- We need to configure the DSN, and then we can connect to Oracle and PostgreSQL, respectively.

I used Oracle Database Express Edition (XE) version 19 for the migration. The Oracle home directory was set to C:\add\db_home, and the LD_LIBRARY_PATH environment variable was set to C:\add\db_home\bin.

Step 3: Pre-migration

3.1. Discover:

— Version of the Oracle database

SELECT banner

FROM v$version;— Instance information

SELECT instance_name, host_name, version, status

FROM v$instance;— Data file information

SELECT file_name, tablespace_name

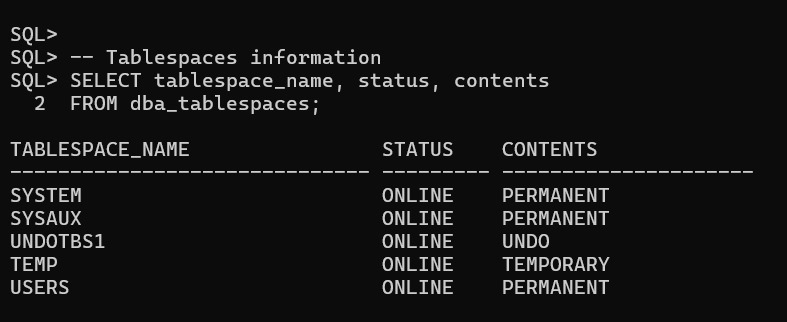

FROM dba_data_files;— Tablespaces information

SELECT tablespace_name, status, contents

FROM dba_tablespaces;

This involves scanning the present network to identify all Oracle instances’ versions and features.

3.2. Assess:

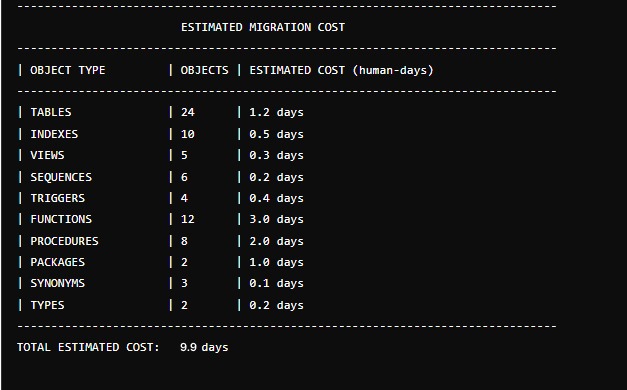

Use the command: to get a detailed text report and estimate the migration cost in human days using the estimate_cost option

<strong>ora2pg -t SHOW_REPORT --estimate_cost</strong>

3.3. Convert :

In this step, we will convert the Oracle code and DDL scripts to PostgreSQL. The ora2pg tool helps export Oracle objects in a PostgreSQL-compatible format. However, some objects require manual changes.

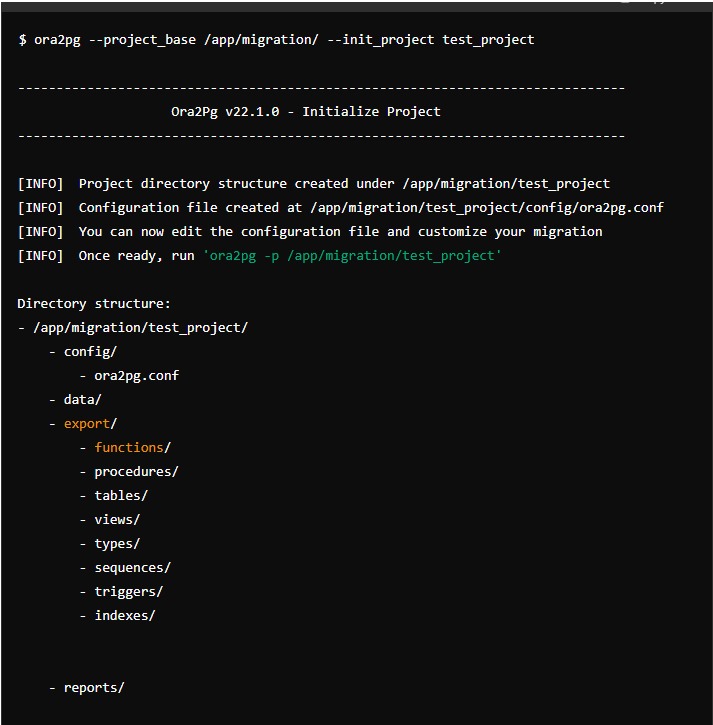

I will create a migration template using the ora2pg command:

ora2pg --project_base /app/migration/ --init_project test_projectThe following message will be displayed:

Step 4: Migration

4.1. Export Oracle Objects :

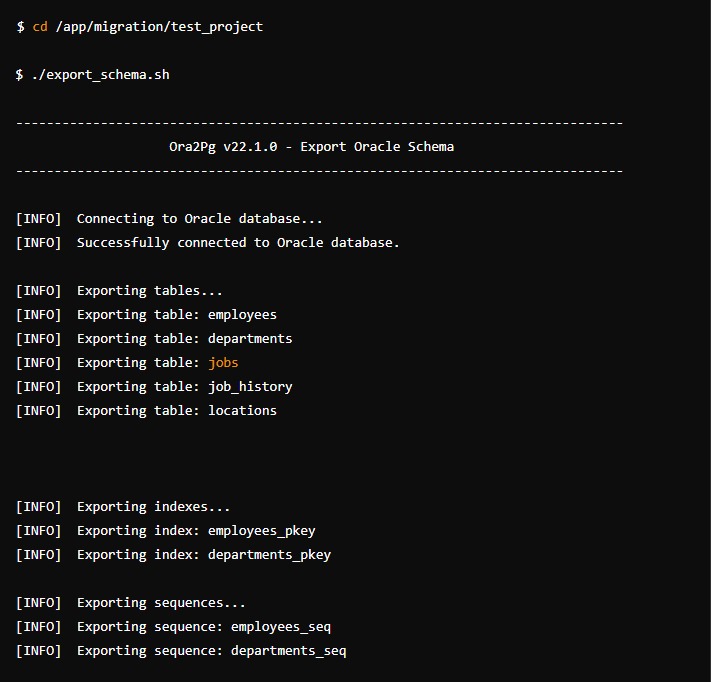

We will now export Oracle objects as PostgreSQL objects using the script:

cd /app/migration/test_project

./export_schema.sh

4.2. Compile Files :

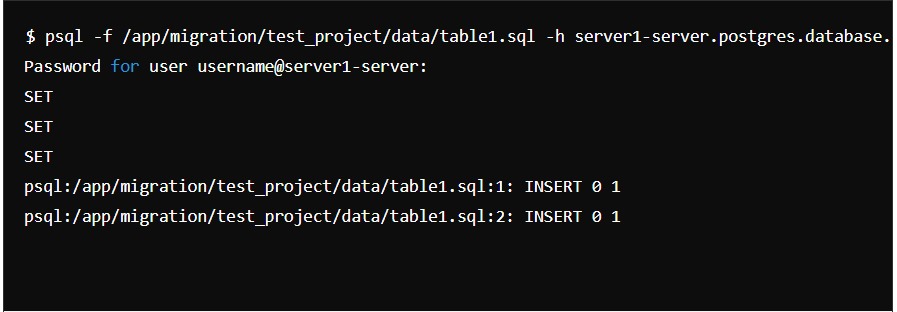

We will run SQL commands to load the DDL files and import the data into PostgreSQL.The command is :

psql -f /app/migration/test_project/schema/sequences/sequence.sql -h server1-server.postgres.database.azure.com -p 5432 -U username@server1-server -d database -L /app/migration/test_project/schema/sequences/create_sequences.log

psql -f /app/migration/test_project/data/table1.sql -h server1-server.postgres.database.azure.com -p 5432 -U username@server1-server -d database -l /app/migration/test_project/data/table1.log

4.3. Sync Data Selectively:

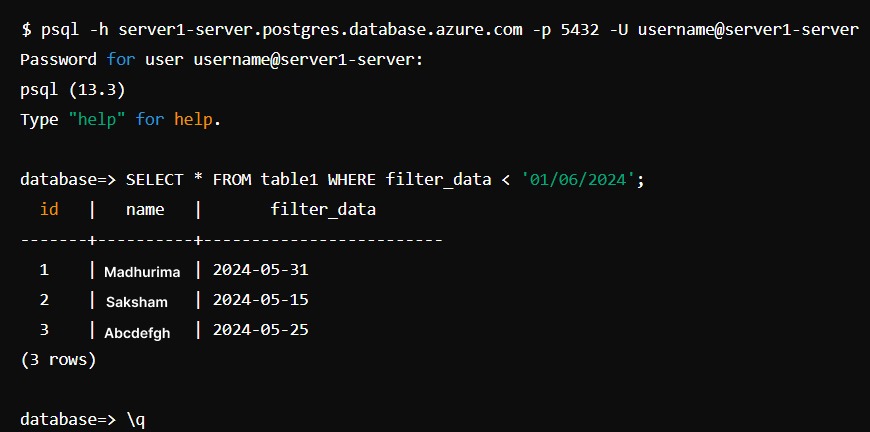

We will use specific queries to migrate data based on parameters such as date.

select * from table1 where filter_data < '01/06/2024'

Step 5: Post Migration

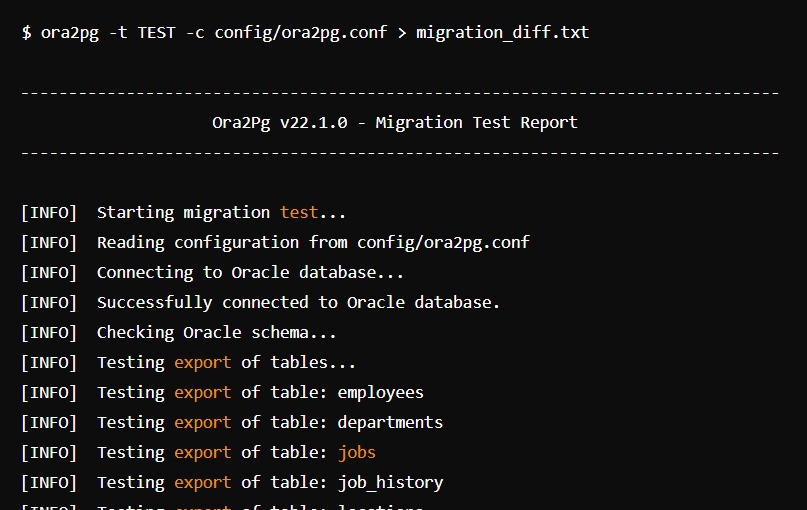

We will run tests to verify that applications work correctly with the new setup. The command for doing the same is:

ora2pg -t TEST -c config/ora2pg.conf > migration_diff.txt

How to set up Oracle to PostgreSQL Migration using JDBC?

Now, I will show you how to migrate your data from Oracle to PostgreSQL using JDBC manually. I followed the following steps to set up Oracle to PostgreSQL Migration manually:

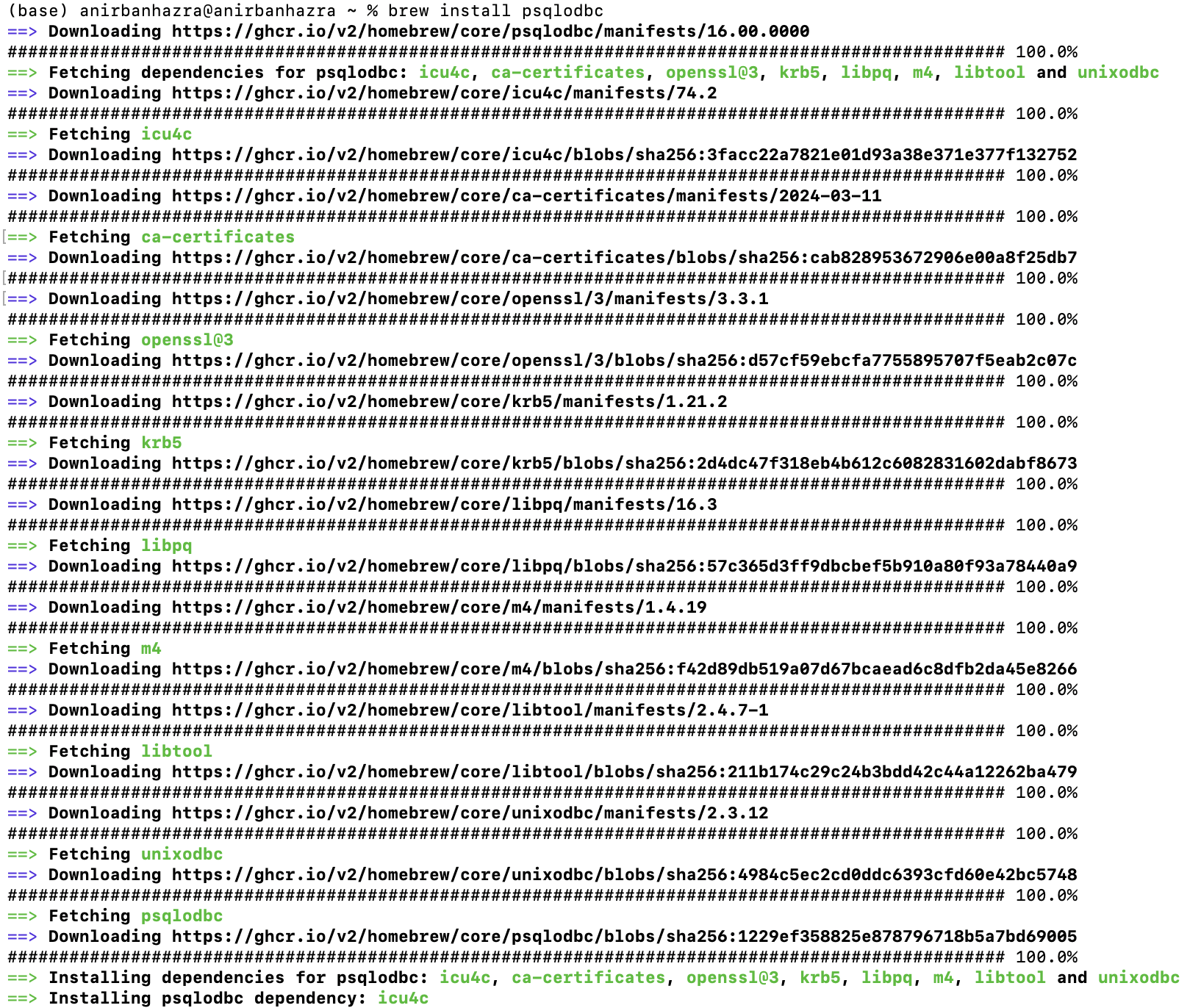

Step 1: Install PostgreSQL ODBC Driver

The first step was to install the PostgreSQL ODBC driver in the system in which Oracle is installed. As I’m using Linux, I did this by executing the following command:

yum install postgresql93-odbc

The command will differ according to the version of your Linux.

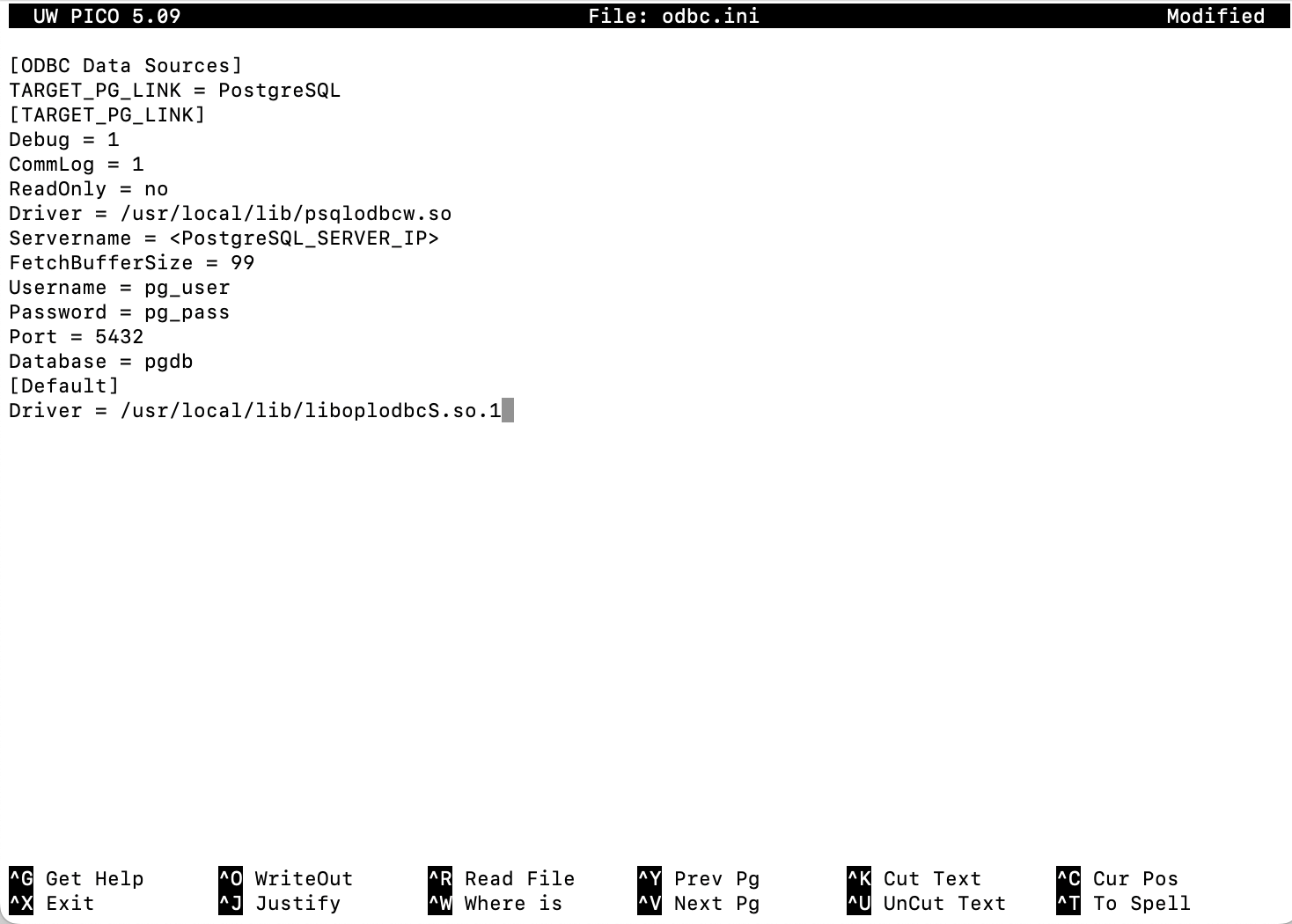

I created a file named odbc.ini and placed it in my user folder. This file contained the details of the target PostgreSQL database.

[ODBC Data Sources]

TARGET_PG_LINK = PostgreSQL

[TARGET_PG_LINK]

Debug = 1

CommLog = 1

ReadOnly = no

Driver = /usr/pgsql-9.3/lib/psqlodbc.so

Servername = <PostgreSQL_SERVER_IP>

FetchBufferSize = 99

Username = pg_user

Password = pg_pass

Port = 5432

Database = pgdb

[Default]

Driver = /usr/lib64/liboplodbcS.so.1

Step 2: Configure Heterogeneous Oracle Services

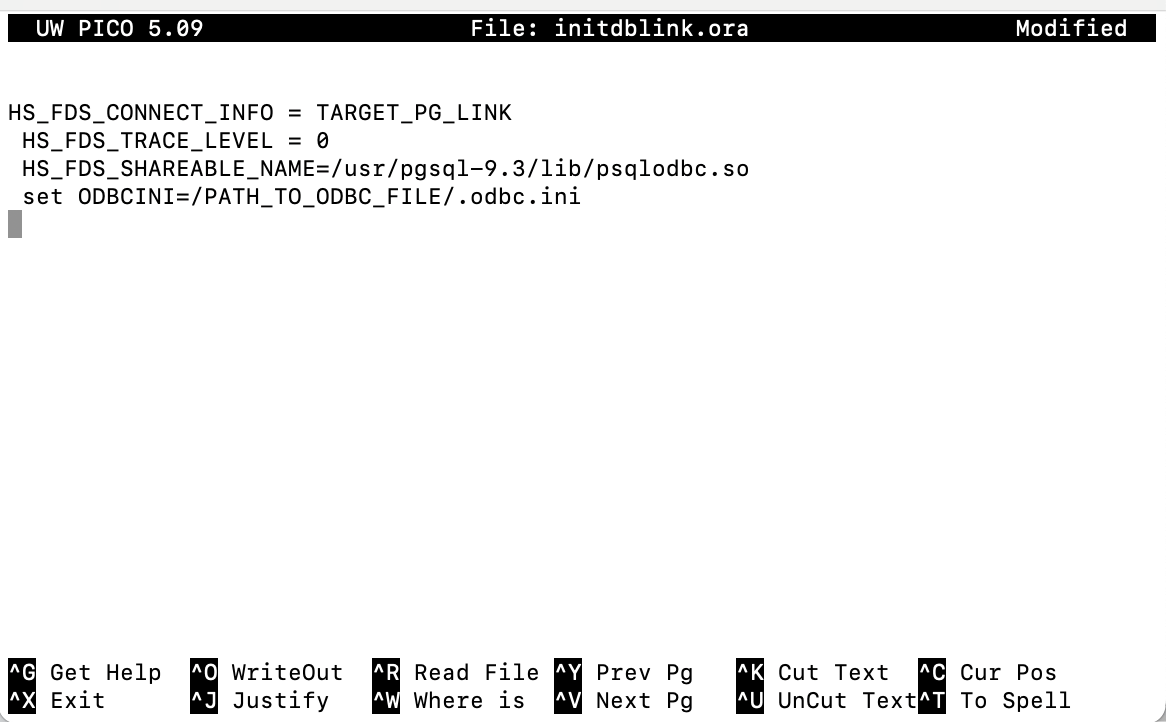

The next step was to configure the heterogeneous services in Oracle. For this, I created three files with the extension .ora. I began by creating the first while, this initialized the heterogeneous services. Navigated to /hs/admin in my Oracle installation directory and created a file named initdblink.ora with the following content:

HS_FDS_CONNECT_INFO = TARGET_PG_LINK

HS_FDS_TRACE_LEVEL = 0

HS_FDS_SHAREABLE_NAME=/usr/pgsql-9.3/lib/psqlodbc.so

set ODBCINI=/PATH_TO_ODBC_FILE/.odbc.ini

The important section is where we need to mention the ODBC information for the destination PostgreSQL installation. I ensured that I specified the same name in HS_FDS_CONNECT_INFO.

Step 3: Modify the File Located in the Directory

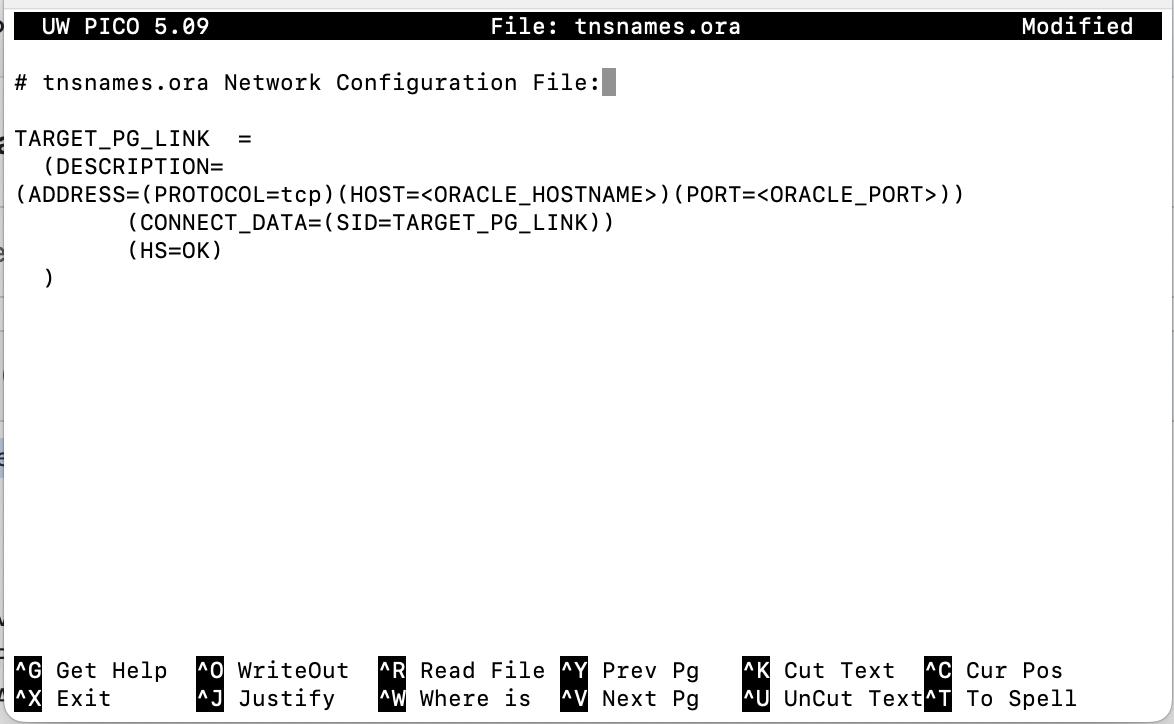

The next step was to modify a file located in the Oracle installation’s/network/admin directory. I found the file tnsnames.ora and added details about the destination PostgreSQL instance.

TARGET_PG_LINK =

(DESCRIPTION=

(ADDRESS=(PROTOCOL=tcp)(HOST=<ORACLE_HOSTNAME>)(PORT=<ORACLE_PORT>))

(CONNECT_DATA=(SID=TARGET_PG_LINK))

(HS=OK)

)

Step 4: Find the File for feeding more information

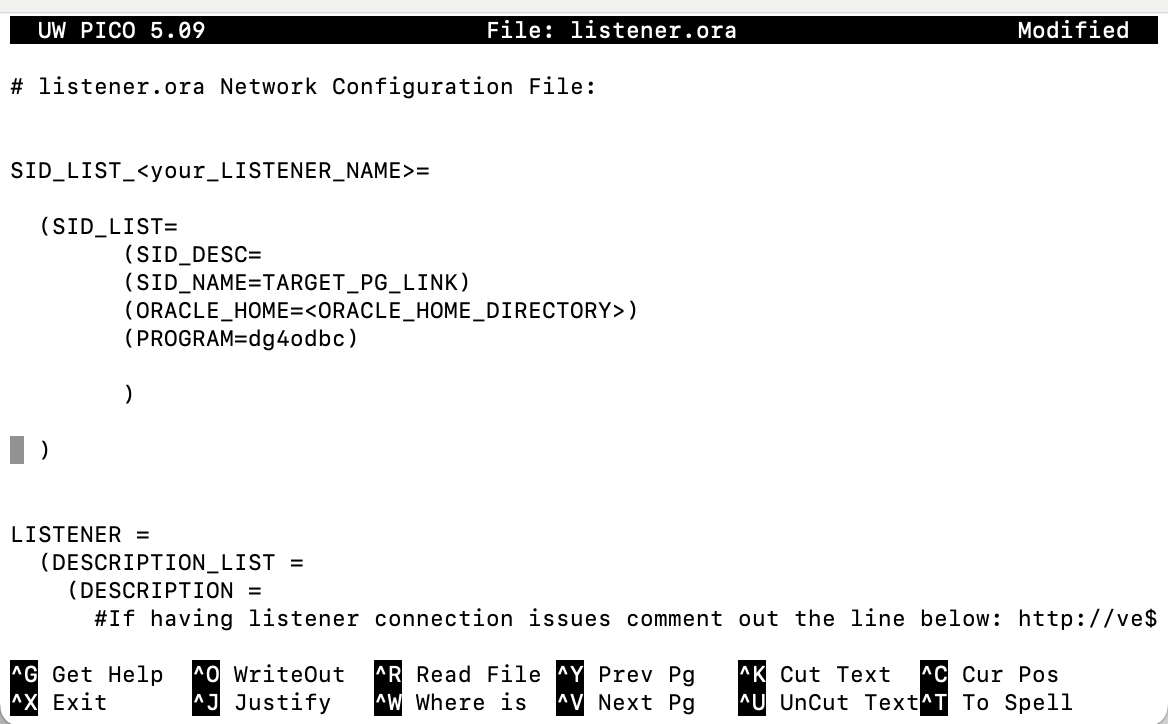

I located the listener.ora file in the same directory and added the following details:

SID_LIST_<your_LISTENER_NAME>=

(SID_LIST=

(SID_DESC=

(SID_NAME=TARGET_PG_LINK)

(ORACLE_HOME=<ORACLE_HOME_DIRECTORY>)

(PROGRAM=dg4odbc)

)

)

Oracle listeners are responsible for listening for connections to other databases and handling them appropriately. The name of the configured listener was found out from the listener.ora file. After changing the file, I executed the below command to restart the listener.

lsnrctl reload <your_LISTENER_NAME>Step 5: Establish a Connection for Oracle to PostgreSQL Migration

The final step was to start PostgreSQL and type the following statement to establish the connection:

Create database link TARGET_PG_LINK connect to "pg_user" identified by "pg_pass" using ‘TARGET_PG_LINK’;When everything went well, I executed the following query in PostgreSQL.

select * from "target_table_name"@TARGET_PG_LINK;If the manual method seems too overwhelming and you are looking for an automated method for transferring Oracle data to PostgreSQL, try Hevo Data.

You can also read about:

- Connect Databricks to Oracle

- Integrate Oracle with Elasticsearch

- Set up Kafka–Oracle integration (3 methods)

- Best Oracle replication tools & pricing

Wrapping Up

Migrating your data from Oracle to PostgreSQL can be a complex process, but with the right steps and tools, it can be achievable. In this blog, I covered a step by step approach for Oracle to PostgreSQL migration using JDBC.

If you’re looking for a more hands-on approach, the manual JDBC method offers more control. But if you’d prefer a faster and more automated solution, Hevo is a great option to explore. I hope this guide helps you choose the best migration method for your needs.

Want to take Hevo for a spin? Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also, check out our unbeatable pricing to choose the best plan for your organization.

Share your experience of Oracle to PostgreSQL migration in the comments section below!

Frequently Asked Questions

1. What are the challenges of Oracle to Postgres migration?

Migrating from Oracle to Postgres may involve challenges such as schema conversion, continuous replication, low-latency data replication, and migration validation.

2. What is the free tool to migrate Oracle to Postgres?

Ora2pg is a free tool for migrating Oracle to PostgreSQL. It connects and scans your Oracle database automatically, extracting the structure of your data to prepare SQL scripts and migrate it to PostgreSQL.

3. Is PostgreSQL compatible with Oracle?

Yes, PostgreSQL is compatible with all the RDBMSs including Oracle.