Key Takeaways

Follow any of the methods below to import a CSV file into PostgreSQL:

Hevo Data: Connect your CSV source (e.g., Google Sheets) and set PostgreSQL as the destination. Hevo handles schema mapping and real-time data transfer without any code.

COPY Command: As a superuser, run COPY table FROM '/path/to/file.csv' WITH CSV HEADER in SQL to load data directly from the server’s filesystem.

pgAdmin: Use the GUI to create or select a table, choose Import/Export, pick your CSV file, set the delimiter and headers, and run the import through the wizard.

\copy Command: From your local machine in psql, run \copy table FROM 'file.csv' WITH CSV HEADER to load data into PostgreSQL without needing superuser access.

Importing CSV files is one of the most common ways data practitioners and database administrators add data to PostgreSQL tables. PostgreSQL is an open-source relational database that is widely used as a platform for building applications.

This article covers four methods for importing a CSV file into PostgreSQL, a common first step in making data easier to query and analyze.

The first method uses Hevo Data’s automated data pipeline tool to replicate your data into your PostgreSQL destination. The other three use the COPY command, pgAdmin, and the \copy command.

How to Import CSV to PostgreSQL?

Before importing a CSV file into PostgreSQL, make sure you have the following:

- A CSV file containing data that needs to be imported into PostgreSQL.

- A table in PostgreSQL with a well-defined structure to store the CSV file data.

In this article, the sample CSV file contains the following data:

    Employee ID,First Name,Last Name,Date of Birth,City
    1,Max,Smith,2002-02-03,Sydney
    2,Karl,Summers,2004-04-10,Brisbane
    3,Sam,Wilde,2005-02-06,Perth

You can create a table “employees” in PostgreSQL by executing the following command:

    CREATE TABLE employees(
        emp_id SERIAL,
        first_name VARCHAR(50),
        last_name VARCHAR(50),
        dob DATE,
        city VARCHAR(40),
        PRIMARY KEY(emp_id)
    );

After creating the sample CSV file and table, you can import the CSV file into PostgreSQL using any of the following methods:

- Method 1: Using Hevo Data

- Method 2: Using the COPY Command

- Method 3: Using pgAdmin

- Method 4: Using the \copy Command
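With COPY and \copy, a malformed row aborts the whole import by default, so it can help to check the file first. The following is a minimal Python sketch, not part of any PostgreSQL tooling: the function name find_problems and the two constants are hypothetical, and it assumes the five-column layout and ISO dates of the sample file above.

```python
import csv
import io
from datetime import date

# Assumptions for illustration: these mirror the sample employees table.
EXPECTED_COLUMNS = 5
DOB_INDEX = 3  # zero-based position of the date-of-birth column


def find_problems(csv_text):
    """Return a list of human-readable problems found in the CSV text."""
    problems = []
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, None)
    if header is None:
        return ["file is empty"]
    if len(header) != EXPECTED_COLUMNS:
        problems.append(
            f"line 1: expected {EXPECTED_COLUMNS} columns, found {len(header)}"
        )
    for row in reader:
        if not row:
            continue  # csv.reader yields an empty list for a blank line
        if len(row) != EXPECTED_COLUMNS:
            problems.append(
                f"line {reader.line_num}: expected {EXPECTED_COLUMNS} columns, "
                f"found {len(row)}"
            )
            continue
        try:
            date.fromisoformat(row[DOB_INDEX])
        except ValueError:
            problems.append(
                f"line {reader.line_num}: invalid date {row[DOB_INDEX]!r}"
            )
    return problems


SAMPLE = """Employee ID,First Name,Last Name,Date of Birth,City
1,Max,Smith,2002-02-03,Sydney
2,Karl,Summers,2004-04-10,Brisbane
3,Sam,Wilde,2005-02-06,Perth
"""

if __name__ == "__main__":
    for problem in find_problems(SAMPLE):
        print(problem)
```

An empty result means every row has the expected number of fields and a parseable date. It does not guarantee that the database will accept the values, for example if a primary key is duplicated.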

Method 1: Using Hevo

To import a CSV file into PostgreSQL using Hevo, follow the steps given below:

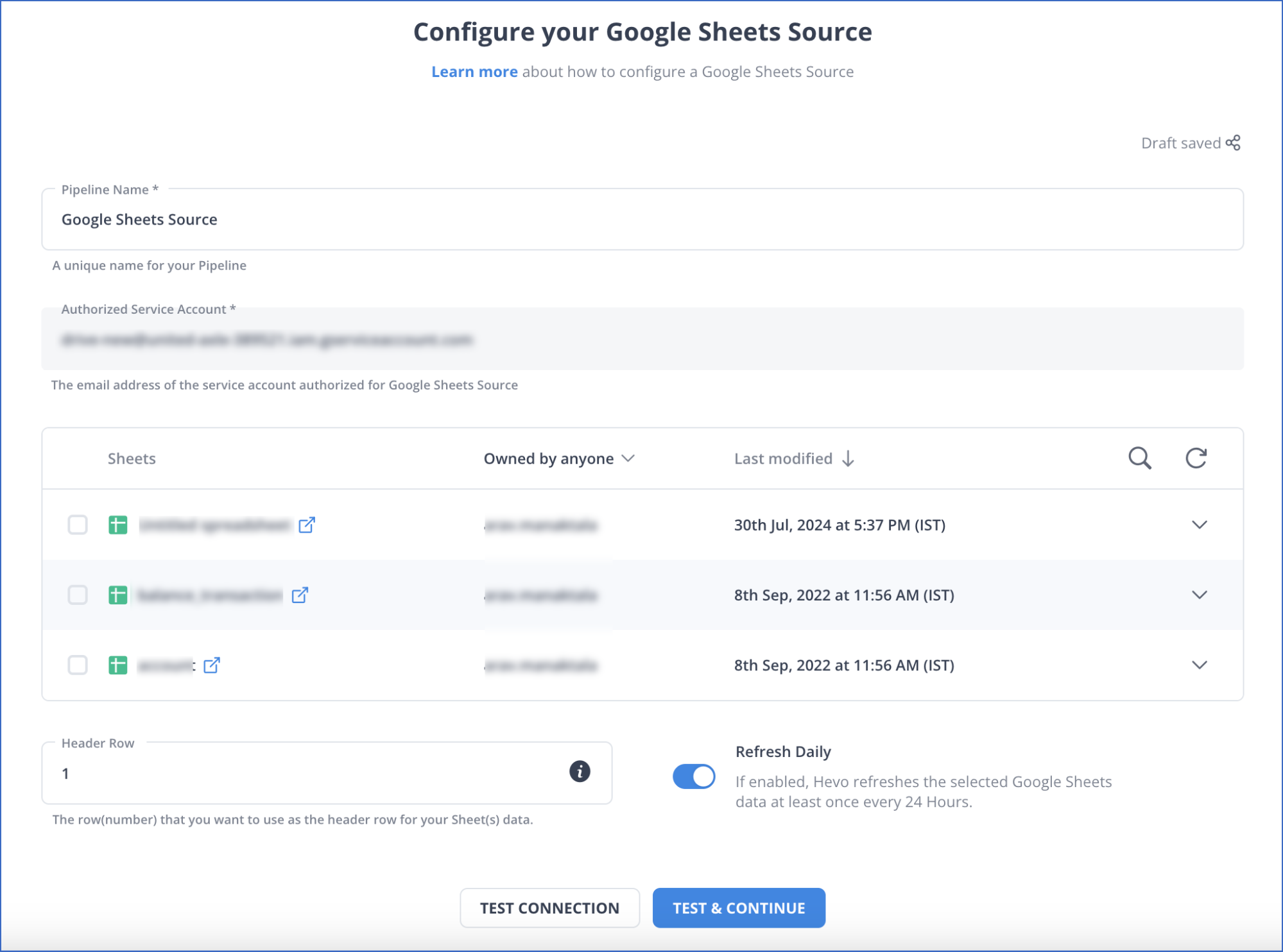

Step 1: Configure Google Sheets as your Source

Step 2: Configure PostgreSQL as your Destination

Method 2: Using the COPY Command

To use the COPY command to import a CSV file from the server’s filesystem, ensure that you have PostgreSQL superuser access.

- Step 1: Run the following command to import the CSV file:

    COPY employees(emp_id,first_name,last_name,dob,city)
    FROM 'C:newdbemployees.csv'
    DELIMITER ','
    CSV HEADER;

Output:

    COPY 3

- Step 2: You can print out the contents of the table to check if the data is entered correctly.

    SELECT * FROM employees;

Output:

    emp_id  first_name  last_name  dob         city
    1       Max         Smith      2002-02-03  Sydney
    2       Karl        Summers    2004-04-10  Brisbane
    3       Sam         Wilde      2005-02-06  Perth

Also Read: How to Export a PostgreSQL Table to a CSV File
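The same COPY mechanism can also be driven from a script by streaming the file from the client with COPY ... FROM STDIN, which avoids the need for server filesystem access. The sketch below is one possible way to do this in Python, assuming the psycopg2 driver, whose cursors provide a copy_expert(sql, file) method. The helper names quote_ident, build_copy_sql, and load_csv are hypothetical.

```python
def quote_ident(name):
    """Double-quote an SQL identifier, doubling any embedded quotes."""
    return '"' + name.replace('"', '""') + '"'


def build_copy_sql(table, columns):
    """Build a COPY ... FROM STDIN statement for a CSV file with a header row."""
    column_list = ", ".join(quote_ident(c) for c in columns)
    return (
        f"COPY {quote_ident(table)} ({column_list}) "
        "FROM STDIN WITH (FORMAT csv, HEADER true)"
    )


def load_csv(cursor, csv_path, table, columns):
    """Stream a local CSV file into the table.

    Assumption: `cursor` is a psycopg2 cursor (or any object with a
    compatible copy_expert(sql, file) method).
    """
    with open(csv_path, newline="", encoding="utf-8") as handle:
        cursor.copy_expert(build_copy_sql(table, columns), handle)


# Example usage (requires psycopg2 and a reachable database, so it is
# left commented out here):
#
#   import psycopg2
#   conn = psycopg2.connect("postgres://postgres:postgres@localhost:5432/postgres")
#   with conn, conn.cursor() as cur:
#       load_csv(cur, "employees.csv", "employees",
#                ["emp_id", "first_name", "last_name", "dob", "city"])
```

Quoting the identifiers makes them case-sensitive, so the names passed in must match the table definition exactly.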

Advantages and Disadvantages of the COPY Command Method

Advantages

- It is one of the quickest and most efficient methods to import large amounts of data from CSV files into PostgreSQL tables.

- The COPY command is easy to script, which makes it straightforward to automate the import.

Disadvantages

- Importing CSV files might require some knowledge of SQL and the PostgreSQL command line.

- Data transformation and validation are limited in this method.
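Because COPY loads values as they appear in the file, any cleanup has to happen before the import. As a rough sketch of such a step, the Python below trims whitespace, drops blank rows, and rewrites dates into the ISO form that the DATE column in the sample table accepts. The function name clean_csv and the DD/MM/YYYY input format are hypothetical, chosen only for illustration.

```python
import csv
import io
from datetime import datetime


def clean_csv(raw_text, date_column=3, source_format="%d/%m/%Y"):
    """Return cleaned CSV text ready for COPY.

    Fields are trimmed, blank rows are dropped, and the date column is
    converted from `source_format` to ISO (YYYY-MM-DD). A row with a
    missing or unparseable date raises an exception rather than being
    silently skipped.
    """
    reader = csv.reader(io.StringIO(raw_text))
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")

    header = next(reader, None)
    if header is None:
        return ""
    writer.writerow([h.strip() for h in header])

    for row in reader:
        fields = [f.strip() for f in row]
        if not any(fields):
            continue  # skip blank or all-empty rows
        parsed = datetime.strptime(fields[date_column], source_format)
        fields[date_column] = parsed.date().isoformat()
        writer.writerow(fields)
    return out.getvalue()
```

The cleaned text can then be written to a file and loaded with the COPY command from Step 1.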

Method 3: Using pgAdmin

pgAdmin is an open-source tool for managing your PostgreSQL database. You can download it from the official pgAdmin website. To import a CSV file with pgAdmin, follow the steps given below:

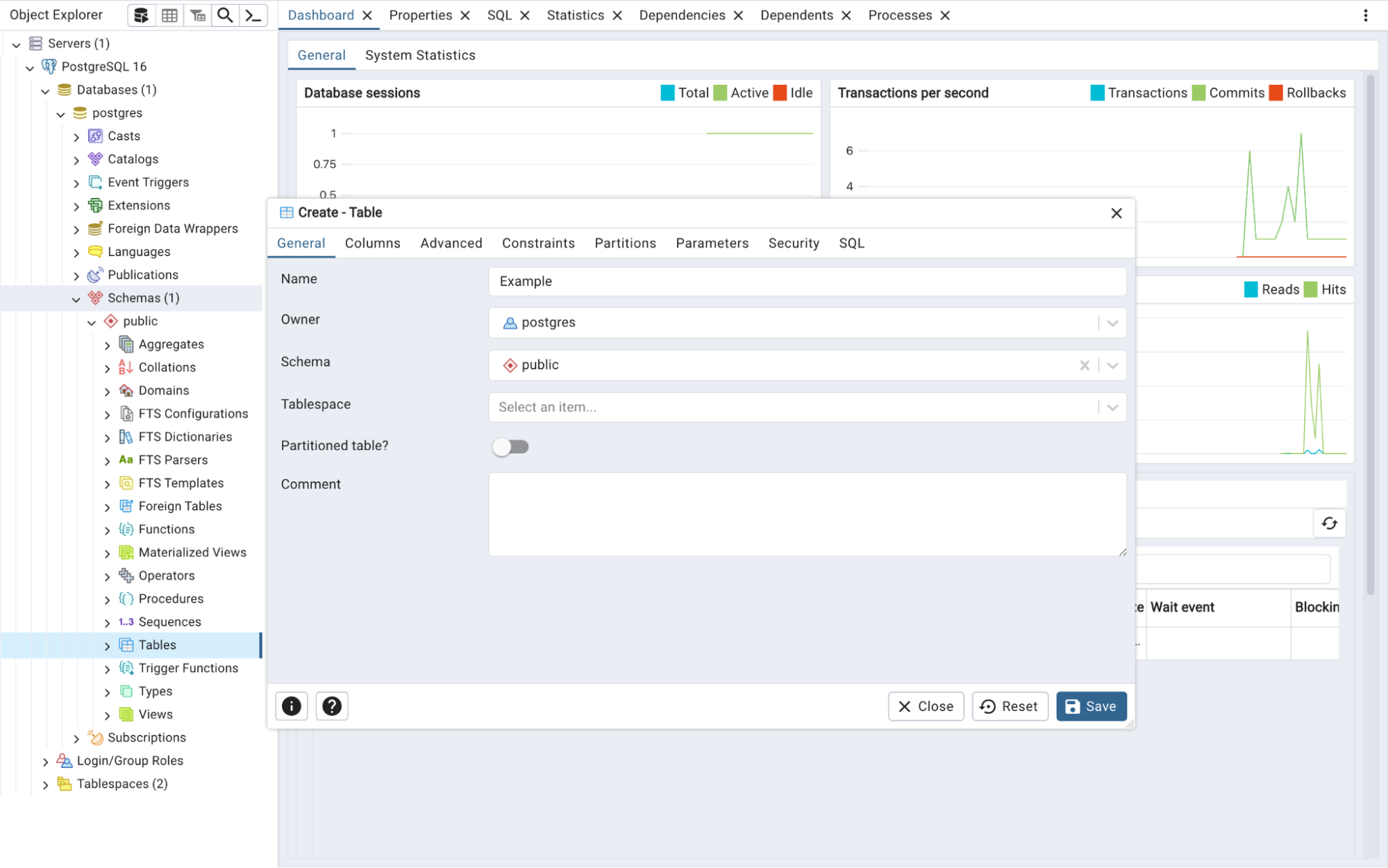

- Step 1: You can create a table directly from the pgAdmin GUI (Graphical User Interface). Open pgAdmin and right-click on the Tables option in the Schemas section of the left side menu.

- Step 2: Hover over the Create option and click on the “Table…” option to open a wizard for creating a new table.

- Step 3: You can now enter the table-specific details such as Table Name, Column Names, etc. Once done, you can click on the Save button to create a new table.

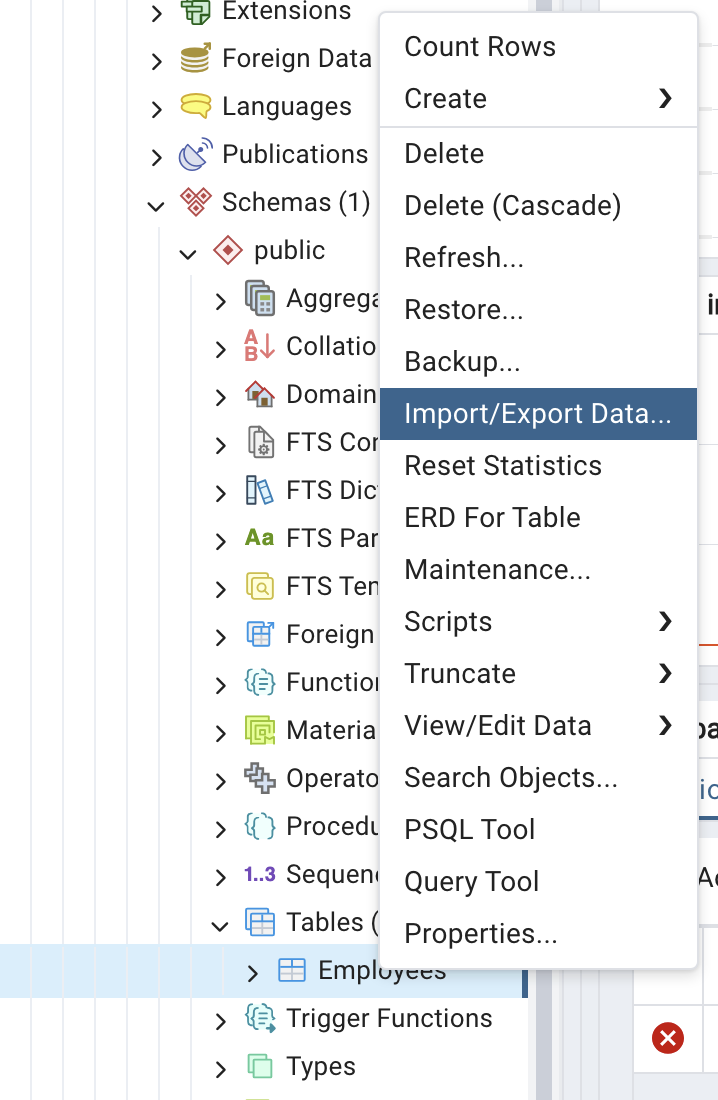

- Step 4: Now, to import the CSV file, go to the “Schemas” section on the left side menu and click on the Tables option.

- Step 5: Navigate to the “employees” table and right-click on it. Click on the Import/Export option to open the Wizard.

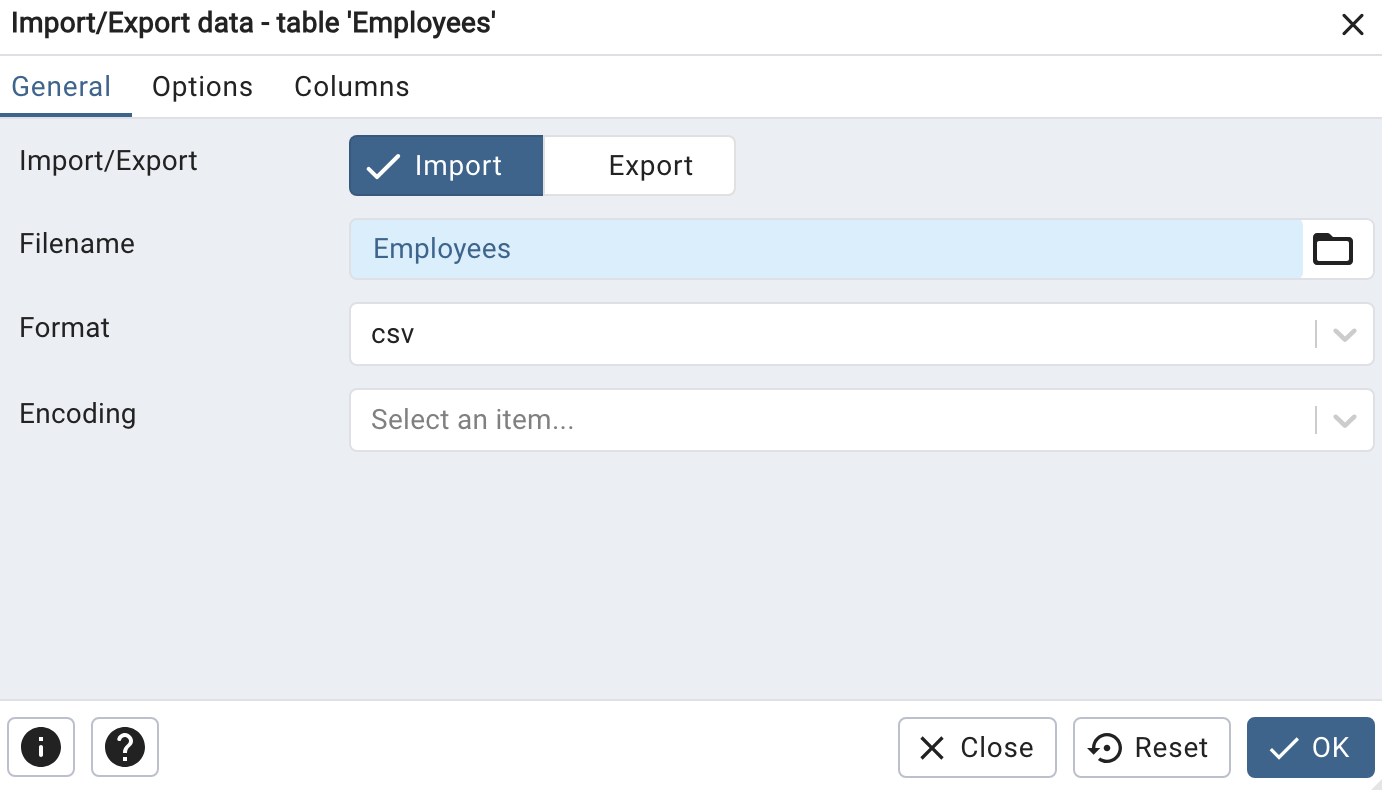

- Step 6: Set the Import/Export toggle to Import. Specify the filename as “employees” and the file format as CSV. Toggle on the Header option and specify “,” as the delimiter for your CSV file. Then switch from the Options tab to the Columns tab to select the columns and the order in which they are imported.

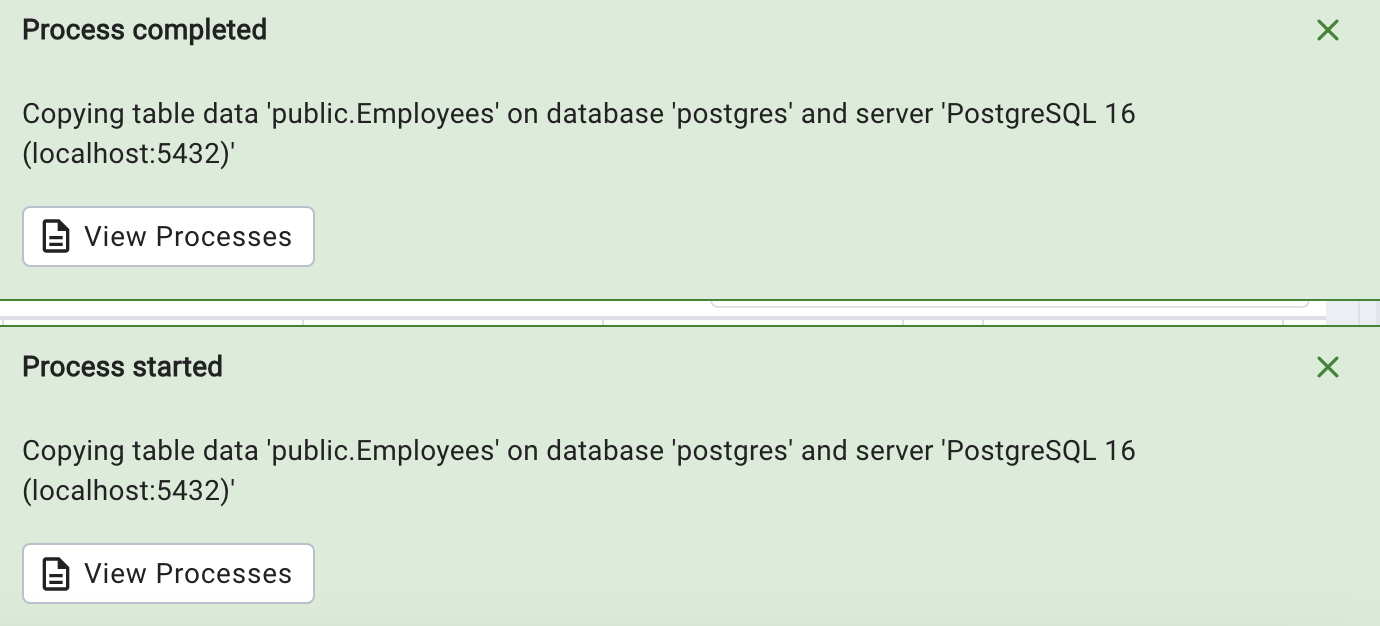

- Step 7: Click on the OK button. A window will pop up on your screen confirming that the CSV import completed successfully.

Advantages and Disadvantages of Using pgAdmin for CSV PostgreSQL Import

Advantages

- The graphical user interface in pgAdmin is easy to use.

- It allows more flexibility for data transformation and validation.

Disadvantages

- It can only import smaller files due to limited features in the pgAdmin interface.

- It is slower than the COPY command when importing large files.

Method 4: Using \copy Command (psql Meta-Command)

The \copy command is useful for importing CSV data when you are working interactively in psql and want to load a file from your local machine or another path that the client can read.

- Step 1: Use psql to connect to your database

    psql postgres://<username>:<password>@<host>:<port>/<database>

    # for example
    psql postgres://postgres:postgres@localhost:5432/postgres

- Step 2: Import the data from the CSV file

Once connected to psql, you can use the \copy command to import the data. Make sure to specify the correct path to your CSV file.

    \copy employees FROM 'employees.csv' WITH CSV HEADER;

- Step 3: Verify the data import

After running the \copy command, you can verify that the data has been imported by querying the table:

    SELECT * FROM employees;

You can exit psql by using the \q command.
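The interactive steps above can also be run in one shot, since psql accepts a single backslash command through its -c option. The following Python sketch wraps that call with the standard subprocess module. The function names psql_copy_args and run_import are hypothetical, and the sketch assumes psql is on the PATH and that neither the table name nor the file path contains single quotes.

```python
import subprocess


def psql_copy_args(conn_uri, table, csv_path):
    """Build the argument list for a one-shot psql import of a CSV file."""
    # Lowercase \copy is psql's client-side copy meta-command.
    meta_command = f"\\copy {table} FROM '{csv_path}' WITH CSV HEADER"
    return ["psql", "-c", meta_command, conn_uri]


def run_import(conn_uri, table, csv_path):
    """Run the import; raises CalledProcessError on a non-zero psql exit status."""
    subprocess.run(psql_copy_args(conn_uri, table, csv_path), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch works
    # without a database.
    print(psql_copy_args(
        "postgres://postgres:postgres@localhost:5432/postgres",
        "employees",
        "employees.csv",
    ))
```

Passing the arguments as a list rather than a shell string avoids a second layer of shell quoting around the file path.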

Advantages and Disadvantages of Using the \copy Command

Advantages

- It is an easy-to-use and straightforward method without the need for any additional scripting or tooling.

- The \copy command quickly transfers large amounts of data from CSV files into your PostgreSQL tables.

Disadvantages

- The \copy command has a relatively limited set of options for data transformation and validation.

- It requires an understanding of SQL and the PostgreSQL command line interface.

What is PostgreSQL?

PostgreSQL is an Open-source Relational Database Management System (RDBMS) that fully supports both SQL (Relational) and JSON (Non-relational) querying.

PostgreSQL is an integral part of the Modern LAPP Stack that consists of Linux, Apache, PostgreSQL, and PHP (or Python and Perl). It acts as a robust back-end database that powers many dynamic websites and web applications. It also supports a wide range of popular programming languages such as Python, Java, C#, C, C++, Ruby, JavaScript, Node.js, Perl, Go & Tcl.

Key Features of PostgreSQL

PostgreSQL has become one of the most sought-after Database Management Systems due to the following eye-catching features:

- High Reliability: Includes features like MVCC, point-in-time recovery, replication, and backups.

- Data Integrity: Maintains accuracy with primary/foreign keys, locks, and constraints.

- Rich Data Types: Supports various types like INTEGER, BOOLEAN, VARCHAR, TIMESTAMP, and more.

- Strong Security: Offers multiple authentication methods, including Lightweight Directory Access Protocol (LDAP), Generic Security Service Application Program Interface (GSSAPI), SCRAM-SHA-256, and Security Support Provider Interface (SSPI), along with advanced access controls.

Why Import a CSV File into PostgreSQL?

Importing CSV files into a PostgreSQL database allows for seamless data integration from various sources, making the data more readily available and accessible for analysis, reporting, and other data-driven applications. PostgreSQL’s advanced features, such as data integrity constraints, transactions, and referential integrity, help ensure the consistency and reliability of the imported data.

Additionally, the database’s scalability, performance, backup and recovery mechanisms, and robust security features make it a superior choice over managing large CSV files directly.

You can explore more about: Migrate Postgres to MySQL

Additional Resources on Import CSV to PostgreSQL

- Move the data from Excel to PostgreSQL

- How to Unload and Load CSV to Redshift

- How to Load Data from CSV to BigQuery

Conclusion

In this post, we looked at four different ways to import CSV files into PostgreSQL. While the manual methods like COPY, \copy, and pgAdmin can get the job done, they often take time and a bit of technical know-how. That’s where Hevo really shines; it simplifies the entire process with an automated, no-code approach.

With Hevo, you can move your CSV data into PostgreSQL in just a few clicks, without writing any code or worrying about errors. It’s fast, reliable, and perfect for teams who want to focus more on insights and less on setup.

Try Hevo and see the magic for yourself. Sign up for a free 14-day trial to streamline your data integration process. You may examine Hevo’s pricing plans and decide on the best plan for your business needs.

FAQ on Importing a CSV File Into a PostgreSQL Table

1. How do I import a CSV file into a database?

The method depends on the database. In PostgreSQL, you can use the COPY command or the \copy command in psql to load large datasets into a table quickly. In SQL Server, the equivalent is the BULK INSERT command, and SQL Server Management Studio provides a native wizard to upload CSV files directly. You can also use third-party ETL tools for automated and scalable data import.

2. How to import a CSV file into a table in pgAdmin?

In pgAdmin, you can import a CSV file into a table by right-clicking the target table, selecting the Import/Export option, choosing the CSV file, and configuring the delimiter, encoding, and other options to match your data.

3. How to add Excel CSV data to PostgreSQL?

To add Excel CSV data to PostgreSQL, first save the Excel file as a CSV, then use pgAdmin’s import feature or execute the COPY command in SQL to load the data into the desired table.