With most companies adopting the cloud as their primary choice for storing data, the need for a powerful and robust cloud data warehouse is on the rise. One of the most popular cloud-based data warehouses that meets these requirements is Google’s BigQuery.

It allows users to store terabytes of data with ease and provides fast query processing along with robust integration support for a diverse set of data analytics and business intelligence tools.

The first step in using a data warehouse such as Google BigQuery is successfully loading your data into Google BigQuery tables. This article provides a step-by-step guide to help you get started with preparing data for BigQuery.

After a complete walkthrough of the content, you will be able to optimise your data source and prepare your data so that the loading process into BigQuery runs smoothly.


Introduction to Google BigQuery

Google BigQuery is Google Cloud Platform’s enterprise data warehouse for analytics. It performs exceptionally well even when analyzing huge amounts of data and quickly meets Big Data processing requirements with offerings such as exabyte-scale storage and petabyte-scale SQL queries. It is a serverless Software as a Service (SaaS) application that supports querying using ANSI SQL and includes machine learning capabilities.

Some key features of Google BigQuery:

- Scalability: Google BigQuery offers true scalability and consistent performance using its massively parallel computing and secure storage engine.

- Data Ingestion Formats: Google BigQuery allows users to load data in various formats such as Avro, CSV, JSON, etc.

- Built-in AI & ML: It supports predictive analysis using its AutoML Tables feature, a codeless interface that helps develop models with best-in-class accuracy. Google BigQuery ML is another feature that supports algorithms such as k-means, logistic regression, etc.

- Parallel Processing: It uses a cloud-based parallel query processing engine that reads data from thousands of disks at the same time.

Prerequisites

- Working knowledge of Google BigQuery.

- A Google BigQuery Account.

Factors to Consider while Preparing Data for BigQuery Data Loads

1. Cost of Loading Data

Google BigQuery’s pricing depends on the amount of data, the type of data, and the geographical location in which the data resides when it is loaded. Google also charges users for their usage of the Storage APIs.

A good practice to follow while working with Google BigQuery is to filter the data you want to insert using a WHERE clause, especially when you’re working with bulk inserts. If you partition and cluster your tables correctly, your queries scan less data and you pay comparatively lower charges.

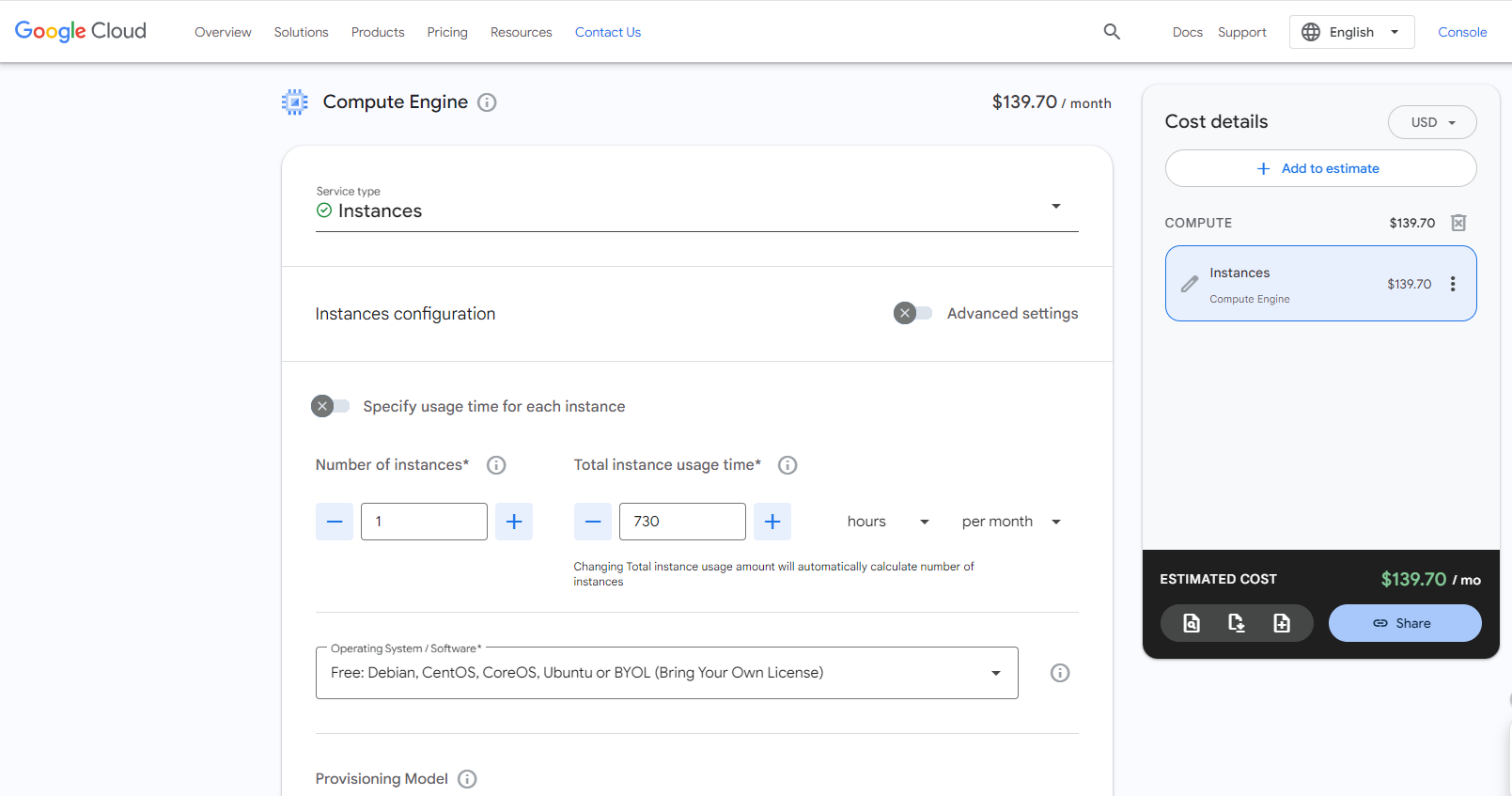

To help users estimate their costs, Google provides the Cloud Pricing Calculator. You can also write code manually, in a way similar to how it’s done in this GitHub project.
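As a rough illustration of estimating costs by hand, the sketch below multiplies the volume of data scanned by a per-TiB price. The function name and the price of 5.0 are placeholders chosen for this example, not Google’s actual rates, so supply the current price for your region from the pricing page or the Cloud Pricing Calculator.

```python
TIB = 1024 ** 4  # number of bytes in one tebibyte


def estimate_scan_cost(bytes_processed, price_per_tib):
    """Estimate the cost of scanning `bytes_processed` bytes.

    `price_per_tib` is supplied by the caller; this sketch does not
    know Google's real prices.
    """
    if bytes_processed < 0 or price_per_tib < 0:
        raise ValueError("bytes_processed and price_per_tib must be non-negative")
    return bytes_processed / TIB * price_per_tib


# Hypothetical comparison: an unfiltered scan of 2 TiB versus a scan that a
# WHERE clause on a partitioned table narrows down to a quarter of a TiB.
full_scan_cost = estimate_scan_cost(2 * TIB, price_per_tib=5.0)
filtered_scan_cost = estimate_scan_cost(TIB // 4, price_per_tib=5.0)
print(f"full scan: {full_scan_cost:.2f}, filtered scan: {filtered_scan_cost:.2f}")
```

The comparison shows why filtering and partitioning matter: the estimate scales linearly with the bytes scanned.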

2. Data Organisation and Formats

To maintain aggregated data records and complex relationships in Google BigQuery, users should avoid joins where possible and instead take advantage of advances in storage and processing power. Google BigQuery works well with denormalised data because it can take advantage of data locality.

When working with hierarchical or relational data in Google BigQuery, it’s better to represent it with nested or repeated fields in your source file, which can be in a format such as JSON, Avro, ORC, etc. This will help you utilise your Google BigQuery storage better.

When working with JSON files, Google BigQuery expects newline-delimited JSON, with one record per line, and you must ensure that your field names and structure match your Google BigQuery tables. Google BigQuery can read uncompressed files such as CSV and JSON in parallel; when these files are compressed, they are read sequentially, which makes loading slower.
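To make the idea of nested, repeated fields in a newline-delimited JSON file concrete, here is a minimal sketch. The customer and order records, and the field names in them, are invented for illustration; the point is only that each customer becomes one line whose "orders" field holds a list of nested records instead of living in a separate table that needs a join.

```python
import json

# Hypothetical normalised source data: customers and their orders.
customers = [
    {"customer_id": 1, "name": "Ada"},
    {"customer_id": 2, "name": "Lin"},
]
orders = [
    {"order_id": 10, "customer_id": 1, "amount": 25.0},
    {"order_id": 11, "customer_id": 1, "amount": 40.0},
    {"order_id": 12, "customer_id": 2, "amount": 15.5},
]


def denormalise(customers, orders):
    """Attach each customer's orders as a repeated nested field."""
    orders_by_customer = {}
    for order in orders:
        nested = {k: v for k, v in order.items() if k != "customer_id"}
        orders_by_customer.setdefault(order["customer_id"], []).append(nested)
    return [
        {**customer, "orders": orders_by_customer.get(customer["customer_id"], [])}
        for customer in customers
    ]


def to_ndjson(records):
    """Serialise records as newline-delimited JSON: one object per line."""
    return "\n".join(json.dumps(record) for record in records) + "\n"


ndjson = to_ndjson(denormalise(customers, orders))
print(ndjson, end="")
```

The resulting text can be written to a file and loaded as newline-delimited JSON, provided the destination table’s schema declares "orders" as a repeated record with matching field names.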

Here are some extra points you must also keep in mind while loading data:

- When you’re loading data from a local data source, you must load files individually.

- Ensure that files from your local datastore contain fewer than 16,000 rows and are smaller than 10 MB.

- With BigQuery you can add new fields to existing tables and also relax existing fields from REQUIRED to NULLABLE.
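The local file limits listed above can be checked before you attempt a load. The following is a small sketch, assuming one row per line (so a header line counts as a row) and taking 10 MB to mean 10 × 1024 × 1024 bytes; the helper name and the sample file are made up for this example.

```python
import os
import tempfile

MAX_ROWS = 16_000             # row limit quoted in the list above
MAX_BYTES = 10 * 1024 * 1024  # size limit quoted in the list above


def check_local_file(path):
    """Return a list of problems; an empty list means the file fits the limits."""
    problems = []
    size = os.path.getsize(path)
    if size >= MAX_BYTES:
        problems.append(f"file is {size} bytes; it must be smaller than {MAX_BYTES}")
    with open(path, "rb") as handle:
        rows = sum(1 for _ in handle)  # counts lines, header included
    if rows >= MAX_ROWS:
        problems.append(f"file has {rows} rows; it must have fewer than {MAX_ROWS}")
    return problems


# Usage with a tiny throwaway CSV file.
with tempfile.NamedTemporaryFile("w", suffix=".csv", delete=False) as tmp:
    tmp.write("id,name\n1,Ada\n2,Lin\n")
sample_problems = check_local_file(tmp.name)
os.remove(tmp.name)
print(sample_problems)
```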

3. Cloud Datastore Data Loads

When working with Google Datastore to load data, you must keep track of the following conditions:

- You can use the projection_fields flag to tell Google BigQuery the properties that you want to load.

- You cannot append data to an existing Google BigQuery table. To add data, you must either create a new table or overwrite the current table.

- You can specify only one Cloud Storage URI when loading data using the Google Datastore exports functionality.

- You cannot use any wildcards when specifying the filename of the export to load.

- Google Datastore requires users to make all necessary data transformations at the source level and then load the data. Google BigQuery will automatically convert the Datastore data types. For example, a Datastore key is converted to a RECORD.
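The conditions in the list above can be captured in a small helper that validates the input and builds the body of a load job for the BigQuery jobs endpoint. This is a sketch rather than an official client: the function name, bucket path, project, dataset and table names are hypothetical, while the keys inside the "load" section follow the load job configuration of the BigQuery REST API. The write disposition is set to overwrite the table because appending is not supported for these loads.

```python
def build_datastore_load_job(source_uris, project_id, dataset_id, table_id,
                             projection_fields=None):
    """Build a load job body for a Datastore export, enforcing the rules above."""
    if len(source_uris) != 1:
        raise ValueError("a Datastore export load accepts exactly one source")
    uri = source_uris[0]
    if "*" in uri:
        raise ValueError("wildcards are not allowed when loading a Datastore export")

    load = {
        "sourceUris": [uri],
        "sourceFormat": "DATASTORE_BACKUP",
        # Overwrite the destination table instead of appending to it.
        "writeDisposition": "WRITE_TRUNCATE",
        "destinationTable": {
            "projectId": project_id,
            "datasetId": dataset_id,
            "tableId": table_id,
        },
    }
    if projection_fields:
        # Only these properties are loaded into the table.
        load["projectionFields"] = list(projection_fields)
    return {"configuration": {"load": load}}


# Hypothetical names used purely for illustration.
job_body = build_datastore_load_job(
    ["gs://example-bucket/exports/Task.export_metadata"],
    project_id="example-project",
    dataset_id="example_dataset",
    table_id="tasks",
    projection_fields=["title", "done"],
)
print(job_body["configuration"]["load"]["sourceFormat"])
```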

4. Firestore Data Loads

Loading data from Google Firestore works similarly to loading data from Google Datastore. You must keep the following in mind while using Google Firestore:

- As with Google Datastore, Google Firestore requires users to make all data transformations at the source level and then load their data.

- Google BigQuery will automatically convert the Firestore data types. For example, date and time values are converted to TIMESTAMP in Google BigQuery.

- You cannot append data to an existing Google BigQuery table or use any wildcards while loading data using Google Firestore.

5. Preparing Data for BigQuery APIs

Google provides users with various BigQuery APIs to help them load their data from a variety of sources with ease. When working with BigQuery APIs to load data, you should keep in mind the following points:

If you want to load data that contains large media files, you must use the “uploadType=resumable” parameter:

POST https://www.googleapis.com/upload/bigquery/v2/projects/YOUR_PROJECT_ID/jobs?uploadType=resumable

This request starts an upload session that lets you transfer the file reliably, at the cost of one additional HTTP request per upload.

Google BigQuery also allows users to upload metadata using the BigQuery API endpoint as follows:

POST /bigquery/v2/projects/projectId/jobs

With these points in mind, you can successfully prepare your data for BigQuery.
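To show what the resumable upload request described in this section might look like in code, the sketch below only constructs the initial session request with Python’s standard library and does not send it. The helper name, the job metadata, the access token placeholder and the file size are assumptions for illustration; the X-Upload-Content-Type and X-Upload-Content-Length headers follow Google’s general resumable upload protocol, so check the BigQuery API documentation before relying on the details.

```python
import json
import urllib.request

UPLOAD_URL = (
    "https://www.googleapis.com/upload/bigquery/v2/projects/"
    "{project_id}/jobs?uploadType=resumable"
)


def build_resumable_session_request(project_id, job_metadata, content_type,
                                    content_length, access_token):
    """Build (but do not send) the request that opens a resumable upload session."""
    body = json.dumps(job_metadata).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {access_token}",
        "Content-Type": "application/json; charset=UTF-8",
        # Describe the file that will be uploaded in the follow-up request.
        "X-Upload-Content-Type": content_type,
        "X-Upload-Content-Length": str(content_length),
    }
    return urllib.request.Request(
        UPLOAD_URL.format(project_id=project_id),
        data=body,
        headers=headers,
        method="POST",
    )


# Hypothetical metadata describing a CSV load into an example table.
metadata = {
    "configuration": {
        "load": {
            "sourceFormat": "CSV",
            "destinationTable": {
                "projectId": "YOUR_PROJECT_ID",
                "datasetId": "example_dataset",
                "tableId": "example_table",
            },
        }
    }
}
request = build_resumable_session_request(
    "YOUR_PROJECT_ID", metadata, "application/octet-stream", 1048576,
    access_token="YOUR_ACCESS_TOKEN",
)
print(request.get_method(), request.full_url)
```

Sending this request with valid credentials would return a session URI to which the file contents are then uploaded; that second step is left out here.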

Some Good Practices to follow while working with Google BigQuery

- If you face a 500/503/50x error while uploading large data files, you can use the exponential backoff strategy to tackle it. This strategy retries failed uploads with progressively longer waits between attempts. For example, if the data upload fails on the first try with a 50x error, you can retry after 3 seconds + random_number_milliseconds, and wait longer before each subsequent retry.

- When loading data into Google BigQuery, you must keep your dataset in the same multi-regional location as the Cloud Storage bucket that contains your export files.

- If you get a 404 not found or 410 gone error while loading your data, you must start your data upload from the very beginning to avoid any discrepancies between your source and destination.
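The exponential backoff strategy mentioned in the list above can be sketched as follows. The error class, the upload callable and the default base delay of one second are assumptions made for this example (adjust the base delay to match the wait you want before the first retry); the random component plays the role of random_number_milliseconds.

```python
import random
import time


class TransientUploadError(Exception):
    """Hypothetical error standing in for a 500/503/50x response."""


def retry_with_backoff(upload, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call `upload` until it succeeds, doubling the wait after each failure."""
    for attempt in range(max_attempts):
        try:
            return upload()
        except TransientUploadError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # base_delay * 2^attempt seconds, plus up to one second of jitter.
            delay = base_delay * (2 ** attempt) + random.random()
            sleep(delay)


# Usage with a fake upload that fails twice before succeeding. The delays are
# recorded instead of slept through so the example finishes immediately.
attempts = {"count": 0}


def flaky_upload():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientUploadError("503 Service Unavailable")
    return "uploaded"


recorded_delays = []
result = retry_with_backoff(flaky_upload, sleep=recorded_delays.append)
print(result, len(recorded_delays))
```

The jitter keeps many clients that failed at the same moment from retrying in lockstep. A 404 or 410 response should not be retried this way, since those call for restarting the upload from the beginning.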


Conclusion

This article helps you get started with Preparing Data for BigQuery. It provides in-depth knowledge about the concepts behind every step to help you understand and implement them efficiently.

Using Google BigQuery to draw crucial insights about your business requires you to bring in data from a diverse set of sources by setting up various ETL pipelines.


Frequently Asked Questions

1. Is BigQuery a SQL database?

BigQuery is not a SQL database in the conventional sense. It is a serverless, cloud-based data warehouse that is optimized for running SQL queries over very large datasets. It is designed for analytical workloads rather than the transactional workloads that conventional SQL databases handle.

2. In which format is data stored in BigQuery?

BigQuery stores data in Google’s Capacitor format, a columnar storage format optimized for high-performance analytics and efficient data compression. This makes it possible to retrieve large chunks of data while keeping costs as low as possible.

3. Is BigQuery OLTP or OLAP?

BigQuery is an OLAP (Online Analytical Processing) system, designed to analyze huge quantities of data and run complex queries. It is not suited to OLTP (Online Transaction Processing) workloads, which consist of numerous frequent, small transactions.