Easily move your data from Azure Postgres to Databricks to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real time.

Most businesses struggle to manage disparate data efficiently and extract insights from it. Azure Postgres offers a robust storage solution but lacks built-in tools for complex analytics tasks, such as building machine learning models. This is where Databricks comes in.

Databricks is a comprehensive platform that offers scalability, advanced data processing tools and functionalities, and a collaborative environment for deriving actionable insights to foster innovation. By integrating Azure Postgres with Databricks, you can gain a competitive advantage and develop cutting-edge solutions.

In this article, you will explore how to transfer Azure Postgres data to Databricks using two methods, along with the benefits and use cases of the integration. Let’s get started!


Why Integrate Azure Postgres to Databricks?

When you copy data from Azure Postgres to Databricks, you can gain several advantages. Let’s examine some of them:

- By integrating Azure Postgres with Databricks, you can perform real-time data analysis. This will help you generate instant insights and proactive actions based on up-to-date data.

- Databricks has distributed computing and parallel processing capabilities. Data migration from Azure Postgres to Databricks enables high performance through faster data processing and efficient resource utilization.

- Databricks seamlessly integrates with robust machine learning frameworks and libraries such as TensorFlow, PyTorch, and more. By migrating Azure Postgres data into Databricks, you can build machine learning models using PostgreSQL data.

- Databricks provides robust data governance and security features, including encryption, authentication, and auditing. By integrating Azure Postgres with Databricks, you can ensure data security and compliance.

Method 1: Integrating Azure Postgres to Databricks Using Hevo

Hevo Data, an Automated Data Pipeline, provides you with a hassle-free solution to connect Azure Postgres to Databricks within minutes with an easy-to-use no-code interface. Hevo is fully managed and completely automates the process of loading data from Azure Postgres to Databricks and enriching the data and transforming it into an analysis-ready form without having to write a single line of code.

Method 2: Export Data from Azure Postgres to Databricks File or Table Using CSV File

This method is more time-consuming and tedious to implement. You have to write custom code for two processes: exporting data from Azure Postgres to Azure Blob Storage as CSV files, and loading those files into Databricks. This method is suitable for users with a technical background.

Overview of Azure Postgres

- Azure Postgres (Azure Database for PostgreSQL) is a managed database service provided by Microsoft on the Azure cloud platform. Because Azure handles database administration tasks, you can focus on application development, such as database schema design, integration, and business logic implementation.

- It offers scalability to adjust compute and storage resources according to your application needs.

- It also provides automated backups and point-in-time restore capabilities that help ensure data protection and disaster recovery.

Overview of Databricks

- Databricks is a unified platform that simplifies big data processing and machine learning tasks. It is built on the lakehouse architecture, which combines data lake and data warehouse capabilities.

- You can collaborate with your team through Databricks’ interactive user workspace, where you can write and execute code using Python, SQL, and Scala.

- It also has a machine learning (ML) platform where you can build, tune, test, and deploy ML models.

How to Connect Azure Postgres to Databricks?

You can load Azure Postgres data into Databricks using either of two methods:

Method 1: Integrating Azure Postgres to Databricks Using Hevo.

Method 2: Export Data from Azure Postgres to Databricks File or Table Using CSV File.

Method 1: Integrating Azure Postgres to Databricks Using Hevo

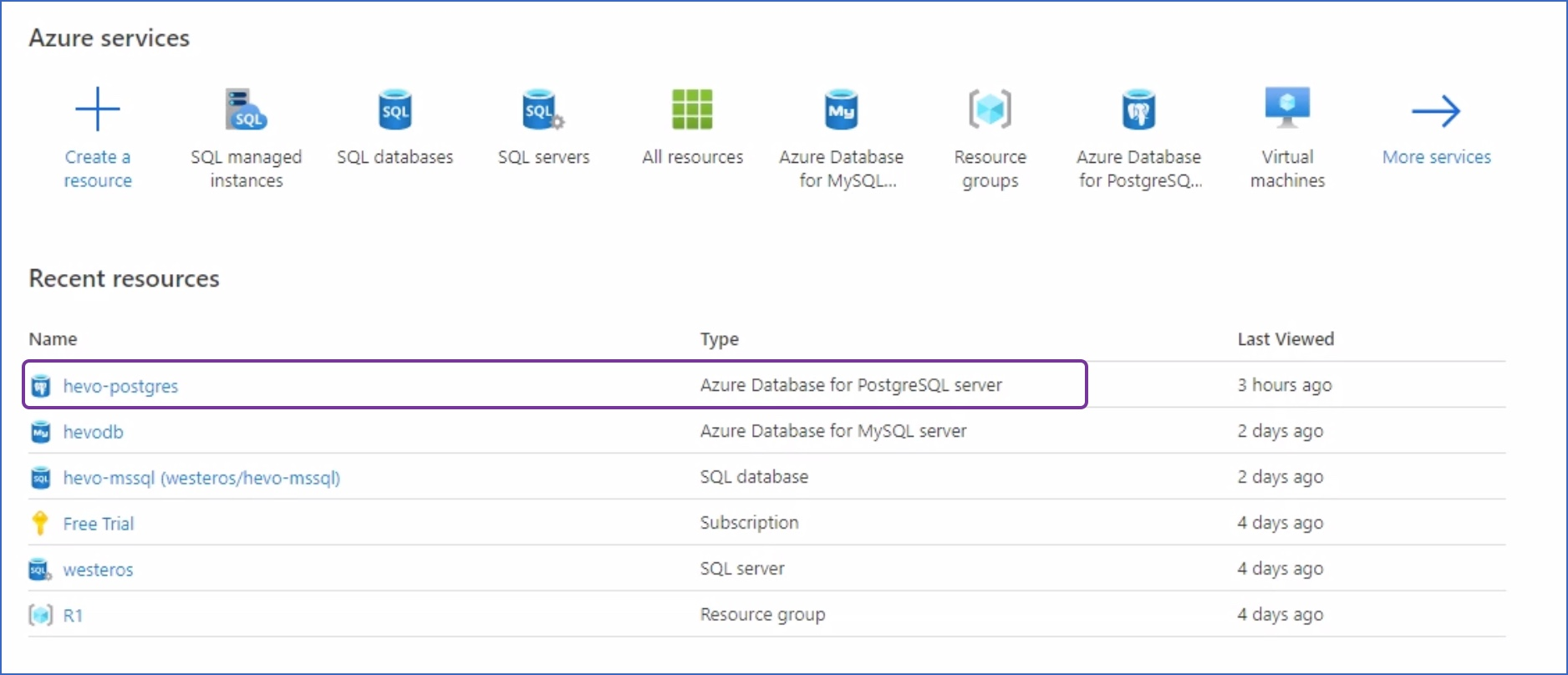

Step 1.1: Configure Azure Postgres as Your Source

Prerequisites

- Your Postgres DB instance’s IP address or hostname should be available.

- The Postgres version you are using should be 9.5 or higher.

- If the pipeline mode is Logical Replication, you should enable log-based incremental replication.

- Whitelist Hevo’s IP addresses to enable the connection between the Postgres database and Hevo.

- Create a database user and grant them Select, Usage, and Connect privileges.

- Your source instance’s database name and port number must be available.

- To create a data pipeline in Hevo, you must be a Team Administrator, Team Collaborator, or Pipeline Administrator.
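The user and version prerequisites above can be prepared with a few SQL statements. The following is a minimal sketch, not Hevo’s documented procedure: it generates GRANT statements for a database user you have already created (for example with `CREATE USER`), and checks the `server_version_num` value against the 9.5 minimum. The user name `hevo_user`, the database `appdb`, and the `public` schema are hypothetical placeholders.

```python
# Sketch only: hevo_user, appdb, and public are hypothetical placeholders.


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def is_supported_version(server_version_num: int) -> bool:
    """Return True for PostgreSQL 9.5 or later.

    server_version_num is the integer reported by SHOW server_version_num,
    for example 90500 for 9.5 and 160002 for 16.2.
    """
    return server_version_num >= 90500


def read_only_grants(user: str, database: str, schema: str = "public") -> list[str]:
    """Build GRANT statements for the CONNECT, USAGE, and SELECT privileges."""
    u, d, s = quote_ident(user), quote_ident(database), quote_ident(schema)
    return [
        f"GRANT CONNECT ON DATABASE {d} TO {u};",
        f"GRANT USAGE ON SCHEMA {s} TO {u};",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {s} TO {u};",
        # Extend SELECT to tables that the role running these statements creates later.
        f"ALTER DEFAULT PRIVILEGES IN SCHEMA {s} GRANT SELECT ON TABLES TO {u};",
    ]


if __name__ == "__main__":
    print(is_supported_version(160002))  # True
    for statement in read_only_grants("hevo_user", "appdb"):
        print(statement)
```

You would run the printed statements as an administrative user in psql or any other SQL client.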

Configure Azure Postgres as Your Source

You can refer to the Hevo documentation to learn more about configuring Azure Postgres as your source.

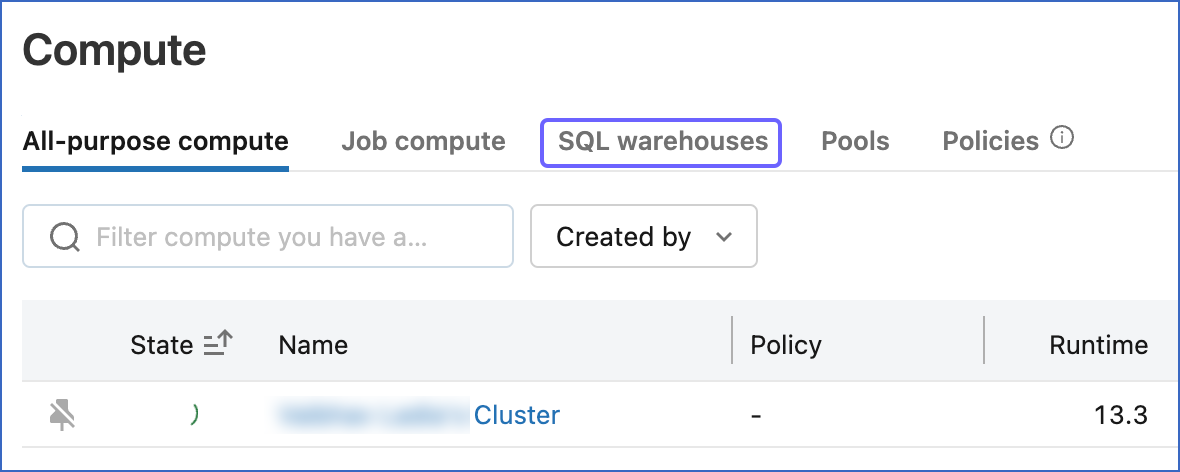

Step 1.2: Set up Databricks as Your Destination

Prerequisites

- An active AWS, GCP, or Azure account should be available.

- Create a Databricks workspace on your cloud service account.

- Enable the IP access list feature on your Databricks workspace and add Hevo’s IP addresses to it so that Hevo can connect.

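- The URL of the Databricks workspace must be available, in the format https://<deployment name>.cloud.databricks.com for workspaces on AWS. Azure Databricks workspace URLs have the form https://adb-<workspace-id>.<random-number>.azuredatabricks.net.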

- To connect to your Databricks workspace, you must meet the following requirements:

  - Create a SQL warehouse or Databricks cluster.

  - The port number, HTTP path, and server hostname must be available.

  - The Personal Access Token must be available.

- To create the destination in the Hevo data pipeline, you must be assigned any administrator role except Billing Administrator.
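Before entering these values in Hevo, it can help to check them. The sketch below parses the workspace URL and bundles the hostname, port, HTTP path, and Personal Access Token into one dictionary. It assumes port 443 (the usual HTTPS port for Databricks SQL connections) and recognizes only the public AWS, Azure, and GCP workspace domains; the helper names, example HTTP path, and token are hypothetical placeholders.

```python
from urllib.parse import urlparse

# Public Databricks workspace domains on AWS, Azure, and GCP (assumed list).
KNOWN_SUFFIXES = (".cloud.databricks.com", ".azuredatabricks.net", ".gcp.databricks.com")


def workspace_hostname(url: str) -> str:
    """Return the hostname of a workspace URL; reject non-HTTPS or unknown hosts."""
    parsed = urlparse(url)
    if parsed.scheme != "https" or not parsed.hostname:
        raise ValueError(f"expected an https:// workspace URL, got {url!r}")
    if not parsed.hostname.endswith(KNOWN_SUFFIXES):
        raise ValueError(f"unrecognized Databricks host: {parsed.hostname}")
    return parsed.hostname


def connection_details(url: str, http_path: str, token: str, port: int = 443) -> dict:
    """Collect the values the Databricks destination form asks for."""
    if not http_path or not token:
        raise ValueError("both the HTTP path and the Personal Access Token are required")
    return {
        "server_hostname": workspace_hostname(url),
        "port": port,
        "http_path": http_path,
        "access_token": token,
    }


if __name__ == "__main__":
    details = connection_details(
        "https://adb-1234567890123456.7.azuredatabricks.net",
        "/sql/1.0/warehouses/abc123",  # placeholder HTTP path
        "dapi-placeholder-token",  # placeholder; never hard-code real tokens
    )
    print(details["server_hostname"])
```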

You can also connect Databricks as your destination using the recommended method, Databricks Partner Connect.

Configure Databricks as Your Destination

For more information, refer to the Hevo documentation on configuring Databricks as the destination.

Let’s look at some of Hevo’s benefits that simplify data integration between Azure Postgres and Databricks.

Benefits of Using Hevo for Azure Postgres to Databricks Integration

- Data Transformation: Hevo Data lets you transform your data for analysis using simple Python-based and drag-and-drop transformations.

- Automated Schema Mapping: Hevo Data automatically maps the destination schema to match the incoming data. It also lets you choose between Full and Incremental Mapping.

- Incremental Data Load: Hevo transfers only modified data in real time, which ensures efficient use of bandwidth on both the source and the destination.

With a versatile set of features, Hevo Data is one of the best tools for moving data from Azure Postgres to Databricks.

Method 2: Export Data from Azure Postgres to Databricks File or Table Using CSV File

In this method, you will learn how to load Azure Postgres data into a Databricks table using CSV files. First, you will export data from the Azure Postgres instance to Azure Blob Storage, which acts as a container for your data. Then, you will load that data into a Databricks table.

Step 2.1: Export Data in CSV Format inside Blob Storage

Prerequisites

- You must have a running Azure Storage account.

- You must have permission to export data from the Postgres DB instance to a blob storage.

- You need to allowlist and install the azure_storage extension in your database.

- When you create a storage account, Azure generates two 512-bit access keys that authorize access to the data in that account. You need one of them to map the account.

- You need to map the storage account in the database using the azure_storage.account_add function.
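Once the extension is allowlisted (through the azure.extensions server parameter, which you set in the Azure portal or CLI rather than in SQL, together with any other server-level settings the Azure documentation requires), the remaining steps are SQL statements. The sketch below generates them, assuming you supply the account name and one of its access keys yourself, for example from a secret store. The helper names and the AZURE_STORAGE_KEY environment variable are hypothetical.

```python
import os


def sql_literal(value: str) -> str:
    """Quote a value as a PostgreSQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def azure_storage_setup(account_name: str, access_key: str) -> list[str]:
    """Statements that install azure_storage and map a storage account to the database."""
    return [
        "CREATE EXTENSION IF NOT EXISTS azure_storage;",
        # account_add registers the account name and one of its two access keys.
        f"SELECT azure_storage.account_add({sql_literal(account_name)}, {sql_literal(access_key)});",
    ]


if __name__ == "__main__":
    # AZURE_STORAGE_KEY is a hypothetical environment variable holding the access key.
    # Note: this prints the key in clear text; use only in a trusted session.
    key = os.environ.get("AZURE_STORAGE_KEY", "<access-key>")
    for statement in azure_storage_setup("mystorageaccount", key):
        print(statement)
```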

You can export data from the Postgres database to Azure Blob Storage in CSV file format using the COPY command.

```sql
COPY <table_name>
TO 'https://mystorageaccount.blob.core.windows.net/mytestblob/employee2.csv'
WITH (FORMAT 'csv');
```
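If you have several tables to export, you can generate one such COPY statement per table. The sketch below reuses the mystorageaccount account and mytestblob container from the example above; the table names and the one-file-per-table naming scheme are hypothetical, and the name check assumes simple lowercase, unquoted identifiers.

```python
import re

# Simple unquoted PostgreSQL identifiers only (assumption for this sketch).
_IDENT = re.compile(r"[a-z_][a-z0-9_]*")


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build an HTTPS URL for a blob in Azure Blob Storage."""
    return f"https://{account}.blob.core.windows.net/{container}/{blob_name}"


def copy_to_blob(table: str, account: str, container: str) -> str:
    """Build a COPY ... TO statement that writes one table to <table>.csv."""
    if not _IDENT.fullmatch(table):
        raise ValueError(f"unsupported table name: {table!r}")
    url = blob_url(account, container, f"{table}.csv")
    return f"COPY {table} TO '{url}' WITH (FORMAT 'csv');"


if __name__ == "__main__":
    for name in ["employee", "department"]:  # hypothetical tables
        print(copy_to_blob(name, "mystorageaccount", "mytestblob"))
```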

Step 2.2: Connect Databricks to Azure Blob Storage

Prerequisites

- You must provide the necessary permissions to Databricks to access the storage account on your Azure blob storage.

- You need permissions on the compute resource to set Spark properties that configure your Azure credentials for Blob Storage access.

- Create a database and a table in Databricks, where you will load the CSV files.

- You must have a Databricks cluster on which to create DataFrames and run the code in this section.

Step 2.2.1: Get the Necessary Credentials to Connect Databricks with Your Blob Container

You can connect Databricks to your blob container using several credential methods, such as storage account access keys, shared access signature (SAS) tokens, or a Microsoft Entra ID service principal with OAuth 2.0.
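As an illustration of one of these options, the sketch below builds the Spark properties for OAuth 2.0 authentication with a Microsoft Entra ID service principal, following the property pattern Databricks documents for the ABFS driver. The application ID, tenant (directory) ID, and client secret are placeholders you would supply, ideally from a secret scope; service_principal_conf and apply_conf are hypothetical helpers, and apply_conf must run where a Spark session exists.

```python
def service_principal_conf(
    account: str, client_id: str, client_secret: str, tenant_id: str
) -> dict[str, str]:
    """Spark properties for OAuth 2.0 (client credentials) access to one storage account."""
    suffix = f"{account}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{suffix}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{suffix}": (
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider"
        ),
        f"fs.azure.account.oauth2.client.id.{suffix}": client_id,
        f"fs.azure.account.oauth2.client.secret.{suffix}": client_secret,
        f"fs.azure.account.oauth2.client.endpoint.{suffix}": (
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token"
        ),
    }


def apply_conf(spark, conf: dict[str, str]) -> None:
    """Set each property on an existing SparkSession (e.g. the notebook's `spark`)."""
    for key, value in conf.items():
        spark.conf.set(key, value)


# In a Databricks notebook (not executed here):
# secret = dbutils.secrets.get(scope="<scope>", key="<service-credential-key>")
# apply_conf(spark, service_principal_conf("<storage-account>", "<application-id>", secret, "<directory-id>"))
```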

Step 2.2.2: Configure Azure Credentials to Access Azure Storage

You can configure Azure credentials to access data in Azure storage using account keys by setting up Spark properties.

```python
# Authenticate to the storage account with an access key kept in a Databricks secret scope
spark.conf.set(
    "fs.azure.account.key.<storage-account>.dfs.core.windows.net",
    dbutils.secrets.get(scope="<scope>", key="<storage-account-access-key>"),
)
```

In the above configuration:

- Replace <storage-account> with the name of the Azure storage account.

- Replace <scope> with your Databricks secret scope name.

- Replace <storage-account-access-key> with the name of the secret that contains the Azure storage account access key.
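An optional guard for this step: the sketch below validates the storage account name against Azure’s naming rule (3 to 24 characters, lowercase letters and digits only) before building the property name, so that a typo fails early rather than surfacing later as an authentication error. account_key_property and set_account_key are hypothetical wrappers; spark and dbutils are the objects Databricks predefines in notebooks.

```python
import re

# Azure storage account names: 3-24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def account_key_property(storage_account: str) -> str:
    """Return the Spark property that holds the account key for the ABFS driver."""
    if not _ACCOUNT_NAME.fullmatch(storage_account):
        raise ValueError(f"invalid storage account name: {storage_account!r}")
    return f"fs.azure.account.key.{storage_account}.dfs.core.windows.net"


def set_account_key(spark, dbutils, storage_account: str, scope: str, key_name: str) -> None:
    """Read the access key from a secret scope and set it on the Spark session."""
    spark.conf.set(
        account_key_property(storage_account),
        dbutils.secrets.get(scope=scope, key=key_name),
    )
```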

Step 2.2.3: Access Azure Storage Data

After configuring credentials, you can access resources in the storage account using URIs and load the exported Azure Postgres data into a Databricks table.

Databricks recommends using the ABFS driver (abfss:// URIs) for greater security.
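The abfss URIs in this step follow the pattern abfss://<container>@<storage-account>.dfs.core.windows.net/<path>. The sketch below builds such a URI and a COPY INTO statement with explicit CSV format options. It assumes the exported files have no header row, which matches the Postgres COPY example earlier (CSV output without the HEADER option); the helper names and the analytics.employee table are hypothetical.

```python
def abfss_uri(container: str, storage_account: str, path: str = "") -> str:
    """Build an ABFS driver URI for a path inside a storage container."""
    return f"abfss://{container}@{storage_account}.dfs.core.windows.net/{path.lstrip('/')}"


def copy_into_sql(table: str, source_uri: str, header: bool = False) -> str:
    """Build a Databricks COPY INTO statement for CSV files at source_uri."""
    return (
        f"COPY INTO {table}\n"
        f"FROM '{source_uri}'\n"
        "FILEFORMAT = CSV\n"
        f"FORMAT_OPTIONS ('header' = '{str(header).lower()}', "
        "'inferSchema' = 'true', 'mergeSchema' = 'true')\n"
        "COPY_OPTIONS ('mergeSchema' = 'true')"
    )


if __name__ == "__main__":
    uri = abfss_uri("mytestblob", "mystorageaccount", "/exports/")
    print(uri)
    print(copy_into_sql("analytics.employee", uri))
    # In a Databricks notebook you could run the statement with spark.sql(...).
```

The Databricks COPY INTO documentation also describes creating an empty placeholder table with CREATE TABLE IF NOT EXISTS <table> so that the schema can be inferred on the first load; check it against your runtime version.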

- You can use Python with Apache Spark to read data from Azure Blob Storage through the ABFS driver, and list the directory contents with Databricks Utilities (dbutils).

```python
# The exported files are CSV, so set the format explicitly; spark.read.load() without a
# format uses the session's default data source (Delta on Databricks).
df = spark.read.format("csv").load("abfss://<container-name>@<storage-account-name>.dfs.core.windows.net/<path-to-data>")
dbutils.fs.ls("abfss://<container-name>@<storage-account-name>.dfs.core.windows.net/<path-to-data>")
```

- You can use SQL to load the CSV data from Azure Blob Storage into a Databricks table.

```sql
COPY INTO <database-name>.<table-name>
FROM 'abfss://container@storageAccount.dfs.core.windows.net/path/to/folder'
FILEFORMAT = CSV
COPY_OPTIONS ('mergeSchema' = 'true');
```

Limitations of Method 2: Exporting Data from Azure Postgres to Databricks Using CSV Files

- To view your account access keys, you must be assigned the Owner, Contributor, or Storage Account Key Operator Service Role on the Azure storage account. Without one of these roles, you can’t retrieve the keys needed to access the data in the storage account.

- If you don’t store your credentials in a secret scope in your workspace, you risk a data breach or unauthorized access.

Use Cases of Azure Postgres to Databricks

- You can run targeted marketing campaigns by integrating customer data from Azure Postgres into Databricks and applying machine learning algorithms. These algorithms help you analyze customer data and identify patterns, trends, and insights into purchase history and purchase likelihood.

- You can use Databricks structured streaming to integrate and process real-time transaction data from Azure Postgres to optimize your business operations.

You can also read more about:

- GCP Postgres to Databricks

- AWS Aurora Postgres to Databricks

- PostgreSQL to Databricks

- Amazon S3 to Databricks

Key Takeaways

- Azure Postgres and Databricks are two robust data management and analysis platforms.

- By integrating your data from Azure Postgres to Databricks, you can use Databricks’ advanced analysis capabilities.

- You can integrate your Azure Postgres data with Databricks either by using CSV files or an ELT platform such as Hevo.

- While CSV files offer a straightforward way to transfer data from Azure Postgres to Databricks, they might not provide the scalability and efficiency needed for large-scale data integration.

- On the other hand, Hevo streamlines and automates your data integration by applying necessary transformations using its built-in features to prepare your data for analysis.

Take Hevo’s 14-day free trial to experience a better way to manage your data pipelines. You can also check out the unbeatable pricing, which will help you choose the right plan for your business needs.

FAQs (Frequently Asked Questions)

1. Do you need a separate connector for PostgreSQL to connect to Databricks?

You can connect PostgreSQL to Databricks with a JDBC driver, such as the standard PostgreSQL JDBC driver, instead of a separate connector. Make sure the driver is available in your Databricks runtime and that you build a correct JDBC URL for your database.
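A minimal sketch of the JDBC settings for reading an Azure Postgres table from a Databricks notebook. It assumes the Azure Database for PostgreSQL host naming pattern <server>.postgres.database.azure.com, the default port 5432, and SSL enforced with sslmode=require; the helper names, server, database, table, and credentials are hypothetical placeholders.

```python
def azure_postgres_jdbc_url(server: str, database: str, port: int = 5432) -> str:
    """JDBC URL for an Azure Database for PostgreSQL server, requiring SSL."""
    return (
        f"jdbc:postgresql://{server}.postgres.database.azure.com:{port}/"
        f"{database}?sslmode=require"
    )


def jdbc_read_options(
    server: str, database: str, table: str, user: str, password: str
) -> dict[str, str]:
    """Options for spark.read.format("jdbc") with the PostgreSQL JDBC driver."""
    return {
        "url": azure_postgres_jdbc_url(server, database),
        "dbtable": table,
        "user": user,
        "password": password,
        "driver": "org.postgresql.Driver",
    }


# In a Databricks notebook (not executed here), with the password from a secret scope:
# opts = jdbc_read_options("<server>", "<database>", "public.employee", "<user>",
#                          dbutils.secrets.get(scope="<scope>", key="<pg-password-key>"))
# df = spark.read.format("jdbc").options(**opts).load()
```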

2. How do you update records in Postgres using Spark Databricks?

There are several ways to update records in Postgres from Spark on Databricks:

– You can read the existing table, full outer join it with your dataset, keep the dataset’s values for matching records and the database’s values for the rest, and then overwrite the table with the result.

– For PostgreSQL, you can write each partition with foreachPartition and issue INSERT ... ON CONFLICT statements, so you don’t have to read the entire table.

– You can write your data to a temporary (staging) table and then issue a MERGE statement (PostgreSQL 15 or later) over a JDBC connection to apply the changes to your target table.
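The INSERT ... ON CONFLICT approach can be sketched as follows. upsert_sql builds a parameterized statement (PostgreSQL 9.5 or later), and make_partition_writer returns a function for DataFrame.foreachPartition that writes each partition in one transaction. This assumes the psycopg2 package is installed on the cluster, that the target table has a unique constraint on the key columns, and that the DataFrame’s columns are in the same order as the columns argument; the table, columns, and DSN are hypothetical.

```python
def upsert_sql(table: str, columns: list[str], key_columns: list[str]) -> str:
    """Build an INSERT ... ON CONFLICT statement with psycopg2-style %s placeholders."""
    if not key_columns or not set(key_columns) <= set(columns):
        raise ValueError("key_columns must be a non-empty subset of columns")
    col_list = ", ".join(columns)
    placeholders = ", ".join(["%s"] * len(columns))
    updates = ", ".join(f"{c} = EXCLUDED.{c}" for c in columns if c not in key_columns)
    sql = (
        f"INSERT INTO {table} ({col_list}) VALUES ({placeholders}) "
        f"ON CONFLICT ({', '.join(key_columns)})"
    )
    # If every column is part of the key, there is nothing to update.
    return sql + (f" DO UPDATE SET {updates}" if updates else " DO NOTHING")


def make_partition_writer(dsn: str, sql: str):
    """Return a function that upserts one Spark partition over its own connection."""

    def write_partition(rows) -> None:
        batch = [tuple(row) for row in rows]
        if not batch:
            return
        import psycopg2  # assumed to be installed on every worker

        conn = psycopg2.connect(dsn)
        try:
            # "with conn" commits on success and rolls back on error (it does not close).
            with conn, conn.cursor() as cur:
                cur.executemany(sql, batch)
        finally:
            conn.close()

    return write_partition


# In a Databricks notebook (not executed here):
# sql = upsert_sql("employee", ["id", "name", "salary"], ["id"])
# df.select("id", "name", "salary").foreachPartition(make_partition_writer("<dsn>", sql))
```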

3. How do I migrate from PostgreSQL to Databricks?

To migrate data from PostgreSQL to Databricks, you can use Hevo’s automated integration for a seamless transfer or export the data as a CSV and then import it into Databricks manually.