Unlock the full potential of your Marketo data by integrating it seamlessly with Databricks. With Hevo’s automated pipeline, you can get data flowing effortlessly.

Building an entirely new data connector is difficult, especially when you’re already swamped with monitoring and maintaining your existing custom data pipelines. An ad-hoc Marketo to Databricks request from your marketing team means giving up engineering bandwidth for a manual task. We understand you are pressed for time and need a quick solution. Writing GET API requests and downloading and uploading JSON files by hand becomes tedious if you have to do it regularly. Alternatively, you might use an automated solution.

Well, you’ve landed in the right place! I’ve prepared a simple and straightforward guide to help you replicate data from Marketo to Databricks.


What is Marketo?

- Launched in 2006 and now part of Adobe, Marketo is a popular Software-as-a-Service (SaaS) marketing automation platform.

- Marketo helps businesses automate and measure marketing engagement tasks and workflows.

- Marketo automates tasks such as email marketing, lead management, revenue attribution, account-based marketing, customer-based marketing, and more.


Overview of Databricks

- Databricks is a unified data analytics platform that simplifies big data processing and machine learning. It integrates tightly with Apache Spark, the open-source analytics engine.

- It provides a cloud-based environment that simplifies the data pipeline from ingesting data to analyzing it.

- Principally, Databricks provides collaborative notebooks, automated cluster management, and advanced analytics capabilities that enable data engineers and data scientists to work more collaboratively on big data projects.


Method 1: Using Hevo to Set Up Marketo to Databricks

Hevo speeds up data migration from Marketo to Databricks by automating data workflows and minimizing manual setup. This solution ensures smooth and reliable data transfer, enabling marketers to gain actionable insights from their campaigns in Databricks without complex configurations.

Method 2: Using Custom Code to Move Data from Marketo to Databricks

Custom code for transferring data from Marketo to Databricks offers tailored integration but demands thorough programming skills and regular updates, which can be difficult to manage.

Method 1: Using Hevo to Set Up Marketo to Databricks

Here’s how Hevo Data, a cloud-based ETL tool, makes Marketo to Databricks data replication ridiculously easy:

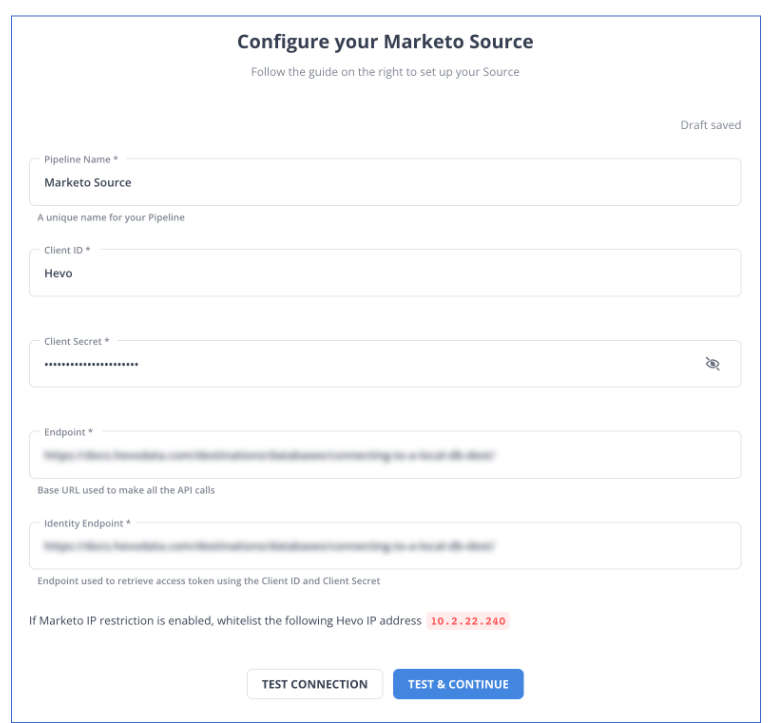

Step 1: Configure Marketo as a Source

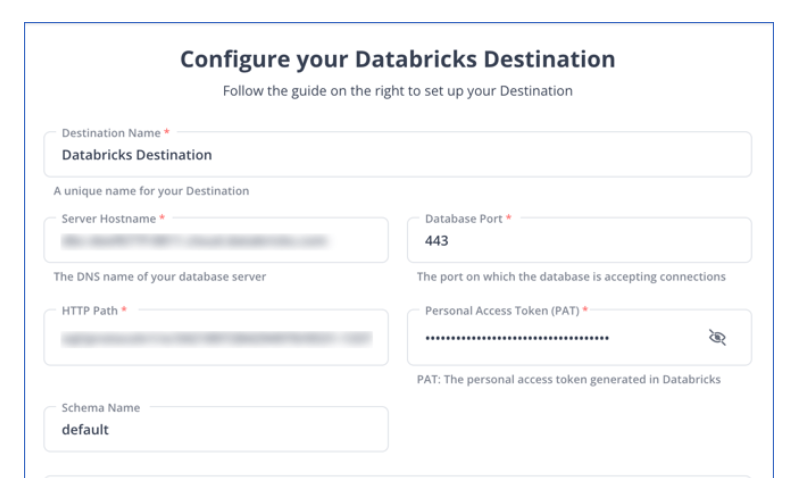

Step 2: Configure Databricks as a Destination

All Done to Set Up Your ETL Pipeline

After implementing the 2 simple steps, Hevo Data will take care of building the pipeline for replicating data from Marketo to Databricks based on the inputs given by you while configuring the source and the destination.

By employing Hevo to simplify your data integration needs, you can leverage its salient features:

- Reliability at Scale: With Hevo Data, you get a world-class fault-tolerant architecture that scales with zero data loss and low latency.

- Monitoring and Observability: Monitor pipeline health with intuitive dashboards that reveal every state of the pipeline and data flow. Bring real-time visibility into your ELT with Alerts and Activity Logs.

- Stay in Total Control: When automation isn’t enough, Hevo Data offers flexibility (data ingestion modes, ingestion and load frequency, JSON parsing, destination workbench, custom schema management, and much more) so that you have total control.

- Auto-Schema Management: Correcting improper schema after the data is loaded into your warehouse is challenging. Hevo Data automatically maps the source schema with the destination warehouse so that you don’t face the pain of schema errors.

- 24×7 Customer Support: With Hevo Data, you get more than just a platform; you get a partner for your pipelines. Discover peace with round-the-clock “Live Chat” within the platform. Moreover, you get 24×7 support even during the 14-day full-featured free trial.

- Transparent Pricing: Say goodbye to complex and hidden pricing models. Hevo Data’s Transparent Pricing brings complete visibility to your ELT spending. Choose a plan based on your business needs. Stay in control with spend alerts and configurable credit limits for unforeseen spikes in the data flow.

Method 2: Using Custom Code to Move Data from Marketo to Databricks

For users who want to export data programmatically, Marketo offers two types of REST APIs.

- Lead Database APIs are used to fetch data from Marketo person records and associated object types such as Opportunities, Companies, etc.

- Asset APIs are used to work with marketing collateral and workflow-related records.

Step 1: Export Data from Marketo

Let’s dive into the steps for exporting data from Marketo using REST APIs.

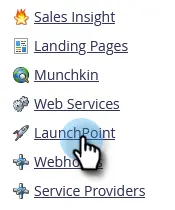

- Visit the Admin panel in Marketo. Then select the “LaunchPoint” button to use the APIs.

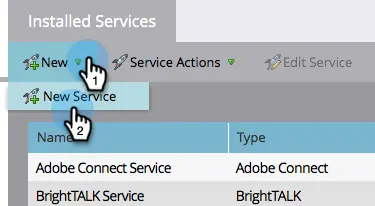

- Now, create a new service to obtain the Client ID and Client Secret, and use them to request an authorization token.

- Now, select the API that corresponds to the data you require.

For example, you could use an API path such as /rest/v1/opportunities.json to get a list of opportunities. To customize the data Marketo returns, you can add optional filters as query parameters.
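The token request itself can be sketched as follows. This is a minimal example that assumes the standard client-credentials call against your instance's identity URL (shown under Admin > Web Services); the instance ID, client ID, and client secret are placeholders, and the helper names are made up for illustration.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_token_url(identity_url, client_id, client_secret):
    """Build the client-credentials token URL for a Marketo identity endpoint."""
    query = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
    })
    return f"{identity_url.rstrip('/')}/oauth/token?{query}"


def parse_token_response(body):
    """Return the access token and its lifetime in seconds from the JSON body."""
    payload = json.loads(body)
    return payload["access_token"], int(payload["expires_in"])


def fetch_access_token(identity_url, client_id, client_secret, opener=urlopen):
    """Request a token; `opener` is injectable so the call can be stubbed."""
    url = build_token_url(identity_url, client_id, client_secret)
    with opener(url, timeout=30) as response:
        return parse_token_response(response.read())


# Example call (placeholder credentials, requires network access):
# token, expires_in = fetch_access_token(
#     "https://<your-instance>.mktorest.com/identity", "CLIENT_ID", "CLIENT_SECRET")
```

Tokens expire, so a longer-running script would need to request a fresh one once the returned lifetime has elapsed.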

The following is an example of a well-formed REST URL in Marketo:

https://284-RPR-133.mktorest.com/rest/v1/lead/318581.json?fields=email,firstName,lastName

The above URL is composed of the following parts:

- Base URL: https://284-RPR-133.mktorest.com/rest

- Path: /v1/lead/

- Resource: 318581.json

- Query parameter: fields=email,firstName,lastName

Using the same format, you can write the REST URL to fetch data from the Opportunities object.
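To make the URL structure concrete, here is a small sketch that assembles a request URL from the four parts listed above. The function name `build_rest_url` is a hypothetical helper, not part of any Marketo SDK.

```python
from urllib.parse import urlencode


def build_rest_url(base_url, path, resource="", fields=None):
    """Join base URL, path, resource, and an optional `fields` query parameter."""
    url = f"{base_url.rstrip('/')}/{path.strip('/')}"
    if resource:
        url = f"{url}/{resource}"
    if fields:
        # Keep commas unescaped so the field list stays readable.
        url = f"{url}?{urlencode({'fields': ','.join(fields)}, safe=',')}"
    return url


lead_url = build_rest_url(
    "https://284-RPR-133.mktorest.com/rest",
    "/v1/lead/",
    "318581.json",
    ["email", "firstName", "lastName"],
)
print(lead_url)

# The same helper covers the Opportunities object.
print(build_rest_url("https://284-RPR-133.mktorest.com/rest", "/v1", "opportunities.json"))
```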

The following GET request paths and resources fetch data from the Opportunities object:

- Get Opportunity Field by Name: /rest/v1/opportunities/schema/fields/{fieldApiName}.json

- Get Opportunity Fields: /rest/v1/opportunities/schema/fields.json

- Get Opportunities: /rest/v1/opportunities.json

- Describe Opportunity: /rest/v1/opportunities/describe.json

- Get Opportunity Roles: /rest/v1/opportunities/roles.json

- Describe Opportunity Role: /rest/v1/opportunities/roles/describe.json

After making a GET API request to fetch data from Marketo, the data will be returned in JSON format. An example of data returned by an Opportunities query is as follows:

    {
        "requestId":"e42b#14272d07d88",
        "success":true,
        "result":[
            {
                "seq":0,
                "marketoGUID":"dff23271-f996-47d7-984f-f2676861b6fa",
                "externalOpportunityId":"19UYA31581L000000",
                "name":"Tables",
                "description":"Tables",
                "amount":"1605.47",
                "source":"Inbound Sales Call/Email"
            },
            {
                "seq":1,
                "marketoGUID":"dff23271-f996-47d7-984f-f2676861b5fc",
                "externalOpportunityId":"29UYA31581L000000",
                "name":"Big Dog Day Care-Phase12",
                "description":"Big Dog Day Care-Phase12",
                "amount":"1604.47",
                "source":"Email"
            }
        ]
    }
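A response like this usually needs a little cleanup before it is loaded anywhere. The sketch below checks the `success` flag, pulls the records out of `result`, and casts `amount` (a string in the sample) to a number. The function name `extract_opportunities` and the choice of fields are assumptions made for illustration.

```python
import json


def extract_opportunities(payload):
    """Return cleaned opportunity rows from a parsed Marketo response."""
    # Failures are reported in the response body, so check `success` first.
    if not payload.get("success"):
        raise ValueError(f"Marketo request failed: {payload.get('errors')}")
    rows = []
    for record in payload.get("result", []):
        rows.append({
            "marketoGUID": record["marketoGUID"].strip(),
            "externalOpportunityId": record["externalOpportunityId"],
            "name": record["name"],
            "amount": float(record["amount"]),  # a string in the sample response
            "source": record["source"],
        })
    return rows


# A shortened copy of the sample response above.
sample = json.loads("""
{
  "requestId": "e42b#14272d07d88",
  "success": true,
  "result": [
    {
      "seq": 0,
      "marketoGUID": "dff23271-f996-47d7-984f-f2676861b6fa",
      "externalOpportunityId": "19UYA31581L000000",
      "name": "Tables",
      "description": "Tables",
      "amount": "1605.47",
      "source": "Inbound Sales Call/Email"
    }
  ]
}
""")

for row in extract_opportunities(sample):
    print(row["name"], row["amount"])
```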

Step 2: Prepare Marketo Data

You’ll need to construct a schema for your data tables if you don’t already have one for storing the data you obtain. Then, for each value in the response, you must identify a predefined datatype (INTEGER, DATETIME, etc.) and create a table to receive it. Marketo’s documentation should list the fields and datatypes available for each endpoint.
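As an illustration, a schema for the opportunity fields shown earlier could be written down as a simple mapping and turned into a CREATE TABLE statement. The column types here are assumptions based on the sample values, not Marketo's official field definitions, and `mytestdb.opportunities` is a placeholder table name.

```python
# Assumed column types for the fields seen in the sample response.
OPPORTUNITY_SCHEMA = {
    "marketoGUID": "STRING",
    "externalOpportunityId": "STRING",
    "name": "STRING",
    "description": "STRING",
    "amount": "DOUBLE",
    "source": "STRING",
}


def create_table_sql(table_name, schema):
    """Render a CREATE TABLE statement from a column-name-to-type mapping."""
    columns = ",\n  ".join(f"{name} {dtype}" for name, dtype in schema.items())
    return f"CREATE TABLE IF NOT EXISTS {table_name} (\n  {columns}\n)"


print(create_table_sql("mytestdb.opportunities", OPPORTUNITY_SCHEMA))
```

Check the types against the describe endpoint for your own instance before relying on them.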

You can also save the API response as a JSON file on your local system:

    import json

    import requests

    # Marketo API settings (replace the placeholders with your own values)
    base_url = "https://<your-instance>.mktorest.com"
    api_path = "/rest/v1/opportunities.json"
    auth_token = "YOUR_AUTH_TOKEN"

    # Set up the headers with authorization
    headers = {"Authorization": f"Bearer {auth_token}"}

    # Make the API request
    response = requests.get(f"{base_url}{api_path}", headers=headers, timeout=30)

    # Check if the request was successful
    if response.status_code == 200:
        data = response.json()
        # Save the JSON response to a local file
        with open("opportunities.json", "w") as f:
            json.dump(data, f, indent=4)
    else:
        print("Error fetching data:", response.status_code, response.text)

Step 3: Import JSON Files into Databricks
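Before uploading, it may help to reshape the saved file. Spark's JSON reader expects one JSON object per line unless its multi-line option is enabled, while the script above writes a single indented document. Here is a minimal sketch that writes each record from `result` on its own line; it assumes the input file is named opportunities.json as above, and the output filename is a hypothetical choice.

```python
import json


def to_json_lines(response_path, output_path):
    """Write each record in the response's `result` array as one JSON line."""
    with open(response_path, encoding="utf-8") as src:
        payload = json.load(src)
    records = payload.get("result", [])
    with open(output_path, "w", encoding="utf-8") as dst:
        for record in records:
            dst.write(json.dumps(record) + "\n")
    return len(records)


# Example call, assuming opportunities.json exists in the working directory:
# to_json_lines("opportunities.json", "opportunities.jsonl")
```

If you prefer to upload the original file unchanged, you can enable multi-line parsing when reading it in Databricks instead.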

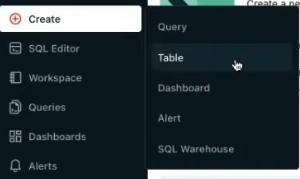

- In the Databricks UI, go to the side navigation bar. Click on the “Data” option.

- Now, you need to click on the “Create Table” option.

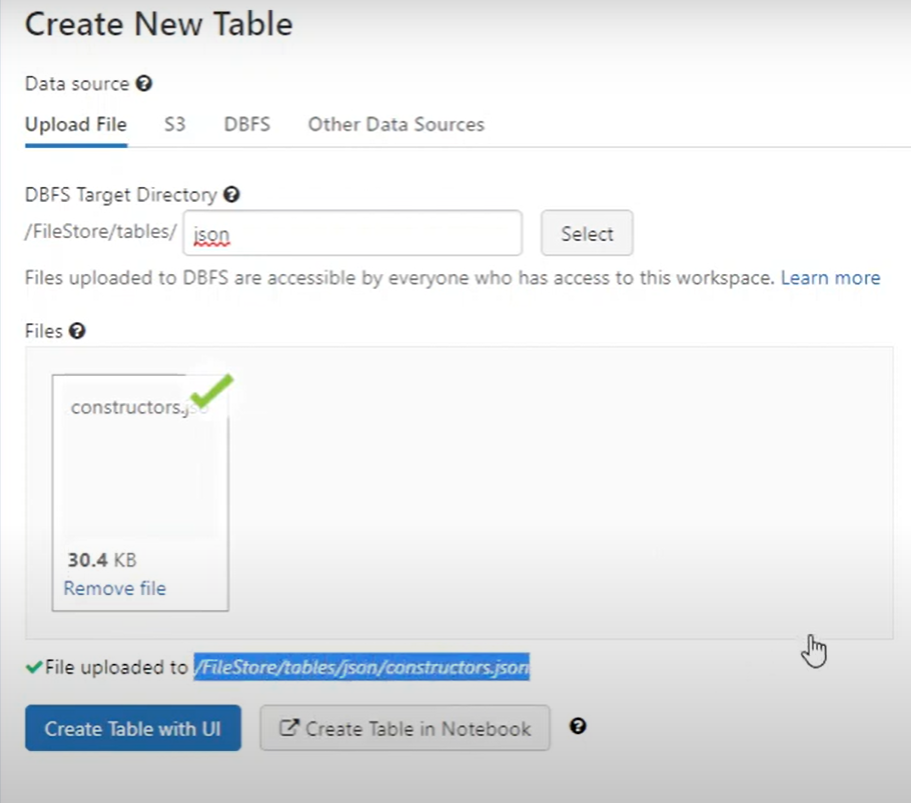

- Then drag the required JSON files to the drop zone. Otherwise, you can browse the files in your local system and then upload them.

Once the JSON files are uploaded, your file path will look like: /FileStore/tables/<fileName>-<integer>.<fileType>

If you click on the “Create Table with UI” button, then follow along:

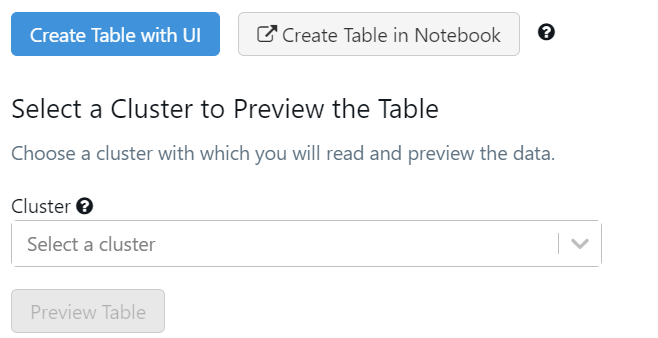

- Then select the cluster where you want to preview your table.

- Click on the “Preview Table” button. Then, specify the table attributes such as table name, database name, file type, etc.

- Then, select the “Create Table” button.

- Now, the database schema and sample data will be displayed on the screen.

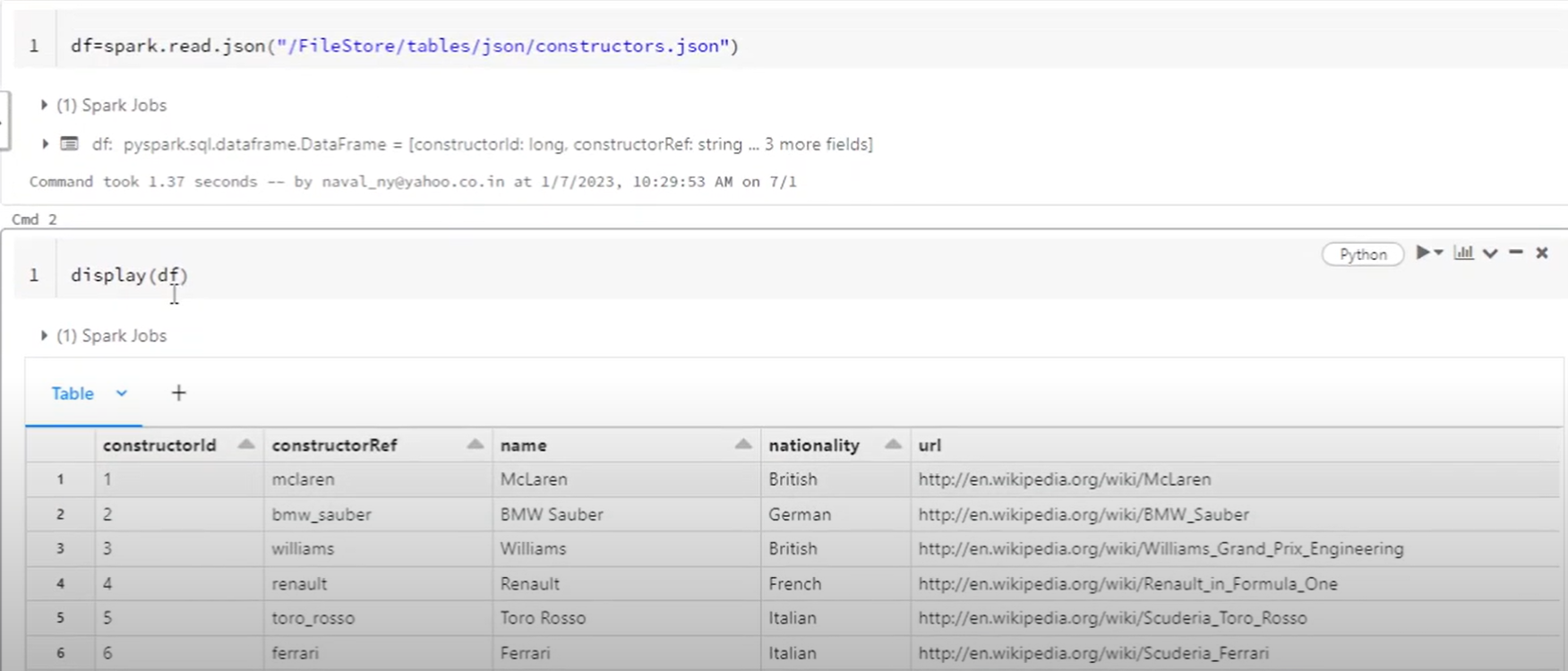

If you click on the “Create Table in Notebook” button, then follow along:

- A Python notebook is created in the selected cluster.

The above picture shows a CSV file being imported to Databricks. However, in this case, it’s a JSON file.

- You can edit the table attributes and format using the necessary Python code. Refer to the image below.

- You can also run SQL queries in the notebook to understand the data frame and its description.

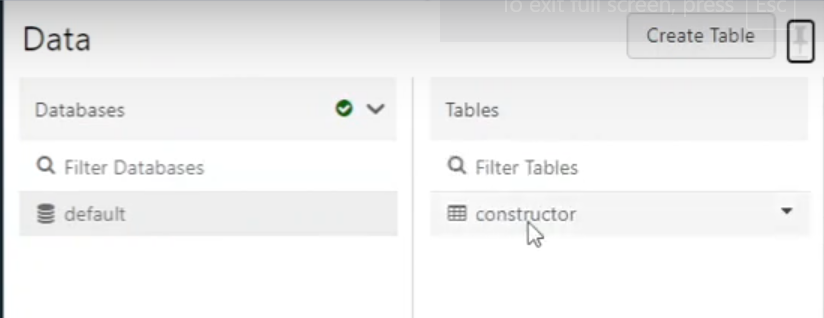

In the image, the table is named “emp_csv.” In our case, however, we can name it “constructor.”

- Now, on top of the Spark data frame, you need to create and save your table in the default database or any other database of your choice.

In the above image, “mytestdb” is a database where we intend to save our table.

- After you save the table, you can click on the “Data” button in the left navigation pane and check whether the table has been saved in the database of your choice.
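The notebook steps above might look roughly like this in PySpark. It is a sketch under a few assumptions: the uploaded file is the unmodified API response (with the records nested under `result`), `load_opportunities` is a hypothetical helper name, and the file path and table name are placeholders you would replace with your own.

```python
def load_opportunities(spark, file_path, table_name):
    """Read a multi-line Marketo response file and save its records as a table."""
    # The saved response is one indented JSON document, so enable multiLine.
    raw = spark.read.option("multiLine", True).json(file_path)
    # Turn each element of the `result` array into a row, one column per field.
    opportunities = raw.selectExpr("explode(result) AS opp").select("opp.*")
    opportunities.write.mode("overwrite").saveAsTable(table_name)
    return opportunities


# In a Databricks notebook, `spark` is already defined, so a call could be:
# df = load_opportunities(spark, "/FileStore/tables/<fileName>-<integer>.json",
#                         "mytestdb.opportunities")
# df.printSchema()
```

The overwrite mode replaces any existing table of the same name; switch to append if you want to keep earlier loads.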

Step 4: Modify & Access the Data

- The data is now uploaded to Databricks. You can access the data via the Import & Explore Data section on the landing page.

- To modify the data, select a cluster and click on the “Preview Table” option.

- Then, change the attributes accordingly and select the “Create Table” option.

With the above 4-step approach, you can easily replicate data from Marketo to Databricks using REST APIs. This method performs exceptionally well in the following scenarios:

- Low-frequency Data Replication: This method is appropriate when your marketing team needs the Marketo data only once in an extended period, i.e., monthly, quarterly, yearly, or just once.

- Dedicated Personnel: If your organization has dedicated staff to manually write GET API requests and download and upload JSON data, then accomplishing this task is not much of a headache.

- Low Volume Data: It can be a tedious task to repeatedly write API requests for different objects and download & upload JSON files. Moreover, merging these JSON files from multiple departments is time-consuming if you are trying to measure the business’s overall performance. Hence, this method is optimal for replicating only a few files.

When the frequency of replicating data from Marketo increases, this process becomes highly monotonous. It adds to your misery when you have to transform the raw data every single time. With the increase in data sources, you would have to spend a significant portion of your engineering bandwidth creating new data connectors. Just imagine — building custom connectors for each source, transforming & processing the data, tracking the data flow individually, and fixing issues. Doesn’t it sound exhausting?

Instead, you should be focusing on more productive tasks. Being relegated to the role of a ‘Big Data Plumber’ who spends most of their time repairing and creating data pipelines might not be the best use of your time.

Advantages of Connecting Marketo to Databricks

Replicating data from Marketo to Databricks can help your data analysts get critical business insights. Does your use case make the list?

- Customers acquired from which channel have the maximum satisfaction ratings?

- How does customer SCR (Sales Close Ratio) vary by Marketing campaign?

- How many orders were completed from a particular Geography?

- How likely is the lead to purchase a product?

- What is the Marketing Behavioural profile of the Product’s Top Users?

You can also read more about:

- Mailchimp to Databricks

- Segment to Databricks

- Google Analytics 4 to Databricks

- Setting up Databricks ETL

Summing It Up

Collecting an API key, sending a GET request through REST APIs, and downloading, transforming, and uploading the JSON data is a smooth enough process when your marketing team requires data from Marketo only once in a while. But what if the marketing team requests data from multiple Marketo objects with numerous filters every once in a while? Going through this process over and over again can be monotonous and would eat up a major portion of your engineering bandwidth. The situation worsens when these requests are for replicating data from multiple sources.

So, would you carry on with this method of manually writing GET API requests every time you get a request from the marketing team? You can stop spending so much time being a ‘Big Data Plumber’ by using an automated ETL solution instead.

An automated ETL solution becomes necessary for real-time data demands such as monitoring email campaign performance or viewing the sales funnel. You can free your engineering bandwidth from these repetitive & resource-intensive tasks by selecting Hevo Data’s 150+ plug-and-play integrations (including 60+ free sources such as Marketo).

Want to take Hevo Data for a spin? Sign Up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs. Share your experience of connecting Marketo to Databricks! Let us know in the comments section below!

FAQs

1. How do I save data to Databricks?

To save data to Databricks, you can write your DataFrame or table using the DataFrame writer in PySpark (for example, df.write.format(...).save(...) or df.write.saveAsTable(...)), specifying your preferred format (e.g., CSV, Parquet) and storage location within your Databricks environment.

2. How do I import data from Excel to Databricks?

To import data from Excel, first convert the file to a CSV or Parquet format, then upload it directly to Databricks or use external storage options. Once uploaded, use Databricks commands to read and analyze the file.

3. What is the maximum data syncing rate between Marketo and Databricks?

The data syncing rate between Marketo and Databricks largely depends on factors like API rate limits, data volume, and network bandwidth. Marketo’s REST API has a rate limit of 100 calls per 20 seconds per instance, meaning large data transfers might require batching and throttling, which can impact overall speed.
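One way to stay under such a limit is a client-side sliding-window throttle. The sketch below is a generic pattern rather than anything provided by Marketo; the class name `SlidingWindowLimiter` is hypothetical, and the defaults simply mirror the 100 calls per 20 seconds figure mentioned above.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Block until a call fits within `max_calls` per `period` seconds."""

    def __init__(self, max_calls=100, period=20.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period
        self._clock = clock
        self._sleep = sleep
        self._calls = deque()  # timestamps of calls inside the current window

    def acquire(self):
        while True:
            now = self._clock()
            # Forget calls that have aged out of the window.
            while self._calls and now - self._calls[0] >= self.period:
                self._calls.popleft()
            if len(self._calls) < self.max_calls:
                self._calls.append(now)
                return
            # Wait until the oldest call leaves the window, then re-check.
            self._sleep(self.period - (now - self._calls[0]))


# Usage: call acquire() before every API request.
# limiter = SlidingWindowLimiter()
# for url in urls_to_fetch:
#     limiter.acquire()
#     ...  # send the request
```

The clock and sleep functions are injectable so the limiter can be exercised without real waiting.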