A Collection of SQL statements is known as a Bigquery script. all the statements can be executed in a single request. Scripts consist of Variables and Control-flow statements and have a larger and better execution capability.

A script that can be invoked from inside a SQL statement is known as a stored procedure. The Big Query Stored Procedure takes arguments as input and returns the output.

This article gives a comprehensive guide on BigQuery Stored Procedure along with examples.

What are SQL Scripts?

Image Source: learn.onemonth.com

Image Source: learn.onemonth.com

The SQL script is a group of SQL (Structured Query Language) commands that are stored together in a text file to perform an operation or task. These tasks are typically repetitive, meaning they have performed over and over again on a regular basis. The commands contained in a script can be compatible with the SQL language. When combined, they are often flexible enough to be used in a variety of related situations. You can use scripts to:

- run multiple queries in sequence with common status.

- Automate administrative tasks like creating or deleting tables.

- Implement more complex logic using programming constructs such as IF and WHILE.

Features of SQL scripts

- Ease of Use: Scripts can be saved and loaded as needed.

- Consistency: If your scripts work right the first time, they will work right every time.

- Minor Bugs: Manually entered commands are prone to human error. Scripts reduce this possibility.

- Scheduling operations: Scripts can be scheduled to run at a convenient time or when no one is present.

Learn more about SQL SCRIPTS.

Hevo Data, a No-code Data Pipeline helps to load data from any data source such as Databases, SaaS applications, Cloud Storage, SDKs, and Streaming Services and simplifies the ETL process. It supports 150+ data sources (including 30+ free data sources) like Asana and is a 3-step process by just selecting the data source, providing valid credentials, and choosing the destination. Hevo not only loads the data onto the desired Data Warehouse/destination but also enriches the data and transforms it into an analysis-ready form without having to write a single line of code.

GET STARTED WITH HEVO FOR FREE[/hevoButton]

Its completely automated pipeline offers data to be delivered in real-time without any loss from source to destination. Its fault-tolerant and scalable architecture ensure that the data is handled in a secure, consistent manner with zero data loss and supports different forms of data. The solutions provided are consistent and work with different BI tools as well.

Check out why Hevo is the Best:

- Secure: Hevo has a fault-tolerant architecture that ensures that the data is handled in a secure, consistent manner with zero data loss.

- Schema Management: Hevo takes away the tedious task of schema management & automatically detects the schema of incoming data and maps it to the destination schema.

- Minimal Learning: Hevo, with its simple and interactive UI, is extremely simple for new customers to work on and perform operations.

- Hevo Is Built To Scale: As the number of sources and the volume of your data grows, Hevo scales horizontally, handling millions of records per minute with very little latency.

- Incremental Data Load: Hevo allows the transfer of data that has been modified in real-time. This ensures efficient utilization of bandwidth on both ends.

- Live Support: The Hevo team is available round the clock to extend exceptional support to its customers through chat, email, and support calls.

- Live Monitoring: Hevo allows you to monitor the data flow and check where your data is at a particular point in time.

SIGN UP HERE FOR A 14-DAY FREE TRIAL

What are Stored Procedures in SQL?

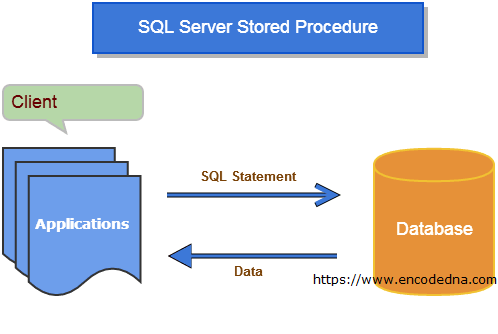

Image Source: www.encodedna.com

Image Source: www.encodedna.com

A group of one or more precompiled SQL statements in a group is known as a Stored Procedure in SQL. The database server considers it as an object. A stored procedure can be considered as a computer language subprogram that is stored in a database. Aspects like name, a parameter list, and a Transact-SQL statement are always present in an SQL stored procedure. Triggers, java, python, PHP, and other procedures can be used to call a Stored procedure. All relational databases support the concept of Stored Procedure.

SQL server stores prepare an execution plan and store it in cache memory, the first time a stored procedure is executed. the plan mapped out by the server ensures that the stored procedure can be executed efficiently.

Features of Stored Procedures in SQL Server

The following are the features of stored procedure in SQL Server:

- Reduced Traffic: The traffic between the server and application is reduced by a larger margin as the application just calls the name of the stored procedure instead of many SQL statements resulting in increased performance.

- Stronger Security: The procedure is usually secure because it manages which processes and activities we will perform. It removes the necessity for permissions to be granted at the database object level and simplifies the safety layers.

- Reusable: Stored procedures are reusable. It reduces code inconsistency, prevents unnecessary rewrites of an equivalent code, and makes the code transparent to all or any applications or users.

- Easy Maintenance: The procedures are easier to take care of without restarting or deploying the appliance.

- Improved Performance: Stored Procedure increases the appliance performance. Once we create the stored procedures and compile them the primary time, it creates an execution plan reused for subsequent executions. The procedure is typically processed quickly because the query processor doesn’t need to create a replacement plan.

Understanding BigQuery Stored Procedures and scripting

Image Source:47billion.com

Image Source:47billion.com

Bigquery allows its users mainly data engineers and data analysts to execute functions using the Bigquery Scripting. These functions range from simple queries to complex queries that include IF and While statements. Variables are also used in the concept of scripting.

BigQuery Stored procedures allow users to save these scripts and run them BigQuery Stored procedures allow users to save lots of these scripts and run them within BigQuery within the future. BigQuery Stored Procedures also can be shared with others within the organization, all while maintaining one canonical version of the BigQuery Stored Procedure.

The following example shows a script that sets a variable, runs an INSERT

The example shows the use of the INSERT statement using a variable and displaying a formatted string as an output.

Example of BigQuery Script vs BigQuery Stored Procedure

DECLARE id STRING;

SET id = GENERATE_UUID();

INSERT INTO mydataset.customers (customer_id)

VALUES(id);

SELECT FORMAT("Created customer ID %s", id);

Bigquery Script as a BigQuery Stored Procedure

CREATE OR REPLACE PROCEDURE mydataset.create_customer()

BEGIN

DECLARE id STRING;

SET id = GENERATE_UUID();

INSERT INTO mydataset.customers (customer_id)

VALUES(id);

SELECT FORMAT("Created customer %s", id);

END

In the preceding example, the name of the BigQuery Stored Procedure is mydataset.create_customer, and the body of the BigQuery Stored Procedure appears between BEGIN and END statements.

To call the procedure, use the CALL statement:

CALL mydataset.create_customer();

Writing a BigQuery Script

Multiple SQL statements separated by semicolons are combined into a Script. A script can comprise only valid SQL statements and scripting statements. Scripts also allow you to declare variables and implement control flow statements. The following example declares a variable and uses the variable inside an IF statement:

DECLARE day INT64;

SET day = (SELECT EXTRACT(DAYOFWEEK from CURRENT_DATE));

if day = 1 or day = 7 THEN

SELECT 'Weekend';

ELSE

SELECT 'Weekday';

END IF

Bigquery executes Scripts using the jobs.insert command. the multistatement script can be run in the same manner as any other script. Child jobs are additional jobs that are created for every statement in the script when it is executed. The jobs.list can be used to enumerate the child job of a script by passing the script’s job ID as the parent.jobID parameter

To get the result of the last SELECT, DDL or DML statements the jobs.getQueryResults method is used. It returns null if none of the mentioned statements are executed. the same command can be used to enumerate child jobs by calling it on each of them.

Multiple statements of SQL under the same source is considered as a script in the exception being the statements with CREATE TEMP FUNCTION followed by queries. The following example is not considered a script by bigquery.

CREATE TEMP FUNCTION Add(x INT64, y INT64) AS (x + y);

SELECT Add(3, 4);

Variables

Variables must be declared either at the beginning of the script or at the beginning of a BEGIN block. Variables declared at the beginning of the script are in scope for the whole script. Variables declared inside a BEGIN block have scope for the block. they are going out of scope after the corresponding END statement. The maximum size of a variable is capped at 1 MB, and maximum of 10MB cap for script.

DECLARE x INT64;

BEGIN

DECLARE y INT64;

-- Here you can reference x and y

END;

-- Here you can reference x, but not y

If a variable and column share the same name, the column takes precedence.

This returns column x + column x:

DECLARE x INT64 DEFAULT 0;

SET x = 10;

WITH Numbers AS (SELECT 50 AS x)

SELECT (x+x) AS result FROM Numbers;

+--------+

| result |

+--------+

| 100 |

+--------+

This returns column y + variable x:

DECLARE x INT64 DEFAULT 0;

SET x = 10;

WITH Numbers AS (SELECT 50 AS y)

SELECT (y+x) AS result FROM Numbers;

+--------+

| result |

+--------+

| 60 |

+--------+

Temporary tables

Temporary tables allow you to save intermediate results to a table. These temporary tables exist at the session level, so you do not got to save or maintain them during a dataset. they’re automatically deleted sometime after the script completes.

The following example creates a temporary table to store the results of a query and uses the temporary table in a subquery:

-- Find the top 100 names from the year 2017.

CREATE TEMP TABLE top_names(name STRING)

AS

SELECT name

FROM `bigquery-public-data`.usa_names.usa_1910_current

WHERE year = 2017

ORDER BY number DESC LIMIT 100

;

-- Which names appear as words in Shakespeare's plays?

SELECT

name AS shakespeare_name

FROM top_names

WHERE name IN (

SELECT word

FROM `bigquery-public-data`.samples.shakespeare

);

Other than the use of TEMP or TEMPORARY the syntax is identical to the CREATE TABLE syntax.

When you create a temporary table, don’t use a project or dataset qualifier in the table name. The table is automatically created in a special dataset.

You can ask a short-lived table by name for the duration of the present script. temporary tables created by a BigQuery Stored Procedure are included within the script. The temporary table is not shareable as it is not visible in any list and table manipulation methods.

The lifespan of a temporary table is about 24 hours after the script is executed. it saved by a random name assigned to it, instead of the name you gave it. BigQuery COnsole > Query History > choose the query that created table. Then Destination Row > Temporary Table. Usage of Temprorany table is free of cost.

You can delete a temporary table explicitly before the script completes by using the DROP TABLE statement:

CREATE TEMP TABLE table1(x INT64);

SELECT * FROM table1; -- Succeeds

DROP TABLE table1;

SELECT * FROM table1; -- Results in an error

When temporary tables are used together with a default dataset, When temporary tables are used along side a default dataset, unqualified table names ask a short lived table if one exists, or a table within the default dataset. The exception is for CREATE TABLE statements, where the target table is taken into account a short lived table if and as long as the TEMP or TEMPORARY keyword is present.

For example, consider the subsequent script:

-- Create table t1 in the default dataset

CREATE TABLE t1 (x INT64);

-- Create temporary table t1.

CREATE TEMP TABLE t1 (x INT64);

-- This statement selects from the temporary table.

SELECT * FROM t1;

-- Drop the temporary table

DROP TABLE t1;

-- Now that the temporary table is dropped, this statement selects from the

-- table in the default dataset.

SELECT * FROM t1;

You can explicitly indicate that you are referring to a temporary table by qualifying the table name with _SESSION:

-- Create a temp table

CREATE TEMP TABLE t1 (x INT64);

-- Create a temp table using the `_SESSION` qualifier

CREATE TEMP TABLE _SESSION.t2 (x INT64);

-- Select from a temporary table using the `_SESSION` qualifier

SELECT * FROM _SESSION.t1;

I

If you use the SESSION qualifier to find the temporary table that is not available, the script returns a error stating the table does not exist.

for instance , if there’s no temporary table named t3, the script throws a mistake albeit a table named t3 exists within the default dataset.

You cannot use _SEYou cannot use _SESSION to make a non-temporary table:

CREATE TABLE _SESSION.t4 (x INT64); -- Fails

Debugging a script

Here are some tips for debugging scripts and BigQuery Stored procedure:

- Use the ASSERT statement to say that a Boolean condition is true.

- Use can use the BEGIN(…)EXCEPTION to catch errors and then display the error message.

- Use SELECT FORMAT(“…”) to point out intermediate results.

- Every statement within the script will produce an output that can be viewed as soon as it is run in Google Cloud Console. The bq command-line tool’s ‘bq query` command also shows the results of every step once you run a script.

- within the Google Cloud Console, you’ll select a private statement inside the query editor and run it.

Writing a Bigquery Stored procedure

To create a BigQuery Stored procedure, use the CREATE PROCEDURE statement. The BEGIN and END are the boundaries of The body of the BigQuery Stored procedure

CREATE PROCEDURE dataset_name.procedure_name

BEGIN

-- statements here

END

Parameters

A BigQuery Stored procedure can take an inventory of named parameters. Each parameter features a data type.

CREATE OR REPLACE PROCEDURE mydataset.create_customer(name STRING)

BEGIN

DECLARE id STRING;

SET id = GENERATE_UUID();

INSERT INTO mydataset.customers (customer_id, name)

VALUES(id, name);

SELECT FORMAT("Created customer %s (%s)", id, name);

END

A BigQuery Stored procedure can have output parameters. An output parameter returns a worth from the BigQuery Stored procedure but doesn’t allow input for the BigQuery Stored procedure. to make an output parameter, use the OUT keyword before the name of the parameter.

For exFor example, this version of the BigQuery Stored procedure returns the new customer ID through the id parameter:

CREATE OR REPLACE PROCEDURE mydataset.create_customer(name STRING, OUT id STRING)

BEGIN

SET id = GENERATE_UUID();

INSERT INTO mydataset.customers (customer_id, name)

VALUES(id, name);

SELECT FORMAT("Created customer %s (%s)", id, name);

END

To call this BigQuery Stored procedure, you want to use a variable to receive the output value:

--- Create a new customer record.

DECLARE id STRING;

CALL mydataset.create_customer("alice",id);

--- Display the record.

SELECT * FROM temp.customers

WHERE customer_id = id;

A BigQuery Stored procedure can also have input/output parameters. An

A BigQuery Stored procedure also can have input/output parameters. An input/output parameter returns a worth from the BigQuery Stored procedure and also accepts input for the BigQuery Stored procedure with parameters. to make an input/output parameter, use the INOUT keyword before the name of the parameter.

Pricing

Pricing

BigQuery charges for the amount of bytes processed during the execution of the script

Conclusion

BigQuery is a trusted data warehouse that a lot of companies use and store data as it provides many benefits but transferring data into it is a hectic task. The Automated data pipeline helps in solving this issue and this is where Hevo comes into the picture. Hevo Data is a No-code Data Pipeline and has awesome 150+ pre-built Integrations that you can choose from.

visit our website to explore hevo[/hevoButton]

Hevo can help you Integrate your data from numerous sources and load them into a destination to Analyze real-time data with a BI tool such as Tableau. It will make your life easier and data migration hassle-free. It is user-friendly, reliable, and secure.

SIGN UP for a 14-day free trial and see the difference!

Share your experience of learning about BigQuery Stored procedures in the comments section below.

Arsalan is a research analyst at Hevo and a data science enthusiast with over two years of experience in the field. He completed his B.tech in computer science with a specialization in Artificial Intelligence and finds joy in sharing the knowledge acquired with data practitioners. His interest in data analysis and architecture drives him to write nearly a hundred articles on various topics related to the data industry.