Databases and Stream Processing are here to stay, and it seems like their future is bright. While databases like MySQL have greatly helped us in storing data efficiently and creating relationships between hundreds of entities, they are still far from helping businesses solve data problems in real-time.

This is because data in MySQL is passive. It just sits there waiting for a user to take action and turn it into actionable insights (using Business Intelligence Systems). Stream Processing Technologies like Kafka have enabled companies to store, and process, streams of events from databases like MySQL in real-time. These technologies leverage asynchronous communication and next-generation architectures for the transfer of continuous feeds of data.

In this guide, we teach you exactly how to configure MySQL Kafka Connector for your Kafka modern architecture. We explore two methods to enable MySQL to Kafka connection: one using Kafka Confluent Cloud Console and the other using Confluent CLI toolkit.

What Is MySQL?

MySQL is the world’s most popular open-source Relational Database Management System (RDBMS) used by all types of Small and Medium-Size Businesses (SMBs) and large enterprises. MySQL was initially developed, marketed, and supported by MySQL AB, a Swedish company but later got acquired by Sun Microsoft Systems in 2010 (currently known as Oracle Corporation).

Just like any other typical relational database, MySQL can store user/business/customer information in the form of rows and columns in a table. It provides referential integrity between rows and columns of various tables and processes user requests using SQL.

MySQL holds a highly regarded name in businesses working with databases and Cloud-based Data Warehousing solutions. It’s scalable, reliable, and user-friendly. It also works cross-platform which means that users can run MySQL on Linux and Windows, and restore backups from the other platforms.

Business Benefits of Using MySQL

MySQL is popular all over the world and is used by leading tech giants owing to the following reasons:

Easy to Install and Deploy

Businesses can set up and run SQL queries on their data using MySQL in minutes. MySQL enables them to deliver new applications faster than other proprietary databases.

High Speed

It’s a no-brainer that if you are working with large datasets, you wouldn’t want to spend extensive time working with datasets and tables. Unlike other databases, MySQL is comparatively faster and can query information from large datasets faster, helping drive faster business intelligence.

Read more on the top 10 MySQL ETL tools for your business here.

Industry Standards

Whether you are a developer who does rapid software development or a freelancer who seeks to work with databases, MySQL has been in use for over 20 years, and you can be sure of using MySQL as a fully integrated transaction-safe, ACID-compliant database.

Reliability and High Availability

MySQL has a well-established reputation for reliability among its 5 million user base. In addition to reliability, MySQL clusters give your users an unmatched 99.999 percent availability.

Multiple Platform Support

MySQL can be used on 20 platforms including Linux, Solaris, AIX, HP-UX, Windows, and macOS. This provides organizations with complete flexibility in terms of delivering a solution on the platform of their choice.

What Is Apache Kafka?

Apache Kafka is an open-source, Distributed Streaming Platform that allows for the development of Real-time Event-Driven Applications. It enables developers to create applications that consistently produce and consume streams of data records, relying on a Message Broker. This Message Broker relays messages from the “publishers” (systems that transform data into the desired format from Data Producers) to the “subscribers” (systems that manipulate or analyze data in order to find alerts and insights and deliver them to Data Consumers).

Apache Kafka is superfast and maintains a high level of accuracy for all data records. These data records are maintained in order of their occurrence inside “Clusters” that can span multiple Servers or even multiple Data Centers. Apache Kafka replicates these records and partitions them in such a way that allows for a high volume of users to use the application simultaneously.

As a result, Apache Kafka has a fault-tolerant and resilient architecture. Kafka copies the partitions to other Brokers (also known as replicas) from the elected Broker (leader) to ensure robustness. A Broker is a working Server or Node; like a facilitator between Data Producer and Data Consumer Groups. All writes and reads to a Topic are routed through the leader, who organizes the updating of replicas with new data.

Business Benefits of Using Apache Kafka

Low Latency Publish-Subscribe Messaging Service

For huge volumes of data, Apache Kafka has a very low end-to-end latency, up to 10 milliseconds. This means that the time it takes for a data record produced to Kafka to be retrieved by the consumer is quite quick. It is because it decouples the message, allowing the consumer to retrieve it at any moment.

Seamless Messaging and Streaming Functionality

Apache Kafka provides a unique capacity to publish, subscribe, store, and process data records in real time, thanks to its special ability to decouple messages and store them in a highly efficient manner.

With such seamless messaging functionality, dealing with huge volumes of data becomes simple and easy, giving business communications a considerable edge over conventional communication approaches.

Consumer Friendly

Kafka may be used to integrate with a wide range of consumers. The best thing about Kafka is that it may behave or act differently depending on the consumer with whom it connects because each consumer has a varied ability to manage the messages that come out of Kafka. Furthermore, Kafka integrates nicely with consumers written in a wide range of languages.

What Is MySQL Kafka Connector Used For?

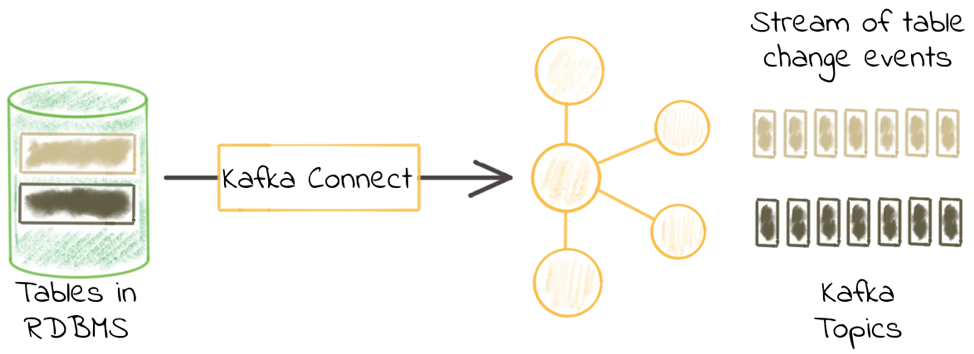

Image Source: Confluent

Image Source: Confluent

MySQL Kafka Connector is a useful tool for streaming data from external databases like MySQL into Kafka Topics. This tool allows you to pull data from MySQL relational database tables and record all resultant row-level changes to your data. The connector is available on the Confluent website and is called the Confluent JDBC MySQL Source Connector. It runs in the cloud.

MySQL Kafka Connector supports multiple data formats like Avro, JSON Schema, Protobuf, or JSON to sync all database events for each database table as separate Kafka Topics. Using MySQL to Kafka Connector, you can transfer important data residing in your MySQL database tables like customer information, and stakeholders data and perform Stream Processing on this data using Kafka’s inbuilt functionalities.

Prerequisites for Setting Up MySQL Kafka Connector

Connecting and configuring your relational tables from MySQL database to Kafka Topics requires the following preconditions:

- You have authorized access to the Confluent Cloud Cluster on Amazon Web Services (AWS), Azure, or Google Cloud Platform (GCP).

- You have installed and configured Confluent CLI for your Confluent Cloud Cluster.

- You have enabled public access to your MySQL database.

- You have enabled schema registry to use schema registry-based formats like Avro, JSON_SR (JSON Schema), or Protobuf.

- You possess Kafka Cluster credentials for creating a new MySQL to Kafka connection.

Set Up MySQL to Kafka Connection Using Confluent Cloud Console

To set up your connection from MySQL database tables to Kafka Topics, you can configure MySQL to Kafka Connector in the Confluent Cloud Console by following the steps below:

Step 1: Launch Confluent Cloud Cluster

Before we ingest the data into Kafka from the MySQL database, we recommend you check that your database has been set up with the data correctly. This step ensures that you have the right data in your MySQL relational tables to be transferred to your Kafka Topics.

For the data residing in MySQL tables, we need a Kafka Topic into which our records will be written.

To create a new Kafka Cluster, visit Add cluster > Create cluster and choose from Basic, Standard, or Dedicated.

To create a new Kafka Topic, hover to the Topics section on the left panel of your Confluent Cloud dashboard, then click on Topics > Create Topics. Here, you can enter a Topic name, and specify the number of partitions.

To change the default settings, like when you wish to set up a cleanup policy for entity data (e.g. customer’s changing phone number or email addresses), you can click on Show advanced settings and specify the same.

Step 2: Add MySQL Kafka Connector

From the left navigation menu, visit the Connectors section and search for MySQL Source connector card.

After clicking on the connector, Confluent will bring you to the MySQL Kafka Connector configuration page. The next steps illustrate what data entries are to be made in the MySQL Kafka Connector.

Step 3: Set Up MySQL to Kafka Connection

Kafka Confluent Cloud will request you to enter the following details on the MySQL Kafka Connector configuration page:

- Name of the connector.

- Kafka Cluster credentials. You can provide these using either the service account resource ID or by entering an API key.

- Topic prefix. MySQL Kafka Connector automatically creates Kafka Topics as: <topic.prefix><tableName>, and tables with the properties like topic.creation.default.partitions=1 and topic.creation.default.replication.factor=4. If you want to create topics with specific settings, please create Kafka Topics before running MySQL Kafka Connector.

- Connection details, which include details for host address, and SSL connection mode.

- Database details, which include timestamp column name to enable timestamp mode and detect new and modified rows, incrementing column name to enable incrementing mode.

- Schema pattern to fetch table metadata from the database.

- Kafka output record value to bring in data from multiple formats like AVRO, JSON_SR (JSON Schema), PROTOBUF, or JSON (schemaless). You must define a valid schema in your schema registry to enable the same.

- Number of tasks to be used by MySQL Kafka Connector.

- Transforms and predicates to support Single Message Transforms (SMTs).

Step 4: Verify and Launch MySQL Kafka Connector

Once you’ve input the required details, you can verify your connection details from the preview page, and then launch your MySQL Kafka Connection.

These connection properties are made available in a JSON file format like this.

Review the JSON configuration against what you have entered in the Confluent Web UI, and click Launch to start your MySQL to Kafka connection.

Just like any other data generator connector, you’ll have to wait a few minutes for it to provision, and once the connection is live, you can check the connector status change from Provisioning to Running under the Connectors tab.

Step 5: Validate Your Kafka Topic

After your MySQL to Kafka Connector is live and running, you can validate your messages that populate from the MySQL database into your Kafka Topics and check if correct data entries are getting synced to your Kafka Topics.

Set Up MySQL to Kafka Connection Using Confluent CLI

You can also use Confluent CLI to set up MySQL to Kafka connection. The steps to take are provided below:

Step 1: Display All Available Connectors

The following command helps you list all the available Kafka Connectors:

confluent connect plugin list

Step 2: Establish the Required Connector Configuration Properties

The following is a generic command that helps you list all the required connection properties for any Kafka Connector.

confluent connect plugin describe <connector-catalog-name>

For MySQL Kafka Connector, the same command changes to:

confluent connect plugin describe MySqlSource

When you execute the script, it will present you with all the properties you need to specify in order to configure your MySQL Kafka Connector.

Following are the required configs:

connector.class

name

kafka.auth.mode

kafka.api.key

kafka.api.secret

topic.prefix

connection.host

connection.port

connection.user

connection.password

db.name

ssl.mode

table.whitelist

timestamp.column.name

output.data.format

tasks.max

Step 3: Create MySQL to Kafka Configuration File

Kafka Confluent is a Java-based platform that takes in information about any connector through JavaScript Object Notation (JSON) files. If you wish to build any connector in Kafka Confluent using Confluent CLI, you’ll have to specify the connector configuration using a JSON file.

Similarly, MySQL Kafka Connector configuration files are JSON files that contain all the required connection properties for creating a connection between MySQL and Kafka Topics. Here’s one example to define MySQL Kafka Connector configuration properties:

{

"name" : "confluent-mysql-source",

"connector.class": "MySqlSource",

"kafka.auth.mode": "Your_Kafka_API_Key",

"kafka.api.key": "<your-kafka-api-key>",

"kafka.api.secret" : "<your-kafka-api-secret>",

"topic.prefix" : "mysql_1",

"connection.host" : "<your-database-endpoint>",

"connection.port" : "3306",

"connection.user" : "<your-database-username>",

"connection.password": "<your-database-password>",

"ssl.mode": "prefer",

"db.name": "mysql-test",

"table.whitelist": "customers",

"timestamp.column.name": "created_at",

"output.data.format": "JSON",

"db.timezone": "UCT",

"tasks.max" : "2"

}

The description of property definitions contained within this code is as follows:

- “name”: Refers to the name of your MySQL Kafka Connector.

- “connector class”: Specifies the connector plugin name.

- “kafka.auth.mode”: Specifies the connector authentication properties. In this property, you can either use SERVICE_ACCOUNT or KAFKA_API_KEY (default option).

- “topic.prefix”: Refers to the prefix to be used while naming Kafka Topics in the format <topic.prefix><tableName>. Similarly, your tables are created with the properties: topic.creation.default.partitions=1 and topic.creation.default.replication.factor=4.

- “ssl mode”: Enables encrypted connection to the MySQL database server.

- “db.name”: Refers to the name of your MySQL database.

- “timestamp.column.name”: Represents a way to read data from MySQL tables. It uses a timestamp column to detect new and modified rows. Use this property with “incrementing.column.name” and you can enable timestamp and incrementing mode for handling updates using a globally unique ID which can be assigned a unique stream offset.

- “schema.pattern”: Fetches table metadata from your MySQL database.

- “output.data.format”: Specifies the preferred output format for Kafka records (data coming from connector). The acceptable formats are AVRO, JSON_SR, PROTOBUF, or JSON.

- “db.timezone”: Determines the database timezone. The default timezone is UTC.

Step 4: Load Configuration File to Create MySQL Kafka Connector

Once you have set up the property definitions for MySQL to Kafka Connector in the required JSON format, you need to load the file into Kafka Confluent Cloud and start your connector.

Here’s the command to do so.

confluent connect create --config <file-name>.json

You can specify your MySQL to Kafka connector file name after –config. Assuming that your file name is “mysql-to-kafka-source”, you can execute the same command as follows:

confluent connect create --config mysql-to-kafka-source.json

Upon successful execution, you’ll receive a confirmation message.

Created connector confluent-mysql-to-kafka-source lcc-zl7ds

Try Similar Integrations:

Integrate REST API to MySQL

Integrate Webhooks to MySQL

Step 5: Check Your Connector Status

Now that we’ve loaded our configuration files and started our connector, we need to validate if our newly configured connector is up and running. You can check the status of your MySQL Kafka Connector by using the command below:

confluent connect list

As discussed previously, this command will list down all the available Kafka connectors which have already been configured in your dashboard.

For our case, you might receive an output like this:

ID | Name | Status | Type

+-----------+---------------------------------+---------+-------+

lcc-zl7ds | confluent-mysql-to-kafka-source | RUNNING | source

And there you have it. You have successfully configured and set up your MySQL to Kafka Connector.

For extensive details on MySQL to Kafka Connector configuration properties, you can visit Kafka Confluent’s official documentation page.

Related:

Conclusion

Transferring data from MySQL source to Kafka using MySQL Kafka Connector is only the beginning when it comes to leveraging Real-time Stream Processing capabilities and exploring insights to drive and deliver success in business operations. In this guide, we discussed two methods to create and configure MySQL to Kafka Connector: one using Confluent Cloud Console and the other using Confluent CLI toolkit.

The real deal for most businesses is how to leverage this data and make sense of it. Although many do know of Business Analytics Systems, but to be able to take advantage of such systems, you require consolidated data, in a single repository like a Data Warehouse. Replicating data from MySQL or Kafka to a Data Warehouse requires the fabrication of complex ETL Pipelines, which themselves require continuous monitoring and routine maintenance.

Luckily, with Hevo, you can build Data Pipelines from MySQL, Kafka, and 150+ Data Sources to a Data Warehouse, but without the need for technical resources or know-how. That’s right! Hevo Pipelines can be set up in minutes, even by non-data professionals.

Visit our Website to Explore Hevo

Hevo connects your applications to Data Warehouses like Amazon Redshift, Snowflake, Google BigQuery, Firebolt, or even Database Destinations like PostgreSQL, MySQL, or MS SQL Server where you can run transformations for analytics, and deliver Operational Intelligence to Business Tools.

FAQ on MySQL Kafka Connector

How to connect Kafka to MySQL?

1. Configure Kafka Connector

2. Install JDBC Connector

3. Configure Connector Properties

4. Start Kafka Connect distributed mode

5. Deploy Connector

How to connect Kafka to SQL Server?

1. Install JDBC Connector

2. Configure Connector Properties

3. Start Kafka Connect distributed mode

4. Deploy the Connector

What is a Kafka connector?

A Kafka connector is a plugin or module that integrates Kafka with external systems, allowing data to flow between Kafka topics and the external systems. It serves as an interface between Kafka and different data sources or sinks, enabling scalable, fault-tolerant, and efficient data pipelines.

Divyansh is a Marketing Research Analyst at Hevo who specializes in data analysis. He is a BITS Pilani Alumnus and has collaborated with thought leaders in the data industry to write articles on diverse data-related topics, such as data integration and infrastructure. The contributions he makes through his content are instrumental in advancing the data industry.